2008:Audio Tag Classification Results

Contents

- 1 Introduction

- 2 Overall Summary Results

- 2.1 Summary Positive Example Accuracy (Average Across All Folds)

- 2.2 Summary Negative Example Accuracy (Average Across All Folds)

- 2.3 Summary Binary relevance F-Measure (Average Across All Folds)

- 2.4 Summary Binary Accuracy (Average Across All Folds)

- 2.5 Summary AUC-ROC Tag (Average Across All Folds)

- 3 Friedman test results

- 4 Beta-Binomial test results

- 5 Assorted Results Files for Download

Introduction

These are the results for the 2008 running of the Audio Tag Classification task. For background information about this task, please refer to the 2008:Audio Tag Classification page.

General Legend

Team ID

LB = L. Barrington, D. Turnbull, G. Lanckriet

BBE1 = T. Bertin-Mahieux, Y. Bengio, D. Eck (KNN)

BBE2 = T. Bertin-Mahieux, Y. Bengio, D. Eck (NNet)

BBE3 = T. Bertin-Mahieux, D. Eck, P. Lamere, Y. Bengio (Thierry/Lamere Boosting)

TB = T. Bertin-Mahieux (dumb/smurf)

ME1 = M. I. Mandel, D. P. W. Ellis 1

ME2 = M. I. Mandel, D. P. W. Ellis 2

ME3 = M. I. Mandel, D. P. W. Ellis 3

GP1 = G. Peeters 1

GP2 = G. Peeters 2

TTKV = K. Trohidis, G. Tsoumakas, G. Kalliris, I. Vlahavas

Overall Summary Results

| Measure | BBE1 | BBE2 | BBE3 | ME1 | ME2 | ME3 | GP1 | GP2 | LB | TB | TTKV |

|---|---|---|---|---|---|---|---|---|---|---|---|

| Average Tag Positive Example Accuracy | 0.05 | 1.00 | 0.85 | 0.67 | 0.68 | 0.66 | 0.04 | 0.03 | 0.28 | 0.91 | 0.03 |

| Average Tag Negative Example Accuracy | 0.99 | 0.00 | 0.37 | 0.71 | 0.73 | 0.73 | 0.98 | 0.98 | 0.94 | 0.09 | 0.97 |

| Average Tag F-Measure | 0.06 | 0.15 | 0.19 | 0.24 | 0.26 | 0.26 | 0.03 | 0.02 | 0.28 | 0.15 | 0.04 |

| Average Tag Accuracy | 0.91 | 0.09 | 0.43 | 0.71 | 0.73 | 0.72 | 0.90 | 0.89 | 0.90 | 0.17 | 0.90 |

| Average AUC-ROC Clip | 0.82 | 0.49 | 0.81 | 0.77 | 0.79 | 0.78 | n/a | n/a | 0.84 | 0.69 | 0.78 |

| Average AUC-ROC Tag | 0.66 | 0.50 | 0.74 | 0.75 | 0.77 | 0.76 | n/a | n/a | 0.77 | 0.50 | 0.50 |

| Overall Beta-Binomial Positive Example Accuracy | 0.01 | 1.00 | 0.89 | 0.68 | 0.69 | 0.67 | 0.00 | 0.00 | 0.26 | 1.00 | 0.00 |

| Overall Beta-Binomial Negative Example Accuracy | 1.00 | 0.00 | 0.35 | 0.72 | 0.74 | 0.73 | 1.00 | 1.00 | 0.95 | 0.00 | 1.00 |

Summary Positive Example Accuracy (Average Across All Folds)

| Tag | Positive examples | Negative examples | BBE1 | BBE2 | BBE3 | ME1 | ME2 | ME3 | GP1 | GP2 | LB | TB | TTKV |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| metal | 35 | 2180 | 0 | 1 | 0.75 | 0.78 | 0.66 | 0.67 | 0 | 0.1 | 0.18 | 0.33 | 0 |

| ambient | 60 | 2155 | 0 | 0.99 | 0.43 | 0.56 | 0.62 | 0.64 | 0 | 0.08 | 0.27 | 1 | 0 |

| fast | 109 | 2106 | 0 | 1 | 0.89 | 0.7 | 0.7 | 0.74 | 0 | 0.02 | 0.22 | 1 | 0 |

| solo | 53 | 2162 | 0 | 1 | 0.98 | 0.61 | 0.59 | 0.59 | 0 | 0 | 0.1 | 1 | 0 |

| jazz | 138 | 2077 | 0.05 | 1 | 0.91 | 0.7 | 0.74 | 0.72 | 0.04 | 0 | 0.4 | 1 | 0.02 |

| instrumental | 102 | 2113 | 0 | 1 | 0.73 | 0.59 | 0.62 | 0.59 | 0.33 | 0.04 | 0.08 | 1 | 0 |

| horns | 40 | 2175 | 0 | 1 | 0.8 | 0.54 | 0.54 | 0.47 | 0 | 0.06 | 0.02 | 0.67 | 0 |

| house | 65 | 2150 | 0 | 1 | 0.87 | 0.82 | 0.77 | 0.74 | 0 | 0.06 | 0.2 | 1 | 0 |

| male | 738 | 1477 | 0.12 | 1 | 1 | 0.71 | 0.72 | 0.72 | 0.01 | 0 | 0.49 | 1 | 0.2 |

| beat | 137 | 2078 | 0 | 1 | 0.8 | 0.74 | 0.78 | 0.74 | 0 | 0.04 | 0.25 | 1 | 0 |

| strings | 55 | 2160 | 0.08 | 1 | 0.85 | 0.71 | 0.73 | 0.78 | 0 | 0 | 0.22 | 1 | 0 |

| saxophone | 68 | 2147 | 0 | 1 | 0.85 | 0.8 | 0.82 | 0.72 | 0 | 0 | 0.33 | 1 | 0 |

| piano | 185 | 2030 | 0.06 | 1 | 0.65 | 0.67 | 0.72 | 0.74 | 0 | 0 | 0.34 | 1 | 0 |

| loud | 37 | 2178 | 0 | 1 | 0.8 | 0.71 | 0.67 | 0.71 | 0 | 0 | 0.17 | 0.67 | 0 |

| noise | 42 | 2173 | 0.26 | 0.97 | 0.71 | 0.59 | 0.51 | 0.55 | 0 | 0 | 0.16 | 0.67 | 0 |

| 80s | 117 | 2098 | 0.01 | 1 | 0.93 | 0.6 | 0.59 | 0.63 | 0 | 0.17 | 0.23 | 1 | 0 |

| pop | 479 | 1736 | 0.1 | 1 | 0.98 | 0.67 | 0.69 | 0.71 | 0.02 | 0 | 0.45 | 1 | 0 |

| female | 345 | 1870 | 0.12 | 1 | 0.95 | 0.75 | 0.72 | 0.73 | 0.01 | 0.02 | 0.44 | 1 | 0 |

| slow | 159 | 2056 | 0 | 1 | 0.97 | 0.64 | 0.69 | 0.68 | 0.08 | 0 | 0.33 | 1 | 0 |

| funk | 37 | 2178 | 0 | 1 | 0.74 | 0.72 | 0.75 | 0.69 | 0 | 0.02 | 0.03 | 0.67 | 0 |

| keyboard | 42 | 2173 | 0 | 1 | 0.91 | 0.45 | 0.54 | 0.46 | 0 | 0 | 0 | 1 | 0 |

| electronica | 166 | 2049 | 0.06 | 1 | 0.94 | 0.68 | 0.72 | 0.68 | 0 | 0.03 | 0.28 | 1 | 0 |

| rock | 664 | 1551 | 0.24 | 1 | 0.98 | 0.75 | 0.75 | 0.73 | 0.05 | 0.01 | 0.69 | 1 | 0.24 |

| vocal | 270 | 1945 | 0 | 1 | 0.94 | 0.61 | 0.63 | 0.6 | 0 | 0 | 0.22 | 1 | 0 |

| acoustic | 38 | 2177 | 0 | 1 | 0.74 | 0.65 | 0.62 | 0.64 | 0 | 0 | 0.18 | 0.33 | 0 |

| hip hop | 152 | 2063 | 0.12 | 1 | 0.9 | 0.83 | 0.86 | 0.82 | 0.23 | 0 | 0.58 | 1 | 0.04 |

| guitar | 849 | 1366 | 0.12 | 1 | 0.99 | 0.72 | 0.72 | 0.73 | 0.15 | 0 | 0.64 | 1 | 0.37 |

| bass | 420 | 1795 | 0 | 1 | 0.95 | 0.5 | 0.53 | 0.54 | 0 | 0.07 | 0.23 | 1 | 0 |

| british | 82 | 2133 | 0.01 | 1 | 0.84 | 0.62 | 0.63 | 0.66 | 0 | 0 | 0.11 | 1 | 0 |

| dance | 324 | 1891 | 0.04 | 1 | 0.99 | 0.73 | 0.75 | 0.72 | 0.04 | 0 | 0.54 | 1 | 0.04 |

| r&b | 38 | 2177 | 0.02 | 1 | 0.56 | 0.6 | 0.65 | 0.7 | 0.02 | 0 | 0.18 | 0.33 | 0 |

| electronic | 485 | 1730 | 0.2 | 1 | 0.97 | 0.68 | 0.7 | 0.69 | 0.18 | 0 | 0.52 | 1 | 0.16 |

| drum | 927 | 1288 | 0.01 | 1 | 1 | 0.61 | 0.59 | 0.57 | 0.35 | 0 | 0.5 | 1 | 0.28 |

| soft | 62 | 2153 | 0 | 1 | 0.92 | 0.74 | 0.81 | 0.69 | 0.09 | 0 | 0.2 | 1 | 0 |

| punk | 51 | 2164 | 0 | 1 | 0.71 | 0.76 | 0.71 | 0.63 | 0 | 0 | 0.3 | 1 | 0 |

| country | 74 | 2141 | 0.18 | 1 | 0.98 | 0.6 | 0.62 | 0.61 | 0.05 | 0 | 0.15 | 1 | 0 |

| drum machine | 89 | 2126 | 0 | 1 | 0.89 | 0.66 | 0.69 | 0.69 | 0 | 0 | 0.21 | 1 | 0 |

| voice | 148 | 2067 | 0 | 1 | 0.97 | 0.53 | 0.59 | 0.52 | 0 | 0.36 | 0.08 | 1 | 0 |

| quiet | 43 | 2172 | 0.16 | 0.86 | 0.55 | 0.63 | 0.75 | 0.7 | 0 | 0.13 | 0.25 | 1 | 0 |

| distortion | 60 | 2155 | 0 | 1 | 0.82 | 0.68 | 0.63 | 0.64 | 0 | 0 | 0.13 | 1 | 0 |

| synth | 480 | 1735 | 0.02 | 1 | 0.97 | 0.68 | 0.63 | 0.62 | 0 | 0 | 0.4 | 1 | 0.02 |

| rap | 157 | 2058 | 0.46 | 1 | 0.68 | 0.87 | 0.88 | 0.85 | 0 | 0 | 0.65 | 1 | 0.05 |

| techno | 248 | 1967 | 0 | 1 | 0.92 | 0.76 | 0.8 | 0.75 | 0 | 0 | 0.5 | 1 | 0.02 |

| organ | 35 | 2180 | 0 | 1 | 0.68 | 0.4 | 0.31 | 0.33 | 0 | 0 | 0 | 0.67 | 0 |

| trumpet | 39 | 2176 | 0 | 1 | 0.81 | 0.63 | 0.69 | 0.58 | 0 | 0 | 0.14 | 0.67 | 0 |

| MEAN | 198.76 | 2016.24 | 0.05 | 1 | 0.85 | 0.67 | 0.68 | 0.66 | 0.04 | 0.03 | 0.28 | 0.91 | 0.03 |

Summary Negative Example Accuracy (Average Across All Folds)

| Tag | Positive examples | Negative examples | BBE1 | BBE2 | BBE3 | ME1 | ME2 | ME3 | GP1 | GP2 | LB | TB | TTKV |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| metal | 35 | 2180 | 1 | 0 | 0.64 | 0.79 | 0.79 | 0.79 | 1 | 0.97 | 0.99 | 0.67 | 1 |

| ambient | 60 | 2155 | 1 | 0 | 0.87 | 0.75 | 0.81 | 0.75 | 1 | 0.97 | 0.98 | 0 | 1 |

| fast | 109 | 2106 | 1 | 0 | 0.41 | 0.68 | 0.69 | 0.7 | 1 | 0.97 | 0.96 | 0 | 1 |

| solo | 53 | 2162 | 1 | 0 | 0.17 | 0.74 | 0.73 | 0.74 | 1 | 1 | 0.98 | 0 | 1 |

| jazz | 138 | 2077 | 0.99 | 0 | 0.47 | 0.85 | 0.87 | 0.87 | 1 | 1 | 0.96 | 0 | 0.98 |

| instrumental | 102 | 2113 | 1 | 0 | 0.37 | 0.63 | 0.65 | 0.64 | 0.67 | 0.95 | 0.96 | 0 | 1 |

| horns | 40 | 2175 | 1 | 0 | 0.31 | 0.66 | 0.67 | 0.64 | 1 | 0.94 | 0.98 | 0.33 | 1 |

| house | 65 | 2150 | 1 | 0 | 0.41 | 0.76 | 0.8 | 0.76 | 1 | 0.96 | 0.98 | 0 | 1 |

| male | 738 | 1477 | 0.91 | 0 | 0.05 | 0.72 | 0.74 | 0.74 | 0.99 | 1 | 0.75 | 0 | 0.8 |

| beat | 137 | 2078 | 1 | 0 | 0.55 | 0.7 | 0.74 | 0.75 | 1 | 0.95 | 0.95 | 0 | 1 |

| strings | 55 | 2160 | 0.97 | 0 | 0.46 | 0.76 | 0.75 | 0.77 | 1 | 1 | 0.98 | 0 | 1 |

| saxophone | 68 | 2147 | 1 | 0 | 0.45 | 0.85 | 0.85 | 0.84 | 1 | 0.99 | 0.98 | 0 | 1 |

| piano | 185 | 2030 | 0.96 | 0 | 0.44 | 0.65 | 0.68 | 0.69 | 1 | 1 | 0.94 | 0 | 1 |

| loud | 37 | 2178 | 1 | 0 | 0.56 | 0.81 | 0.82 | 0.81 | 1 | 1 | 0.99 | 0.33 | 1 |

| noise | 42 | 2173 | 0.95 | 0 | 0.69 | 0.76 | 0.78 | 0.78 | 1 | 0.99 | 0.98 | 0.33 | 1 |

| 80s | 117 | 2098 | 1 | 0 | 0.13 | 0.68 | 0.68 | 0.7 | 1 | 0.92 | 0.96 | 0 | 1 |

| pop | 479 | 1736 | 0.93 | 0 | 0.1 | 0.68 | 0.68 | 0.68 | 0.99 | 1 | 0.85 | 0 | 1 |

| female | 345 | 1870 | 0.93 | 0 | 0.17 | 0.81 | 0.82 | 0.8 | 1 | 0.98 | 0.9 | 0 | 1 |

| slow | 159 | 2056 | 1 | 0 | 0.27 | 0.65 | 0.68 | 0.68 | 0.98 | 1 | 0.95 | 0 | 1 |

| funk | 37 | 2178 | 1 | 0 | 0.43 | 0.71 | 0.71 | 0.7 | 1 | 0.98 | 0.98 | 0.33 | 1 |

| keyboard | 42 | 2173 | 1 | 0 | 0.16 | 0.59 | 0.56 | 0.53 | 1 | 1 | 0.98 | 0 | 1 |

| electronica | 166 | 2049 | 0.99 | 0 | 0.39 | 0.68 | 0.73 | 0.73 | 1 | 0.96 | 0.94 | 0 | 1 |

| rock | 664 | 1551 | 0.92 | 0 | 0.28 | 0.79 | 0.81 | 0.8 | 0.99 | 0.99 | 0.87 | 0 | 0.72 |

| vocal | 270 | 1945 | 1 | 0 | 0.08 | 0.58 | 0.61 | 0.6 | 1 | 0.99 | 0.89 | 0 | 1 |

| acoustic | 38 | 2177 | 1 | 0 | 0.45 | 0.68 | 0.68 | 0.66 | 1 | 0.96 | 0.99 | 0.67 | 1 |

| hip hop | 152 | 2063 | 0.99 | 0 | 0.67 | 0.83 | 0.87 | 0.87 | 0.99 | 1 | 0.97 | 0 | 0.95 |

| guitar | 849 | 1366 | 0.94 | 0.01 | 0.22 | 0.71 | 0.73 | 0.73 | 0.95 | 1 | 0.78 | 0 | 0.62 |

| bass | 420 | 1795 | 1 | 0 | 0.05 | 0.57 | 0.58 | 0.6 | 1 | 0.94 | 0.82 | 0 | 1 |

| british | 82 | 2133 | 1 | 0 | 0.22 | 0.69 | 0.7 | 0.67 | 1 | 1 | 0.97 | 0 | 1 |

| dance | 324 | 1891 | 1 | 0 | 0.19 | 0.75 | 0.8 | 0.8 | 0.99 | 1 | 0.92 | 0 | 0.94 |

| r&b | 38 | 2177 | 0.99 | 0 | 0.66 | 0.75 | 0.76 | 0.74 | 1 | 1 | 0.99 | 0.67 | 1 |

| electronic | 485 | 1730 | 0.97 | 0 | 0.27 | 0.72 | 0.77 | 0.77 | 0.96 | 1 | 0.86 | 0 | 0.83 |

| drum | 927 | 1288 | 1 | 0.01 | 0.02 | 0.56 | 0.57 | 0.6 | 0.66 | 1 | 0.64 | 0 | 0.7 |

| soft | 62 | 2153 | 1 | 0 | 0.3 | 0.68 | 0.69 | 0.68 | 0.99 | 1 | 0.98 | 0 | 1 |

| punk | 51 | 2164 | 1 | 0 | 0.6 | 0.8 | 0.82 | 0.81 | 1 | 1 | 0.98 | 0 | 1 |

| country | 74 | 2141 | 0.95 | 0 | 0.19 | 0.73 | 0.73 | 0.76 | 0.98 | 1 | 0.97 | 0 | 1 |

| drum machine | 89 | 2126 | 1 | 0 | 0.43 | 0.65 | 0.69 | 0.68 | 1 | 1 | 0.97 | 0 | 1 |

| voice | 148 | 2067 | 1 | 0 | 0.06 | 0.52 | 0.54 | 0.54 | 1 | 0.65 | 0.93 | 0 | 1 |

| quiet | 43 | 2172 | 0.99 | 4.42E-004 | 0.83 | 0.84 | 0.85 | 0.85 | 1 | 0.97 | 0.99 | 0 | 1 |

| distortion | 60 | 2155 | 1 | 0 | 0.53 | 0.77 | 0.78 | 0.78 | 1 | 1 | 0.98 | 0 | 1 |

| synth | 480 | 1735 | 1 | 0 | 0.14 | 0.65 | 0.68 | 0.68 | 1 | 1 | 0.83 | 0 | 0.98 |

| rap | 157 | 2058 | 0.97 | 0 | 0.64 | 0.85 | 0.89 | 0.89 | 1 | 1 | 0.97 | 0 | 0.95 |

| techno | 248 | 1967 | 1 | 0 | 0.52 | 0.74 | 0.8 | 0.8 | 1 | 1 | 0.94 | 0 | 0.97 |

| organ | 35 | 2180 | 1 | 0 | 0.39 | 0.55 | 0.55 | 0.53 | 1 | 0.99 | 0.98 | 0.33 | 1 |

| trumpet | 39 | 2176 | 1 | 0 | 0.39 | 0.77 | 0.76 | 0.74 | 1 | 1 | 0.98 | 0.33 | 1 |

| MEAN | 198.76 | 2016.24 | 0.99 | 0 | 0.37 | 0.71 | 0.73 | 0.73 | 0.98 | 0.98 | 0.94 | 0.09 | 0.97 |

Summary Binary relevance F-Measure (Average Across All Folds)

| Tag | Positive Examples | Negative Examples | BBE1 | BBE2 | BBE3 | ME1 | ME2 | ME3 | GP1 | GP2 | LB | TB | TTKV |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| metal | 35 | 2180 | 0 | 0.03 | 0.09 | 0.1 | 0.09 | 0.09 | 0 | 0.06 | 0.17 | 0.01 | 0 |

| ambient | 60 | 2155 | 0 | 0.05 | 0.1 | 0.09 | 0.14 | 0.12 | 0 | 0.07 | 0.26 | 0.05 | 0 |

| fast | 109 | 2106 | 0 | 0.09 | 0.14 | 0.17 | 0.18 | 0.19 | 0 | 0.02 | 0.21 | 0.09 | 0 |

| solo | 53 | 2162 | 0 | 0.05 | 0.06 | 0.1 | 0.09 | 0.1 | 0 | 0 | 0.1 | 0.05 | 0 |

| jazz | 138 | 2077 | 0.08 | 0.12 | 0.21 | 0.36 | 0.4 | 0.39 | 0.07 | 0 | 0.4 | 0.12 | 0.02 |

| instrumental | 102 | 2113 | 0 | 0.09 | 0.1 | 0.13 | 0.14 | 0.13 | 0.03 | 0.02 | 0.08 | 0.09 | 0 |

| horns | 40 | 2175 | 0 | 0.04 | 0.04 | 0.05 | 0.06 | 0.05 | 0 | 0.02 | 0.02 | 0.02 | 0 |

| house | 65 | 2150 | 0 | 0.06 | 0.08 | 0.17 | 0.19 | 0.15 | 0 | 0.04 | 0.2 | 0.06 | 0 |

| male | 738 | 1477 | 0.17 | 0.5 | 0.51 | 0.63 | 0.64 | 0.65 | 0.02 | 0 | 0.49 | 0.5 | 0.24 |

| beat | 137 | 2078 | 0 | 0.12 | 0.2 | 0.24 | 0.28 | 0.27 | 0 | 0.03 | 0.25 | 0.12 | 0 |

| strings | 55 | 2160 | 0.05 | 0.05 | 0.08 | 0.13 | 0.13 | 0.14 | 0 | 0 | 0.22 | 0.05 | 0 |

| saxophone | 68 | 2147 | 0 | 0.06 | 0.09 | 0.26 | 0.26 | 0.22 | 0 | 0 | 0.31 | 0.06 | 0 |

| piano | 185 | 2030 | 0.07 | 0.15 | 0.11 | 0.24 | 0.27 | 0.29 | 0 | 0 | 0.34 | 0.15 | 0 |

| loud | 37 | 2178 | 0 | 0.03 | 0.1 | 0.11 | 0.11 | 0.11 | 0 | 0 | 0.19 | 0.02 | 0 |

| noise | 42 | 2173 | 0.05 | 0.04 | 0.09 | 0.08 | 0.08 | 0.09 | 0 | 0 | 0.15 | 0.02 | 0 |

| 80s | 117 | 2098 | 0.01 | 0.1 | 0.11 | 0.16 | 0.16 | 0.18 | 0 | 0.05 | 0.23 | 0.1 | 0 |

| pop | 479 | 1736 | 0.12 | 0.36 | 0.38 | 0.47 | 0.49 | 0.5 | 0.04 | 0 | 0.45 | 0.36 | 0 |

| female | 345 | 1870 | 0.14 | 0.27 | 0.29 | 0.54 | 0.52 | 0.51 | 0.01 | 0.03 | 0.42 | 0.27 | 0.01 |

| slow | 159 | 2056 | 0 | 0.13 | 0.17 | 0.21 | 0.24 | 0.23 | 0.08 | 0 | 0.33 | 0.13 | 0 |

| funk | 37 | 2178 | 0 | 0.03 | 0.05 | 0.08 | 0.08 | 0.07 | 0 | 0.02 | 0.03 | 0.02 | 0 |

| keyboard | 42 | 2173 | 0 | 0.04 | 0.04 | 0.04 | 0.05 | 0.04 | 0 | 0 | 0 | 0.04 | 0 |

| electronica | 166 | 2049 | 0.09 | 0.14 | 0.2 | 0.24 | 0.28 | 0.27 | 0 | 0.03 | 0.27 | 0.14 | 0 |

| rock | 664 | 1551 | 0.27 | 0.46 | 0.54 | 0.67 | 0.68 | 0.67 | 0.08 | 0.02 | 0.69 | 0.46 | 0.26 |

| vocal | 270 | 1945 | 0 | 0.22 | 0.22 | 0.26 | 0.28 | 0.27 | 0 | 0 | 0.22 | 0.22 | 0 |

| acoustic | 38 | 2177 | 0 | 0.03 | 0.05 | 0.07 | 0.06 | 0.06 | 0 | 0 | 0.18 | 0.01 | 0 |

| hip hop | 152 | 2063 | 0.15 | 0.13 | 0.28 | 0.4 | 0.47 | 0.46 | 0.22 | 0 | 0.56 | 0.13 | 0.05 |

| guitar | 849 | 1366 | 0.18 | 0.56 | 0.61 | 0.66 | 0.67 | 0.67 | 0.18 | 0 | 0.64 | 0.55 | 0.37 |

| bass | 420 | 1795 | 0 | 0.32 | 0.32 | 0.3 | 0.32 | 0.33 | 0 | 0.1 | 0.23 | 0.32 | 0 |

| british | 82 | 2133 | 0.02 | 0.07 | 0.08 | 0.13 | 0.13 | 0.13 | 0 | 0 | 0.1 | 0.07 | 0 |

| dance | 324 | 1891 | 0.07 | 0.25 | 0.29 | 0.46 | 0.52 | 0.5 | 0.06 | 0 | 0.54 | 0.25 | 0.06 |

| r&b | 38 | 2177 | 0.04 | 0.03 | 0.06 | 0.08 | 0.09 | 0.09 | 0.04 | 0 | 0.18 | 0.01 | 0 |

| electronic | 485 | 1730 | 0.28 | 0.36 | 0.42 | 0.5 | 0.55 | 0.55 | 0.19 | 0 | 0.51 | 0.36 | 0.18 |

| drum | 927 | 1288 | 0.01 | 0.59 | 0.6 | 0.55 | 0.54 | 0.53 | 0.23 | 0 | 0.5 | 0.59 | 0.33 |

| soft | 62 | 2153 | 0 | 0.05 | 0.07 | 0.11 | 0.13 | 0.11 | 0.08 | 0 | 0.2 | 0.05 | 0 |

| punk | 51 | 2164 | 0 | 0.05 | 0.08 | 0.15 | 0.15 | 0.13 | 0 | 0 | 0.29 | 0.05 | 0 |

| country | 74 | 2141 | 0.13 | 0.06 | 0.08 | 0.14 | 0.14 | 0.15 | 0.03 | 0 | 0.15 | 0.06 | 0 |

| drum machine | 89 | 2126 | 0 | 0.08 | 0.12 | 0.13 | 0.15 | 0.15 | 0 | 0 | 0.22 | 0.08 | 0 |

| voice | 148 | 2067 | 0 | 0.13 | 0.13 | 0.13 | 0.15 | 0.13 | 0 | 0.12 | 0.08 | 0.13 | 0 |

| quiet | 43 | 2172 | 0.19 | 0.03 | 0.21 | 0.13 | 0.16 | 0.15 | 0 | 0.09 | 0.25 | 0.04 | 0 |

| distortion | 60 | 2155 | 0 | 0.05 | 0.09 | 0.14 | 0.13 | 0.13 | 0 | 0 | 0.12 | 0.05 | 0 |

| synth | 480 | 1735 | 0.03 | 0.36 | 0.38 | 0.46 | 0.45 | 0.45 | 0 | 0 | 0.39 | 0.35 | 0.04 |

| rap | 157 | 2058 | 0.48 | 0.13 | 0.21 | 0.46 | 0.52 | 0.52 | 0 | 0 | 0.63 | 0.13 | 0.06 |

| techno | 248 | 1967 | 0 | 0.2 | 0.33 | 0.39 | 0.47 | 0.45 | 0 | 0 | 0.49 | 0.2 | 0.02 |

| organ | 35 | 2180 | 0 | 0.03 | 0.03 | 0.03 | 0.02 | 0.02 | 0 | 0 | 0 | 0.02 | 0 |

| trumpet | 39 | 2176 | 0 | 0.03 | 0.05 | 0.08 | 0.09 | 0.07 | 0 | 0 | 0.13 | 0.02 | 0 |

| MEAN | 198.76 | 2016.24 | 0.06 | 0.15 | 0.19 | 0.24 | 0.26 | 0.26 | 0.03 | 0.02 | 0.28 | 0.15 | 0.04 |

Summary Binary Accuracy (Average Across All Folds)

| Tag | Positive Examples | Negative Examples | BBE1 | BBE2 | BBE3 | ME1 | ME2 | ME3 | GP1 | GP2 | LB | TB | TTKV |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| metal | 35 | 2180 | 0.98 | 0.02 | 0.64 | 0.79 | 0.79 | 0.78 | 0.98 | 0.95 | 0.97 | 0.66 | 0.98 |

| ambient | 60 | 2155 | 0.97 | 0.03 | 0.86 | 0.74 | 0.8 | 0.74 | 0.97 | 0.95 | 0.96 | 0.03 | 0.97 |

| fast | 109 | 2106 | 0.95 | 0.05 | 0.43 | 0.68 | 0.69 | 0.7 | 0.95 | 0.92 | 0.92 | 0.05 | 0.95 |

| solo | 53 | 2162 | 0.98 | 0.03 | 0.19 | 0.74 | 0.72 | 0.73 | 0.98 | 0.98 | 0.96 | 0.02 | 0.98 |

| jazz | 138 | 2077 | 0.93 | 0.07 | 0.5 | 0.84 | 0.86 | 0.86 | 0.94 | 0.94 | 0.93 | 0.06 | 0.92 |

| instrumental | 102 | 2113 | 0.95 | 0.05 | 0.39 | 0.63 | 0.65 | 0.63 | 0.65 | 0.91 | 0.92 | 0.05 | 0.95 |

| horns | 40 | 2175 | 0.98 | 0.02 | 0.32 | 0.66 | 0.67 | 0.64 | 0.98 | 0.92 | 0.96 | 0.34 | 0.98 |

| house | 65 | 2150 | 0.97 | 0.03 | 0.43 | 0.76 | 0.8 | 0.76 | 0.97 | 0.93 | 0.95 | 0.03 | 0.97 |

| male | 738 | 1477 | 0.64 | 0.34 | 0.37 | 0.72 | 0.73 | 0.74 | 0.66 | 0.66 | 0.66 | 0.34 | 0.59 |

| beat | 137 | 2078 | 0.94 | 0.06 | 0.57 | 0.71 | 0.75 | 0.75 | 0.94 | 0.9 | 0.91 | 0.06 | 0.94 |

| strings | 55 | 2160 | 0.95 | 0.03 | 0.47 | 0.76 | 0.75 | 0.77 | 0.97 | 0.98 | 0.96 | 0.02 | 0.98 |

| saxophone | 68 | 2147 | 0.97 | 0.03 | 0.46 | 0.85 | 0.85 | 0.84 | 0.97 | 0.95 | 0.96 | 0.03 | 0.97 |

| piano | 185 | 2030 | 0.88 | 0.09 | 0.45 | 0.66 | 0.68 | 0.7 | 0.92 | 0.92 | 0.89 | 0.08 | 0.92 |

| loud | 37 | 2178 | 0.98 | 0.02 | 0.56 | 0.81 | 0.82 | 0.81 | 0.98 | 0.98 | 0.97 | 0.34 | 0.98 |

| noise | 42 | 2173 | 0.93 | 0.02 | 0.69 | 0.76 | 0.77 | 0.78 | 0.98 | 0.97 | 0.97 | 0.34 | 0.98 |

| 80s | 117 | 2098 | 0.95 | 0.06 | 0.17 | 0.68 | 0.68 | 0.69 | 0.95 | 0.88 | 0.92 | 0.05 | 0.95 |

| pop | 479 | 1736 | 0.75 | 0.22 | 0.29 | 0.68 | 0.68 | 0.69 | 0.78 | 0.78 | 0.76 | 0.22 | 0.78 |

| female | 345 | 1870 | 0.8 | 0.16 | 0.29 | 0.8 | 0.8 | 0.79 | 0.85 | 0.83 | 0.82 | 0.15 | 0.85 |

| slow | 159 | 2056 | 0.93 | 0.07 | 0.32 | 0.65 | 0.69 | 0.68 | 0.92 | 0.93 | 0.9 | 0.07 | 0.93 |

| funk | 37 | 2178 | 0.98 | 0.02 | 0.43 | 0.71 | 0.71 | 0.7 | 0.98 | 0.96 | 0.97 | 0.34 | 0.98 |

| keyboard | 42 | 2173 | 0.98 | 0.02 | 0.17 | 0.59 | 0.56 | 0.53 | 0.98 | 0.98 | 0.96 | 0.02 | 0.98 |

| electronica | 166 | 2049 | 0.92 | 0.08 | 0.42 | 0.69 | 0.73 | 0.73 | 0.93 | 0.89 | 0.89 | 0.07 | 0.93 |

| rock | 664 | 1551 | 0.72 | 0.3 | 0.49 | 0.78 | 0.79 | 0.78 | 0.71 | 0.7 | 0.81 | 0.3 | 0.58 |

| vocal | 270 | 1945 | 0.88 | 0.13 | 0.19 | 0.59 | 0.61 | 0.6 | 0.88 | 0.87 | 0.81 | 0.12 | 0.88 |

| acoustic | 38 | 2177 | 0.98 | 0.02 | 0.45 | 0.68 | 0.68 | 0.66 | 0.98 | 0.94 | 0.97 | 0.66 | 0.98 |

| hip hop | 152 | 2063 | 0.93 | 0.07 | 0.69 | 0.83 | 0.87 | 0.87 | 0.94 | 0.93 | 0.94 | 0.07 | 0.89 |

| guitar | 849 | 1366 | 0.63 | 0.39 | 0.52 | 0.72 | 0.73 | 0.73 | 0.64 | 0.62 | 0.72 | 0.38 | 0.52 |

| bass | 420 | 1795 | 0.81 | 0.19 | 0.22 | 0.56 | 0.57 | 0.59 | 0.81 | 0.78 | 0.71 | 0.19 | 0.81 |

| british | 82 | 2133 | 0.96 | 0.04 | 0.24 | 0.69 | 0.7 | 0.67 | 0.96 | 0.96 | 0.93 | 0.04 | 0.96 |

| dance | 324 | 1891 | 0.86 | 0.15 | 0.31 | 0.75 | 0.8 | 0.79 | 0.85 | 0.85 | 0.87 | 0.15 | 0.81 |

| r&b | 38 | 2177 | 0.98 | 0.02 | 0.66 | 0.75 | 0.76 | 0.74 | 0.98 | 0.98 | 0.97 | 0.66 | 0.98 |

| electronic | 485 | 1730 | 0.8 | 0.22 | 0.42 | 0.71 | 0.76 | 0.76 | 0.8 | 0.78 | 0.79 | 0.22 | 0.69 |

| drum | 927 | 1288 | 0.58 | 0.42 | 0.43 | 0.58 | 0.58 | 0.59 | 0.52 | 0.58 | 0.58 | 0.42 | 0.53 |

| soft | 62 | 2153 | 0.97 | 0.03 | 0.32 | 0.68 | 0.69 | 0.68 | 0.97 | 0.97 | 0.95 | 0.03 | 0.97 |

| punk | 51 | 2164 | 0.98 | 0.03 | 0.6 | 0.8 | 0.81 | 0.8 | 0.98 | 0.98 | 0.97 | 0.02 | 0.98 |

| country | 74 | 2141 | 0.92 | 0.04 | 0.22 | 0.73 | 0.73 | 0.75 | 0.95 | 0.97 | 0.94 | 0.03 | 0.97 |

| drum machine | 89 | 2126 | 0.96 | 0.04 | 0.45 | 0.65 | 0.69 | 0.69 | 0.96 | 0.96 | 0.94 | 0.04 | 0.96 |

| voice | 148 | 2067 | 0.93 | 0.07 | 0.12 | 0.52 | 0.54 | 0.54 | 0.93 | 0.63 | 0.88 | 0.07 | 0.93 |

| quiet | 43 | 2172 | 0.98 | 0.02 | 0.83 | 0.84 | 0.85 | 0.84 | 0.98 | 0.95 | 0.97 | 0.02 | 0.98 |

| distortion | 60 | 2155 | 0.97 | 0.03 | 0.54 | 0.77 | 0.78 | 0.77 | 0.97 | 0.97 | 0.95 | 0.03 | 0.97 |

| synth | 480 | 1735 | 0.79 | 0.22 | 0.32 | 0.66 | 0.67 | 0.67 | 0.78 | 0.78 | 0.74 | 0.22 | 0.77 |

| rap | 157 | 2058 | 0.93 | 0.07 | 0.65 | 0.86 | 0.89 | 0.89 | 0.93 | 0.93 | 0.95 | 0.07 | 0.89 |

| techno | 248 | 1967 | 0.89 | 0.11 | 0.56 | 0.74 | 0.8 | 0.8 | 0.89 | 0.89 | 0.89 | 0.11 | 0.86 |

| organ | 35 | 2180 | 0.98 | 0.02 | 0.39 | 0.55 | 0.54 | 0.52 | 0.98 | 0.97 | 0.97 | 0.34 | 0.98 |

| trumpet | 39 | 2176 | 0.98 | 0.02 | 0.39 | 0.77 | 0.76 | 0.73 | 0.98 | 0.98 | 0.97 | 0.34 | 0.98 |

| MEAN | 198.76 | 2016.24 | 0.91 | 0.09 | 0.43 | 0.71 | 0.73 | 0.72 | 0.9 | 0.89 | 0.9 | 0.17 | 0.9 |
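
The four per-tag measures tabulated above can all be derived from one confusion matrix. The sketch below assumes the standard definitions: positive example accuracy as the true-positive rate, negative example accuracy as the true-negative rate, and F-measure as the harmonic mean of precision and recall. The function name `tag_metrics` and the pooled counts are illustrative only. The published figures are averages over folds, so pooled values need not match them exactly.

```python
def tag_metrics(tp, fn, tn, fp):
    """Per-tag measures from confusion counts (assumed standard definitions)."""
    pos = tp + fn          # clips that carry the tag
    neg = tn + fp          # clips that do not carry the tag
    pos_acc = tp / pos if pos else 0.0
    neg_acc = tn / neg if neg else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    denom = precision + pos_acc
    f_measure = 2 * precision * pos_acc / denom if denom else 0.0
    accuracy = (tp + tn) / (pos + neg)
    return {
        "positive_example_accuracy": pos_acc,
        "negative_example_accuracy": neg_acc,
        "f_measure": f_measure,
        "accuracy": accuracy,
    }


# Hypothetical system that assigns "metal" to every clip
# (35 positive and 2180 negative examples, as listed for that tag).
metal_all_positive = tag_metrics(tp=35, fn=0, tn=0, fp=2180)
print({name: round(value, 2) for name, value in metal_all_positive.items()})
```

For this always-positive case the sketch gives a positive example accuracy of 1, a negative example accuracy of 0, an F-measure of about 0.03 and an accuracy of about 0.02, which is in line with the BBE2 entries for "metal". It also illustrates why overall accuracy alone is a weak indicator when a tag has few positive examples.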

Summary AUC-ROC Tag (Average Across All Folds)

| Tag | BBE1 | BBE2 | BBE3 | ME1 | ME2 | ME3 | LB | TB | TTKV |

|---|---|---|---|---|---|---|---|---|---|

| metal | 0.78 | 0.3 | 0.84 | 0.85 | 0.85 | 0.85 | 0.87 | 0.48 | 0.52 |

| ambient | 0.76 | 0.76 | 0.76 | 0.74 | 0.79 | 0.76 | 0.82 | 0.5 | 0.5 |

| fast | 0.58 | 0.38 | 0.77 | 0.73 | 0.77 | 0.78 | 0.75 | 0.55 | 0.52 |

| solo | 0.57 | 0.6 | 0.69 | 0.74 | 0.71 | 0.7 | 0.64 | 0.59 | 0.6 |

| jazz | 0.78 | 0.69 | 0.85 | 0.89 | 0.9 | 0.89 | 0.84 | 0.49 | 0.52 |

| instrumental | 0.53 | 0.6 | 0.6 | 0.66 | 0.69 | 0.67 | 0.57 | 0.46 | 0.47 |

| horns | 0.57 | 0.48 | 0.56 | 0.63 | 0.64 | 0.57 | 0.64 | 0.38 | 0.38 |

| house | 0.68 | 0.4 | 0.79 | 0.83 | 0.87 | 0.84 | 0.84 | 0.49 | 0.49 |

| male | 0.6 | 0.44 | 0.75 | 0.79 | 0.8 | 0.8 | 0.69 | 0.49 | 0.49 |

| beat | 0.68 | 0.43 | 0.79 | 0.79 | 0.82 | 0.81 | 0.84 | 0.5 | 0.49 |

| strings | 0.67 | 0.58 | 0.74 | 0.79 | 0.82 | 0.82 | 0.84 | 0.48 | 0.5 |

| saxophone | 0.78 | 0.7 | 0.81 | 0.9 | 0.9 | 0.88 | 0.84 | 0.52 | 0.5 |

| piano | 0.67 | 0.68 | 0.77 | 0.75 | 0.78 | 0.78 | 0.81 | 0.49 | 0.5 |

| loud | 0.82 | 0.24 | 0.87 | 0.86 | 0.86 | 0.87 | 0.87 | 0.49 | 0.5 |

| noise | 0.73 | 0.4 | 0.83 | 0.75 | 0.77 | 0.77 | 0.8 | 0.45 | 0.46 |

| 80s | 0.56 | 0.5 | 0.67 | 0.69 | 0.7 | 0.73 | 0.76 | 0.5 | 0.5 |

| pop | 0.64 | 0.43 | 0.73 | 0.74 | 0.75 | 0.76 | 0.74 | 0.49 | 0.48 |

| female | 0.59 | 0.48 | 0.75 | 0.86 | 0.85 | 0.84 | 0.75 | 0.51 | 0.5 |

| slow | 0.72 | 0.63 | 0.79 | 0.7 | 0.75 | 0.74 | 0.82 | 0.52 | 0.5 |

| funk | 0.66 | 0.42 | 0.67 | 0.81 | 0.81 | 0.79 | 0.76 | 0.58 | 0.52 |

| keyboard | 0.51 | 0.6 | 0.58 | 0.55 | 0.54 | 0.51 | 0.62 | 0.5 | 0.53 |

| electronica | 0.65 | 0.5 | 0.79 | 0.76 | 0.78 | 0.77 | 0.77 | 0.45 | 0.46 |

| rock | 0.75 | 0.37 | 0.86 | 0.85 | 0.86 | 0.86 | 0.87 | 0.51 | 0.5 |

| vocal | 0.55 | 0.47 | 0.57 | 0.63 | 0.65 | 0.65 | 0.66 | 0.49 | 0.48 |

| acoustic | 0.67 | 0.62 | 0.73 | 0.74 | 0.73 | 0.72 | 0.84 | 0.52 | 0.53 |

| hip hop | 0.82 | 0.37 | 0.89 | 0.92 | 0.93 | 0.92 | 0.93 | 0.54 | 0.52 |

| guitar | 0.69 | 0.42 | 0.81 | 0.79 | 0.8 | 0.8 | 0.79 | 0.5 | 0.5 |

| bass | 0.5 | 0.41 | 0.58 | 0.55 | 0.57 | 0.61 | 0.58 | 0.5 | 0.5 |

| british | 0.61 | 0.45 | 0.57 | 0.69 | 0.7 | 0.69 | 0.72 | 0.51 | 0.5 |

| dance | 0.74 | 0.41 | 0.87 | 0.8 | 0.85 | 0.84 | 0.86 | 0.49 | 0.49 |

| r&b | 0.7 | 0.45 | 0.74 | 0.8 | 0.81 | 0.81 | 0.83 | 0.48 | 0.45 |

| electronic | 0.71 | 0.48 | 0.81 | 0.78 | 0.81 | 0.8 | 0.77 | 0.49 | 0.49 |

| drum | 0.57 | 0.42 | 0.63 | 0.61 | 0.61 | 0.61 | 0.61 | 0.51 | 0.49 |

| soft | 0.73 | 0.67 | 0.8 | 0.75 | 0.79 | 0.74 | 0.83 | 0.49 | 0.49 |

| punk | 0.71 | 0.39 | 0.67 | 0.87 | 0.84 | 0.83 | 0.84 | 0.53 | 0.53 |

| country | 0.67 | 0.5 | 0.81 | 0.75 | 0.75 | 0.75 | 0.82 | 0.51 | 0.49 |

| drum machine | 0.64 | 0.47 | 0.76 | 0.73 | 0.76 | 0.75 | 0.78 | 0.5 | 0.5 |

| voice | 0.54 | 0.46 | 0.52 | 0.52 | 0.53 | 0.53 | 0.6 | 0.53 | 0.51 |

| quiet | 0.7 | 0.75 | 0.8 | 0.86 | 0.89 | 0.86 | 0.93 | 0.5 | 0.48 |

| distortion | 0.66 | 0.41 | 0.78 | 0.77 | 0.79 | 0.8 | 0.82 | 0.46 | 0.46 |

| synth | 0.59 | 0.51 | 0.72 | 0.71 | 0.72 | 0.72 | 0.68 | 0.5 | 0.5 |

| rap | 0.85 | 0.36 | 0.93 | 0.94 | 0.95 | 0.94 | 0.93 | 0.53 | 0.53 |

| techno | 0.75 | 0.41 | 0.86 | 0.82 | 0.87 | 0.86 | 0.85 | 0.46 | 0.47 |

| organ | 0.51 | 0.62 | 0.54 | 0.5 | 0.46 | 0.51 | 0.54 | 0.54 | 0.55 |

| trumpet | 0.64 | 0.61 | 0.7 | 0.8 | 0.79 | 0.73 | 0.71 | 0.53 | 0.51 |

| MEAN | 0.66 | 0.5 | 0.74 | 0.75 | 0.77 | 0.76 | 0.77 | 0.5 | 0.5 |
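
The AUC-ROC figures above summarise how well a system's real-valued scores rank positive examples ahead of negative ones. A minimal sketch of that statistic follows, assuming the usual pairwise (Mann-Whitney) formulation with ties counted as half. The function `auc_roc` and the toy scores are illustrative and are not taken from the evaluation code.

```python
def auc_roc(scores, labels):
    """AUC as the fraction of (positive, negative) pairs ranked correctly.

    Ties between a positive and a negative score count as half a win.
    """
    pos = [s for s, y in zip(scores, labels) if y]
    neg = [s for s, y in zip(scores, labels) if not y]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative example")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


labels = [1, 0, 1, 0, 0]                      # toy ground truth for one tag
informative = [0.9, 0.2, 0.7, 0.8, 0.1]       # scores that mostly rank positives first
constant = [0.5, 0.5, 0.5, 0.5, 0.5]          # scores carrying no information

print(round(auc_roc(informative, labels), 3))
print(auc_roc(constant, labels))
```

A score that carries no information about the label yields 0.5, which is the chance level against which the MEAN row can be read.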

Friedman test results

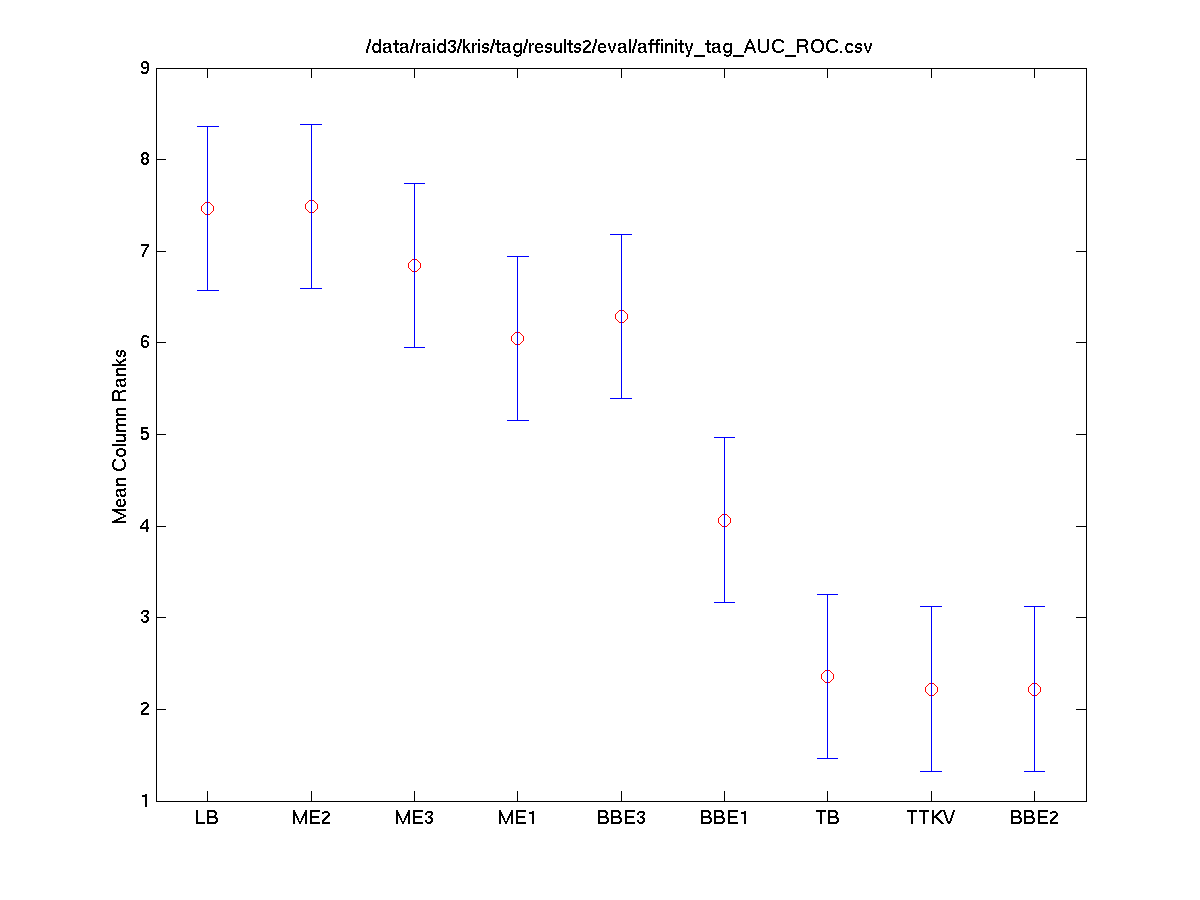

AUC-ROC Tag Friedman test

The following table and plot show the results of Friedman's ANOVA with Tukey-Kramer multiple comparisons computed over the Area Under the ROC curve (AUC-ROC) for each tag in the test, averaged over all folds.

| TeamID | TeamID | Lowerbound | Mean | Upperbound | Significance |

|---|---|---|---|---|---|

| BBE1 | BBE2 | -1.8130 | -0.0222 | 1.7686 | FALSE |

| BBE1 | BBE3 | -1.1686 | 0.6222 | 2.4130 | FALSE |

| BBE1 | ME1 | -0.3686 | 1.4222 | 3.2130 | FALSE |

| BBE1 | ME2 | -0.6130 | 1.1778 | 2.9686 | FALSE |

| BBE1 | ME3 | 1.6092 | 3.4000 | 5.1908 | TRUE |

| BBE1 | LB | 3.3203 | 5.1111 | 6.9019 | TRUE |

| BBE1 | TB | 3.4537 | 5.2444 | 7.0352 | TRUE |

| BBE1 | TTKV | 3.4537 | 5.2444 | 7.0352 | TRUE |

| BBE2 | BBE3 | -1.1463 | 0.6444 | 2.4352 | FALSE |

| BBE2 | ME1 | -0.3463 | 1.4444 | 3.2352 | FALSE |

| BBE2 | ME2 | -0.5908 | 1.2000 | 2.9908 | FALSE |

| BBE2 | ME3 | 1.6314 | 3.4222 | 5.2130 | TRUE |

| BBE2 | LB | 3.3425 | 5.1333 | 6.9241 | TRUE |

| BBE2 | TB | 3.4759 | 5.2667 | 7.0575 | TRUE |

| BBE2 | TTKV | 3.4759 | 5.2667 | 7.0575 | TRUE |

| BBE3 | ME1 | -0.9908 | 0.8000 | 2.5908 | FALSE |

| BBE3 | ME2 | -1.2352 | 0.5556 | 2.3463 | FALSE |

| BBE3 | ME3 | 0.9870 | 2.7778 | 4.5686 | TRUE |

| BBE3 | LB | 2.6981 | 4.4889 | 6.2797 | TRUE |

| BBE3 | TB | 2.8314 | 4.6222 | 6.4130 | TRUE |

| BBE3 | TTKV | 2.8314 | 4.6222 | 6.4130 | TRUE |

| ME1 | ME2 | -2.0352 | -0.2444 | 1.5463 | FALSE |

| ME1 | ME3 | 0.1870 | 1.9778 | 3.7686 | TRUE |

| ME1 | LB | 1.8981 | 3.6889 | 5.4797 | TRUE |

| ME1 | TB | 2.0314 | 3.8222 | 5.6130 | TRUE |

| ME1 | TTKV | 2.0314 | 3.8222 | 5.6130 | TRUE |

| ME2 | ME3 | 0.4314 | 2.2222 | 4.0130 | TRUE |

| ME2 | LB | 2.1425 | 3.9333 | 5.7241 | TRUE |

| ME2 | TB | 2.2759 | 4.0667 | 5.8575 | TRUE |

| ME2 | TTKV | 2.2759 | 4.0667 | 5.8575 | TRUE |

| ME3 | LB | -0.0797 | 1.7111 | 3.5019 | FALSE |

| ME3 | TB | 0.0537 | 1.8444 | 3.6352 | TRUE |

| ME3 | TTKV | 0.0537 | 1.8444 | 3.6352 | TRUE |

| LB | TB | -1.6575 | 0.1333 | 1.9241 | FALSE |

| LB | TTKV | -1.6575 | 0.1333 | 1.9241 | FALSE |

| TB | TTKV | -1.7908 | 0.0000 | 1.7908 | FALSE |
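
Friedman's test works on ranks rather than raw scores: the systems are ranked within each tag, and the per-system mean ranks are then compared. The sketch below shows that ranking step and the uncorrected Friedman chi-square statistic in plain Python. It assumes ascending ranks with tied values sharing the mean rank, and it does not reproduce the Tukey-Kramer interval computation. The helper names are hypothetical. The three input rows are the BBE3, ME2 and TB values for "metal", "jazz" and "rap" from the AUC-ROC Tag table.

```python
def average_ranks(row):
    """Rank values in ascending order (1 = smallest); ties share the mean rank."""
    order = sorted(range(len(row)), key=lambda i: row[i])
    ranks = [0.0] * len(row)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values equal to row[order[i]].
        while j + 1 < len(order) and row[order[j + 1]] == row[order[i]]:
            j += 1
        shared = (i + j) / 2 + 1      # mean of the 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = shared
        i = j + 1
    return ranks


def friedman(scores):
    """scores[b][t] is the score of system t on block (tag) b.

    Returns the mean rank of each system and the Friedman chi-square
    statistic without tie correction.
    """
    n = len(scores)
    k = len(scores[0])
    rank_sums = [0.0] * k
    for row in scores:
        for t, r in enumerate(average_ranks(row)):
            rank_sums[t] += r
    mean_ranks = [s / n for s in rank_sums]
    centre = (k + 1) / 2
    chi_square = 12.0 * n / (k * (k + 1)) * sum((m - centre) ** 2 for m in mean_ranks)
    return mean_ranks, chi_square


# Columns: BBE3, ME2, TB. Rows: metal, jazz, rap (AUC-ROC Tag values).
subset = [
    [0.84, 0.85, 0.48],
    [0.85, 0.90, 0.49],
    [0.93, 0.95, 0.53],
]
mean_ranks, chi_square = friedman(subset)
print(mean_ranks, chi_square)
```

Under this reading, the Mean column of the comparison table would be the difference between two systems' mean ranks. That interpretation is an assumption based on how such multiple-comparison output is usually laid out.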

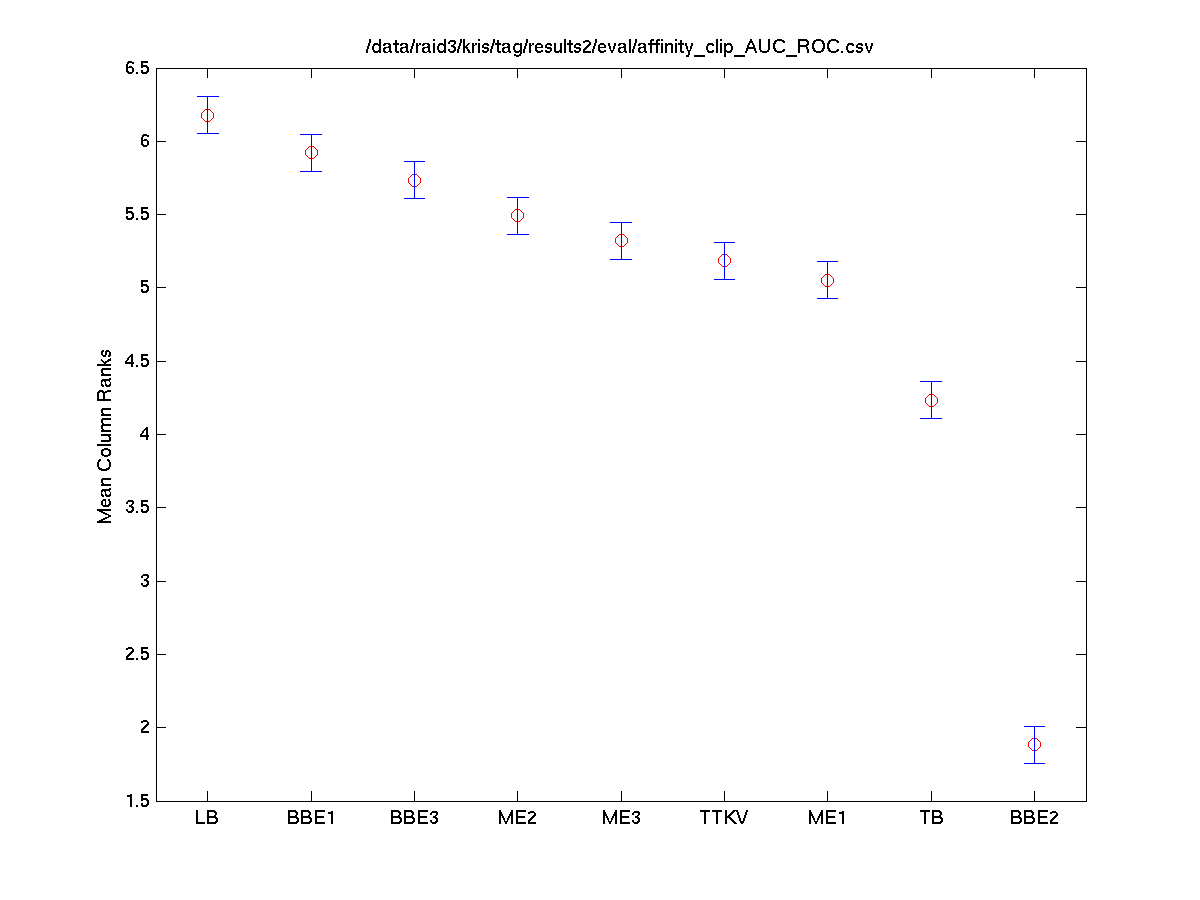

AUC-ROC Track Friedman test

The following table and plot show the results of Friedman's ANOVA with Tukey-Kramer multiple comparisons computed over the Area Under the ROC curve (AUC-ROC) for each track in the test. Each track appears exactly once over all three folds of the test. However, we are uncertain whether these measurements are truly independent, as multiple tracks from each artist are used.

| TeamID | TeamID | Lowerbound | Mean | Upperbound | Significance |

|---|---|---|---|---|---|

| BBE1 | BBE2 | 0.0026 | 0.2567 | 0.5107 | TRUE |

| BBE1 | BBE3 | 0.1900 | 0.4440 | 0.6981 | TRUE |

| BBE1 | ME1 | 0.4320 | 0.6860 | 0.9401 | TRUE |

| BBE1 | ME2 | 0.6019 | 0.8560 | 1.1100 | TRUE |

| BBE1 | ME3 | 0.7392 | 0.9932 | 1.2473 | TRUE |

| BBE1 | LB | 0.8712 | 1.1253 | 1.3793 | TRUE |

| BBE1 | TB | 1.6886 | 1.9427 | 2.1967 | TRUE |

| BBE1 | TTKV | 4.0410 | 4.2950 | 4.5491 | TRUE |

| BBE2 | BBE3 | -0.0667 | 0.1874 | 0.4414 | FALSE |

| BBE2 | ME1 | 0.1753 | 0.4293 | 0.6834 | TRUE |

| BBE2 | ME2 | 0.3453 | 0.5993 | 0.8534 | TRUE |

| BBE2 | ME3 | 0.4825 | 0.7366 | 0.9906 | TRUE |

| BBE2 | LB | 0.6146 | 0.8686 | 1.1227 | TRUE |

| BBE2 | TB | 1.4320 | 1.6860 | 1.9401 | TRUE |

| BBE2 | TTKV | 3.7843 | 4.0384 | 4.2924 | TRUE |

| BBE3 | ME1 | -0.0121 | 0.2420 | 0.4960 | FALSE |

| BBE3 | ME2 | 0.1579 | 0.4120 | 0.6660 | TRUE |

| BBE3 | ME3 | 0.2952 | 0.5492 | 0.8033 | TRUE |

| BBE3 | LB | 0.4272 | 0.6813 | 0.9353 | TRUE |

| BBE3 | TB | 1.2446 | 1.4986 | 1.7527 | TRUE |

| BBE3 | TTKV | 3.5970 | 3.8510 | 4.1051 | TRUE |

| ME1 | ME2 | -0.0841 | 0.1700 | 0.4240 | FALSE |

| ME1 | ME3 | 0.0532 | 0.3072 | 0.5613 | TRUE |

| ME1 | LB | 0.1852 | 0.4393 | 0.6933 | TRUE |

| ME1 | TB | 1.0026 | 1.2567 | 1.5107 | TRUE |

| ME1 | TTKV | 3.3550 | 3.6090 | 3.8631 | TRUE |

| ME2 | ME3 | -0.1168 | 0.1372 | 0.3913 | FALSE |

| ME2 | LB | 0.0153 | 0.2693 | 0.5233 | TRUE |

| ME2 | TB | 0.8326 | 1.0867 | 1.3407 | TRUE |

| ME2 | TTKV | 3.1850 | 3.4391 | 3.6931 | TRUE |

| ME3 | LB | -0.1220 | 0.1321 | 0.3861 | FALSE |

| ME3 | TB | 0.6954 | 0.9494 | 1.2035 | TRUE |

| ME3 | TTKV | 3.0478 | 3.3018 | 3.5559 | TRUE |

| LB | TB | 0.5633 | 0.8174 | 1.0714 | TRUE |

| LB | TTKV | 2.9157 | 3.1698 | 3.4238 | TRUE |

| TB | TTKV | 2.0983 | 2.3524 | 2.6064 | TRUE |
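
The Significance column in these comparison tables can be read directly from the bounds: a pair is flagged TRUE when the interval between Lowerbound and Upperbound does not contain zero. The short check below applies that rule to four rows copied from the table above. It assumes the bounds delimit a confidence interval around the Mean column, and the helper name `excludes_zero` is hypothetical.

```python
def excludes_zero(lower, upper):
    """True when the interval [lower, upper] lies entirely on one side of zero."""
    return lower > 0 or upper < 0


# (system A, system B, lower bound, mean, upper bound, reported significance)
rows = [
    ("BBE1", "BBE2", 0.0026, 0.2567, 0.5107, True),
    ("BBE2", "BBE3", -0.0667, 0.1874, 0.4414, False),
    ("ME1", "ME2", -0.0841, 0.1700, 0.4240, False),
    ("TB", "TTKV", 2.0983, 2.3524, 2.6064, True),
]

agreement = [excludes_zero(lo, hi) == flagged for _, _, lo, _, hi, flagged in rows]
half_widths = [round((hi - lo) / 2, 3) for _, _, lo, _, hi, _ in rows]
print(agreement)
print(half_widths)
```

Every sampled row agrees with the rule, and the interval half-width is the same (about 0.254) for each pair, which is what one would expect when all systems are compared over the same set of tracks.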

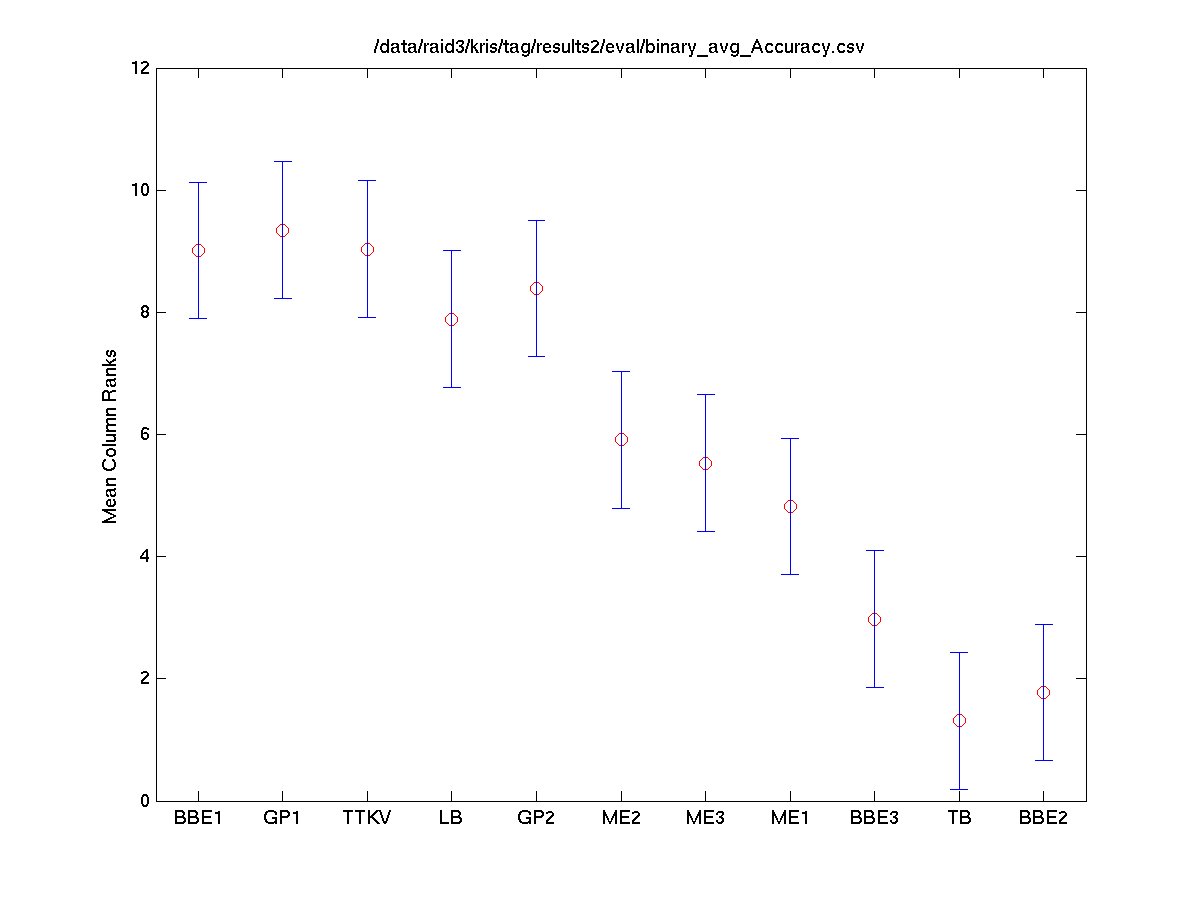

Tag Classification Accuracy Friedman test

The following table and plot show the results of Friedman's ANOVA with Tukey-Kramer multiple comparisons computed over the classification accuracy for each tag in the test, averaged over all folds.

| TeamID | TeamID | Lowerbound | Mean | Upperbound | Significance |

|---|---|---|---|---|---|

| BBE1 | BBE2 | -2.5705 | -0.3333 | 1.9038 | FALSE |

| BBE1 | BBE3 | -2.2594 | -0.0222 | 2.2149 | FALSE |

| BBE1 | ME1 | -1.1149 | 1.1222 | 3.3594 | FALSE |

| BBE1 | ME2 | -1.6149 | 0.6222 | 2.8594 | FALSE |

| BBE1 | ME3 | 0.8628 | 3.1000 | 5.3372 | TRUE |

| BBE1 | GP1 | 1.2406 | 3.4778 | 5.7149 | TRUE |

| BBE1 | GP2 | 1.9517 | 4.1889 | 6.4261 | TRUE |

| BBE1 | LB | 3.7962 | 6.0333 | 8.2705 | TRUE |

| BBE1 | TB | 5.4628 | 7.7000 | 9.9372 | TRUE |

| BBE1 | TTKV | 4.9962 | 7.2333 | 9.4705 | TRUE |

| BBE2 | BBE3 | -1.9261 | 0.3111 | 2.5483 | FALSE |

| BBE2 | ME1 | -0.7816 | 1.4556 | 3.6927 | FALSE |

| BBE2 | ME2 | -1.2816 | 0.9556 | 3.1927 | FALSE |

| BBE2 | ME3 | 1.1962 | 3.4333 | 5.6705 | TRUE |

| BBE2 | GP1 | 1.5739 | 3.8111 | 6.0483 | TRUE |

| BBE2 | GP2 | 2.2851 | 4.5222 | 6.7594 | TRUE |

| BBE2 | LB | 4.1295 | 6.3667 | 8.6038 | TRUE |

| BBE2 | TB | 5.7962 | 8.0333 | 10.2705 | TRUE |

| BBE2 | TTKV | 5.3295 | 7.5667 | 9.8038 | TRUE |

| BBE3 | ME1 | -1.0927 | 1.1444 | 3.3816 | FALSE |

| BBE3 | ME2 | -1.5927 | 0.6444 | 2.8816 | FALSE |

| BBE3 | ME3 | 0.8851 | 3.1222 | 5.3594 | TRUE |

| BBE3 | GP1 | 1.2628 | 3.5000 | 5.7372 | TRUE |

| BBE3 | GP2 | 1.9739 | 4.2111 | 6.4483 | TRUE |

| BBE3 | LB | 3.8184 | 6.0556 | 8.2927 | TRUE |

| BBE3 | TB | 5.4851 | 7.7222 | 9.9594 | TRUE |

| BBE3 | TTKV | 5.0184 | 7.2556 | 9.4927 | TRUE |

| ME1 | ME2 | -2.7372 | -0.5000 | 1.7372 | FALSE |

| ME1 | ME3 | -0.2594 | 1.9778 | 4.2149 | FALSE |

| ME1 | GP1 | 0.1184 | 2.3556 | 4.5927 | TRUE |

| ME1 | GP2 | 0.8295 | 3.0667 | 5.3038 | TRUE |

| ME1 | LB | 2.6739 | 4.9111 | 7.1483 | TRUE |

| ME1 | TB | 4.3406 | 6.5778 | 8.8149 | TRUE |

| ME1 | TTKV | 3.8739 | 6.1111 | 8.3483 | TRUE |

| ME2 | ME3 | 0.2406 | 2.4778 | 4.7149 | TRUE |

| ME2 | GP1 | 0.6184 | 2.8556 | 5.0927 | TRUE |

| ME2 | GP2 | 1.3295 | 3.5667 | 5.8038 | TRUE |

| ME2 | LB | 3.1739 | 5.4111 | 7.6483 | TRUE |

| ME2 | TB | 4.8406 | 7.0778 | 9.3149 | TRUE |

| ME2 | TTKV | 4.3739 | 6.6111 | 8.8483 | TRUE |

| ME3 | GP1 | -1.8594 | 0.3778 | 2.6149 | FALSE |

| ME3 | GP2 | -1.1483 | 1.0889 | 3.3261 | FALSE |

| ME3 | LB | 0.6962 | 2.9333 | 5.1705 | TRUE |

| ME3 | TB | 2.3628 | 4.6000 | 6.8372 | TRUE |

| ME3 | TTKV | 1.8962 | 4.1333 | 6.3705 | TRUE |

| GP1 | GP2 | -1.5261 | 0.7111 | 2.9483 | FALSE |

| GP1 | LB | 0.3184 | 2.5556 | 4.7927 | TRUE |

| GP1 | TB | 1.9851 | 4.2222 | 6.4594 | TRUE |

| GP1 | TTKV | 1.5184 | 3.7556 | 5.9927 | TRUE |

| GP2 | LB | -0.3927 | 1.8444 | 4.0816 | FALSE |

| GP2 | TB | 1.2739 | 3.5111 | 5.7483 | TRUE |

| GP2 | TTKV | 0.8073 | 3.0444 | 5.2816 | TRUE |

| LB | TB | -0.5705 | 1.6667 | 3.9038 | FALSE |

| LB | TTKV | -1.0372 | 1.2000 | 3.4372 | FALSE |

| TB | TTKV | -2.7038 | -0.4667 | 1.7705 | FALSE |

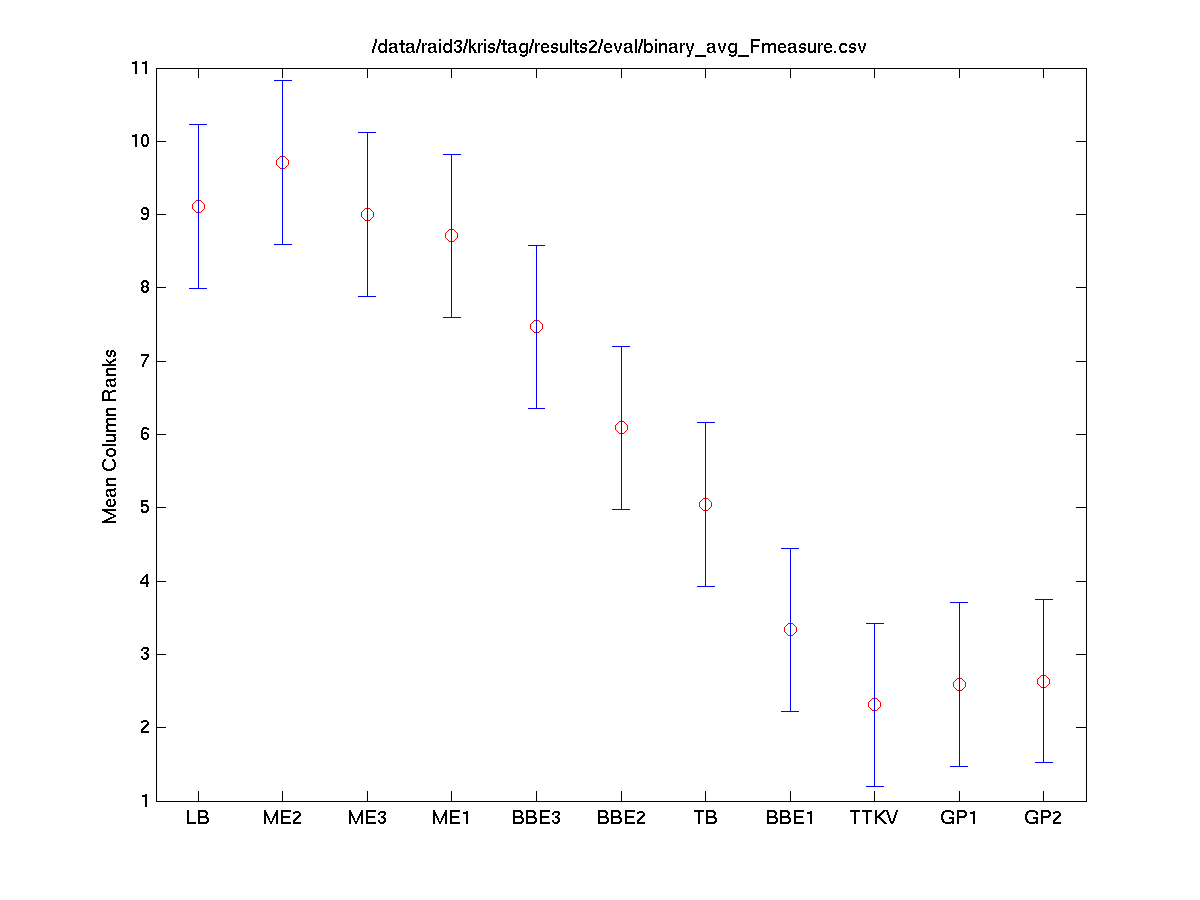

Tag F-measure Friedman test

The following table and plot show the results of Friedman's ANOVA with Tukey-Kramer multiple comparisons computed over the F-measure for each tag in the test, averaged over all folds.

| TeamID | TeamID | Lowerbound | Mean | Upperbound | Significance |

|---|---|---|---|---|---|

| BBE1 | BBE2 | -2.8274 | -0.6000 | 1.6274 | FALSE |

| BBE1 | BBE3 | -2.1163 | 0.1111 | 2.3385 | FALSE |

| BBE1 | ME1 | -1.8274 | 0.4000 | 2.6274 | FALSE |

| BBE1 | ME2 | -0.5830 | 1.6444 | 3.8719 | FALSE |

| BBE1 | ME3 | 0.7948 | 3.0222 | 5.2496 | TRUE |

| BBE1 | GP1 | 1.8392 | 4.0667 | 6.2941 | TRUE |

| BBE1 | GP2 | 3.5504 | 5.7778 | 8.0052 | TRUE |

| BBE1 | LB | 4.5726 | 6.8000 | 9.0274 | TRUE |

| BBE1 | TB | 4.2948 | 6.5222 | 8.7496 | TRUE |

| BBE1 | TTKV | 4.2504 | 6.4778 | 8.7052 | TRUE |

| BBE2 | BBE3 | -1.5163 | 0.7111 | 2.9385 | FALSE |

| BBE2 | ME1 | -1.2274 | 1.0000 | 3.2274 | FALSE |

| BBE2 | ME2 | 0.0170 | 2.2444 | 4.4719 | TRUE |

| BBE2 | ME3 | 1.3948 | 3.6222 | 5.8496 | TRUE |

| BBE2 | GP1 | 2.4392 | 4.6667 | 6.8941 | TRUE |

| BBE2 | GP2 | 4.1504 | 6.3778 | 8.6052 | TRUE |

| BBE2 | LB | 5.1726 | 7.4000 | 9.6274 | TRUE |

| BBE2 | TB | 4.8948 | 7.1222 | 9.3496 | TRUE |

| BBE2 | TTKV | 4.8504 | 7.0778 | 9.3052 | TRUE |

| BBE3 | ME1 | -1.9385 | 0.2889 | 2.5163 | FALSE |

| BBE3 | ME2 | -0.6941 | 1.5333 | 3.7608 | FALSE |

| BBE3 | ME3 | 0.6837 | 2.9111 | 5.1385 | TRUE |

| BBE3 | GP1 | 1.7281 | 3.9556 | 6.1830 | TRUE |

| BBE3 | GP2 | 3.4392 | 5.6667 | 7.8941 | TRUE |

| BBE3 | LB | 4.4615 | 6.6889 | 8.9163 | TRUE |

| BBE3 | TB | 4.1837 | 6.4111 | 8.6385 | TRUE |

| BBE3 | TTKV | 4.1392 | 6.3667 | 8.5941 | TRUE |

| ME1 | ME2 | -0.9830 | 1.2444 | 3.4719 | FALSE |

| ME1 | ME3 | 0.3948 | 2.6222 | 4.8496 | TRUE |

| ME1 | GP1 | 1.4392 | 3.6667 | 5.8941 | TRUE |

| ME1 | GP2 | 3.1504 | 5.3778 | 7.6052 | TRUE |

| ME1 | LB | 4.1726 | 6.4000 | 8.6274 | TRUE |

| ME1 | TB | 3.8948 | 6.1222 | 8.3496 | TRUE |

| ME1 | TTKV | 3.8504 | 6.0778 | 8.3052 | TRUE |

| ME2 | ME3 | -0.8496 | 1.3778 | 3.6052 | FALSE |

| ME2 | GP1 | 0.1948 | 2.4222 | 4.6496 | TRUE |

| ME2 | GP2 | 1.9059 | 4.1333 | 6.3608 | TRUE |

| ME2 | LB | 2.9281 | 5.1556 | 7.3830 | TRUE |

| ME2 | TB | 2.6504 | 4.8778 | 7.1052 | TRUE |

| ME2 | TTKV | 2.6059 | 4.8333 | 7.0608 | TRUE |

| ME3 | GP1 | -1.1830 | 1.0444 | 3.2719 | FALSE |

| ME3 | GP2 | 0.5281 | 2.7556 | 4.9830 | TRUE |

| ME3 | LB | 1.5504 | 3.7778 | 6.0052 | TRUE |

| ME3 | TB | 1.2726 | 3.5000 | 5.7274 | TRUE |

| ME3 | TTKV | 1.2281 | 3.4556 | 5.6830 | TRUE |

| GP1 | GP2 | -0.5163 | 1.7111 | 3.9385 | FALSE |

| GP1 | LB | 0.5059 | 2.7333 | 4.9608 | TRUE |

| GP1 | TB | 0.2281 | 2.4556 | 4.6830 | TRUE |

| GP1 | TTKV | 0.1837 | 2.4111 | 4.6385 | TRUE |

| GP2 | LB | -1.2052 | 1.0222 | 3.2496 | FALSE |

| GP2 | TB | -1.4830 | 0.7444 | 2.9719 | FALSE |

| GP2 | TTKV | -1.5274 | 0.7000 | 2.9274 | FALSE |

| LB | TB | -2.5052 | -0.2778 | 1.9496 | FALSE |

| LB | TTKV | -2.5496 | -0.3222 | 1.9052 | FALSE |

| TB | TTKV | -2.2719 | -0.0444 | 2.1830 | FALSE |
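The Significance column in these tables can be read directly off the bounds: a pairwise comparison is flagged TRUE when its interval excludes zero. The sketch below checks that reading against a few rows copied from the F-measure table above. The helper names `is_significant` and `midpoint` are illustrative and not part of the original analysis scripts.

```python
# Rows copied from the Tag F-measure table above:
# (team A, team B, lower bound, mean, upper bound, reported significance)
rows = [
    ("BBE1", "BBE2", -2.8274, -0.6000, 1.6274, False),
    ("BBE1", "ME3", 0.7948, 3.0222, 5.2496, True),
    ("BBE2", "ME2", 0.0170, 2.2444, 4.4719, True),
    ("GP2", "LB", -1.2052, 1.0222, 3.2496, False),
    ("TB", "TTKV", -2.2719, -0.0444, 2.1830, False),
]


def is_significant(lower, upper):
    """A difference is significant when its interval does not contain zero."""
    return lower > 0 or upper < 0


def midpoint(lower, upper):
    """Centre of the interval, to compare against the Mean column."""
    return (lower + upper) / 2


# For each row: does the rule reproduce the reported flag, and is the
# reported mean the centre of the interval (up to rounding)?
flag_matches = [is_significant(lo, hi) == reported
                for _, _, lo, _, hi, reported in rows]
mean_matches = [abs(midpoint(lo, hi) - mean) < 1e-3
                for _, _, lo, mean, hi, _ in rows]

print(all(flag_matches), all(mean_matches))
```

In the rows checked, the Mean column also sits at the centre of each interval, so the two bounds are symmetric around it.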

Beta-Binomial test results

Accuracy on positive examples Beta-Binomial results

The following table and plot show the results of simulations from the Beta-Binomial model using the accuracy of each algorithm's classification only on the positive examples. Because this measure reflects only the relative proportion of true positives and false negatives, it should be read alongside the classification accuracy on the negative examples. The image shows the estimate of the overall performance with 95% confidence intervals.

| Tag | BBE1 | BBE2 | BBE3 | ME1 | ME2 | ME3 | GP1 | GP2 | LB | TB | TTKV |

|---|---|---|---|---|---|---|---|---|---|---|---|

| 80s | 0.006 | 1.000 | 0.930 | 0.597 | 0.587 | 0.629 | 0.000 | 0.171 | 0.225 | 1.000 | 0.000 |

| acoustic | 0.000 | 1.000 | 0.737 | 0.650 | 0.617 | 0.639 | 0.000 | 0.000 | 0.185 | 0.346 | 0.000 |

| ambient | 0.000 | 0.988 | 0.420 | 0.552 | 0.620 | 0.639 | 0.000 | 0.083 | 0.273 | 1.000 | 0.000 |

| bass | 0.000 | 1.000 | 0.954 | 0.499 | 0.539 | 0.546 | 0.000 | 0.072 | 0.228 | 1.000 | 0.000 |

| beat | 0.000 | 1.000 | 0.800 | 0.747 | 0.787 | 0.738 | 0.000 | 0.035 | 0.250 | 1.000 | 0.000 |

| british | 0.008 | 1.000 | 0.842 | 0.613 | 0.628 | 0.658 | 0.000 | 0.000 | 0.107 | 1.000 | 0.000 |

| country | 0.177 | 1.000 | 0.979 | 0.601 | 0.619 | 0.606 | 0.052 | 0.000 | 0.148 | 1.000 | 0.000 |

| dance | 0.040 | 1.000 | 0.990 | 0.729 | 0.747 | 0.725 | 0.041 | 0.000 | 0.540 | 1.000 | 0.042 |

| distortion | 0.000 | 1.000 | 0.822 | 0.675 | 0.626 | 0.640 | 0.000 | 0.000 | 0.133 | 1.000 | 0.000 |

| drum | 0.008 | 1.000 | 1.000 | 0.609 | 0.588 | 0.573 | 0.358 | 0.000 | 0.495 | 1.000 | 0.281 |

| drum machine | 0.000 | 1.000 | 0.893 | 0.662 | 0.693 | 0.692 | 0.000 | 0.000 | 0.217 | 1.000 | 0.000 |

| electronic | 0.196 | 0.998 | 0.968 | 0.687 | 0.702 | 0.690 | 0.190 | 0.000 | 0.519 | 1.000 | 0.157 |

| electronica | 0.059 | 1.000 | 0.943 | 0.682 | 0.724 | 0.682 | 0.000 | 0.036 | 0.283 | 1.000 | 0.000 |

| fast | 0.000 | 1.000 | 0.889 | 0.697 | 0.695 | 0.740 | 0.000 | 0.019 | 0.223 | 1.000 | 0.000 |

| female | 0.121 | 1.000 | 0.949 | 0.755 | 0.716 | 0.727 | 0.006 | 0.023 | 0.437 | 1.000 | 0.004 |

| funk | 0.000 | 1.000 | 0.748 | 0.726 | 0.754 | 0.697 | 0.000 | 0.021 | 0.029 | 0.653 | 0.000 |

| guitar | 0.121 | 1.000 | 0.987 | 0.722 | 0.715 | 0.723 | 0.158 | 0.000 | 0.643 | 1.000 | 0.368 |

| hip hop | 0.123 | 1.000 | 0.896 | 0.831 | 0.856 | 0.817 | 0.241 | 0.000 | 0.582 | 1.000 | 0.044 |

| horns | 0.000 | 1.000 | 0.805 | 0.539 | 0.539 | 0.481 | 0.000 | 0.057 | 0.020 | 0.659 | 0.000 |

| house | 0.000 | 1.000 | 0.876 | 0.819 | 0.775 | 0.742 | 0.000 | 0.064 | 0.199 | 1.000 | 0.000 |

| instrumental | 0.000 | 1.000 | 0.727 | 0.587 | 0.621 | 0.591 | 0.312 | 0.036 | 0.076 | 1.000 | 0.000 |

| jazz | 0.052 | 1.000 | 0.915 | 0.702 | 0.742 | 0.723 | 0.043 | 0.000 | 0.407 | 1.000 | 0.017 |

| keyboard | 0.000 | 1.000 | 0.907 | 0.450 | 0.547 | 0.462 | 0.000 | 0.000 | 0.000 | 1.000 | 0.000 |

| loud | 0.000 | 1.000 | 0.802 | 0.696 | 0.666 | 0.696 | 0.000 | 0.000 | 0.165 | 0.688 | 0.000 |

| male | 0.125 | 1.000 | 0.997 | 0.714 | 0.717 | 0.722 | 0.011 | 0.000 | 0.497 | 1.000 | 0.197 |

| metal | 0.000 | 1.000 | 0.741 | 0.774 | 0.657 | 0.673 | 0.000 | 0.104 | 0.179 | 0.341 | 0.000 |

| noise | 0.240 | 0.968 | 0.698 | 0.581 | 0.501 | 0.544 | 0.000 | 0.000 | 0.161 | 0.659 | 0.000 |

| organ | 0.000 | 1.000 | 0.675 | 0.410 | 0.321 | 0.333 | 0.000 | 0.000 | 0.000 | 0.659 | 0.000 |

| piano | 0.056 | 1.000 | 0.673 | 0.671 | 0.721 | 0.747 | 0.000 | 0.000 | 0.338 | 1.000 | 0.000 |

| pop | 0.098 | 1.000 | 0.985 | 0.667 | 0.690 | 0.713 | 0.023 | 0.000 | 0.450 | 1.000 | 0.000 |

| punk | 0.000 | 1.000 | 0.709 | 0.752 | 0.707 | 0.632 | 0.000 | 0.000 | 0.305 | 1.000 | 0.000 |

| quiet | 0.165 | 0.865 | 0.544 | 0.624 | 0.751 | 0.703 | 0.000 | 0.128 | 0.254 | 1.000 | 0.000 |

| r&b | 0.025 | 1.000 | 0.560 | 0.600 | 0.647 | 0.696 | 0.025 | 0.000 | 0.179 | 0.341 | 0.000 |

| rap | 0.461 | 1.000 | 0.677 | 0.861 | 0.876 | 0.852 | 0.000 | 0.000 | 0.647 | 1.000 | 0.052 |

| rock | 0.241 | 1.000 | 0.981 | 0.745 | 0.747 | 0.733 | 0.049 | 0.011 | 0.684 | 1.000 | 0.244 |

| saxophone | 0.000 | 1.000 | 0.846 | 0.795 | 0.821 | 0.724 | 0.000 | 0.000 | 0.339 | 1.000 | 0.000 |

| slow | 0.000 | 1.000 | 0.969 | 0.637 | 0.689 | 0.675 | 0.080 | 0.000 | 0.329 | 1.000 | 0.000 |

| soft | 0.000 | 1.000 | 0.916 | 0.741 | 0.805 | 0.690 | 0.094 | 0.000 | 0.194 | 1.000 | 0.000 |

| solo | 0.000 | 1.000 | 0.979 | 0.612 | 0.594 | 0.594 | 0.000 | 0.000 | 0.097 | 1.000 | 0.000 |

| strings | 0.077 | 1.000 | 0.846 | 0.711 | 0.735 | 0.777 | 0.000 | 0.000 | 0.219 | 1.000 | 0.000 |

| synth | 0.016 | 1.000 | 0.974 | 0.677 | 0.636 | 0.623 | 0.000 | 0.002 | 0.395 | 1.000 | 0.020 |

| techno | 0.000 | 1.000 | 0.920 | 0.764 | 0.800 | 0.747 | 0.000 | 0.000 | 0.498 | 1.000 | 0.016 |

| trumpet | 0.000 | 1.000 | 0.809 | 0.623 | 0.686 | 0.580 | 0.000 | 0.000 | 0.141 | 0.653 | 0.000 |

| vocal | 0.000 | 1.000 | 0.943 | 0.613 | 0.631 | 0.602 | 0.000 | 0.000 | 0.219 | 1.000 | 0.000 |

| voice | 0.000 | 1.000 | 0.966 | 0.531 | 0.592 | 0.516 | 0.000 | 0.366 | 0.087 | 1.000 | 0.000 |

| overall | 0.000 | 1.000 | 0.890 | 0.669 | 0.681 | 0.664 | 0.000 | 0.000 | 0.226 | 1.000 | 0.000 |

File:Binary per fold positive example Accuracy.png

The plots for each tag are more interesting and the 95% confidence intervals are much tighter. Since there are so many of them, it is difficult to post them to the wiki. You can download a tar.gz file containing all of them here.
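As a rough illustration of how a simulated 95% interval for an accuracy can be obtained, the sketch below draws samples from a Beta posterior for a single success count. It is a deliberate simplification, not the Beta-Binomial model used to produce the results on this page, whose details are not given here. The uniform Beta(1, 1) prior, the function name `beta_interval`, and the counts (67 correct out of 100) are all assumptions made for the example.

```python
import random


def beta_interval(successes, trials, draws=20000, seed=0):
    """Simulate a 95% interval for an accuracy.

    Assumes a uniform Beta(1, 1) prior, so the posterior after observing
    `successes` out of `trials` is Beta(1 + successes, 1 + failures).
    """
    rng = random.Random(seed)  # fixed seed keeps the sketch reproducible
    a = 1 + successes
    b = 1 + (trials - successes)
    samples = sorted(rng.betavariate(a, b) for _ in range(draws))
    lower = samples[int(0.025 * draws)]      # 2.5th percentile
    upper = samples[int(0.975 * draws) - 1]  # 97.5th percentile
    mean = sum(samples) / draws
    return lower, mean, upper


# Hypothetical counts: 67 of 100 positive examples classified correctly.
lower, mean, upper = beta_interval(67, 100)
print(f"{lower:.3f} {mean:.3f} {upper:.3f}")
```

The same call with negative-example counts would give the corresponding interval for accuracy on negative examples.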

Accuracy on negative examples Beta-Binomial results

The following table and plot show the results of simulations from the Beta-Binomial model using the accuracy of each algorithm's classification only on the negative examples. Because this measure reflects only the relative proportion of true negatives and false positives, it should be read alongside the classification accuracy on the positive examples. The image shows the estimate of the overall performance with 95% confidence intervals.

| Tag | BBE1 | BBE2 | BBE3 | ME1 | ME2 | ME3 | GP1 | GP2 | LB | TB | TTKV |

|---|---|---|---|---|---|---|---|---|---|---|---|

| 80s | 1.000 | 0.003 | 0.129 | 0.682 | 0.684 | 0.697 | 1.000 | 0.921 | 0.957 | 0.000 | 1.000 |

| acoustic | 0.998 | 0.003 | 0.457 | 0.681 | 0.684 | 0.664 | 1.000 | 0.958 | 0.986 | 0.653 | 1.000 |

| ambient | 1.000 | 0.003 | 0.873 | 0.746 | 0.806 | 0.748 | 0.999 | 0.968 | 0.980 | 0.000 | 1.000 |

| bass | 1.000 | 0.003 | 0.055 | 0.572 | 0.579 | 0.602 | 1.000 | 0.942 | 0.821 | 0.000 | 1.000 |

| beat | 1.000 | 0.003 | 0.549 | 0.701 | 0.742 | 0.748 | 1.000 | 0.955 | 0.951 | 0.000 | 1.000 |

| british | 0.998 | 0.003 | 0.214 | 0.694 | 0.700 | 0.676 | 1.000 | 1.000 | 0.965 | 0.000 | 1.000 |

| country | 0.951 | 0.003 | 0.197 | 0.732 | 0.733 | 0.758 | 0.981 | 1.000 | 0.969 | 0.000 | 1.000 |

| dance | 0.996 | 0.003 | 0.192 | 0.748 | 0.802 | 0.794 | 0.991 | 1.000 | 0.923 | 0.000 | 0.944 |

| distortion | 1.000 | 0.003 | 0.525 | 0.775 | 0.784 | 0.777 | 1.000 | 1.000 | 0.976 | 0.000 | 1.000 |

| drum | 0.996 | 0.003 | 0.023 | 0.564 | 0.572 | 0.595 | 0.648 | 1.000 | 0.640 | 0.000 | 0.705 |

| drum machine | 1.000 | 0.003 | 0.428 | 0.653 | 0.690 | 0.684 | 1.000 | 1.000 | 0.968 | 0.000 | 1.000 |

| electronic | 0.966 | 0.003 | 0.279 | 0.714 | 0.768 | 0.772 | 0.954 | 1.000 | 0.867 | 0.000 | 0.833 |

| electronica | 0.991 | 0.003 | 0.390 | 0.684 | 0.725 | 0.726 | 1.000 | 0.960 | 0.941 | 0.000 | 1.000 |

| fast | 1.000 | 0.003 | 0.412 | 0.680 | 0.693 | 0.696 | 0.999 | 0.967 | 0.960 | 0.000 | 1.000 |

| female | 0.930 | 0.003 | 0.177 | 0.807 | 0.817 | 0.804 | 0.999 | 0.985 | 0.896 | 0.000 | 0.999 |

| funk | 1.000 | 0.003 | 0.426 | 0.708 | 0.712 | 0.696 | 1.000 | 0.982 | 0.984 | 0.347 | 1.000 |

| guitar | 0.939 | 0.003 | 0.222 | 0.711 | 0.730 | 0.730 | 0.950 | 1.000 | 0.777 | 0.000 | 0.617 |

| hip hop | 0.994 | 0.003 | 0.675 | 0.831 | 0.866 | 0.869 | 0.988 | 1.000 | 0.968 | 0.000 | 0.955 |

| horns | 1.000 | 0.003 | 0.303 | 0.660 | 0.674 | 0.644 | 1.000 | 0.935 | 0.982 | 0.342 | 1.000 |

| house | 1.000 | 0.003 | 0.413 | 0.756 | 0.797 | 0.756 | 1.000 | 0.955 | 0.975 | 0.000 | 1.000 |

| instrumental | 1.000 | 0.003 | 0.374 | 0.631 | 0.648 | 0.638 | 0.688 | 0.951 | 0.955 | 0.000 | 1.000 |

| jazz | 0.991 | 0.003 | 0.465 | 0.846 | 0.865 | 0.867 | 1.000 | 1.000 | 0.960 | 0.000 | 0.981 |

| keyboard | 1.000 | 0.003 | 0.163 | 0.590 | 0.557 | 0.530 | 1.000 | 1.000 | 0.981 | 0.000 | 1.000 |

| loud | 1.000 | 0.003 | 0.552 | 0.813 | 0.825 | 0.814 | 1.000 | 1.000 | 0.986 | 0.312 | 1.000 |

| male | 0.913 | 0.003 | 0.048 | 0.721 | 0.740 | 0.744 | 0.989 | 1.000 | 0.746 | 0.000 | 0.796 |

| metal | 1.000 | 0.003 | 0.647 | 0.788 | 0.791 | 0.785 | 1.000 | 0.969 | 0.987 | 0.659 | 1.000 |

| noise | 0.949 | 0.003 | 0.697 | 0.760 | 0.778 | 0.784 | 0.998 | 0.989 | 0.984 | 0.342 | 1.000 |

| organ | 1.000 | 0.003 | 0.393 | 0.555 | 0.547 | 0.527 | 1.000 | 0.991 | 0.984 | 0.341 | 1.000 |

| piano | 0.960 | 0.003 | 0.421 | 0.655 | 0.679 | 0.692 | 1.000 | 1.000 | 0.940 | 0.000 | 1.000 |

| pop | 0.931 | 0.003 | 0.102 | 0.680 | 0.683 | 0.682 | 0.988 | 1.000 | 0.849 | 0.000 | 1.000 |

| punk | 1.000 | 0.003 | 0.602 | 0.802 | 0.816 | 0.807 | 1.000 | 1.000 | 0.983 | 0.000 | 1.000 |

| quiet | 0.994 | 0.003 | 0.842 | 0.839 | 0.853 | 0.844 | 1.000 | 0.967 | 0.985 | 0.000 | 0.999 |

| r&b | 0.994 | 0.003 | 0.652 | 0.751 | 0.756 | 0.734 | 0.999 | 1.000 | 0.986 | 0.658 | 1.000 |

| rap | 0.967 | 0.003 | 0.639 | 0.853 | 0.885 | 0.887 | 1.000 | 1.000 | 0.972 | 0.000 | 0.950 |

| rock | 0.922 | 0.003 | 0.286 | 0.788 | 0.806 | 0.800 | 0.986 | 0.991 | 0.866 | 0.000 | 0.721 |

| saxophone | 1.000 | 0.003 | 0.455 | 0.852 | 0.852 | 0.841 | 1.000 | 0.985 | 0.978 | 0.000 | 0.999 |

| slow | 1.000 | 0.003 | 0.265 | 0.652 | 0.687 | 0.687 | 0.981 | 1.000 | 0.948 | 0.000 | 1.000 |

| soft | 1.000 | 0.003 | 0.302 | 0.677 | 0.690 | 0.684 | 0.990 | 1.000 | 0.977 | 0.000 | 1.000 |

| solo | 1.000 | 0.003 | 0.165 | 0.740 | 0.725 | 0.737 | 1.000 | 1.000 | 0.978 | 0.000 | 1.000 |

| strings | 0.969 | 0.003 | 0.466 | 0.759 | 0.754 | 0.771 | 0.998 | 1.000 | 0.980 | 0.000 | 1.000 |

| synth | 0.997 | 0.003 | 0.135 | 0.655 | 0.677 | 0.679 | 1.000 | 0.996 | 0.834 | 0.000 | 0.981 |

| techno | 1.000 | 0.003 | 0.526 | 0.737 | 0.799 | 0.798 | 1.000 | 1.000 | 0.937 | 0.000 | 0.973 |

| trumpet | 1.000 | 0.003 | 0.386 | 0.775 | 0.760 | 0.735 | 1.000 | 0.996 | 0.984 | 0.347 | 1.000 |

| vocal | 1.000 | 0.003 | 0.081 | 0.584 | 0.608 | 0.603 | 1.000 | 0.995 | 0.892 | 0.000 | 1.000 |

| voice | 1.000 | 0.003 | 0.054 | 0.527 | 0.540 | 0.541 | 1.000 | 0.654 | 0.935 | 0.000 | 1.000 |

| overall | 0.999 | 0.003 | 0.346 | 0.719 | 0.737 | 0.731 | 1.000 | 1.000 | 0.950 | 0.000 | 1.000 |

File:Binary per fold negative example Accuracy.png

The plots for each tag are more interesting and the 95% confidence intervals are much tighter. Since there are so many of them, it is difficult to post them to the wiki. You can download a tar.gz file containing all of them here.
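Since the positive and negative example accuracies are only meaningful together, one simple way to combine them is to average the two, which is often called balanced accuracy. This is not one of the measures reported on this page. The sketch below is only an illustration, using the "overall" rows of the two Beta-Binomial tables above.

```python
# "overall" rows copied from the positive and negative example tables above.
teams = ["BBE1", "BBE2", "BBE3", "ME1", "ME2", "ME3",
         "GP1", "GP2", "LB", "TB", "TTKV"]
positive = [0.000, 1.000, 0.890, 0.669, 0.681, 0.664,
            0.000, 0.000, 0.226, 1.000, 0.000]
negative = [0.999, 0.003, 0.346, 0.719, 0.737, 0.731,
            1.000, 1.000, 0.950, 0.000, 1.000]

# Balanced accuracy: the plain average of the two per-class accuracies.
# (Illustrative only; not a measure used in the original evaluation.)
balanced = {team: (pos + neg) / 2
            for team, pos, neg in zip(teams, positive, negative)}

# Print teams from highest to lowest balanced accuracy.
for team, score in sorted(balanced.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{team:5s} {score:.3f}")

best = max(balanced, key=balanced.get)
```

Systems that label almost everything positive (BBE2, TB) or almost everything negative (GP1, GP2, TTKV) end up near 0.5 under this reading, even though each scores close to 1.0 in one of the two tables.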

Assorted Results Files for Download

AUC-ROC Clip Data

(Too large for easy Wiki viewing)

tag.affinity_clip_AUC_ROC.csv

CSV Files Without Rounding (Averaged across folds)

tag.affinity.clip.auc.roc.csv

tag.affinity.clip.auc.roc.csv

tag.binary.accuracy.csv

tag.binary.fmeasure.csv

tag.binary.negative.example.accuracy.csv

tag.binary.positive.example.accuracy.csv

CSV Files Without Rounding (Fold information)

tag.binary.per.fold.positive.example.accuracy.csv

tag.binary.per.fold.negative.example.accuracy.csv

tag.binary.per.fold.fmeasure.csv

tag.binary.per.fold.accuracy.csv

tag.affinity.tag.per.fold.auc.roc.csv

Results By Algorithm

(.tar.gz)

LB = L. Barrington, D. Turnbull, G. Lanckriet

BBE 1 = T. Bertin-Mahieux, Y. Bengio, D. Eck (KNN)

BBE 2 = T. Bertin-Mahieux, Y. Bengio, D. Eck (NNet)

BBE 3 = T. Bertin-Mahieux, D. Eck, P. Lamere, Y. Bengio (Thierry/Lamere Boosting)

TB = T. Bertin-Mahieux (dumb/smurf)

ME1 = M. I. Mandel, D. P. W. Ellis 1

ME2 = M. I. Mandel, D. P. W. Ellis 2

ME3 = M. I. Mandel, D. P. W. Ellis 3

GP1 = G. Peeters 1

GP2 = G. Peeters 2

TTKV = K. Trohidis, G. Tsoumakas, G. Kalliris, I. Vlahavas
