2007:Multiple Fundamental Frequency Estimation & Tracking Results


Introduction

These are the results for the 2007 running of the Multiple Fundamental Frequency Estimation and Tracking task. For background information about this task set, please refer to the 2007:Multiple Fundamental Frequency Estimation & Tracking page.

General Legend

Team ID

CC1 = Chuan Cao, Ming Li, Jian Liu, Yonghong Yan 1

CC2 = Chuan Cao, Ming Li, Jian Liu, Yonghong Yan 2

AC1 = Arshia Cont 1

AC2 = Arshia Cont 2

AC3 = Arshia Cont 3

AC4 = Arshia Cont 4

KE1 = Koji Egashira, Hirokazu Kameoka, Shigeki Sagayama 1

KE2 = Koji Egashira, Hirokazu Kameoka, Shigeki Sagayama 2

KE3 = Koji Egashira, Hirokazu Kameoka, Shigeki Sagayama 3

KE4 = Koji Egashira, Hirokazu Kameoka, Shigeki Sagayama 4

VE1 = Valentin Emiya, Roland Badeau, Bertrand David 1

VE2 = Valentin Emiya, Roland Badeau, Bertrand David 2

PL = Pierre Leveau

PI1 = Antonio Pertusa, José Manuel Iñesta 1

PI2 = Antonio Pertusa, José Manuel Iñesta 2

PI3 = Antonio Pertusa, José Manuel Iñesta 3

PE1 = Graham Poliner, Daniel P. W. Ellis 1

PE2 = Graham Poliner, Daniel P. W. Ellis 2

SR = Stanisław A. Raczyński, Nobutaka Ono, Shigeki Sagayama

RK = Matt Ryynänen, Anssi Klapuri

EV1 = Emmanuel Vincent, Nancy Bertin, Roland Badeau 1

EV2 = Emmanuel Vincent, Nancy Bertin, Roland Badeau 2

EV3 = Emmanuel Vincent, Nancy Bertin, Roland Badeau 3

EV4 = Emmanuel Vincent, Nancy Bertin, Roland Badeau 4

CY = Chunghsin Yeh

ZR = Ruohua Zhou, Joshua D. Reiss

Overall Summary Results Task 1

Below are the average scores across 28 test files. The files came from 7 groups, each containing 4 files with polyphony ranging from 2 to 5. Of the 28 files, 20 are real recordings and 8 are synthesized from RWC samples.

| | AC1 | AC2 | CC1 | CC2 | CY | EV1 | EV2 | KE1 | KE2 | PE1 | PI1 | PL | RK | SR | VE | ZR |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Precision | 0.298 | 0.373 | 0.567 | 0.359 | 0.765 | 0.659 | 0.687 | 0.335 | 0.348 | 0.734 | 0.827 | 0.689 | 0.690 | 0.614 | 0.530 | 0.710 |
| Recall | 0.530 | 0.431 | 0.671 | 0.767 | 0.655 | 0.513 | 0.625 | 0.618 | 0.546 | 0.505 | 0.608 | 0.417 | 0.709 | 0.595 | 0.157 | 0.661 |
| Acc | 0.277 | 0.311 | 0.510 | 0.359 | 0.589 | 0.466 | 0.543 | 0.327 | 0.336 | 0.444 | 0.580 | 0.394 | 0.605 | 0.484 | 0.145 | 0.582 |
| Etot | 1.444 | 0.990 | 0.685 | 1.678 | 0.460 | 0.594 | 0.538 | 1.427 | 1.188 | 0.639 | 0.445 | 0.639 | 0.474 | 0.670 | 0.957 | 0.498 |
| Esubs | 0.332 | 0.348 | 0.200 | 0.232 | 0.108 | 0.171 | 0.135 | 0.339 | 0.401 | 0.120 | 0.094 | 0.151 | 0.158 | 0.185 | 0.070 | 0.141 |
| Emiss | 0.138 | 0.221 | 0.128 | 0.001 | 0.238 | 0.317 | 0.240 | 0.046 | 0.052 | 0.375 | 0.298 | 0.432 | 0.133 | 0.219 | 0.767 | 0.197 |
| Efa | 0.974 | 0.421 | 0.356 | 1.445 | 0.115 | 0.107 | 0.163 | 1.042 | 0.734 | 0.144 | 0.053 | 0.055 | 0.183 | 0.265 | 0.120 | 0.160 |

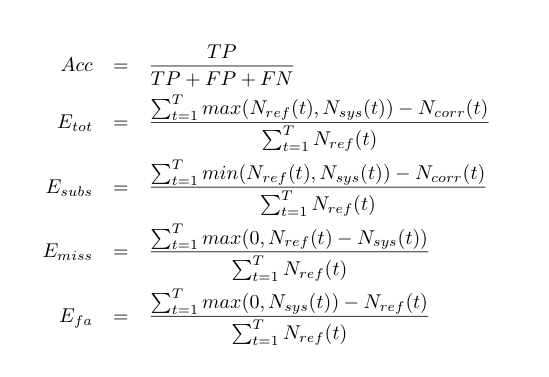

Where

- Nref is the number of non-zero elements in the ground truth data.

- Nsys is the number of active elements returned by the system.

- Ncorr is the number of correctly identified elements.
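The formulas themselves are only available as an image. The sketch below assumes they are the standard frame-level multi-pitch metrics (as introduced by Poliner and Ellis), where Nref, Nsys and Ncorr are counted per frame and summed over all frames of a file; the per-file scores would then be averaged across the test files. The names `FrameCounts` and `frame_metrics` are hypothetical. Under these definitions the total error decomposes exactly as Etot = Esubs + Emiss + Efa, which agrees with the table above (for example, AC1: 0.332 + 0.138 + 0.974 = 1.444).

```python
from dataclasses import dataclass
from typing import Dict, Sequence


@dataclass
class FrameCounts:
    """Hypothetical per-frame counts for one analysis frame t."""
    n_ref: int   # Nref(t): active pitches in the ground truth
    n_sys: int   # Nsys(t): active pitches returned by the system
    n_corr: int  # Ncorr(t): correctly identified pitches


def frame_metrics(frames: Sequence[FrameCounts]) -> Dict[str, float]:
    """Frame-level scores for one file, assuming the standard definitions.

    Each count is summed over all frames before dividing. Error terms are
    normalized by the total number of reference pitches.
    """
    ref = sum(f.n_ref for f in frames)
    sys_ = sum(f.n_sys for f in frames)
    corr = sum(f.n_corr for f in frames)

    # Substitutions: pitches present in both, but wrong.
    subs = sum(min(f.n_ref, f.n_sys) - f.n_corr for f in frames)
    # Misses: frames where the system returned too few pitches.
    miss = sum(max(0, f.n_ref - f.n_sys) for f in frames)
    # False alarms: frames where the system returned too many pitches.
    fa = sum(max(0, f.n_sys - f.n_ref) for f in frames)
    # Total error; equals subs + miss + fa frame by frame.
    tot = sum(max(f.n_ref, f.n_sys) - f.n_corr for f in frames)

    return {
        "Precision": corr / sys_,
        "Recall": corr / ref,
        "Acc": corr / (sys_ + ref - corr),  # Ncorr / (Nsys + Nref - Ncorr)
        "Etot": tot / ref,
        "Esubs": subs / ref,
        "Emiss": miss / ref,
        "Efa": fa / ref,
    }
```

For example, three frames with counts (3, 2, 2), (2, 3, 1) and (4, 4, 4) give Precision = Recall = 7/9 and Acc = 7/11. The sketch does not guard against files with no reference or returned pitches.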

Individual Results Files for Task 1

Individual results: Scores per Query

AC1 = Arshia Cont

AC2 = Arshia Cont

CC1 = Chuan Cao, Ming Li, Jian Liu, Yonghong Yan 1

CC2 = Chuan Cao, Ming Li, Jian Liu, Yonghong Yan 2

CY = Chunghsin Yeh

EV1 = Emmanuel Vincent, Nancy Bertin, Roland Badeau

EV2 = Emmanuel Vincent, Nancy Bertin, Roland Badeau

KE1 = Koji Egashira, Hirokazu Kameoka, Shigeki Sagayama

KE2 = Koji Egashira, Hirokazu Kameoka, Shigeki Sagayama

PE1 = Graham Poliner, Daniel P. W. Ellis

PI1 = Antonio Pertusa, José Manuel Iñesta 1

PL = Pierre Leveau

RK = Matt Ryynänen, Anssi Klapuri

SR = Stanisław A. Raczyński, Nobutaka Ono, Shigeki Sagayama

VE1 = Valentin Emiya, Roland Badeau, Bertrand David

ZR = Ruohua Zhou, Joshua D. Reiss

Info about the filenames

Filenames starting with part* come from acoustic woodwind recordings; those starting with RWC are synthesized. The instrument abbreviations are:

bs = bassoon

cl = clarinet

fl = flute

hn = horn

ob = oboe

vl = violin

cel = cello

gtr = guitar

sax = saxophone

bass = electric bass guitar

Run Times

| Participant | Run time (sec) | Machine |
|---|---|---|
| CC1 | 2513 | ALE Nodes |
| CC2 | 2520 | ALE Nodes |
| KE1 | 38640 | ALE Nodes |
| KE2 | 19320 | ALE Nodes |
| VE | 364560 | ALE Nodes |
| RK | 3540 | SANDBOX |
| CY | 132300 | ALE Nodes |
| PL | 14700 | ALE Nodes |
| ZR | 271 | BLACK |
| SR | 41160 | ALE Nodes |
| AP1 | 364 | ALE Nodes |
| EV1 | 2366 | ALE Nodes |
| EV2 | 2233 | ALE Nodes |
| PE1 | 4564 | ALE Nodes |
| AC1 | 840 | MAC |
| AC2 | 840 | MAC |

Overall Summary Results Task II

This subtask is evaluated in two different ways. In the first setup, a returned note is considered correct if its onset is within ±50 ms of a reference note's onset and its F0 is within ± a quarter tone of that reference note's F0; returned offset values are ignored. In the second setup, in addition to the above requirements, a correct returned note must have an offset within 20% of the reference note's duration around the reference note's offset, or within 50 ms, whichever is larger.
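The two matching criteria can be sketched as follows. This is a minimal illustration, not the official evaluation code: the `Note` type and the function names are hypothetical, a quarter tone is taken as 50 cents, and the greedy one-to-one pairing of returned and reference notes is an assumption (the official matcher may pair notes differently).

```python
import math
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Note:
    onset: float   # seconds
    offset: float  # seconds
    f0: float      # Hz


def within_quarter_tone(f_est: float, f_ref: float) -> bool:
    # Pitch distance in cents; a quarter tone is 50 cents.
    return abs(1200.0 * math.log2(f_est / f_ref)) <= 50.0


def is_match(ref: Note, est: Note, check_offset: bool) -> bool:
    # Onset must lie within +-50 ms of the reference onset.
    if abs(est.onset - ref.onset) > 0.05:
        return False
    if not within_quarter_tone(est.f0, ref.f0):
        return False
    if check_offset:
        # Offset tolerance: 20% of the reference duration or 50 ms,
        # whichever is larger.
        tol = max(0.2 * (ref.offset - ref.onset), 0.05)
        if abs(est.offset - ref.offset) > tol:
            return False
    return True


def note_prf(refs: List[Note], ests: List[Note],
             check_offset: bool) -> Tuple[float, float, float]:
    """Precision, recall and F-measure for one file (greedy matching)."""
    used = [False] * len(refs)
    n_corr = 0
    for est in ests:
        for i, ref in enumerate(refs):
            if not used[i] and is_match(ref, est, check_offset):
                used[i] = True  # each reference note is matched at most once
                n_corr += 1
                break
    precision = n_corr / len(ests) if ests else 0.0
    recall = n_corr / len(refs) if refs else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    return precision, recall, 2 * precision * recall / (precision + recall)
```

A returned note with a correct onset and pitch but a late offset counts as correct in the first setup only, which is one reason the onset-offset scores are much lower than the onset-only scores.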

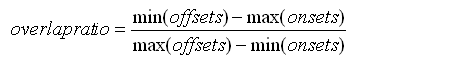

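The overlap ratio of an individual correctly identified note is calculated as

$$\mathrm{OR} = \frac{\min\{t^{\mathrm{off}}_{\mathrm{ref}},\, t^{\mathrm{off}}_{\mathrm{sys}}\} - \max\{t^{\mathrm{on}}_{\mathrm{ref}},\, t^{\mathrm{on}}_{\mathrm{sys}}\}}{\max\{t^{\mathrm{off}}_{\mathrm{ref}},\, t^{\mathrm{off}}_{\mathrm{sys}}\} - \min\{t^{\mathrm{on}}_{\mathrm{ref}},\, t^{\mathrm{on}}_{\mathrm{sys}}\}}$$

where $t^{\mathrm{on}}$ and $t^{\mathrm{off}}$ are the onset and offset times of the reference (ref) and returned (sys) notes. "Ave. Overlap" in the tables below is this ratio averaged over the correctly identified notes.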
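A minimal sketch of the overlap ratio for a matched pair of notes, assuming the usual MIREX note-tracking definition (shared duration divided by the combined time span of the two notes). The function names are hypothetical, and the sketch assumes the pair has already been judged correct, so the two notes overlap.

```python
from statistics import mean
from typing import Iterable, Tuple


def overlap_ratio(ref_onset: float, ref_offset: float,
                  est_onset: float, est_offset: float) -> float:
    # Duration shared by the reference and the returned note.
    shared = min(ref_offset, est_offset) - max(ref_onset, est_onset)
    # Total span covered by either note.
    span = max(ref_offset, est_offset) - min(ref_onset, est_onset)
    return shared / span


def average_overlap(pairs: Iterable[Tuple[float, float, float, float]]) -> float:
    """Mean overlap ratio over (ref_on, ref_off, est_on, est_off) tuples."""
    return mean(overlap_ratio(*p) for p in pairs)
```

A ratio of 1 means the returned note has exactly the reference onset and offset; a returned note covering only half of its reference note scores 0.5.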

A total of 30 files were used in this task: 16 real recordings, 8 synthesized from RWC samples, and 6 piano recordings. The results below are the averages over these 30 files.

Results Based on Onset Only

| | AC3 | AC4 | EV3 | EV4 | KE3 | KE4 | PE2 | PI2 | PI3 | RK | VE2 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Precision | 0.067 | 0.070 | 0.412 | 0.447 | 0.216 | 0.263 | 0.533 | 0.371 | 0.203 | 0.578 | 0.338 |
| Recall | 0.137 | 0.172 | 0.554 | 0.692 | 0.323 | 0.301 | 0.485 | 0.474 | 0.296 | 0.678 | 0.171 |
| Ave. F-measure | 0.087 | 0.093 | 0.453 | 0.527 | 0.246 | 0.268 | 0.485 | 0.408 | 0.219 | 0.614 | 0.202 |
| Ave. Overlap | 0.523 | 0.536 | 0.622 | 0.636 | 0.610 | 0.557 | 0.740 | 0.665 | 0.628 | 0.699 | 0.486 |

Results Based on Onset-Offset

| | AC3 | AC4 | EV3 | EV4 | KE3 | KE4 | PE2 | PI2 | PI3 | RK | VE2 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Precision | 0.013 | 0.015 | 0.142 | 0.162 | 0.071 | 0.063 | 0.305 | 0.206 | 0.094 | 0.312 | 0.098 |
| Recall | 0.028 | 0.044 | 0.226 | 0.277 | 0.130 | 0.084 | 0.278 | 0.262 | 0.130 | 0.382 | 0.052 |
| Ave. F-measure | 0.020 | 0.023 | 0.166 | 0.204 | 0.090 | 0.069 | 0.277 | 0.226 | 0.101 | 0.337 | 0.076 |
| Ave. Overlap | 0.825 | 0.831 | 0.850 | 0.859 | 0.847 | 0.864 | 0.879 | 0.844 | 0.857 | 0.884 | 0.804 |

Piano Results Based on Onset Only

| | AC3 | AC4 | EV3 | EV4 | KE3 | KE4 | PE2 | PI2 | PI3 | RK | VE2 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Precision | 0.169 | 0.159 | 0.591 | 0.541 | 0.400 | 0.441 | 0.672 | 0.320 | 0.240 | 0.720 | 0.653 |
| Recall | 0.147 | 0.174 | 0.651 | 0.723 | 0.421 | 0.361 | 0.630 | 0.376 | 0.194 | 0.669 | 0.156 |
| Ave. F-measure | 0.168 | 0.183 | 0.602 | 0.601 | 0.405 | 0.386 | 0.647 | 0.343 | 0.186 | 0.692 | 0.230 |
| Ave. Overlap | 0.438 | 0.428 | 0.513 | 0.543 | 0.440 | 0.368 | 0.615 | 0.495 | 0.573 | 0.606 | 0.516 |

Individual Results Files for Task 2

For Onset-Only Evaluation

AC3 = Arshia Cont

AC4 = Arshia Cont

EV3 = Emmanuel Vincent, Nancy Bertin, Roland Badeau

EV4 = Emmanuel Vincent, Nancy Bertin, Roland Badeau

KE3 = Koji Egashira, Hirokazu Kameoka, Shigeki Sagayama

KE4 = Koji Egashira, Hirokazu Kameoka, Shigeki Sagayama

PE2 = Graham Poliner, Daniel P. W. Ellis

PI2 = Antonio Pertusa, José Manuel Iñesta 2

PI3 = Antonio Pertusa, José Manuel Iñesta 3

RK = Matt Ryynänen, Anssi Klapuri

VE2 = Valentin Emiya, Roland Badeau, Bertrand David

For Onset/Offset Evaluation

AC3 = Arshia Cont

AC4 = Arshia Cont

EV3 = Emmanuel Vincent, Nancy Bertin, Roland Badeau

EV4 = Emmanuel Vincent, Nancy Bertin, Roland Badeau

KE3 = Koji Egashira, Hirokazu Kameoka, Shigeki Sagayama

KE4 = Koji Egashira, Hirokazu Kameoka, Shigeki Sagayama

PE2 = Graham Poliner, Daniel P. W. Ellis

PI2 = Antonio Pertusa, José Manuel Iñesta 2

PI3 = Antonio Pertusa, José Manuel Iñesta 3

RK = Matt Ryynänen, Anssi Klapuri

VE2 = Valentin Emiya, Roland Badeau, Bertrand David

Info About Filenames

Filenames starting with part* come from acoustic woodwind recordings; those starting with RWC are synthesized. The piano files are: RA_C030_align.wav, bach_847TESTp.wav, beet_pathetique_3TESTp.wav, mz_333_1TESTp.wav, scn_4TESTp.wav.note, ty_januarTESTp.wav.note

Run Times

| Participant | Run time (sec) | Machine |
|---|---|---|
| AC3 | 900 | MAC |
| AC4 | 900 | MAC |
| RK | 3285 | SANDBOX |
| EV3 | 2535 | ALE Nodes |
| EV4 | 2475 | ALE Nodes |
| KE3 | 4140 | ALE Nodes |
| KE4 | 20700 | ALE Nodes |
| PE2 | 4890 | ALE Nodes |
| PI2 | 165 | ALE Nodes |
| PI3 | 165 | ALE Nodes |
| VE | 390600 | ALE Nodes |