2013:Audio Music Similarity and Retrieval Results

Introduction

These are the results for the 2013 running of the Audio Music Similarity and Retrieval task set. For background information about this task set, please refer to the Audio Music Similarity and Retrieval page.

Each system was given 7000 songs chosen from IMIRSEL's "uspop", "uscrap", "american", "classical" and "sundry" collections, and each system returned a 7000x7000 distance matrix. Fifty songs were randomly selected as queries, 5 from each of the 10 genre groups, and the 5 most highly ranked songs out of the 7000 were extracted for each query, after filtering out the query itself and any results by the same artist. For each query, the returned results (candidates) from all participants were then grouped and evaluated by human graders using the Evalutron 6000 grading system. Each individual query/candidate set was evaluated by a single grader.

For each query/candidate pair, graders provided two scores: one categorical BROAD score with 3 categories (NS, SS, VS, as explained below) and one FINE score in the range from 0 to 100. A description and analysis are provided below.
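The candidate-extraction step described above can be sketched in Python as follows. This is an illustrative assumption, not the MIREX evaluation code: the function name `top_k_candidates` is hypothetical, smaller distances are taken to mean "more similar", and ties are broken by collection order.

```python
from __future__ import annotations

from typing import Sequence


def top_k_candidates(
    distances: Sequence[float],
    artists: Sequence[str],
    query: int,
    k: int = 5,
) -> list[int]:
    """Return the indices of the k tracks nearest to `query`.

    `distances` is the query's row of the full distance matrix
    (hypothetical layout; smaller means more similar). The query itself
    and every track by the query's artist are skipped before taking k.
    """
    query_artist = artists[query]
    eligible = [
        i for i in range(len(distances))
        if i != query and artists[i] != query_artist
    ]
    # Python's sort is stable, so tied distances keep collection order.
    eligible.sort(key=lambda i: distances[i])
    return eligible[:k]
```

For example, with distances `[0.0, 0.2, 0.1, 0.5, 0.3, 0.4, 0.9]`, artists `["a", "a", "b", "c", "d", "e", "f"]`, query `0` and `k=3`, track 1 is dropped because it shares the query's artist, and the function returns `[2, 4, 5]`.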

The systems read in 30-second audio clips as their raw data. The same 30-second clips were used in the grading stage.

General Legend

Team ID

| Sub code | Submission name | Contributors |

|---|---|---|

| DM1 | DM1 | Franz de Leon, Kirk Martinez |

| DM2 | DM2 | Franz de Leon, Kirk Martinez |

| GKC1 | ILSP Audio Similarity | Aggelos Gkiokas, Vassilis Katsouros, George Carayannis |

| GKC2 | ILSP Audio Similarity 2 | Aggelos Gkiokas, Vassilis Katsouros, George Carayannis |

| PS1 | PS09 | Dominik Schnitzer, Tim Pohle |

| RA1 | SimRet2013 | Roman Aliyev |

| SS2 | cbmr_sim_2013_submission | Klaus Seyerlehner, Markus Schedl |

| SSPK1 | cbmr_sim_2010_resubmission | Klaus Seyerlehner, Markus Schedl, Tim Pohle, Peter Knees |

Broad Categories

NS = Not Similar

SS = Somewhat Similar

VS = Very Similar

Understanding Summary Measures

Fine = Has a range from 0 (failure) to 100 (perfection).

Broad = Has a range from 0 (failure) to 2 (perfection) as each query/candidate pair is scored with either NS=0, SS=1 or VS=2.
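A minimal Python sketch of how both summary measures could be computed from raw grades, assuming (hypothetically) that one system's grades are stored as `(broad_label, fine_score)` pairs keyed by query id. Because every query has exactly 5 candidates per system, averaging the per-query means gives the same value as averaging all graded pairs directly.

```python
from statistics import mean

# Numeric value of each BROAD category, as defined above.
BROAD_VALUES = {"NS": 0, "SS": 1, "VS": 2}


def per_query_means(grades):
    """grades: query id -> list of (broad_label, fine_score) pairs,
    one pair per candidate one system returned for that query.
    Returns query id -> (mean BROAD score, mean FINE score)."""
    return {
        q: (
            mean(BROAD_VALUES[label] for label, _ in pairs),
            mean(fine for _, fine in pairs),
        )
        for q, pairs in grades.items()
    }


def summary_scores(grades):
    """Average the per-query means over all queries.
    Returns (average BROAD/Cat score, average FINE score)."""
    per_query = per_query_means(grades).values()
    return (
        mean(broad for broad, _ in per_query),
        mean(fine for _, fine in per_query),
    )
```

Calling `summary_scores` on one system's grades yields the pair reported as its Average Cat Score and Average Fine Score in the overall summary table.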

Human Evaluation

Overall Summary Results

| Measure | DM1 | DM2 | GKC1 | GKC2 | PS1 | RA1 | SS2 | SSPK1 |

|---|---|---|---|---|---|---|---|---|

| Average Fine Score | 46.262 | 48.074 | 43.804 | 48.046 | 53.808 | 18.858 | 55.210 | 54.100 |

| Average Cat (BROAD) Score | 0.926 | 0.992 | 0.896 | 0.978 | 1.142 | 0.276 | 1.184 | 1.164 |

Friedman's Tests

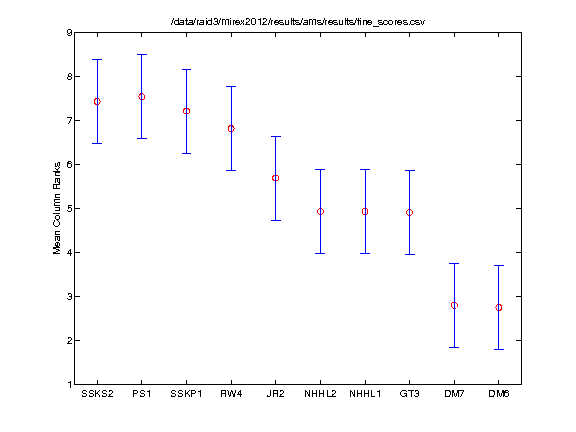

Friedman's Test (FINE Scores)

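The Friedman test was run in MATLAB against the FINE summary data over the 50 queries. In each row, Mean is the difference between the two systems' mean Friedman ranks, Lowerbound and Upperbound give the Tukey-Kramer 95% confidence interval for that difference, and Significance is TRUE when the interval excludes zero.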

Command: `[c,m,h,gnames] = multcompare(stats, 'ctype', 'tukey-kramer','estimate', 'friedman', 'alpha', 0.05);`

| TeamID | TeamID | Lowerbound | Mean | Upperbound | Significance |

|---|---|---|---|---|---|

| SS2 | SSPK1 | -1.103 | 0.380 | 1.863 | FALSE |

| SS2 | PS1 | -1.063 | 0.420 | 1.903 | FALSE |

| SS2 | DM2 | 0.027 | 1.510 | 2.993 | TRUE |

| SS2 | GKC2 | 0.027 | 1.510 | 2.993 | TRUE |

| SS2 | DM1 | 0.447 | 1.930 | 3.413 | TRUE |

| SS2 | GKC1 | 1.187 | 2.670 | 4.153 | TRUE |

| SS2 | RA1 | 3.457 | 4.940 | 6.423 | TRUE |

| SSPK1 | PS1 | -1.443 | 0.040 | 1.523 | FALSE |

| SSPK1 | DM2 | -0.353 | 1.130 | 2.613 | FALSE |

| SSPK1 | GKC2 | -0.353 | 1.130 | 2.613 | FALSE |

| SSPK1 | DM1 | 0.067 | 1.550 | 3.033 | TRUE |

| SSPK1 | GKC1 | 0.807 | 2.290 | 3.773 | TRUE |

| SSPK1 | RA1 | 3.077 | 4.560 | 6.043 | TRUE |

| PS1 | DM2 | -0.393 | 1.090 | 2.573 | FALSE |

| PS1 | GKC2 | -0.393 | 1.090 | 2.573 | FALSE |

| PS1 | DM1 | 0.027 | 1.510 | 2.993 | TRUE |

| PS1 | GKC1 | 0.767 | 2.250 | 3.733 | TRUE |

| PS1 | RA1 | 3.037 | 4.520 | 6.003 | TRUE |

| DM2 | GKC2 | -1.483 | 0.000 | 1.483 | FALSE |

| DM2 | DM1 | -1.063 | 0.420 | 1.903 | FALSE |

| DM2 | GKC1 | -0.323 | 1.160 | 2.643 | FALSE |

| DM2 | RA1 | 1.947 | 3.430 | 4.913 | TRUE |

| GKC2 | DM1 | -1.063 | 0.420 | 1.903 | FALSE |

| GKC2 | GKC1 | -0.323 | 1.160 | 2.643 | FALSE |

| GKC2 | RA1 | 1.947 | 3.430 | 4.913 | TRUE |

| DM1 | GKC1 | -0.743 | 0.740 | 2.223 | FALSE |

| DM1 | RA1 | 1.527 | 3.010 | 4.493 | TRUE |

| GKC1 | RA1 | 0.787 | 2.270 | 3.753 | TRUE |
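The pairwise comparisons in the table above can be approximated outside MATLAB. The Python sketch below is an assumption-laden reimplementation, not the code that produced these results: it ranks systems within each query (higher score gets a higher rank, ties get average ranks), takes differences of mean ranks, and builds Tukey-Kramer intervals using the no-ties standard error. The function names are hypothetical, and the studentized-range critical value `q_crit` must be supplied from a table.

```python
import math
from itertools import combinations


def average_ranks(values):
    """Rank values ascending (1 = lowest); tied values share their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with order[i].
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # mean of the 1-based positions i..j
        for p in range(i, j + 1):
            ranks[order[p]] = avg
        i = j + 1
    return ranks


def friedman_pairwise(scores, systems, q_crit):
    """scores: one row per query, one column per system (same order as systems).
    q_crit: studentized-range critical value for len(systems) groups.
    Returns (sys_a, sys_b, lower, mean_rank_diff, upper, significant) tuples."""
    n, k = len(scores), len(systems)
    rank_rows = [average_ranks(row) for row in scores]
    mean_ranks = [sum(r[c] for r in rank_rows) / n for c in range(k)]
    # Standard error of a difference in mean ranks, assuming no ties.
    se = math.sqrt(k * (k + 1) / (6 * n))
    half_width = q_crit / math.sqrt(2) * se
    results = []
    for a, b in combinations(range(k), 2):
        diff = mean_ranks[a] - mean_ranks[b]
        lo, hi = diff - half_width, diff + half_width
        results.append((systems[a], systems[b], lo, diff, hi, lo > 0 or hi < 0))
    return results
```

With k = 8 systems, n = 50 queries and q_crit ≈ 4.286 (alpha = 0.05, infinite degrees of freedom), the half-width comes out at about 1.485, close to the ±1.483 seen in the FINE table. The narrower ±1.437 intervals in the BROAD table are presumably due to MATLAB adjusting the rank variance for the many tied BROAD scores, which this sketch does not do.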

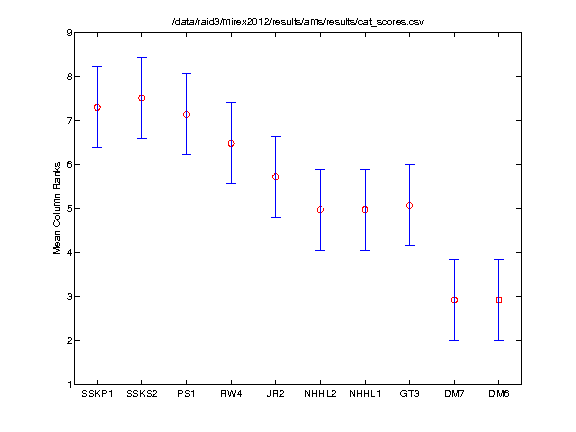

Friedman's Test (BROAD Scores)

The Friedman test was run in MATLAB against the BROAD summary data over the 50 queries.

Command: `[c,m,h,gnames] = multcompare(stats, 'ctype', 'tukey-kramer','estimate', 'friedman', 'alpha', 0.05);`

| TeamID | TeamID | Lowerbound | Mean | Upperbound | Significance |

|---|---|---|---|---|---|

| SS2 | SSPK1 | -1.037 | 0.400 | 1.837 | FALSE |

| SS2 | PS1 | -1.067 | 0.370 | 1.807 | FALSE |

| SS2 | DM2 | -0.017 | 1.420 | 2.857 | FALSE |

| SS2 | GKC2 | 0.193 | 1.630 | 3.067 | TRUE |

| SS2 | DM1 | 0.573 | 2.010 | 3.447 | TRUE |

| SS2 | GKC1 | 0.993 | 2.430 | 3.867 | TRUE |

| SS2 | RA1 | 3.263 | 4.700 | 6.137 | TRUE |

| SSPK1 | PS1 | -1.467 | -0.030 | 1.407 | FALSE |

| SSPK1 | DM2 | -0.417 | 1.020 | 2.457 | FALSE |

| SSPK1 | GKC2 | -0.207 | 1.230 | 2.667 | FALSE |

| SSPK1 | DM1 | 0.173 | 1.610 | 3.047 | TRUE |

| SSPK1 | GKC1 | 0.593 | 2.030 | 3.467 | TRUE |

| SSPK1 | RA1 | 2.863 | 4.300 | 5.737 | TRUE |

| PS1 | DM2 | -0.387 | 1.050 | 2.487 | FALSE |

| PS1 | GKC2 | -0.177 | 1.260 | 2.697 | FALSE |

| PS1 | DM1 | 0.203 | 1.640 | 3.077 | TRUE |

| PS1 | GKC1 | 0.623 | 2.060 | 3.497 | TRUE |

| PS1 | RA1 | 2.893 | 4.330 | 5.767 | TRUE |

| DM2 | GKC2 | -1.227 | 0.210 | 1.647 | FALSE |

| DM2 | DM1 | -0.847 | 0.590 | 2.027 | FALSE |

| DM2 | GKC1 | -0.427 | 1.010 | 2.447 | FALSE |

| DM2 | RA1 | 1.843 | 3.280 | 4.717 | TRUE |

| GKC2 | DM1 | -1.057 | 0.380 | 1.817 | FALSE |

| GKC2 | GKC1 | -0.637 | 0.800 | 2.237 | FALSE |

| GKC2 | RA1 | 1.633 | 3.070 | 4.507 | TRUE |

| DM1 | GKC1 | -1.017 | 0.420 | 1.857 | FALSE |

| DM1 | RA1 | 1.253 | 2.690 | 4.127 | TRUE |

| GKC1 | RA1 | 0.833 | 2.270 | 3.707 | TRUE |

Summary Results by Query

FINE Scores

These are the mean FINE scores per query assigned by Evalutron graders. The FINE scores for the 5 candidates returned per algorithm, per query, have been averaged. Values are bounded between 0 and 100. A perfect score would be 100. Genre labels have been included for reference.

| Genre | Query | DM1 | DM2 | GKC1 | GKC2 | PS1 | RA1 | SS2 | SSPK1 |

|---|---|---|---|---|---|---|---|---|---|

| BAROQUE | d001537 | 88.8 | 85.3 | 76.7 | 84.6 | 82.7 | 14.7 | 86.8 | 87.6 |

| BAROQUE | d004754 | 27.3 | 33.7 | 24.3 | 25.7 | 55.7 | 0.4 | 35.6 | 55.8 |

| BAROQUE | d005709 | 67.0 | 67.0 | 61.2 | 57.0 | 63.3 | 4.9 | 60.0 | 50.0 |

| BAROQUE | d010905 | 64.3 | 40.3 | 61.8 | 59.3 | 70.3 | 9.7 | 76.0 | 76.5 |

| BAROQUE | d012021 | 38.7 | 14.7 | 20.5 | 27.0 | 40.0 | 0.2 | 54.5 | 58.0 |

| BLUES | e000029 | 33.0 | 35.6 | 27.0 | 23.4 | 36.1 | 17.7 | 23.4 | 29.7 |

| BLUES | e001368 | 75.1 | 74.4 | 53.7 | 43.0 | 79.1 | 35.1 | 73.4 | 72.6 |

| BLUES | e005937 | 43.1 | 51.9 | 37.0 | 53.0 | 67.3 | 55.6 | 65.3 | 66.5 |

| BLUES | e015582 | 62.7 | 49.8 | 64.1 | 55.7 | 38.4 | 41.8 | 66.8 | 59.7 |

| BLUES | e018379 | 63.8 | 61.6 | 59.8 | 63.3 | 63.5 | 36.3 | 58.6 | 59.4 |

| CLASSICAL | d004474 | 58.5 | 59.2 | 55.0 | 53.9 | 55.6 | 8.3 | 57.7 | 58.2 |

| CLASSICAL | d007653 | 54.1 | 58.0 | 41.0 | 38.7 | 51.1 | 13.7 | 54.8 | 48.0 |

| CLASSICAL | d007675 | 62.3 | 81.0 | 73.5 | 72.2 | 75.6 | 21.4 | 80.0 | 72.4 |

| CLASSICAL | d019132 | 3.5 | 2.8 | 3.4 | 4.9 | 3 | 0 | 7.5 | 4.5 |

| CLASSICAL | d019948 | 65.5 | 58.0 | 53.5 | 60.0 | 64.5 | 7.5 | 61.5 | 58.5 |

| COUNTRY | e003848 | 36.8 | 36.3 | 29.2 | 29.9 | 39.9 | 15.2 | 41.4 | 40.0 |

| COUNTRY | e007409 | 59.0 | 65.0 | 67.5 | 67.0 | 76.0 | 39.0 | 76.5 | 81.0 |

| COUNTRY | e010612 | 40.7 | 37.5 | 35.1 | 52.9 | 55.1 | 14.1 | 66.0 | 62.7 |

| COUNTRY | e012827 | 67.9 | 71.6 | 68.4 | 69.4 | 80.0 | 29.9 | 87.5 | 76.7 |

| COUNTRY | e019126 | 29.2 | 44.1 | 33.4 | 41.6 | 31.4 | 22.5 | 45.6 | 39.0 |

| EDANCE | a000504 | 86.5 | 88.1 | 68.5 | 80.7 | 84.5 | 24.7 | 87.5 | 83.9 |

| EDANCE | a003842 | 19.5 | 28.5 | 56.8 | 42.5 | 56.6 | 22.7 | 60.8 | 53.8 |

| EDANCE | a004027 | 43.8 | 39.0 | 25.1 | 20.7 | 41.0 | 21.6 | 28.4 | 33.4 |

| EDANCE | b002993 | 57.0 | 49.0 | 51.0 | 57.0 | 37.0 | 29.0 | 68.0 | 68.0 |

| EDANCE | b003885 | 33.9 | 58.1 | 66.6 | 67.6 | 56.0 | 25.4 | 67.5 | 67.6 |

| JAZZ | e001100 | 43.3 | 31.9 | 59.6 | 69.1 | 65.6 | 12.1 | 63.6 | 60.3 |

| JAZZ | e002233 | 56.8 | 59.0 | 54.0 | 59.2 | 51.9 | 31.8 | 52.2 | 63.3 |

| JAZZ | e003036 | 83.5 | 79.8 | 86.1 | 88.5 | 82.2 | 37.7 | 88.5 | 87.9 |

| JAZZ | e007159 | 60.2 | 67.3 | 41.3 | 65.5 | 73.6 | 16.8 | 72.2 | 46.1 |

| JAZZ | e010868 | 61.0 | 73.0 | 65.4 | 72.4 | 69.7 | 41.3 | 71.3 | 85.5 |

| METAL | b005514 | 9.5 | 22.6 | 15.5 | 27.7 | 24.4 | 13.5 | 28.9 | 35.9 |

| METAL | b005832 | 63.6 | 64.5 | 36.5 | 50.0 | 62.8 | 38.1 | 73.9 | 73.3 |

| METAL | b008728 | 13.9 | 18.3 | 15.1 | 26.4 | 24.1 | 12.1 | 24.7 | 16.6 |

| METAL | b015780 | 39.3 | 66.0 | 44.7 | 46.1 | 70.3 | 22.7 | 50.0 | 58.5 |

| METAL | f004405 | 17.4 | 21.6 | 11.0 | 22.4 | 37.7 | 12.6 | 15.9 | 21.2 |

| RAPHIPHOP | a005976 | 52.5 | 58.0 | 50.3 | 56.6 | 58.4 | 23.2 | 62.4 | 59.2 |

| RAPHIPHOP | a007753 | 37.3 | 38.1 | 32.6 | 50.7 | 48.3 | 10.9 | 48.9 | 49.1 |

| RAPHIPHOP | a008296 | 31.1 | 27.2 | 40.4 | 40.4 | 36.1 | 14.3 | 38.7 | 37.1 |

| RAPHIPHOP | b007216 | 12.7 | 22.1 | 36.9 | 44.5 | 46.0 | 10.9 | 40.9 | 38.5 |

| RAPHIPHOP | b009991 | 62.9 | 65.2 | 67.2 | 69.5 | 75.6 | 20.4 | 62.4 | 66.3 |

| ROCKROLL | b002418 | 29.5 | 29.0 | 34.5 | 38.5 | 35.0 | 14.8 | 42.0 | 33.5 |

| ROCKROLL | b012404 | 36.8 | 52.1 | 47.1 | 45.7 | 46.5 | 36.3 | 58.7 | 61.7 |

| ROCKROLL | b012651 | 17.5 | 18.0 | 10.0 | 21.5 | 30.5 | 12.5 | 41.5 | 33.5 |

| ROCKROLL | b018558 | 35.5 | 36.0 | 11.5 | 25.0 | 42.0 | 11.5 | 49.0 | 48.5 |

| ROCKROLL | b019150 | 6 | 11.2 | 12.0 | 11.2 | 13.6 | 3.5 | 12.2 | 13.6 |

| ROMANTIC | d004234 | 27.7 | 20.4 | 17.2 | 24.6 | 28.3 | 11.7 | 13.8 | 27.0 |

| ROMANTIC | d007659 | 65.0 | 62.5 | 22.5 | 40.9 | 72.8 | 1.5 | 74.1 | 67.6 |

| ROMANTIC | d011050 | 54.5 | 58.8 | 44.2 | 44.9 | 52.5 | 7.1 | 53.2 | 47.3 |

| ROMANTIC | d011968 | 76.1 | 67.9 | 74.0 | 59.3 | 78.0 | 6.2 | 73.7 | 73.8 |

| ROMANTIC | d013072 | 37.5 | 42.7 | 46.5 | 50.7 | 60.8 | 8 | 56.7 | 40.2 |

BROAD Scores

These are the mean BROAD scores per query assigned by Evalutron graders. The BROAD scores for the 5 candidates returned per algorithm, per query, have been averaged. Values are bounded between 0 (not similar) and 2 (very similar). A perfect score would be 2. Genre labels have been included for reference.

| Genre | Query | DM1 | DM2 | GKC1 | GKC2 | PS1 | RA1 | SS2 | SSPK1 |

|---|---|---|---|---|---|---|---|---|---|

| BAROQUE | d001537 | 2 | 2 | 1.6 | 1.9 | 1.9 | 0.1 | 2 | 2 |

| BAROQUE | d004754 | 0.7 | 0.8 | 0.7 | 0.8 | 1.2 | 0 | 0.7 | 1.2 |

| BAROQUE | d005709 | 1.5 | 1.5 | 1.3 | 1.1 | 1.5 | 0 | 1.4 | 1 |

| BAROQUE | d010905 | 1.7 | 0.8 | 1.5 | 1.4 | 1.8 | 0.1 | 1.9 | 2 |

| BAROQUE | d012021 | 0.9 | 0.3 | 0.5 | 0.4 | 0.7 | 0 | 1.1 | 1.4 |

| BLUES | e000029 | 1 | 1.1 | 0.6 | 0.8 | 1 | 0.4 | 0.8 | 1 |

| BLUES | e001368 | 1.5 | 1.5 | 0.7 | 0.4 | 1.8 | 0.4 | 1.5 | 1.6 |

| BLUES | e005937 | 0.8 | 1.1 | 0.6 | 1.2 | 1.6 | 1.2 | 1.5 | 1.5 |

| BLUES | e015582 | 1.2 | 0.9 | 1.3 | 1 | 0.6 | 0.8 | 1.4 | 1.2 |

| BLUES | e018379 | 0.9 | 0.9 | 1.1 | 1.2 | 1.2 | 0.6 | 1 | 1 |

| CLASSICAL | d004474 | 1.3 | 1.3 | 1.2 | 1.2 | 1.2 | 0 | 1.3 | 1.1 |

| CLASSICAL | d007653 | 1.2 | 1.3 | 0.7 | 0.6 | 0.9 | 0 | 1.2 | 1 |

| CLASSICAL | d007675 | 1.4 | 1.9 | 1.6 | 1.7 | 1.7 | 0.2 | 1.9 | 1.7 |

| CLASSICAL | d019132 | 0 | 0 | 0 | 0 | 0 | 0 | 0.1 | 0 |

| CLASSICAL | d019948 | 1.1 | 0.9 | 1 | 1 | 1 | 0 | 1 | 1 |

| COUNTRY | e003848 | 0.6 | 0.6 | 0.3 | 0.3 | 0.6 | 0 | 0.7 | 0.6 |

| COUNTRY | e007409 | 1 | 1.2 | 1.3 | 1.2 | 1.6 | 0.6 | 1.6 | 1.8 |

| COUNTRY | e010612 | 0.8 | 0.8 | 0.7 | 1.1 | 1.2 | 0.1 | 1.3 | 1.2 |

| COUNTRY | e012827 | 1.2 | 1.4 | 1.4 | 1.3 | 1.7 | 0.5 | 2 | 1.7 |

| COUNTRY | e019126 | 0.5 | 0.8 | 0.6 | 0.8 | 0.7 | 0.3 | 1 | 0.7 |

| EDANCE | a000504 | 2 | 2 | 1.5 | 1.8 | 1.7 | 0.4 | 1.9 | 1.8 |

| EDANCE | a003842 | 0.1 | 0.5 | 1.4 | 0.9 | 1.4 | 0.4 | 1.6 | 1.4 |

| EDANCE | a004027 | 0.9 | 0.8 | 0.4 | 0.3 | 0.9 | 0.4 | 0.7 | 0.8 |

| EDANCE | b002993 | 1 | 1 | 0.9 | 1 | 0.5 | 0.3 | 1.3 | 1.3 |

| EDANCE | b003885 | 0.5 | 1.2 | 1.8 | 1.8 | 1.2 | 0.3 | 1.6 | 1.6 |

| JAZZ | e001100 | 0.5 | 0.3 | 1.2 | 1.3 | 1.2 | 0 | 1.2 | 1.1 |

| JAZZ | e002233 | 1.1 | 1.2 | 1.2 | 1.3 | 1.1 | 0.6 | 1.1 | 1.3 |

| JAZZ | e003036 | 1.7 | 1.6 | 1.9 | 1.8 | 1.6 | 0.3 | 1.9 | 2 |

| JAZZ | e007159 | 1.3 | 1.5 | 0.7 | 1.3 | 1.6 | 0.3 | 1.5 | 1 |

| JAZZ | e010868 | 1.1 | 1.3 | 1.2 | 1.3 | 1.3 | 0.7 | 1.3 | 1.9 |

| METAL | b005514 | 0.3 | 0.8 | 0.6 | 0.8 | 0.9 | 0.6 | 0.8 | 1.1 |

| METAL | b005832 | 1.3 | 1.3 | 0.5 | 0.7 | 1.2 | 0.6 | 1.5 | 1.6 |

| METAL | b008728 | 0.3 | 0.5 | 0.5 | 0.6 | 0.7 | 0.2 | 0.7 | 0.5 |

| METAL | b015780 | 0.9 | 1.7 | 1 | 1 | 1.7 | 0.4 | 1.1 | 1.3 |

| METAL | f004405 | 0.7 | 0.7 | 0.3 | 0.7 | 1.2 | 0.5 | 0.5 | 0.6 |

| RAPHIPHOP | a005976 | 0.9 | 0.9 | 0.7 | 0.9 | 1 | 0.3 | 1 | 1 |

| RAPHIPHOP | a007753 | 0.8 | 0.7 | 0.5 | 1 | 0.9 | 0 | 1 | 1.1 |

| RAPHIPHOP | a008296 | 0.4 | 0.3 | 0.7 | 0.8 | 0.7 | 0.2 | 0.6 | 0.6 |

| RAPHIPHOP | b007216 | 0.2 | 0.5 | 0.8 | 1 | 1.1 | 0.1 | 1 | 0.8 |

| RAPHIPHOP | b009991 | 1.3 | 1.3 | 1.6 | 1.6 | 1.7 | 0 | 1.2 | 1.4 |

| ROCKROLL | b002418 | 0.4 | 0.4 | 0.6 | 0.7 | 0.5 | 0.1 | 0.6 | 0.4 |

| ROCKROLL | b012404 | 0.7 | 1.3 | 0.9 | 0.7 | 0.9 | 0.5 | 1.5 | 1.4 |

| ROCKROLL | b012651 | 0.2 | 0.3 | 0.2 | 0.4 | 0.5 | 0.3 | 0.7 | 0.5 |

| ROCKROLL | b018558 | 0.8 | 0.8 | 0.2 | 0.7 | 1.1 | 0.4 | 1.3 | 1.3 |

| ROCKROLL | b019150 | 0.2 | 0.5 | 0.5 | 0.4 | 0.6 | 0.4 | 0.5 | 0.5 |

| ROMANTIC | d004234 | 0.2 | 0.2 | 0 | 0 | 0 | 0 | 0.1 | 0.2 |

| ROMANTIC | d007659 | 1.2 | 1.1 | 0.5 | 1 | 1.6 | 0 | 1.4 | 1.3 |

| ROMANTIC | d011050 | 1.1 | 1.2 | 1 | 1 | 1.1 | 0.1 | 1.1 | 1 |

| ROMANTIC | d011968 | 2 | 1.7 | 1.7 | 1.5 | 1.9 | 0 | 1.8 | 1.9 |

| ROMANTIC | d013072 | 0.9 | 0.9 | 1.1 | 1.2 | 1.4 | 0.1 | 1.3 | 0.8 |

Raw Scores

The raw data derived from the Evalutron 6000 human evaluations are located on the 2013:Audio Music Similarity and Retrieval Raw Data page.

Metadata and Distance Space Evaluation

The following reports provide evaluation statistics based on analysis of the distance space and metadata matches and include:

- Neighbourhood clustering by artist, album and genre

- Artist-filtered genre clustering

- How often the triangle inequality holds

- Statistics on 'hubs' (tracks that appear among the top N results of many other tracks) and 'orphans' (tracks that never appear in any other track's top N results).
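The analysis scripts behind these reports are not reproduced on this page. As a rough illustration only, the Python sketch below shows one way each listed statistic could be computed from a full distance matrix `dist` (a list of rows, smaller = more similar). All function names, the optional artist filter, the ordered-triple definition of the triangle inequality check and index-order tie-breaking are assumptions.

```python
from collections import Counter
from itertools import permutations


def neighbours(dist, i, n_results, artists=None):
    """Indices of the n_results closest tracks to i, excluding i itself and,
    if artists is given, any track by the same artist (artist filtering)."""
    others = [
        j for j in range(len(dist))
        if j != i and (artists is None or artists[j] != artists[i])
    ]
    others.sort(key=lambda j: dist[i][j])  # stable: ties keep index order
    return others[:n_results]


def genre_neighbourhood_precision(dist, genres, n_results, artists=None):
    """Mean fraction of each track's top-N neighbours that share its genre.
    Assumes every track has at least one eligible neighbour."""
    hits = []
    for i in range(len(dist)):
        nbrs = neighbours(dist, i, n_results, artists)
        hits.append(sum(genres[j] == genres[i] for j in nbrs) / len(nbrs))
    return sum(hits) / len(hits)


def triangle_inequality_rate(dist):
    """Fraction of ordered triples (a, b, c) of distinct tracks with
    d(a, c) <= d(a, b) + d(b, c). O(n^3), so only practical on a sample."""
    triples = list(permutations(range(len(dist)), 3))
    ok = sum(dist[a][c] <= dist[a][b] + dist[b][c] for a, b, c in triples)
    return ok / len(triples)


def hubs_and_orphans(dist, n_results):
    """Count how many top-N lists each track appears in (its k-occurrence).
    Hubs have the largest counts; orphans appear in no list at all."""
    counts = Counter()
    for i in range(len(dist)):
        counts.update(neighbours(dist, i, n_results))
    occurrences = [counts[j] for j in range(len(dist))]
    orphans = [j for j, c in enumerate(occurrences) if c == 0]
    return occurrences, orphans
```

Passing an `artists` list to `genre_neighbourhood_precision` gives the artist-filtered variant; the album and artist clustering figures would follow the same pattern with album or artist labels in place of genres.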

Reports

DM1 = Franz de Leon, Kirk Martinez

DM2 = Franz de Leon, Kirk Martinez

GKC1 = Aggelos Gkiokas, Vassilis Katsouros, George Carayannis

GKC2 = Aggelos Gkiokas, Vassilis Katsouros, George Carayannis

PS1 = Dominik Schnitzer, Tim Pohle

RA1 = Roman Aliyev

SS2 = Klaus Seyerlehner, Markus Schedl

SSPK1 = Klaus Seyerlehner, Markus Schedl, Tim Pohle, Peter Knees