2011:Audio Music Similarity and Retrieval Results


SUMMs for AMS ([PDF](https://www.music-ir.org/mirex/abstracts/2011/ZYC2.pdf)): [Dongying Zhang](http://www.icst.pku.edu.cn/), [Deshun Yang](http://www.icst.pku.edu.cn/), [Xiaoou Chen](http://www.icst.pku.edu.cn/team/content_266.htm)


Revision as of 14:16, 21 October 2011

Introduction

These are the results for the 2011 running of the Audio Music Similarity and Retrieval task set. For background information about this task set please refer to the Audio Music Similarity and Retrieval page.

Each system was given 7000 songs chosen from IMIRSEL's "uspop", "uscrap" and "american" "classical" and "sundry" collections and returned a 7000x7000 distance matrix. From the 10 genre groups, 100 songs were randomly selected as queries (10 per genre). For each query, the 5 most highly ranked songs out of the 7000 were extracted, after filtering out the query itself and omitting results by the same artist. The returned results (candidates) from all participants were then grouped per query and evaluated by human graders using the Evalutron 6000 grading system. Each query/candidate set was evaluated by a single grader. For each query/candidate pair, graders provided two scores: one categorical BROAD score with 3 categories (NS, SS, VS, explained below) and one FINE score in the range from 0 to 100. A description and analysis are provided below.

The systems read in 30-second audio clips as their raw data. The same 30-second clips were used in the grading stage.
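The candidate extraction described above (rank by distance, drop the query, drop same-artist results, keep the top 5) can be sketched roughly as follows. This is an illustration only, not the code used for the evaluation: the function name `top_candidates`, the `artist_of` mapping, and the toy distances are all assumptions made for this example.

```python
from typing import Dict, List, Sequence


def top_candidates(
    query: int,
    distances: Sequence[float],
    artist_of: Dict[int, str],
    k: int = 5,
) -> List[int]:
    """Return the k nearest songs to `query`, skipping the query itself
    and any song by the query's artist.

    `distances` is one row of a distance matrix: distances[j] is the
    distance from the query to song j (smaller means more similar).
    """
    query_artist = artist_of[query]
    # Song indices sorted from most to least similar.
    ranked = sorted(range(len(distances)), key=lambda j: distances[j])
    result: List[int] = []
    for j in ranked:
        if j == query or artist_of[j] == query_artist:
            continue  # filter the query and same-artist results
        result.append(j)
        if len(result) == k:
            break
    return result


# Toy data (hypothetical): 8 songs, song 0 is the query, song 1 shares its artist.
distances = [0.0, 0.1, 0.4, 0.2, 0.9, 0.3, 0.5, 0.8]
artist_of = {0: "A", 1: "A", 2: "B", 3: "C", 4: "D", 5: "E", 6: "F", 7: "G"}
top5 = top_candidates(0, distances, artist_of)
print(top5)
```

In the real task each row of the 7000x7000 matrix would play the role of `distances`, and the artist metadata would come from the collection itself.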

General Legend

Team ID

Broad Categories

NS = Not Similar

SS = Somewhat Similar

VS = Very Similar

Understanding Summary Measures

Fine = Has a range from 0 (failure) to 100 (perfection).

Broad = Has a range from 0 (failure) to 2 (perfection) as each query/candidate pair is scored with either NS=0, SS=1 or VS=2.
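As a small illustration of how the two summary measures relate to the raw grades, the sketch below maps BROAD categories to points (NS=0, SS=1, VS=2) and averages both score types over one query's candidates. The grades used here are made up for the example, and `summarize` is a hypothetical helper name, not part of the Evalutron system.

```python
# Points per BROAD category, as defined in the legend above.
BROAD_POINTS = {"NS": 0, "SS": 1, "VS": 2}


def summarize(broad_labels, fine_scores):
    """Return (mean BROAD points, mean FINE score) for one set of candidates."""
    broad_mean = sum(BROAD_POINTS[label] for label in broad_labels) / len(broad_labels)
    fine_mean = sum(fine_scores) / len(fine_scores)
    return broad_mean, fine_mean


# Hypothetical grades for the 5 candidates one system returned for one query.
labels = ["VS", "SS", "SS", "NS", "VS"]
fines = [90, 60, 55, 10, 85]
broad_mean, fine_mean = summarize(labels, fines)
print(broad_mean, fine_mean)
```

Averaging such per-query values over all queries would then give one overall figure per system on the 0 to 2 (BROAD) and 0 to 100 (FINE) scales.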

Human Evaluation

Overall Summary Results

| Measure | CTCP1 | CTCP2 | CTCP3 | DM2 | DM3 | GKC1 | HKHLL1 | ML1 | ML2 | ML3 | PS1 | SSKS3 | SSPK2 | STBD1 | STBD2 | STBD3 | YL1 | ZYC2 |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Average Fine Score | 57.268 | 58.586 | 56.208 | 50.492 | 50.348 | 31.844 | 42.188 | 47.784 | 47.304 | 47.782 | 57.700 | 58.128 | 58.642 | 33.906 | 30.562 | 30.386 | 42.366 | 50.042 |

| Average Cat Score | 1.260 | 1.296 | 1.222 | 1.088 | 1.078 | 0.594 | 0.874 | 0.990 | 0.988 | 1.008 | 1.272 | 1.292 | 1.312 | 0.636 | 0.578 | 0.574 | 0.886 | 1.078 |

Note: RZ1 is the random result, included for comparison purposes.

Friedman's Tests

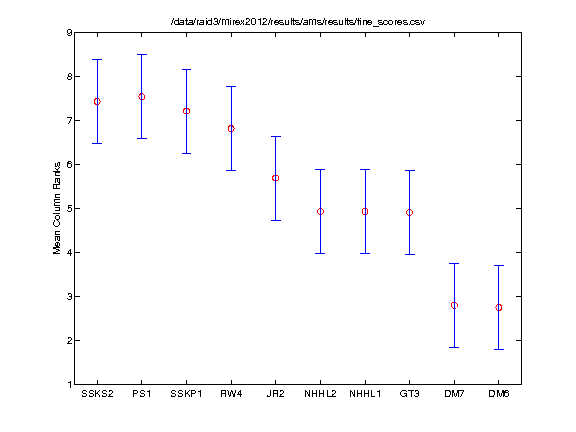

Friedman's Test (FINE Scores)

The Friedman test was run in MATLAB against the FINE summary data over the 100 queries.

Command: [c,m,h,gnames] = multcompare(stats, 'ctype', 'tukey-kramer','estimate', 'friedman', 'alpha', 0.05);

| Team ID (A) | Team ID (B) | Lower bound | Mean rank difference (A - B) | Upper bound | Significant |

|---|---|---|---|---|---|

| SSPK2 | CTCP2 | -2.005 | 0.620 | 3.245 | FALSE |

| SSPK2 | SSKS3 | -2.120 | 0.505 | 3.130 | FALSE |

| SSPK2 | PS1 | -2.430 | 0.195 | 2.820 | FALSE |

| SSPK2 | CTCP1 | -1.510 | 1.115 | 3.740 | FALSE |

| SSPK2 | CTCP3 | -1.145 | 1.480 | 4.105 | FALSE |

| SSPK2 | DM2 | 0.820 | 3.445 | 6.070 | TRUE |

| SSPK2 | DM3 | 0.840 | 3.465 | 6.090 | TRUE |

| SSPK2 | ZYC2 | 0.620 | 3.245 | 5.870 | TRUE |

| SSPK2 | ML1 | 1.850 | 4.475 | 7.100 | TRUE |

| SSPK2 | ML3 | 2.530 | 5.155 | 7.780 | TRUE |

| SSPK2 | ML2 | 2.435 | 5.060 | 7.685 | TRUE |

| SSPK2 | YL1 | 3.360 | 5.985 | 8.610 | TRUE |

| SSPK2 | HKHLL1 | 4.085 | 6.710 | 9.335 | TRUE |

| SSPK2 | STBD1 | 5.745 | 8.370 | 10.995 | TRUE |

| SSPK2 | GKC1 | 5.880 | 8.505 | 11.130 | TRUE |

| SSPK2 | STBD2 | 6.435 | 9.060 | 11.685 | TRUE |

| SSPK2 | STBD3 | 6.575 | 9.200 | 11.825 | TRUE |

| CTCP2 | SSKS3 | -2.740 | -0.115 | 2.510 | FALSE |

| CTCP2 | PS1 | -3.050 | -0.425 | 2.200 | FALSE |

| CTCP2 | CTCP1 | -2.130 | 0.495 | 3.120 | FALSE |

| CTCP2 | CTCP3 | -1.765 | 0.860 | 3.485 | FALSE |

| CTCP2 | DM2 | 0.200 | 2.825 | 5.450 | TRUE |

| CTCP2 | DM3 | 0.220 | 2.845 | 5.470 | TRUE |

| CTCP2 | ZYC2 | 0.000 | 2.625 | 5.250 | TRUE |

| CTCP2 | ML1 | 1.230 | 3.855 | 6.480 | TRUE |

| CTCP2 | ML3 | 1.910 | 4.535 | 7.160 | TRUE |

| CTCP2 | ML2 | 1.815 | 4.440 | 7.065 | TRUE |

| CTCP2 | YL1 | 2.740 | 5.365 | 7.990 | TRUE |

| CTCP2 | HKHLL1 | 3.465 | 6.090 | 8.715 | TRUE |

| CTCP2 | STBD1 | 5.125 | 7.750 | 10.375 | TRUE |

| CTCP2 | GKC1 | 5.260 | 7.885 | 10.510 | TRUE |

| CTCP2 | STBD2 | 5.815 | 8.440 | 11.065 | TRUE |

| CTCP2 | STBD3 | 5.955 | 8.580 | 11.205 | TRUE |

| SSKS3 | PS1 | -2.935 | -0.310 | 2.315 | FALSE |

| SSKS3 | CTCP1 | -2.015 | 0.610 | 3.235 | FALSE |

| SSKS3 | CTCP3 | -1.650 | 0.975 | 3.600 | FALSE |

| SSKS3 | DM2 | 0.315 | 2.940 | 5.565 | TRUE |

| SSKS3 | DM3 | 0.335 | 2.960 | 5.585 | TRUE |

| SSKS3 | ZYC2 | 0.115 | 2.740 | 5.365 | TRUE |

| SSKS3 | ML1 | 1.345 | 3.970 | 6.595 | TRUE |

| SSKS3 | ML3 | 2.025 | 4.650 | 7.275 | TRUE |

| SSKS3 | ML2 | 1.930 | 4.555 | 7.180 | TRUE |

| SSKS3 | YL1 | 2.855 | 5.480 | 8.105 | TRUE |

| SSKS3 | HKHLL1 | 3.580 | 6.205 | 8.830 | TRUE |

| SSKS3 | STBD1 | 5.240 | 7.865 | 10.490 | TRUE |

| SSKS3 | GKC1 | 5.375 | 8.000 | 10.625 | TRUE |

| SSKS3 | STBD2 | 5.930 | 8.555 | 11.180 | TRUE |

| SSKS3 | STBD3 | 6.070 | 8.695 | 11.320 | TRUE |

| PS1 | CTCP1 | -1.705 | 0.920 | 3.545 | FALSE |

| PS1 | CTCP3 | -1.340 | 1.285 | 3.910 | FALSE |

| PS1 | DM2 | 0.625 | 3.250 | 5.875 | TRUE |

| PS1 | DM3 | 0.645 | 3.270 | 5.895 | TRUE |

| PS1 | ZYC2 | 0.425 | 3.050 | 5.675 | TRUE |

| PS1 | ML1 | 1.655 | 4.280 | 6.905 | TRUE |

| PS1 | ML3 | 2.335 | 4.960 | 7.585 | TRUE |

| PS1 | ML2 | 2.240 | 4.865 | 7.490 | TRUE |

| PS1 | YL1 | 3.165 | 5.790 | 8.415 | TRUE |

| PS1 | HKHLL1 | 3.890 | 6.515 | 9.140 | TRUE |

| PS1 | STBD1 | 5.550 | 8.175 | 10.800 | TRUE |

| PS1 | GKC1 | 5.685 | 8.310 | 10.935 | TRUE |

| PS1 | STBD2 | 6.240 | 8.865 | 11.490 | TRUE |

| PS1 | STBD3 | 6.380 | 9.005 | 11.630 | TRUE |

| CTCP1 | CTCP3 | -2.260 | 0.365 | 2.990 | FALSE |

| CTCP1 | DM2 | -0.295 | 2.330 | 4.955 | FALSE |

| CTCP1 | DM3 | -0.275 | 2.350 | 4.975 | FALSE |

| CTCP1 | ZYC2 | -0.495 | 2.130 | 4.755 | FALSE |

| CTCP1 | ML1 | 0.735 | 3.360 | 5.985 | TRUE |

| CTCP1 | ML3 | 1.415 | 4.040 | 6.665 | TRUE |

| CTCP1 | ML2 | 1.320 | 3.945 | 6.570 | TRUE |

| CTCP1 | YL1 | 2.245 | 4.870 | 7.495 | TRUE |

| CTCP1 | HKHLL1 | 2.970 | 5.595 | 8.220 | TRUE |

| CTCP1 | STBD1 | 4.630 | 7.255 | 9.880 | TRUE |

| CTCP1 | GKC1 | 4.765 | 7.390 | 10.015 | TRUE |

| CTCP1 | STBD2 | 5.320 | 7.945 | 10.570 | TRUE |

| CTCP1 | STBD3 | 5.460 | 8.085 | 10.710 | TRUE |

| CTCP3 | DM2 | -0.660 | 1.965 | 4.590 | FALSE |

| CTCP3 | DM3 | -0.640 | 1.985 | 4.610 | FALSE |

| CTCP3 | ZYC2 | -0.860 | 1.765 | 4.390 | FALSE |

| CTCP3 | ML1 | 0.370 | 2.995 | 5.620 | TRUE |

| CTCP3 | ML3 | 1.050 | 3.675 | 6.300 | TRUE |

| CTCP3 | ML2 | 0.955 | 3.580 | 6.205 | TRUE |

| CTCP3 | YL1 | 1.880 | 4.505 | 7.130 | TRUE |

| CTCP3 | HKHLL1 | 2.605 | 5.230 | 7.855 | TRUE |

| CTCP3 | STBD1 | 4.265 | 6.890 | 9.515 | TRUE |

| CTCP3 | GKC1 | 4.400 | 7.025 | 9.650 | TRUE |

| CTCP3 | STBD2 | 4.955 | 7.580 | 10.205 | TRUE |

| CTCP3 | STBD3 | 5.095 | 7.720 | 10.345 | TRUE |

| DM2 | DM3 | -2.605 | 0.020 | 2.645 | FALSE |

| DM2 | ZYC2 | -2.825 | -0.200 | 2.425 | FALSE |

| DM2 | ML1 | -1.595 | 1.030 | 3.655 | FALSE |

| DM2 | ML3 | -0.915 | 1.710 | 4.335 | FALSE |

| DM2 | ML2 | -1.010 | 1.615 | 4.240 | FALSE |

| DM2 | YL1 | -0.085 | 2.540 | 5.165 | FALSE |

| DM2 | HKHLL1 | 0.640 | 3.265 | 5.890 | TRUE |

| DM2 | STBD1 | 2.300 | 4.925 | 7.550 | TRUE |

| DM2 | GKC1 | 2.435 | 5.060 | 7.685 | TRUE |

| DM2 | STBD2 | 2.990 | 5.615 | 8.240 | TRUE |

| DM2 | STBD3 | 3.130 | 5.755 | 8.380 | TRUE |

| DM3 | ZYC2 | -2.845 | -0.220 | 2.405 | FALSE |

| DM3 | ML1 | -1.615 | 1.010 | 3.635 | FALSE |

| DM3 | ML3 | -0.935 | 1.690 | 4.315 | FALSE |

| DM3 | ML2 | -1.030 | 1.595 | 4.220 | FALSE |

| DM3 | YL1 | -0.105 | 2.520 | 5.145 | FALSE |

| DM3 | HKHLL1 | 0.620 | 3.245 | 5.870 | TRUE |

| DM3 | STBD1 | 2.280 | 4.905 | 7.530 | TRUE |

| DM3 | GKC1 | 2.415 | 5.040 | 7.665 | TRUE |

| DM3 | STBD2 | 2.970 | 5.595 | 8.220 | TRUE |

| DM3 | STBD3 | 3.110 | 5.735 | 8.360 | TRUE |

| ZYC2 | ML1 | -1.395 | 1.230 | 3.855 | FALSE |

| ZYC2 | ML3 | -0.715 | 1.910 | 4.535 | FALSE |

| ZYC2 | ML2 | -0.810 | 1.815 | 4.440 | FALSE |

| ZYC2 | YL1 | 0.115 | 2.740 | 5.365 | TRUE |

| ZYC2 | HKHLL1 | 0.840 | 3.465 | 6.090 | TRUE |

| ZYC2 | STBD1 | 2.500 | 5.125 | 7.750 | TRUE |

| ZYC2 | GKC1 | 2.635 | 5.260 | 7.885 | TRUE |

| ZYC2 | STBD2 | 3.190 | 5.815 | 8.440 | TRUE |

| ZYC2 | STBD3 | 3.330 | 5.955 | 8.580 | TRUE |

| ML1 | ML3 | -1.945 | 0.680 | 3.305 | FALSE |

| ML1 | ML2 | -2.040 | 0.585 | 3.210 | FALSE |

| ML1 | YL1 | -1.115 | 1.510 | 4.135 | FALSE |

| ML1 | HKHLL1 | -0.390 | 2.235 | 4.860 | FALSE |

| ML1 | STBD1 | 1.270 | 3.895 | 6.520 | TRUE |

| ML1 | GKC1 | 1.405 | 4.030 | 6.655 | TRUE |

| ML1 | STBD2 | 1.960 | 4.585 | 7.210 | TRUE |

| ML1 | STBD3 | 2.100 | 4.725 | 7.350 | TRUE |

| ML3 | ML2 | -2.720 | -0.095 | 2.530 | FALSE |

| ML3 | YL1 | -1.795 | 0.830 | 3.455 | FALSE |

| ML3 | HKHLL1 | -1.070 | 1.555 | 4.180 | FALSE |

| ML3 | STBD1 | 0.590 | 3.215 | 5.840 | TRUE |

| ML3 | GKC1 | 0.725 | 3.350 | 5.975 | TRUE |

| ML3 | STBD2 | 1.280 | 3.905 | 6.530 | TRUE |

| ML3 | STBD3 | 1.420 | 4.045 | 6.670 | TRUE |

| ML2 | YL1 | -1.700 | 0.925 | 3.550 | FALSE |

| ML2 | HKHLL1 | -0.975 | 1.650 | 4.275 | FALSE |

| ML2 | STBD1 | 0.685 | 3.310 | 5.935 | TRUE |

| ML2 | GKC1 | 0.820 | 3.445 | 6.070 | TRUE |

| ML2 | STBD2 | 1.375 | 4.000 | 6.625 | TRUE |

| ML2 | STBD3 | 1.515 | 4.140 | 6.765 | TRUE |

| YL1 | HKHLL1 | -1.900 | 0.725 | 3.350 | FALSE |

| YL1 | STBD1 | -0.240 | 2.385 | 5.010 | FALSE |

| YL1 | GKC1 | -0.105 | 2.520 | 5.145 | FALSE |

| YL1 | STBD2 | 0.450 | 3.075 | 5.700 | TRUE |

| YL1 | STBD3 | 0.590 | 3.215 | 5.840 | TRUE |

| HKHLL1 | STBD1 | -0.965 | 1.660 | 4.285 | FALSE |

| HKHLL1 | GKC1 | -0.830 | 1.795 | 4.420 | FALSE |

| HKHLL1 | STBD2 | -0.275 | 2.350 | 4.975 | FALSE |

| HKHLL1 | STBD3 | -0.135 | 2.490 | 5.115 | FALSE |

| STBD1 | GKC1 | -2.490 | 0.135 | 2.760 | FALSE |

| STBD1 | STBD2 | -1.935 | 0.690 | 3.315 | FALSE |

| STBD1 | STBD3 | -1.795 | 0.830 | 3.455 | FALSE |

| GKC1 | STBD2 | -2.070 | 0.555 | 3.180 | FALSE |

| GKC1 | STBD3 | -1.930 | 0.695 | 3.320 | FALSE |

| STBD2 | STBD3 | -2.485 | 0.140 | 2.765 | FALSE |
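The Friedman procedure works on ranks rather than raw scores: within each query the systems are ranked, and the pairwise comparison then looks at differences between the systems' mean ranks. The sketch below illustrates only the ranking step in plain Python. It is not the MATLAB code used for these results, it omits the test statistic and the Tukey-Kramer intervals, and the helper names are assumptions. The sample values are the FINE scores of three systems on the first three BAROQUE queries from the per-query FINE table on this page.

```python
def average_ranks(values):
    """Rank values ascending (1 = lowest); tied values share the mean rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2 + 1  # mean of the 1-based positions i+1 .. j+1
        for pos in range(i, j + 1):
            ranks[order[pos]] = shared
        i = j + 1
    return ranks


def mean_ranks(score_rows):
    """score_rows[q][s] is the score of system s on query q."""
    n_systems = len(score_rows[0])
    totals = [0.0] * n_systems
    for row in score_rows:
        for s, rank in enumerate(average_ranks(row)):
            totals[s] += rank
    return [total / len(score_rows) for total in totals]


systems = ["SSPK2", "CTCP2", "GKC1"]
fine_rows = [
    [85.8, 84.2, 51.4],  # query d006677
    [88.0, 96.2, 52.6],  # query d006696
    [85.6, 86.0, 48.8],  # query d007449
]
ranks = mean_ranks(fine_rows)
for name, rank in zip(systems, ranks):
    print(name, round(rank, 3))
```

With all 100 queries and all 18 systems, differences between such mean ranks are the kind of quantity reported in the mean column of the pairwise table; a higher mean rank corresponds to higher scores under this ascending ranking.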

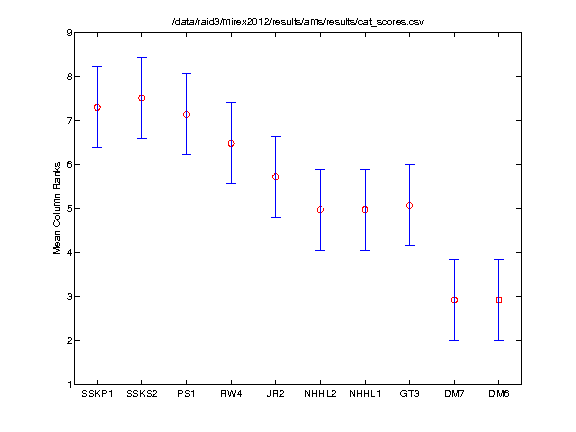

Friedman's Test (BROAD Scores)

The Friedman test was run in MATLAB against the BROAD summary data over the 100 queries.

Command: [c,m,h,gnames] = multcompare(stats, 'ctype', 'tukey-kramer','estimate', 'friedman', 'alpha', 0.05);

| Team ID (A) | Team ID (B) | Lower bound | Mean rank difference (A - B) | Upper bound | Significant |

|---|---|---|---|---|---|

| SSPK2 | CTCP2 | -2.007 | 0.515 | 3.037 | FALSE |

| SSPK2 | SSKS3 | -2.132 | 0.390 | 2.912 | FALSE |

| SSPK2 | PS1 | -2.262 | 0.260 | 2.782 | FALSE |

| SSPK2 | CTCP1 | -1.432 | 1.090 | 3.612 | FALSE |

| SSPK2 | CTCP3 | -1.207 | 1.315 | 3.837 | FALSE |

| SSPK2 | DM2 | 0.448 | 2.970 | 5.492 | TRUE |

| SSPK2 | DM3 | 0.418 | 2.940 | 5.462 | TRUE |

| SSPK2 | ZYC2 | 0.308 | 2.830 | 5.352 | TRUE |

| SSPK2 | ML3 | 1.893 | 4.415 | 6.937 | TRUE |

| SSPK2 | ML1 | 1.838 | 4.360 | 6.882 | TRUE |

| SSPK2 | ML2 | 1.953 | 4.475 | 6.997 | TRUE |

| SSPK2 | YL1 | 2.828 | 5.350 | 7.872 | TRUE |

| SSPK2 | HKHLL1 | 3.383 | 5.905 | 8.427 | TRUE |

| SSPK2 | STBD1 | 5.173 | 7.695 | 10.217 | TRUE |

| SSPK2 | GKC1 | 5.293 | 7.815 | 10.337 | TRUE |

| SSPK2 | STBD2 | 5.633 | 8.155 | 10.677 | TRUE |

| SSPK2 | STBD3 | 5.938 | 8.460 | 10.982 | TRUE |

| CTCP2 | SSKS3 | -2.647 | -0.125 | 2.397 | FALSE |

| CTCP2 | PS1 | -2.777 | -0.255 | 2.267 | FALSE |

| CTCP2 | CTCP1 | -1.947 | 0.575 | 3.097 | FALSE |

| CTCP2 | CTCP3 | -1.722 | 0.800 | 3.322 | FALSE |

| CTCP2 | DM2 | -0.067 | 2.455 | 4.977 | FALSE |

| CTCP2 | DM3 | -0.097 | 2.425 | 4.947 | FALSE |

| CTCP2 | ZYC2 | -0.207 | 2.315 | 4.837 | FALSE |

| CTCP2 | ML3 | 1.378 | 3.900 | 6.422 | TRUE |

| CTCP2 | ML1 | 1.323 | 3.845 | 6.367 | TRUE |

| CTCP2 | ML2 | 1.438 | 3.960 | 6.482 | TRUE |

| CTCP2 | YL1 | 2.313 | 4.835 | 7.357 | TRUE |

| CTCP2 | HKHLL1 | 2.868 | 5.390 | 7.912 | TRUE |

| CTCP2 | STBD1 | 4.658 | 7.180 | 9.702 | TRUE |

| CTCP2 | GKC1 | 4.778 | 7.300 | 9.822 | TRUE |

| CTCP2 | STBD2 | 5.118 | 7.640 | 10.162 | TRUE |

| CTCP2 | STBD3 | 5.423 | 7.945 | 10.467 | TRUE |

| SSKS3 | PS1 | -2.652 | -0.130 | 2.392 | FALSE |

| SSKS3 | CTCP1 | -1.822 | 0.700 | 3.222 | FALSE |

| SSKS3 | CTCP3 | -1.597 | 0.925 | 3.447 | FALSE |

| SSKS3 | DM2 | 0.058 | 2.580 | 5.102 | TRUE |

| SSKS3 | DM3 | 0.028 | 2.550 | 5.072 | TRUE |

| SSKS3 | ZYC2 | -0.082 | 2.440 | 4.962 | FALSE |

| SSKS3 | ML3 | 1.503 | 4.025 | 6.547 | TRUE |

| SSKS3 | ML1 | 1.448 | 3.970 | 6.492 | TRUE |

| SSKS3 | ML2 | 1.563 | 4.085 | 6.607 | TRUE |

| SSKS3 | YL1 | 2.438 | 4.960 | 7.482 | TRUE |

| SSKS3 | HKHLL1 | 2.993 | 5.515 | 8.037 | TRUE |

| SSKS3 | STBD1 | 4.783 | 7.305 | 9.827 | TRUE |

| SSKS3 | GKC1 | 4.903 | 7.425 | 9.947 | TRUE |

| SSKS3 | STBD2 | 5.243 | 7.765 | 10.287 | TRUE |

| SSKS3 | STBD3 | 5.548 | 8.070 | 10.592 | TRUE |

| PS1 | CTCP1 | -1.692 | 0.830 | 3.352 | FALSE |

| PS1 | CTCP3 | -1.467 | 1.055 | 3.577 | FALSE |

| PS1 | DM2 | 0.188 | 2.710 | 5.232 | TRUE |

| PS1 | DM3 | 0.158 | 2.680 | 5.202 | TRUE |

| PS1 | ZYC2 | 0.048 | 2.570 | 5.092 | TRUE |

| PS1 | ML3 | 1.633 | 4.155 | 6.677 | TRUE |

| PS1 | ML1 | 1.578 | 4.100 | 6.622 | TRUE |

| PS1 | ML2 | 1.693 | 4.215 | 6.737 | TRUE |

| PS1 | YL1 | 2.568 | 5.090 | 7.612 | TRUE |

| PS1 | HKHLL1 | 3.123 | 5.645 | 8.167 | TRUE |

| PS1 | STBD1 | 4.913 | 7.435 | 9.957 | TRUE |

| PS1 | GKC1 | 5.033 | 7.555 | 10.077 | TRUE |

| PS1 | STBD2 | 5.373 | 7.895 | 10.417 | TRUE |

| PS1 | STBD3 | 5.678 | 8.200 | 10.722 | TRUE |

| CTCP1 | CTCP3 | -2.297 | 0.225 | 2.747 | FALSE |

| CTCP1 | DM2 | -0.642 | 1.880 | 4.402 | FALSE |

| CTCP1 | DM3 | -0.672 | 1.850 | 4.372 | FALSE |

| CTCP1 | ZYC2 | -0.782 | 1.740 | 4.262 | FALSE |

| CTCP1 | ML3 | 0.803 | 3.325 | 5.847 | TRUE |

| CTCP1 | ML1 | 0.748 | 3.270 | 5.792 | TRUE |

| CTCP1 | ML2 | 0.863 | 3.385 | 5.907 | TRUE |

| CTCP1 | YL1 | 1.738 | 4.260 | 6.782 | TRUE |

| CTCP1 | HKHLL1 | 2.293 | 4.815 | 7.337 | TRUE |

| CTCP1 | STBD1 | 4.083 | 6.605 | 9.127 | TRUE |

| CTCP1 | GKC1 | 4.203 | 6.725 | 9.247 | TRUE |

| CTCP1 | STBD2 | 4.543 | 7.065 | 9.587 | TRUE |

| CTCP1 | STBD3 | 4.848 | 7.370 | 9.892 | TRUE |

| CTCP3 | DM2 | -0.867 | 1.655 | 4.177 | FALSE |

| CTCP3 | DM3 | -0.897 | 1.625 | 4.147 | FALSE |

| CTCP3 | ZYC2 | -1.007 | 1.515 | 4.037 | FALSE |

| CTCP3 | ML3 | 0.578 | 3.100 | 5.622 | TRUE |

| CTCP3 | ML1 | 0.523 | 3.045 | 5.567 | TRUE |

| CTCP3 | ML2 | 0.638 | 3.160 | 5.682 | TRUE |

| CTCP3 | YL1 | 1.513 | 4.035 | 6.557 | TRUE |

| CTCP3 | HKHLL1 | 2.068 | 4.590 | 7.112 | TRUE |

| CTCP3 | STBD1 | 3.858 | 6.380 | 8.902 | TRUE |

| CTCP3 | GKC1 | 3.978 | 6.500 | 9.022 | TRUE |

| CTCP3 | STBD2 | 4.318 | 6.840 | 9.362 | TRUE |

| CTCP3 | STBD3 | 4.623 | 7.145 | 9.667 | TRUE |

| DM2 | DM3 | -2.552 | -0.030 | 2.492 | FALSE |

| DM2 | ZYC2 | -2.662 | -0.140 | 2.382 | FALSE |

| DM2 | ML3 | -1.077 | 1.445 | 3.967 | FALSE |

| DM2 | ML1 | -1.132 | 1.390 | 3.912 | FALSE |

| DM2 | ML2 | -1.017 | 1.505 | 4.027 | FALSE |

| DM2 | YL1 | -0.142 | 2.380 | 4.902 | FALSE |

| DM2 | HKHLL1 | 0.413 | 2.935 | 5.457 | TRUE |

| DM2 | STBD1 | 2.203 | 4.725 | 7.247 | TRUE |

| DM2 | GKC1 | 2.323 | 4.845 | 7.367 | TRUE |

| DM2 | STBD2 | 2.663 | 5.185 | 7.707 | TRUE |

| DM2 | STBD3 | 2.968 | 5.490 | 8.012 | TRUE |

| DM3 | ZYC2 | -2.632 | -0.110 | 2.412 | FALSE |

| DM3 | ML3 | -1.047 | 1.475 | 3.997 | FALSE |

| DM3 | ML1 | -1.102 | 1.420 | 3.942 | FALSE |

| DM3 | ML2 | -0.987 | 1.535 | 4.057 | FALSE |

| DM3 | YL1 | -0.112 | 2.410 | 4.932 | FALSE |

| DM3 | HKHLL1 | 0.443 | 2.965 | 5.487 | TRUE |

| DM3 | STBD1 | 2.233 | 4.755 | 7.277 | TRUE |

| DM3 | GKC1 | 2.353 | 4.875 | 7.397 | TRUE |

| DM3 | STBD2 | 2.693 | 5.215 | 7.737 | TRUE |

| DM3 | STBD3 | 2.998 | 5.520 | 8.042 | TRUE |

| ZYC2 | ML3 | -0.937 | 1.585 | 4.107 | FALSE |

| ZYC2 | ML1 | -0.992 | 1.530 | 4.052 | FALSE |

| ZYC2 | ML2 | -0.877 | 1.645 | 4.167 | FALSE |

| ZYC2 | YL1 | -0.002 | 2.520 | 5.042 | FALSE |

| ZYC2 | HKHLL1 | 0.553 | 3.075 | 5.597 | TRUE |

| ZYC2 | STBD1 | 2.343 | 4.865 | 7.387 | TRUE |

| ZYC2 | GKC1 | 2.463 | 4.985 | 7.507 | TRUE |

| ZYC2 | STBD2 | 2.803 | 5.325 | 7.847 | TRUE |

| ZYC2 | STBD3 | 3.108 | 5.630 | 8.152 | TRUE |

| ML3 | ML1 | -2.577 | -0.055 | 2.467 | FALSE |

| ML3 | ML2 | -2.462 | 0.060 | 2.582 | FALSE |

| ML3 | YL1 | -1.587 | 0.935 | 3.457 | FALSE |

| ML3 | HKHLL1 | -1.032 | 1.490 | 4.012 | FALSE |

| ML3 | STBD1 | 0.758 | 3.280 | 5.802 | TRUE |

| ML3 | GKC1 | 0.878 | 3.400 | 5.922 | TRUE |

| ML3 | STBD2 | 1.218 | 3.740 | 6.262 | TRUE |

| ML3 | STBD3 | 1.523 | 4.045 | 6.567 | TRUE |

| ML1 | ML2 | -2.407 | 0.115 | 2.637 | FALSE |

| ML1 | YL1 | -1.532 | 0.990 | 3.512 | FALSE |

| ML1 | HKHLL1 | -0.977 | 1.545 | 4.067 | FALSE |

| ML1 | STBD1 | 0.813 | 3.335 | 5.857 | TRUE |

| ML1 | GKC1 | 0.933 | 3.455 | 5.977 | TRUE |

| ML1 | STBD2 | 1.273 | 3.795 | 6.317 | TRUE |

| ML1 | STBD3 | 1.578 | 4.100 | 6.622 | TRUE |

| ML2 | YL1 | -1.647 | 0.875 | 3.397 | FALSE |

| ML2 | HKHLL1 | -1.092 | 1.430 | 3.952 | FALSE |

| ML2 | STBD1 | 0.698 | 3.220 | 5.742 | TRUE |

| ML2 | GKC1 | 0.818 | 3.340 | 5.862 | TRUE |

| ML2 | STBD2 | 1.158 | 3.680 | 6.202 | TRUE |

| ML2 | STBD3 | 1.463 | 3.985 | 6.507 | TRUE |

| YL1 | HKHLL1 | -1.967 | 0.555 | 3.077 | FALSE |

| YL1 | STBD1 | -0.177 | 2.345 | 4.867 | FALSE |

| YL1 | GKC1 | -0.057 | 2.465 | 4.987 | FALSE |

| YL1 | STBD2 | 0.283 | 2.805 | 5.327 | TRUE |

| YL1 | STBD3 | 0.588 | 3.110 | 5.632 | TRUE |

| HKHLL1 | STBD1 | -0.732 | 1.790 | 4.312 | FALSE |

| HKHLL1 | GKC1 | -0.612 | 1.910 | 4.432 | FALSE |

| HKHLL1 | STBD2 | -0.272 | 2.250 | 4.772 | FALSE |

| HKHLL1 | STBD3 | 0.033 | 2.555 | 5.077 | TRUE |

| STBD1 | GKC1 | -2.402 | 0.120 | 2.642 | FALSE |

| STBD1 | STBD2 | -2.062 | 0.460 | 2.982 | FALSE |

| STBD1 | STBD3 | -1.757 | 0.765 | 3.287 | FALSE |

| GKC1 | STBD2 | -2.182 | 0.340 | 2.862 | FALSE |

| GKC1 | STBD3 | -1.877 | 0.645 | 3.167 | FALSE |

| STBD2 | STBD3 | -2.217 | 0.305 | 2.827 | FALSE |
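One way to read the pairwise tables: a pair is flagged as significantly different when the interval between the lower and upper bound does not contain zero. The sketch below applies that rule to a few rows copied from the FINE and BROAD tables. `is_significant` is a hypothetical helper, and because the published bounds are rounded to three decimals the rule cannot reproduce every row exactly (for example, CTCP2 vs ZYC2 in the FINE table shows a lower bound of 0.000 yet is marked TRUE, presumably because the unrounded bound is slightly positive).

```python
def is_significant(lower, upper):
    """True when the interval (lower, upper) lies strictly on one side of zero."""
    return lower > 0 or upper < 0


# (team A, team B, lower bound, upper bound) copied from the tables on this page.
rows = [
    ("SSPK2", "CTCP2", -2.005, 3.245),   # FINE table: FALSE
    ("SSPK2", "DM2", 0.820, 6.070),      # FINE table: TRUE
    ("HKHLL1", "STBD3", 0.033, 5.077),   # BROAD table: TRUE
    ("ZYC2", "YL1", -0.002, 5.042),      # BROAD table: FALSE
]
flags = [is_significant(lo, hi) for _, _, lo, hi in rows]
for (a, b, lo, hi), flag in zip(rows, flags):
    print(f"{a} vs {b}: [{lo}, {hi}] -> {flag}")
```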

Summary Results by Query

FINE Scores

These are the mean FINE scores per query assigned by Evalutron graders. The FINE scores for the 5 candidates returned per algorithm, per query, have been averaged. Values are bounded between 0 and 100. A perfect score would be 100. Genre labels have been included for reference.

| Genre | Query | CTCP1 | CTCP2 | CTCP3 | DM2 | DM3 | GKC1 | HKHLL1 | ML1 | ML2 | ML3 | PS1 | SSKS3 | SSPK2 | STBD1 | STBD2 | STBD3 | YL1 | ZYC2 |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| BAROQUE | d006677 | 83.4 | 84.2 | 84.8 | 84.2 | 84.2 | 51.4 | 78.6 | 74.4 | 77.8 | 73.8 | 84.0 | 87.4 | 85.8 | 65.0 | 69.8 | 51.6 | 84.8 | 83.2 |

| BAROQUE | d006696 | 78.8 | 96.2 | 80.0 | 86.6 | 86.8 | 52.6 | 87.2 | 86.8 | 86.8 | 78.0 | 86.8 | 79.0 | 88.0 | 32.2 | 39.0 | 15.6 | 52.0 | 74.4 |

| BAROQUE | d007449 | 71.8 | 86.0 | 71.8 | 85.2 | 85.2 | 48.8 | 54.4 | 69.6 | 75.0 | 81.4 | 84.6 | 86.4 | 85.6 | 60.2 | 74.0 | 59.4 | 17.6 | 72.4 |

| BAROQUE | d008986 | 82.4 | 82.8 | 64.4 | 65.0 | 68.0 | 13.4 | 66.8 | 77.4 | 61.4 | 72.0 | 81.4 | 70.8 | 83.8 | 22.0 | 0.0 | 20.2 | 8.8 | 62.2 |

| BAROQUE | d012398 | 67.0 | 83.0 | 66.0 | 81.0 | 76.0 | 72.0 | 71.0 | 62.0 | 74.0 | 74.0 | 81.0 | 75.0 | 76.0 | 70.0 | 55.0 | 43.0 | 79.0 | 74.0 |

| BAROQUE | d013785 | 18.6 | 12.0 | 20.6 | 0.0 | 0.0 | 8.4 | 0.0 | 4.0 | 0.0 | 0.0 | 11.0 | 11.8 | 34.6 | 0.0 | 21.2 | 1.8 | 0.0 | 13.6 |

| BAROQUE | d014514 | 75.8 | 73.8 | 76.4 | 34.6 | 35.2 | 19.4 | 73.4 | 71.8 | 75.2 | 75.2 | 76.6 | 66.6 | 70.0 | 29.6 | 17.8 | 18.6 | 44.4 | 75.6 |

| BAROQUE | d014776 | 61.2 | 59.2 | 68.6 | 62.2 | 62.2 | 22.2 | 44.6 | 43.6 | 37.2 | 38.6 | 61.4 | 67.4 | 71.6 | 33.2 | 36.6 | 25.8 | 44.6 | 69.8 |

| BAROQUE | d015410 | 21.6 | 25.8 | 21.0 | 24.6 | 30.6 | 31.0 | 22.0 | 22.2 | 22.0 | 22.6 | 35.0 | 35.6 | 36.8 | 33.0 | 19.0 | 32.0 | 24.4 | 34.4 |

| BAROQUE | d016296 | 67.8 | 69.4 | 43.8 | 53.2 | 53.2 | 18.8 | 32.0 | 61.0 | 54.6 | 65.8 | 68.6 | 53.0 | 55.4 | 13.2 | 5.0 | 47.2 | 43.0 | 54.2 |

| BLUES | e003498 | 23.0 | 19.2 | 21.8 | 7.8 | 7.8 | 12.0 | 3.0 | 12.4 | 6.8 | 6.8 | 29.2 | 23.2 | 8.0 | 21.0 | 8.6 | 3.2 | 8.4 | 13.8 |

| BLUES | e006591 | 35.2 | 37.8 | 36.2 | 33.4 | 33.4 | 14.0 | 30.2 | 39.6 | 33.2 | 44.8 | 56.6 | 45.0 | 51.2 | 36.8 | 13.6 | 45.8 | 47.4 | 27.0 |

| BLUES | e006594 | 83.2 | 67.6 | 83.2 | 87.4 | 87.4 | 4.6 | 84.0 | 70.0 | 33.2 | 67.8 | 78.0 | 86.0 | 85.0 | 41.0 | 55.4 | 32.4 | 69.4 | 42.8 |

| BLUES | e007332 | 81.6 | 80.0 | 81.6 | 68.0 | 64.4 | 32.2 | 76.4 | 76.0 | 71.8 | 74.6 | 81.2 | 81.0 | 85.2 | 44.0 | 61.2 | 36.2 | 60.6 | 82.8 |

| BLUES | e010207 | 59.8 | 63.6 | 65.0 | 49.0 | 49.0 | 22.2 | 22.8 | 23.2 | 22.4 | 24.2 | 80.0 | 75.2 | 59.6 | 25.2 | 19.4 | 46.6 | 53.4 | 39.6 |

| BLUES | e010715 | 74.2 | 68.2 | 76.0 | 63.8 | 63.8 | 23.8 | 40.6 | 67.0 | 49.6 | 70.0 | 76.2 | 75.2 | 72.8 | 51.6 | 56.2 | 35.6 | 54.2 | 10.4 |

| BLUES | e012145 | 64.8 | 64.8 | 57.4 | 50.0 | 50.0 | 49.0 | 35.2 | 32.6 | 14.2 | 43.8 | 62.2 | 53.0 | 49.2 | 49.8 | 44.4 | 22.6 | 58.4 | 50.8 |

| BLUES | e012250 | 71.6 | 75.6 | 73.2 | 65.2 | 65.2 | 60.2 | 66.6 | 29.6 | 58.8 | 61.0 | 77.6 | 67.4 | 62.8 | 28.0 | 46.6 | 33.8 | 37.4 | 66.0 |

| BLUES | e012576 | 40.0 | 36.0 | 20.0 | 17.0 | 23.0 | 50.0 | 23.0 | 28.0 | 18.0 | 17.0 | 64.0 | 33.0 | 28.0 | 26.0 | 57.6 | 46.0 | 61.0 | 40.0 |

| BLUES | e015955 | 80.8 | 77.8 | 82.8 | 67.2 | 67.2 | 33.0 | 61.6 | 39.2 | 52.4 | 49.4 | 84.8 | 81.8 | 82.2 | 27.4 | 34.6 | 41.0 | 50.8 | 54.4 |

| CLASSICAL | d002664 | 29.6 | 39.8 | 35.2 | 30.0 | 30.0 | 16.0 | 20.8 | 48.6 | 44.0 | 31.6 | 34.6 | 33.6 | 33.0 | 3.6 | 2.0 | 1.0 | 37.6 | 16.4 |

| CLASSICAL | d008359 | 71.4 | 69.0 | 62.6 | 69.4 | 69.2 | 32.8 | 69.0 | 58.6 | 60.0 | 60.6 | 74.8 | 69.4 | 74.0 | 37.0 | 37.2 | 28.4 | 63.8 | 52.4 |

| CLASSICAL | d008847 | 68.0 | 64.2 | 66.6 | 69.8 | 66.8 | 55.4 | 64.6 | 62.4 | 59.6 | 53.4 | 71.0 | 72.6 | 71.2 | 44.6 | 13.4 | 55.2 | 21.6 | 66.4 |

| CLASSICAL | d009363 | 31.0 | 25.0 | 30.2 | 26.8 | 28.0 | 41.4 | 28.0 | 38.2 | 39.2 | 32.6 | 43.8 | 28.8 | 30.6 | 32.8 | 29.6 | 26.2 | 36.2 | 38.8 |

| CLASSICAL | d010273 | 84.0 | 82.0 | 83.8 | 81.0 | 78.0 | 37.2 | 78.6 | 83.0 | 82.2 | 83.0 | 83.6 | 82.6 | 65.0 | 65.6 | 15.6 | 49.2 | 79.8 | 78.8 |

| CLASSICAL | d012347 | 87.6 | 82.8 | 88.4 | 86.2 | 86.2 | 10.6 | 76.4 | 79.2 | 85.6 | 82.6 | 90.0 | 90.6 | 90.0 | 7.4 | 14.0 | 73.6 | 66.6 | 69.0 |

| CLASSICAL | d013474 | 64.4 | 74.6 | 73.8 | 75.8 | 75.8 | 67.4 | 64.2 | 68.8 | 69.2 | 62.8 | 71.6 | 69.8 | 77.6 | 39.2 | 33.8 | 44.8 | 48.6 | 61.2 |

| CLASSICAL | d013555 | 12.6 | 9.2 | 25.6 | 23.8 | 16.8 | 14.6 | 21.0 | 22.2 | 21.6 | 21.8 | 17.2 | 23.6 | 27.4 | 9.2 | 14.2 | 6.2 | 20.2 | 24.6 |

| CLASSICAL | d013745 | 79.8 | 81.4 | 79.0 | 62.0 | 62.0 | 16.0 | 72.8 | 73.4 | 76.6 | 75.6 | 81.6 | 71.4 | 72.0 | 59.0 | 23.2 | 48.4 | 63.4 | 72.6 |

| CLASSICAL | d019428 | 70.8 | 72.6 | 67.2 | 60.6 | 60.6 | 25.8 | 36.4 | 58.2 | 57.4 | 56.2 | 64.0 | 65.6 | 61.2 | 53.2 | 42.0 | 7.8 | 39.8 | 50.2 |

| COUNTRY | b007754 | 55.2 | 65.0 | 50.0 | 51.0 | 55.0 | 5.0 | 0.0 | 60.8 | 44.8 | 33.2 | 58.2 | 41.0 | 49.2 | 23.0 | 33.8 | 14.4 | 54.8 | 40.2 |

| COUNTRY | e000004 | 56.4 | 65.2 | 54.4 | 35.0 | 35.0 | 28.4 | 50.6 | 20.0 | 39.2 | 26.8 | 26.2 | 70.4 | 67.0 | 24.4 | 32.2 | 15.2 | 41.0 | 28.4 |

| COUNTRY | e005074 | 62.0 | 73.0 | 65.0 | 50.0 | 48.0 | 46.0 | 68.0 | 42.0 | 52.0 | 62.0 | 61.0 | 58.0 | 68.0 | 31.0 | 46.0 | 10.0 | 48.0 | 67.0 |

| COUNTRY | e006998 | 71.0 | 71.0 | 53.0 | 27.0 | 34.0 | 23.0 | 28.0 | 40.0 | 27.0 | 35.0 | 66.0 | 74.0 | 59.0 | 3.0 | 23.0 | 15.0 | 24.0 | 41.0 |

| COUNTRY | e008019 | 76.0 | 76.0 | 70.0 | 66.0 | 66.0 | 50.0 | 58.0 | 68.0 | 60.0 | 70.0 | 82.0 | 68.0 | 70.0 | 66.0 | 36.0 | 24.0 | 66.0 | 78.0 |

| COUNTRY | e010395 | 53.4 | 52.2 | 47.6 | 54.2 | 51.8 | 23.2 | 36.8 | 31.0 | 37.4 | 26.4 | 55.4 | 50.8 | 65.6 | 43.2 | 36.2 | 18.6 | 19.6 | 46.4 |

| COUNTRY | e012084 | 68.8 | 71.6 | 82.2 | 76.2 | 72.0 | 13.0 | 75.2 | 63.6 | 51.2 | 57.8 | 82.6 | 69.2 | 80.4 | 55.4 | 45.2 | 70.8 | 72.8 | 70.4 |

| COUNTRY | e014772 | 42.2 | 40.4 | 41.0 | 46.4 | 49.2 | 33.8 | 40.2 | 39.2 | 35.8 | 38.6 | 41.0 | 40.8 | 50.0 | 37.0 | 37.2 | 39.2 | 38.8 | 51.2 |

| COUNTRY | e019603 | 47.8 | 45.2 | 38.8 | 47.8 | 47.8 | 13.6 | 22.8 | 37.6 | 38.0 | 31.8 | 36.8 | 33.4 | 51.6 | 16.4 | 8.6 | 9.8 | 45.4 | 30.6 |

| COUNTRY | e019738 | 71.0 | 59.0 | 65.0 | 67.0 | 67.0 | 38.0 | 59.0 | 62.0 | 64.0 | 61.0 | 57.0 | 64.0 | 67.0 | 43.0 | 49.0 | 33.0 | 55.0 | 65.0 |

| EDANCE | a005135 | 54.0 | 57.0 | 57.0 | 27.0 | 30.0 | 41.0 | 19.0 | 2.2 | 31.0 | 53.0 | 41.0 | 39.0 | 43.0 | 54.0 | 32.0 | 28.0 | 46.0 | 0.0 |

| EDANCE | a005589 | 54.4 | 37.4 | 32.4 | 22.6 | 22.6 | 22.0 | 0.0 | 28.0 | 13.8 | 44.2 | 33.6 | 26.8 | 39.8 | 3.4 | 21.0 | 0.0 | 45.0 | 35.2 |

| EDANCE | a007506 | 61.2 | 57.4 | 68.8 | 52.2 | 52.2 | 72.6 | 10.4 | 35.2 | 43.2 | 24.4 | 64.8 | 71.4 | 70.4 | 56.0 | 25.6 | 23.4 | 42.0 | 56.6 |

| EDANCE | b003709 | 44.4 | 42.8 | 45.2 | 50.6 | 50.6 | 38.6 | 5.8 | 36.6 | 37.6 | 38.8 | 45.6 | 44.2 | 57.6 | 29.6 | 18.0 | 30.6 | 21.0 | 12.0 |

| EDANCE | b005745 | 26.2 | 17.2 | 26.4 | 24.0 | 24.0 | 14.6 | 25.8 | 28.6 | 20.4 | 24.6 | 30.8 | 33.8 | 29.0 | 12.0 | 18.4 | 14.2 | 19.6 | 26.6 |

| EDANCE | b006254 | 35.6 | 28.6 | 49.0 | 1.6 | 3.4 | 44.6 | 15.0 | 4.6 | 9.4 | 5.2 | 18.4 | 54.4 | 49.0 | 1.2 | 10.0 | 6.4 | 32.0 | 2.4 |

| EDANCE | b011836 | 34.0 | 40.0 | 40.0 | 34.0 | 42.0 | 42.0 | 24.0 | 52.0 | 40.0 | 40.0 | 32.0 | 46.0 | 54.0 | 18.0 | 40.0 | 32.0 | 24.0 | 24.0 |

| EDANCE | b016602 | 66.0 | 66.0 | 63.0 | 33.4 | 46.6 | 57.0 | 49.8 | 34.6 | 59.6 | 42.0 | 49.2 | 70.6 | 55.0 | 30.4 | 30.4 | 49.2 | 45.6 | 37.4 |

| EDANCE | f003218 | 83.0 | 88.0 | 76.0 | 76.0 | 76.0 | 78.0 | 31.0 | 77.0 | 74.0 | 76.0 | 87.0 | 70.0 | 94.0 | 42.0 | 52.0 | 77.0 | 59.0 | 54.0 |

| EDANCE | f019182 | 32.4 | 53.0 | 28.4 | 42.8 | 30.2 | 35.6 | 15.6 | 16.8 | 18.6 | 23.0 | 34.6 | 51.6 | 26.4 | 24.4 | 29.6 | 17.2 | 65.0 | 34.4 |

| JAZZ | a006733 | 83.0 | 85.0 | 72.0 | 11.0 | 11.0 | 38.0 | 13.0 | 21.0 | 19.0 | 23.0 | 65.0 | 81.0 | 81.0 | 21.0 | 34.0 | 44.0 | 32.0 | 22.0 |

| JAZZ | a009161 | 61.0 | 57.8 | 64.4 | 33.0 | 29.2 | 25.6 | 45.8 | 60.6 | 56.2 | 53.6 | 40.0 | 55.6 | 76.0 | 33.4 | 21.2 | 12.0 | 63.6 | 57.4 |

| JAZZ | e004616 | 67.4 | 74.4 | 70.6 | 74.2 | 73.4 | 53.2 | 69.8 | 69.6 | 69.8 | 69.8 | 78.2 | 77.6 | 80.6 | 61.0 | 57.4 | 44.6 | 64.2 | 75.4 |

| JAZZ | e004656 | 79.0 | 82.6 | 74.2 | 70.2 | 70.2 | 29.0 | 64.8 | 73.4 | 78.4 | 73.4 | 72.4 | 79.0 | 81.0 | 51.0 | 47.2 | 40.2 | 69.8 | 76.4 |

| JAZZ | e008805 | 71.8 | 61.6 | 64.2 | 26.4 | 13.8 | 21.0 | 3.4 | 46.2 | 39.6 | 36.0 | 37.2 | 55.6 | 33.0 | 12.2 | 9.4 | 10.8 | 54.6 | 29.2 |

| JAZZ | e009003 | 87.0 | 83.0 | 83.0 | 74.0 | 74.0 | 45.0 | 45.0 | 58.0 | 77.0 | 74.0 | 82.0 | 85.0 | 83.0 | 41.0 | 57.0 | 64.0 | 10.4 | 87.0 |

| JAZZ | e009926 | 0.0 | 0.0 | 0.0 | 0.2 | 0.2 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 8.0 | 0.2 | 3.2 | 0.4 | 0.0 |

| JAZZ | e010314 | 87.2 | 90.0 | 83.0 | 16.0 | 19.0 | 33.8 | 27.8 | 41.2 | 53.2 | 42.6 | 59.4 | 78.8 | 76.4 | 32.6 | 24.4 | 33.4 | 12.6 | 62.2 |

| JAZZ | e010700 | 71.8 | 81.6 | 68.8 | 28.2 | 23.4 | 22.2 | 52.0 | 53.0 | 41.2 | 36.4 | 33.8 | 56.8 | 47.2 | 14.6 | 30.4 | 26.8 | 14.8 | 41.8 |

| JAZZ | e012958 | 6.0 | 8.0 | 0.0 | 23.2 | 23.2 | 20.4 | 0.0 | 0.0 | 6.0 | 0.0 | 20.6 | 0.6 | 0.0 | 15.0 | 2.0 | 0.0 | 0.0 | 0.0 |

| METAL | a000907 | 21.8 | 23.0 | 39.0 | 32.0 | 39.8 | 8.2 | 23.8 | 21.0 | 8.8 | 19.6 | 22.0 | 29.2 | 39.0 | 2.2 | 1.2 | 8.6 | 19.4 | 20.6 |

| METAL | b001064 | 72.2 | 74.2 | 71.4 | 49.4 | 44.8 | 34.8 | 44.0 | 45.4 | 67.8 | 71.4 | 46.4 | 79.8 | 71.4 | 20.4 | 25.6 | 49.8 | 66.8 | 44.8 |

| METAL | b005963 | 86.0 | 79.0 | 85.0 | 83.0 | 79.0 | 71.0 | 52.0 | 74.0 | 68.0 | 77.0 | 83.0 | 79.0 | 72.0 | 42.0 | 43.0 | 56.0 | 77.0 | 74.0 |

| METAL | b007455 | 59.6 | 68.2 | 57.4 | 57.2 | 63.8 | 11.6 | 48.2 | 55.0 | 55.8 | 50.0 | 65.6 | 41.2 | 65.8 | 36.2 | 24.0 | 11.4 | 48.4 | 61.6 |

| METAL | b008450 | 38.2 | 37.4 | 55.4 | 63.4 | 64.6 | 7.8 | 40.0 | 75.6 | 53.0 | 56.2 | 37.4 | 30.8 | 14.4 | 16.8 | 10.6 | 21.6 | 43.2 | 60.6 |

| METAL | b009939 | 35.0 | 27.0 | 32.4 | 17.0 | 15.0 | 15.8 | 17.0 | 48.0 | 46.0 | 33.4 | 40.0 | 41.0 | 46.0 | 18.2 | 14.0 | 25.2 | 23.0 | 40.0 |

| METAL | b012964 | 46.0 | 44.0 | 33.0 | 42.0 | 42.0 | 10.0 | 58.0 | 45.0 | 41.0 | 35.0 | 49.0 | 46.0 | 43.0 | 30.0 | 8.0 | 0.0 | 19.0 | 49.0 |

| METAL | b019036 | 33.8 | 44.2 | 49.2 | 26.0 | 33.2 | 25.8 | 29.6 | 34.0 | 44.4 | 43.0 | 35.2 | 41.8 | 29.0 | 36.2 | 36.8 | 20.0 | 27.0 | 17.4 |

| METAL | f007939 | 56.4 | 37.0 | 64.0 | 65.8 | 55.6 | 30.8 | 59.0 | 67.2 | 62.2 | 59.0 | 60.8 | 32.4 | 68.8 | 12.2 | 7.4 | 3.8 | 52.8 | 65.4 |

| METAL | f015638 | 73.0 | 74.8 | 81.4 | 64.0 | 64.0 | 4.2 | 52.4 | 61.2 | 61.2 | 59.8 | 77.0 | 83.2 | 90.2 | 19.8 | 15.6 | 31.6 | 68.0 | 67.4 |

| RAPHIPHOP | a000184 | 45.2 | 60.0 | 42.8 | 59.0 | 59.0 | 6.4 | 58.2 | 55.6 | 59.0 | 57.4 | 62.6 | 65.4 | 61.6 | 63.2 | 46.6 | 15.4 | 45.0 | 46.0 |

| RAPHIPHOP | a000369 | 72.6 | 78.4 | 78.0 | 68.8 | 53.8 | 22.4 | 46.2 | 72.4 | 77.4 | 73.2 | 79.2 | 79.2 | 82.2 | 73.8 | 74.0 | 68.8 | 75.2 | 82.0 |

| RAPHIPHOP | a002853 | 52.2 | 56.4 | 26.8 | 57.8 | 57.8 | 38.6 | 57.4 | 49.2 | 75.2 | 66.0 | 64.6 | 57.2 | 56.8 | 35.6 | 15.6 | 59.8 | 28.6 | 51.4 |

| RAPHIPHOP | a006408 | 73.6 | 66.0 | 68.6 | 72.6 | 71.8 | 56.4 | 73.8 | 76.6 | 71.8 | 70.2 | 70.8 | 76.4 | 71.4 | 69.8 | 68.4 | 73.2 | 73.8 | 74.0 |

| RAPHIPHOP | a007204 | 78.6 | 80.8 | 70.8 | 75.4 | 75.4 | 65.0 | 82.8 | 46.8 | 70.4 | 65.4 | 80.6 | 81.6 | 80.0 | 33.2 | 32.8 | 27.4 | 58.4 | 76.6 |

| RAPHIPHOP | a008101 | 68.4 | 73.2 | 63.8 | 57.8 | 51.0 | 11.0 | 49.8 | 69.2 | 63.2 | 66.4 | 71.2 | 71.0 | 68.4 | 45.8 | 31.2 | 55.0 | 54.8 | 68.2 |

| RAPHIPHOP | b001529 | 61.0 | 53.0 | 52.0 | 53.4 | 47.4 | 4.2 | 44.2 | 56.0 | 18.0 | 43.0 | 43.0 | 36.0 | 57.0 | 24.0 | 54.0 | 12.2 | 66.0 | 36.0 |

| RAPHIPHOP | b009504 | 57.0 | 59.0 | 50.0 | 67.0 | 67.0 | 13.2 | 54.4 | 56.4 | 63.0 | 74.2 | 61.6 | 43.6 | 48.8 | 6.2 | 3.0 | 57.4 | 60.6 | 60.2 |

| RAPHIPHOP | b012102 | 39.0 | 59.6 | 47.0 | 62.2 | 62.2 | 29.6 | 38.4 | 62.0 | 65.6 | 81.6 | 59.8 | 74.2 | 59.0 | 63.0 | 29.6 | 45.6 | 25.8 | 71.0 |

| RAPHIPHOP | b018710 | 41.6 | 69.0 | 48.0 | 71.6 | 64.2 | 26.6 | 32.4 | 59.0 | 67.2 | 59.0 | 66.0 | 69.4 | 77.0 | 26.0 | 32.2 | 65.2 | 42.8 | 46.0 |

| ROCKROLL | a003466 | 10.2 | 9.6 | 28.8 | 8.6 | 8.6 | 32.6 | 17.8 | 25.2 | 18.4 | 9.4 | 25.6 | 38.2 | 20.6 | 24.2 | 24.4 | 22.4 | 18.8 | 20.2 |

| ROCKROLL | b000979 | 29.0 | 41.0 | 28.0 | 38.0 | 50.0 | 0.0 | 23.0 | 49.0 | 36.0 | 30.0 | 44.0 | 63.0 | 37.0 | 18.0 | 14.0 | 8.0 | 16.0 | 58.0 |

| ROCKROLL | b001750 | 32.0 | 30.0 | 29.0 | 41.0 | 41.0 | 38.0 | 36.0 | 42.0 | 49.0 | 49.0 | 32.0 | 38.0 | 51.0 | 24.0 | 30.0 | 14.0 | 42.0 | 28.0 |

| ROCKROLL | b005580 | 41.2 | 35.2 | 35.8 | 31.6 | 31.6 | 27.2 | 22.0 | 41.0 | 27.4 | 18.6 | 32.6 | 33.8 | 36.4 | 34.4 | 24.0 | 2.6 | 23.8 | 36.8 |

| ROCKROLL | b008059 | 48.0 | 41.2 | 37.2 | 36.4 | 36.4 | 30.0 | 8.4 | 8.2 | 17.6 | 9.2 | 51.6 | 63.8 | 37.8 | 12.2 | 21.2 | 11.4 | 11.8 | 30.0 |

| ROCKROLL | b008990 | 48.4 | 70.4 | 46.2 | 44.2 | 44.2 | 38.8 | 25.4 | 41.6 | 29.0 | 38.0 | 54.6 | 64.6 | 46.0 | 32.2 | 24.0 | 1.6 | 43.4 | 51.2 |

| ROCKROLL | b011113 | 36.0 | 36.4 | 36.2 | 38.0 | 37.8 | 25.8 | 32.6 | 37.6 | 34.6 | 27.8 | 33.0 | 38.4 | 36.8 | 27.4 | 37.6 | 23.8 | 38.0 | 39.4 |

| ROCKROLL | b016165 | 66.8 | 69.8 | 60.4 | 57.6 | 59.2 | 49.8 | 54.4 | 46.6 | 29.0 | 45.4 | 61.0 | 59.2 | 49.8 | 31.4 | 12.6 | 30.0 | 57.8 | 73.6 |

| ROCKROLL | b017586 | 55.0 | 55.0 | 57.0 | 47.0 | 53.0 | 53.0 | 49.0 | 51.0 | 49.0 | 48.0 | 58.0 | 60.0 | 51.0 | 21.0 | 25.0 | 18.0 | 47.0 | 47.0 |

| ROCKROLL | b019928 | 49.8 | 50.2 | 55.2 | 59.4 | 55.4 | 29.2 | 31.8 | 44.2 | 40.0 | 50.2 | 49.2 | 50.0 | 52.2 | 37.4 | 51.4 | 42.2 | 43.8 | 53.8 |

| ROMANTIC | d005834 | 58.6 | 48.4 | 62.0 | 46.2 | 46.2 | 23.4 | 5.6 | 17.6 | 29.0 | 22.8 | 55.8 | 59.8 | 52.2 | 7.6 | 10.8 | 43.4 | 29.2 | 50.0 |

| ROMANTIC | d009949 | 67.0 | 64.8 | 59.8 | 65.2 | 65.2 | 50.6 | 59.8 | 62.0 | 62.0 | 62.0 | 67.4 | 68.4 | 65.4 | 58.4 | 47.4 | 20.6 | 35.6 | 69.4 |

| ROMANTIC | d010217 | 43.6 | 42.6 | 50.2 | 36.2 | 36.2 | 29.4 | 39.6 | 44.4 | 34.2 | 34.2 | 52.2 | 37.8 | 43.0 | 39.4 | 30.0 | 17.2 | 32.4 | 32.4 |

| ROMANTIC | d012214 | 66.0 | 68.4 | 61.0 | 65.2 | 64.0 | 55.0 | 53.2 | 55.6 | 58.4 | 55.2 | 63.8 | 66.0 | 70.6 | 34.0 | 43.0 | 46.0 | 45.0 | 69.2 |

| ROMANTIC | d015538 | 82.6 | 85.2 | 89.6 | 82.4 | 86.4 | 44.2 | 57.2 | 60.8 | 69.0 | 73.6 | 85.0 | 84.6 | 85.2 | 67.4 | 34.6 | 50.6 | 80.8 | 88.0 |

| ROMANTIC | d016565 | 46.0 | 54.0 | 45.0 | 33.0 | 37.0 | 25.0 | 24.0 | 22.0 | 29.0 | 18.0 | 48.0 | 42.0 | 47.0 | 54.0 | 29.0 | 7.0 | 15.0 | 41.0 |

| ROMANTIC | d017427 | 94.6 | 91.0 | 94.0 | 91.6 | 91.6 | 79.2 | 71.8 | 54.0 | 53.4 | 51.2 | 94.8 | 92.0 | 94.6 | 78.6 | 47.2 | 36.8 | 39.8 | 84.2 |

| ROMANTIC | d017784 | 69.0 | 74.0 | 69.0 | 73.0 | 73.0 | 68.0 | 71.0 | 47.0 | 66.0 | 51.0 | 75.0 | 67.0 | 77.0 | 87.0 | 63.0 | 85.0 | 33.0 | 73.0 |

| ROMANTIC | d018721 | 47.6 | 56.2 | 45.0 | 32.2 | 32.2 | 11.2 | 61.4 | 46.8 | 52.4 | 26.0 | 51.4 | 46.6 | 58.4 | 12.0 | 20.0 | 16.2 | 6.8 | 57.8 |

| ROMANTIC | d019573 | 73.2 | 77.4 | 75.0 | 65.0 | 67.6 | 16.2 | 51.4 | 66.4 | 50.8 | 74.2 | 75.0 | 72.0 | 72.6 | 51.4 | 26.0 | 27.6 | 36.8 | 65.0 |

BROAD Scores

These are the mean BROAD scores per query assigned by Evalutron graders. For each query, the BROAD scores of the 5 candidates returned by each algorithm have been averaged. Values range from 0 (not similar) to 2 (very similar), so a perfect score is 2. Genre labels are included for reference.

| Genre | Query | CTCP1 | CTCP2 | CTCP3 | DM2 | DM3 | GKC1 | HKHLL1 | ML1 | ML2 | ML3 | PS1 | SSKS3 | SSPK2 | STBD1 | STBD2 | STBD3 | YL1 | ZYC2 |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| BAROQUE | d006677 | 2.0 | 2.0 | 2.0 | 2.0 | 2.0 | 1.0 | 1.6 | 1.6 | 1.8 | 1.6 | 2.0 | 2.0 | 2.0 | 1.2 | 1.4 | 1.2 | 2.0 | 1.8 |

| BAROQUE | d006696 | 1.6 | 2.0 | 1.6 | 1.8 | 1.8 | 1.0 | 1.8 | 1.8 | 1.8 | 1.6 | 1.8 | 1.6 | 1.8 | 0.6 | 0.8 | 0.2 | 1.0 | 1.6 |

| BAROQUE | d007449 | 1.6 | 2.0 | 1.6 | 2.0 | 2.0 | 1.0 | 1.0 | 1.6 | 1.6 | 1.8 | 2.0 | 2.0 | 2.0 | 1.4 | 1.6 | 1.2 | 0.0 | 1.8 |

| BAROQUE | d008986 | 1.8 | 2.0 | 1.4 | 1.8 | 1.8 | 0.2 | 1.4 | 1.8 | 1.4 | 1.8 | 1.8 | 1.8 | 2.0 | 0.4 | 0.0 | 0.4 | 0.0 | 1.2 |

| BAROQUE | d012398 | 1.6 | 1.8 | 1.6 | 1.8 | 1.8 | 1.6 | 1.4 | 1.2 | 1.8 | 2.0 | 1.8 | 2.0 | 1.8 | 1.6 | 1.2 | 0.8 | 2.0 | 1.6 |

| BAROQUE | d013785 | 0.4 | 0.2 | 0.4 | 0.0 | 0.0 | 0.2 | 0.0 | 0.0 | 0.0 | 0.0 | 0.2 | 0.2 | 0.8 | 0.0 | 0.4 | 0.0 | 0.0 | 0.4 |

| BAROQUE | d014514 | 1.8 | 1.6 | 1.8 | 0.4 | 0.4 | 0.0 | 1.6 | 1.6 | 1.8 | 1.8 | 1.6 | 1.6 | 1.6 | 0.2 | 0.2 | 0.0 | 1.0 | 1.6 |

| BAROQUE | d014776 | 1.4 | 1.2 | 1.4 | 1.4 | 1.4 | 0.4 | 1.0 | 1.0 | 0.6 | 0.6 | 1.4 | 1.6 | 1.8 | 0.4 | 0.6 | 0.4 | 1.0 | 1.6 |

| BAROQUE | d015410 | 0.0 | 0.2 | 0.0 | 0.0 | 0.2 | 0.4 | 0.0 | 0.0 | 0.0 | 0.0 | 0.4 | 0.4 | 0.4 | 0.4 | 0.4 | 0.4 | 0.2 | 0.6 |

| BAROQUE | d016296 | 1.6 | 1.4 | 0.8 | 1.2 | 1.2 | 0.2 | 0.6 | 1.2 | 1.2 | 1.6 | 1.6 | 1.0 | 1.2 | 0.2 | 0.0 | 1.2 | 1.2 | 1.2 |

| BLUES | e003498 | 0.2 | 0.2 | 0.2 | 0.0 | 0.0 | 0.2 | 0.0 | 0.2 | 0.0 | 0.0 | 0.4 | 0.2 | 0.0 | 0.4 | 0.2 | 0.0 | 0.2 | 0.2 |

| BLUES | e006591 | 0.6 | 0.6 | 0.8 | 0.6 | 0.6 | 0.0 | 0.6 | 0.8 | 0.6 | 1.0 | 1.2 | 0.8 | 1.0 | 0.6 | 0.0 | 1.0 | 1.0 | 0.4 |

| BLUES | e006594 | 2.0 | 1.6 | 2.0 | 2.0 | 2.0 | 0.0 | 2.0 | 1.8 | 0.8 | 1.6 | 2.0 | 2.0 | 2.0 | 0.8 | 1.0 | 0.8 | 1.8 | 1.0 |

| BLUES | e007332 | 1.8 | 1.8 | 2.0 | 1.4 | 1.2 | 0.4 | 1.6 | 1.8 | 1.6 | 1.8 | 1.8 | 1.8 | 2.0 | 0.8 | 1.2 | 0.8 | 1.4 | 2.0 |

| BLUES | e010207 | 1.4 | 1.2 | 1.2 | 1.2 | 1.2 | 0.4 | 0.4 | 0.4 | 0.4 | 0.4 | 2.0 | 1.6 | 1.4 | 0.2 | 0.2 | 1.2 | 1.0 | 0.8 |

| BLUES | e010715 | 1.6 | 1.4 | 1.8 | 1.4 | 1.4 | 0.2 | 0.6 | 1.4 | 0.8 | 1.6 | 1.8 | 1.8 | 1.6 | 1.0 | 1.2 | 0.4 | 1.0 | 0.0 |

| BLUES | e012145 | 1.6 | 1.6 | 1.6 | 1.0 | 1.0 | 0.8 | 0.8 | 0.6 | 0.0 | 1.0 | 1.6 | 1.2 | 1.0 | 1.0 | 0.8 | 0.2 | 1.4 | 1.0 |

| BLUES | e012250 | 1.6 | 1.8 | 1.8 | 1.6 | 1.6 | 1.2 | 1.6 | 0.6 | 1.2 | 1.4 | 2.0 | 1.6 | 1.6 | 0.4 | 0.8 | 0.6 | 0.8 | 1.6 |

| BLUES | e012576 | 0.8 | 0.6 | 0.2 | 0.2 | 0.4 | 1.0 | 0.4 | 0.2 | 0.0 | 0.0 | 1.4 | 0.6 | 0.4 | 0.6 | 1.2 | 1.0 | 1.4 | 0.8 |

| BLUES | e015955 | 2.0 | 1.8 | 2.0 | 1.6 | 1.6 | 0.4 | 1.2 | 0.6 | 1.0 | 1.0 | 2.0 | 2.0 | 2.0 | 0.4 | 0.6 | 0.8 | 1.0 | 1.0 |

| CLASSICAL | d002664 | 0.6 | 1.0 | 0.8 | 0.6 | 0.6 | 0.4 | 0.4 | 1.0 | 1.0 | 0.4 | 0.8 | 0.6 | 0.6 | 0.0 | 0.0 | 0.0 | 0.8 | 0.2 |

| CLASSICAL | d008359 | 1.8 | 1.8 | 1.4 | 1.6 | 1.6 | 0.6 | 1.8 | 1.2 | 1.4 | 1.4 | 2.0 | 1.6 | 2.0 | 0.4 | 0.4 | 0.2 | 1.6 | 1.2 |

| CLASSICAL | d008847 | 1.2 | 1.0 | 1.2 | 1.4 | 1.4 | 1.0 | 1.0 | 1.0 | 1.0 | 0.8 | 1.0 | 1.2 | 1.2 | 0.6 | 0.4 | 1.0 | 0.2 | 1.0 |

| CLASSICAL | d009363 | 0.8 | 0.6 | 0.8 | 0.8 | 1.0 | 0.8 | 0.8 | 1.0 | 1.0 | 1.0 | 1.2 | 0.8 | 0.8 | 1.0 | 0.8 | 1.0 | 1.2 | 1.0 |

| CLASSICAL | d010273 | 2.0 | 2.0 | 2.0 | 2.0 | 1.8 | 0.8 | 1.8 | 2.0 | 2.0 | 2.0 | 2.0 | 2.0 | 1.6 | 1.4 | 0.0 | 1.0 | 2.0 | 2.0 |

| CLASSICAL | d012347 | 2.0 | 1.8 | 2.0 | 2.0 | 2.0 | 0.0 | 1.8 | 1.8 | 1.8 | 2.0 | 2.0 | 2.0 | 2.0 | 0.0 | 0.0 | 1.4 | 1.4 | 1.2 |

| CLASSICAL | d013474 | 1.6 | 2.0 | 1.8 | 2.0 | 2.0 | 1.6 | 1.6 | 1.8 | 1.6 | 1.2 | 1.8 | 1.8 | 2.0 | 0.8 | 0.8 | 1.0 | 1.2 | 1.6 |

| CLASSICAL | d013555 | 0.0 | 0.0 | 0.4 | 0.2 | 0.2 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.2 | 0.2 | 0.0 | 0.0 | 0.0 | 0.2 | 0.0 |

| CLASSICAL | d013745 | 2.0 | 2.0 | 2.0 | 1.6 | 1.6 | 0.2 | 2.0 | 2.0 | 1.8 | 2.0 | 2.0 | 2.0 | 2.0 | 1.6 | 0.4 | 1.2 | 1.6 | 1.8 |

| CLASSICAL | d019428 | 1.8 | 1.8 | 1.6 | 1.6 | 1.6 | 0.6 | 0.6 | 1.2 | 1.4 | 1.2 | 1.6 | 1.6 | 1.4 | 1.4 | 1.0 | 0.0 | 1.0 | 1.2 |

| COUNTRY | b007754 | 0.8 | 1.0 | 0.4 | 0.8 | 1.0 | 0.0 | 0.0 | 0.6 | 0.6 | 0.4 | 0.8 | 0.4 | 0.8 | 0.4 | 0.4 | 0.2 | 1.0 | 0.4 |

| COUNTRY | e000004 | 1.2 | 1.4 | 1.0 | 0.4 | 0.4 | 0.2 | 1.2 | 0.2 | 0.6 | 0.4 | 0.2 | 1.4 | 1.6 | 0.2 | 0.2 | 0.0 | 0.8 | 0.2 |

| COUNTRY | e005074 | 1.4 | 1.8 | 1.6 | 1.0 | 0.8 | 0.8 | 1.8 | 0.6 | 0.8 | 1.4 | 1.2 | 1.2 | 1.4 | 0.4 | 0.8 | 0.0 | 0.8 | 1.6 |

| COUNTRY | e006998 | 1.6 | 1.6 | 1.2 | 0.4 | 0.6 | 0.2 | 0.6 | 0.8 | 0.4 | 0.4 | 1.4 | 1.6 | 1.2 | 0.0 | 0.4 | 0.2 | 0.4 | 0.8 |

| COUNTRY | e008019 | 1.8 | 1.8 | 1.6 | 1.4 | 1.4 | 1.0 | 1.2 | 1.6 | 1.2 | 1.6 | 2.0 | 1.6 | 1.6 | 1.4 | 0.4 | 0.0 | 1.4 | 1.8 |

| COUNTRY | e010395 | 1.2 | 1.0 | 1.0 | 1.0 | 1.0 | 0.2 | 0.4 | 0.4 | 0.6 | 0.4 | 1.2 | 0.8 | 1.4 | 0.6 | 0.4 | 0.2 | 0.2 | 0.8 |

| COUNTRY | e012084 | 1.2 | 1.2 | 1.6 | 1.4 | 1.2 | 0.2 | 1.4 | 1.0 | 1.0 | 1.0 | 1.6 | 1.2 | 1.4 | 1.0 | 0.6 | 1.2 | 1.2 | 1.2 |

| COUNTRY | e014772 | 1.0 | 1.0 | 1.0 | 1.0 | 1.2 | 0.6 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 1.2 | 1.0 | 1.0 | 1.0 | 1.0 | 1.2 |

| COUNTRY | e019603 | 1.2 | 1.2 | 1.0 | 1.2 | 1.2 | 0.2 | 0.6 | 0.8 | 1.0 | 0.6 | 0.8 | 1.0 | 1.4 | 0.4 | 0.2 | 0.0 | 1.0 | 0.8 |

| COUNTRY | e019738 | 1.6 | 1.2 | 1.8 | 1.6 | 1.6 | 0.6 | 1.4 | 1.0 | 1.2 | 1.2 | 1.2 | 1.4 | 1.6 | 0.4 | 1.0 | 0.4 | 1.0 | 1.6 |

| EDANCE | a005135 | 1.0 | 1.2 | 1.2 | 0.4 | 0.4 | 0.8 | 0.2 | 0.0 | 0.4 | 1.2 | 0.6 | 0.8 | 1.0 | 1.4 | 0.8 | 0.4 | 1.0 | 0.0 |

| EDANCE | a005589 | 1.6 | 1.0 | 0.8 | 0.6 | 0.6 | 0.6 | 0.0 | 0.8 | 0.4 | 1.2 | 1.0 | 0.6 | 1.0 | 0.2 | 0.6 | 0.0 | 1.2 | 0.8 |

| EDANCE | a007506 | 1.2 | 1.0 | 1.4 | 0.8 | 0.8 | 1.6 | 0.0 | 0.4 | 0.6 | 0.0 | 1.0 | 1.2 | 1.4 | 1.0 | 0.4 | 0.0 | 0.6 | 1.0 |

| EDANCE | b003709 | 0.8 | 0.8 | 0.8 | 1.2 | 1.2 | 0.4 | 0.0 | 0.6 | 0.8 | 0.8 | 1.0 | 0.8 | 1.0 | 0.6 | 0.2 | 0.8 | 0.2 | 0.2 |

| EDANCE | b005745 | 0.6 | 0.4 | 0.6 | 0.6 | 0.6 | 0.2 | 0.6 | 0.6 | 0.4 | 0.6 | 0.8 | 0.8 | 0.6 | 0.0 | 0.2 | 0.2 | 0.4 | 0.6 |

| EDANCE | b006254 | 0.6 | 0.6 | 1.0 | 0.0 | 0.0 | 1.0 | 0.4 | 0.0 | 0.2 | 0.0 | 0.4 | 1.0 | 1.0 | 0.0 | 0.2 | 0.0 | 0.6 | 0.0 |

| EDANCE | b011836 | 0.6 | 0.8 | 0.8 | 0.6 | 0.8 | 0.8 | 0.2 | 1.0 | 0.6 | 0.6 | 0.4 | 1.0 | 1.2 | 0.0 | 0.8 | 0.4 | 0.2 | 0.2 |

| EDANCE | b016602 | 1.6 | 1.4 | 1.4 | 0.8 | 1.2 | 1.2 | 0.8 | 0.6 | 1.4 | 0.8 | 1.2 | 1.4 | 1.0 | 0.6 | 0.4 | 1.2 | 1.2 | 0.6 |

| EDANCE | f003218 | 1.8 | 1.8 | 1.8 | 1.8 | 1.8 | 1.8 | 0.8 | 1.6 | 1.8 | 1.8 | 2.0 | 1.6 | 2.0 | 0.6 | 1.2 | 1.6 | 1.2 | 1.0 |

| EDANCE | f019182 | 0.4 | 1.0 | 0.2 | 0.8 | 0.4 | 0.8 | 0.0 | 0.0 | 0.2 | 0.2 | 0.4 | 1.0 | 0.0 | 0.4 | 0.4 | 0.0 | 1.6 | 0.4 |

| JAZZ | a006733 | 2.0 | 2.0 | 1.6 | 0.0 | 0.0 | 0.6 | 0.0 | 0.0 | 0.0 | 0.2 | 1.4 | 2.0 | 2.0 | 0.2 | 0.6 | 1.0 | 0.4 | 0.2 |

| JAZZ | a009161 | 1.2 | 1.2 | 1.2 | 0.8 | 0.6 | 0.6 | 1.2 | 1.2 | 1.0 | 1.0 | 0.8 | 1.4 | 1.8 | 0.6 | 0.2 | 0.2 | 1.4 | 1.4 |

| JAZZ | e004616 | 1.4 | 1.6 | 1.6 | 1.8 | 1.8 | 1.2 | 1.6 | 1.6 | 1.6 | 1.6 | 1.8 | 2.0 | 2.0 | 1.4 | 1.4 | 1.0 | 1.4 | 2.0 |

| JAZZ | e004656 | 2.0 | 2.0 | 1.8 | 1.4 | 1.4 | 0.4 | 1.6 | 1.8 | 2.0 | 1.8 | 1.6 | 2.0 | 1.8 | 0.8 | 0.8 | 0.6 | 1.6 | 2.0 |

| JAZZ | e008805 | 1.6 | 1.4 | 1.4 | 0.4 | 0.0 | 0.4 | 0.0 | 1.0 | 1.0 | 0.8 | 1.0 | 1.2 | 0.8 | 0.0 | 0.2 | 0.2 | 1.0 | 0.4 |

| JAZZ | e009003 | 2.0 | 2.0 | 2.0 | 1.6 | 1.6 | 1.2 | 1.0 | 1.6 | 2.0 | 1.8 | 2.0 | 2.0 | 2.0 | 0.8 | 1.2 | 1.6 | 0.2 | 2.0 |

| JAZZ | e009926 | 0.0 | 0.0 | 0.2 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.2 | 0.0 | 0.0 | 0.2 | 0.0 | 0.0 | 0.0 | 0.0 |

| JAZZ | e010314 | 2.0 | 2.0 | 2.0 | 0.2 | 0.2 | 0.6 | 0.4 | 0.8 | 1.2 | 0.8 | 1.2 | 1.8 | 1.6 | 0.8 | 0.2 | 0.6 | 0.0 | 1.4 |

| JAZZ | e010700 | 1.6 | 1.8 | 1.4 | 0.2 | 0.2 | 0.4 | 1.0 | 1.2 | 0.6 | 0.6 | 0.6 | 1.4 | 1.0 | 0.0 | 0.2 | 0.4 | 0.0 | 0.6 |

| JAZZ | e012958 | 0.4 | 0.4 | 0.0 | 0.0 | 0.0 | 0.4 | 0.0 | 0.0 | 0.4 | 0.0 | 0.2 | 0.0 | 0.0 | 0.4 | 0.2 | 0.0 | 0.0 | 0.0 |

| METAL | a000907 | 0.2 | 0.4 | 0.6 | 0.4 | 0.6 | 0.2 | 0.4 | 0.2 | 0.0 | 0.2 | 0.2 | 0.4 | 0.6 | 0.0 | 0.0 | 0.2 | 0.2 | 0.4 |

| METAL | b001064 | 1.8 | 2.0 | 1.8 | 0.8 | 0.8 | 0.4 | 1.0 | 0.6 | 1.6 | 1.6 | 1.0 | 2.0 | 1.8 | 0.0 | 0.2 | 1.2 | 1.4 | 1.0 |

| METAL | b005963 | 1.8 | 1.8 | 2.0 | 1.8 | 1.6 | 1.8 | 1.2 | 1.6 | 1.4 | 1.8 | 2.0 | 1.8 | 1.4 | 0.8 | 1.0 | 1.4 | 1.6 | 1.8 |

| METAL | b007455 | 1.4 | 1.4 | 1.2 | 1.2 | 1.4 | 0.0 | 1.0 | 1.4 | 1.4 | 1.2 | 1.6 | 0.6 | 1.2 | 0.6 | 0.4 | 0.0 | 1.2 | 1.2 |

| METAL | b008450 | 1.2 | 1.2 | 1.4 | 1.6 | 1.6 | 0.4 | 0.8 | 1.8 | 1.6 | 1.6 | 0.8 | 1.0 | 0.6 | 0.2 | 0.4 | 0.6 | 1.2 | 1.8 |

| METAL | b009939 | 0.4 | 0.2 | 0.6 | 0.2 | 0.0 | 0.2 | 0.2 | 0.8 | 0.6 | 0.4 | 0.6 | 0.8 | 1.0 | 0.4 | 0.2 | 0.4 | 0.2 | 0.8 |

| METAL | b012964 | 0.8 | 0.6 | 0.4 | 0.8 | 0.8 | 0.2 | 1.2 | 1.0 | 0.6 | 0.6 | 0.8 | 0.8 | 0.8 | 0.4 | 0.2 | 0.0 | 0.2 | 1.0 |

| METAL | b019036 | 0.6 | 0.8 | 1.0 | 1.0 | 1.0 | 0.6 | 0.6 | 1.2 | 1.0 | 1.0 | 1.0 | 1.0 | 0.8 | 0.8 | 0.6 | 0.4 | 1.0 | 0.4 |

| METAL | f007939 | 1.2 | 0.8 | 1.4 | 1.2 | 1.0 | 0.6 | 1.4 | 1.4 | 1.4 | 1.2 | 1.2 | 0.6 | 1.4 | 0.2 | 0.0 | 0.0 | 1.0 | 1.4 |

| METAL | f015638 | 1.6 | 1.8 | 2.0 | 1.4 | 1.4 | 0.0 | 1.2 | 1.4 | 1.2 | 1.2 | 1.8 | 2.0 | 2.0 | 0.4 | 0.2 | 0.6 | 1.4 | 1.4 |

| RAPHIPHOP | a000184 | 1.0 | 1.4 | 0.8 | 1.4 | 1.4 | 0.0 | 1.4 | 1.4 | 1.4 | 1.4 | 1.8 | 1.8 | 1.6 | 1.8 | 1.2 | 0.2 | 1.0 | 1.2 |

| RAPHIPHOP | a000369 | 1.2 | 1.6 | 1.6 | 1.4 | 1.0 | 0.4 | 0.8 | 1.4 | 1.6 | 1.4 | 1.8 | 1.6 | 1.8 | 1.4 | 1.6 | 1.6 | 1.4 | 1.8 |

| RAPHIPHOP | a002853 | 1.2 | 1.4 | 0.4 | 1.4 | 1.4 | 0.8 | 1.6 | 1.2 | 2.0 | 1.6 | 1.6 | 1.6 | 1.6 | 0.8 | 0.2 | 1.4 | 0.6 | 1.0 |

| RAPHIPHOP | a006408 | 2.0 | 2.0 | 2.0 | 2.0 | 2.0 | 1.2 | 2.0 | 2.0 | 2.0 | 2.0 | 2.0 | 2.0 | 2.0 | 2.0 | 1.8 | 2.0 | 2.0 | 2.0 |

| RAPHIPHOP | a007204 | 1.8 | 1.8 | 1.4 | 2.0 | 2.0 | 1.4 | 2.0 | 0.8 | 1.6 | 1.4 | 1.8 | 2.0 | 1.8 | 0.6 | 0.6 | 0.2 | 1.4 | 2.0 |

| RAPHIPHOP | a008101 | 2.0 | 2.0 | 1.8 | 1.4 | 1.2 | 0.0 | 1.2 | 2.0 | 1.8 | 1.8 | 2.0 | 2.0 | 2.0 | 1.2 | 0.8 | 1.4 | 1.6 | 1.8 |

| RAPHIPHOP | b001529 | 1.4 | 1.2 | 1.0 | 1.2 | 1.0 | 0.0 | 0.8 | 1.2 | 0.2 | 0.8 | 0.8 | 0.8 | 1.4 | 0.2 | 1.2 | 0.2 | 1.4 | 0.8 |

| RAPHIPHOP | b009504 | 1.2 | 1.2 | 1.0 | 1.6 | 1.6 | 0.2 | 1.2 | 1.0 | 1.4 | 1.6 | 1.0 | 0.8 | 0.8 | 0.0 | 0.0 | 1.2 | 1.2 | 1.2 |

| RAPHIPHOP | b012102 | 0.8 | 1.2 | 1.0 | 1.4 | 1.4 | 0.6 | 0.8 | 1.2 | 1.4 | 2.0 | 1.4 | 1.8 | 1.2 | 1.4 | 0.8 | 0.8 | 0.6 | 1.6 |

| RAPHIPHOP | b018710 | 0.8 | 1.8 | 1.0 | 1.8 | 1.6 | 0.0 | 0.4 | 1.6 | 1.8 | 1.4 | 1.6 | 1.8 | 2.0 | 0.4 | 0.4 | 1.6 | 0.8 | 1.0 |

| ROCKROLL | a003466 | 0.0 | 0.0 | 0.4 | 0.0 | 0.0 | 0.6 | 0.2 | 0.4 | 0.2 | 0.0 | 0.2 | 0.8 | 0.2 | 0.6 | 0.4 | 0.4 | 0.2 | 0.2 |

| ROCKROLL | b000979 | 0.4 | 0.8 | 0.4 | 0.6 | 0.8 | 0.0 | 0.2 | 0.8 | 0.6 | 0.4 | 0.8 | 1.2 | 0.6 | 0.2 | 0.2 | 0.0 | 0.2 | 1.4 |

| ROCKROLL | b001750 | 0.2 | 0.2 | 0.2 | 0.4 | 0.4 | 0.4 | 0.4 | 0.4 | 0.6 | 0.6 | 0.2 | 0.4 | 0.8 | 0.2 | 0.4 | 0.0 | 0.6 | 0.2 |

| ROCKROLL | b005580 | 1.0 | 0.8 | 0.8 | 0.6 | 0.6 | 0.2 | 0.6 | 1.0 | 0.6 | 0.4 | 0.8 | 0.8 | 0.8 | 0.6 | 0.4 | 0.0 | 0.2 | 0.8 |

| ROCKROLL | b008059 | 1.2 | 1.2 | 1.2 | 1.0 | 1.0 | 0.8 | 0.4 | 0.6 | 1.0 | 0.6 | 1.2 | 1.6 | 1.0 | 0.6 | 1.0 | 0.2 | 0.4 | 1.0 |

| ROCKROLL | b008990 | 1.0 | 1.4 | 0.8 | 1.0 | 1.0 | 0.8 | 0.2 | 0.8 | 0.6 | 0.8 | 1.0 | 1.2 | 1.0 | 0.6 | 0.4 | 0.0 | 0.8 | 1.0 |

| ROCKROLL | b011113 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 0.4 | 0.8 | 1.0 | 0.8 | 0.6 | 0.8 | 1.0 | 1.0 | 0.6 | 1.0 | 0.4 | 1.0 | 1.0 |

| ROCKROLL | b016165 | 1.2 | 1.4 | 1.2 | 1.2 | 1.2 | 1.0 | 1.2 | 0.8 | 0.6 | 0.8 | 1.0 | 1.2 | 1.2 | 0.6 | 0.2 | 0.6 | 1.2 | 1.6 |

| ROCKROLL | b017586 | 1.2 | 1.2 | 1.2 | 1.0 | 1.0 | 1.4 | 0.8 | 1.0 | 1.0 | 1.0 | 1.4 | 1.2 | 1.2 | 0.0 | 0.6 | 0.4 | 0.8 | 0.6 |

| ROCKROLL | b019928 | 1.2 | 1.4 | 1.4 | 1.2 | 1.2 | 0.4 | 0.4 | 0.8 | 0.6 | 1.0 | 1.2 | 1.0 | 1.2 | 0.4 | 1.0 | 0.8 | 0.8 | 1.0 |

| ROMANTIC | d005834 | 1.2 | 1.2 | 1.4 | 1.2 | 1.2 | 0.6 | 0.0 | 0.2 | 0.4 | 0.2 | 1.4 | 1.4 | 1.2 | 0.0 | 0.0 | 0.6 | 0.8 | 1.0 |

| ROMANTIC | d009949 | 1.8 | 1.4 | 1.2 | 1.4 | 1.4 | 1.2 | 1.4 | 1.4 | 1.4 | 1.4 | 1.8 | 1.4 | 1.4 | 1.2 | 1.2 | 0.2 | 0.6 | 1.8 |

| ROMANTIC | d010217 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 0.6 | 1.0 | 1.0 | 0.8 | 0.8 | 1.2 | 1.0 | 1.2 | 1.0 | 1.0 | 0.4 | 0.8 | 1.2 |

| ROMANTIC | d012214 | 1.6 | 1.6 | 1.2 | 1.6 | 1.4 | 1.2 | 1.0 | 1.2 | 1.2 | 1.0 | 1.2 | 1.6 | 2.0 | 0.6 | 0.8 | 0.8 | 0.8 | 1.8 |

| ROMANTIC | d015538 | 2.0 | 2.0 | 2.0 | 1.8 | 2.0 | 1.0 | 1.2 | 1.4 | 1.2 | 1.6 | 2.0 | 2.0 | 1.8 | 1.4 | 0.6 | 0.8 | 1.8 | 2.0 |

| ROMANTIC | d016565 | 0.8 | 1.0 | 0.6 | 0.6 | 0.6 | 0.4 | 0.4 | 0.2 | 0.4 | 0.2 | 1.0 | 0.6 | 0.8 | 0.8 | 0.2 | 0.0 | 0.2 | 0.8 |

| ROMANTIC | d017427 | 2.0 | 2.0 | 2.0 | 2.0 | 2.0 | 1.4 | 1.2 | 1.0 | 0.8 | 0.8 | 2.0 | 2.0 | 2.0 | 1.2 | 0.8 | 0.8 | 0.8 | 1.6 |

| ROMANTIC | d017784 | 1.0 | 1.4 | 1.0 | 1.2 | 1.2 | 1.6 | 1.2 | 0.8 | 1.0 | 0.8 | 1.4 | 1.2 | 1.6 | 2.0 | 1.4 | 2.0 | 0.6 | 1.2 |

| ROMANTIC | d018721 | 1.2 | 1.4 | 1.2 | 0.8 | 0.8 | 0.4 | 1.6 | 1.2 | 1.2 | 0.8 | 1.6 | 1.2 | 1.4 | 0.6 | 0.6 | 0.4 | 0.2 | 1.4 |

| ROMANTIC | d019573 | 2.0 | 2.0 | 2.0 | 1.8 | 1.8 | 0.4 | 1.2 | 1.8 | 1.4 | 2.0 | 2.0 | 2.0 | 2.0 | 1.2 | 0.6 | 0.4 | 0.8 | 2.0 |
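
As a rough illustration of how each cell in the table above is derived, the sketch below averages the BROAD grades of the five candidates returned for one query by one system. The numeric mapping (NS = 0, SS = 1, VS = 2) is an assumption inferred from the 0 to 2 range stated above; the function name and the example grades are hypothetical and are not taken from the Evalutron data.

```python
# Assumed numeric mapping for the BROAD categories (inferred from the 0-2 range).
BROAD_VALUES = {"NS": 0, "SS": 1, "VS": 2}


def mean_broad_score(grades):
    """Average the BROAD grades given to one system's candidates for one query."""
    if not grades:
        raise ValueError("at least one grade is required")
    return sum(BROAD_VALUES[g] for g in grades) / len(grades)


# Hypothetical grades for the 5 candidates of a single query.
example = ["VS", "VS", "SS", "VS", "SS"]
print(round(mean_broad_score(example), 1))  # 1.6
```

With five candidates and integer grades, every mean is a multiple of 0.2, which matches the granularity of the values in the table.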

Raw Scores

The raw data derived from the Evalutron 6000 human evaluations are located on the 2011:Audio Music Similarity and Retrieval Raw Data page.

Metadata and Distance Space Evaluation

The following reports provide evaluation statistics based on analysis of the distance space and metadata matches and include:

- Neighbourhood clustering by artist, album and genre

- Artist-filtered genre clustering

- How often the triangle inequality holds

- Statistics on 'hubs' (tracks that are similar to many other tracks) and 'orphans' (tracks that are not similar to any other track at N results).
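
Two of the statistics listed above can be sketched on a small distance matrix. This is a minimal illustration under stated assumptions, not the code used to produce the reports: the triangle inequality is checked exhaustively over all ordered triples of distinct tracks, a track's hub count is taken to be the number of other tracks whose top-N result list contains it, and an orphan is taken to be a track with a hub count of zero. All function names and the 4-track matrix are hypothetical.

```python
from itertools import permutations


def triangle_inequality_rate(dist):
    """Fraction of ordered triples (i, j, k) with d(i,k) <= d(i,j) + d(j,k)."""
    triples = list(permutations(range(len(dist)), 3))
    holds = sum(1 for i, j, k in triples if dist[i][k] <= dist[i][j] + dist[j][k])
    return holds / len(triples)


def top_n_neighbours(dist, query, n):
    """Indices of the n tracks closest to `query`, excluding the query itself."""
    others = [t for t in range(len(dist)) if t != query]
    return sorted(others, key=lambda t: dist[query][t])[:n]


def hub_counts(dist, n):
    """For each track, count how many other tracks list it in their top n."""
    counts = [0] * len(dist)
    for query in range(len(dist)):
        for track in top_n_neighbours(dist, query, n):
            counts[track] += 1
    return counts


# Hypothetical symmetric distance matrix for 4 tracks.
dist = [
    [0.0, 1.0, 2.0, 8.0],
    [1.0, 0.0, 1.5, 7.0],
    [2.0, 1.5, 0.0, 9.0],
    [8.0, 7.0, 9.0, 0.0],
]

counts = hub_counts(dist, n=1)
orphans = [track for track, c in enumerate(counts) if c == 0]
print(counts)   # [1, 3, 0, 0] -> track 1 is a hub
print(orphans)  # [2, 3]
print(triangle_inequality_rate(dist))  # 22 of 24 triples hold
```

In this toy matrix the only violation is between tracks 2 and 3, whose distance (9.0) exceeds the path through track 1 (1.5 + 7.0). On a full 7000x7000 matrix an exhaustive check over all triples would be expensive, so a real evaluation might sample triples instead.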

Reports

BWL1 = Dmitry Bogdanov, Joan Serrà, Nicolas Wack, Perfecto Herrera

PS1 = Tim Pohle, Dominik Schnitzer

PSS1 = Tim Pohle, Klaus Seyerlehner, Dominik Schnitzer

RZ1 = Rainer Zufall

SSPK2 = Klaus Seyerlehner, Markus Schedl, Tim Pohle, Peter Knees

TLN1 = George Tzanetakis, Mathieu Lagrange, Steven Ness

TLN2 = George Tzanetakis, Mathieu Lagrange, Steven Ness

TLN3 = George Tzanetakis, Mathieu Lagrange, Steven Ness

Run Times

Run time data is not available: the file /nema-raid/www/mirex/results/2011/ams/audiosim.runtime.csv was not found.