Difference between revisions of "2014:GC14UX"

Kahyun Choi (→Wireframes)

(Update to newest version)


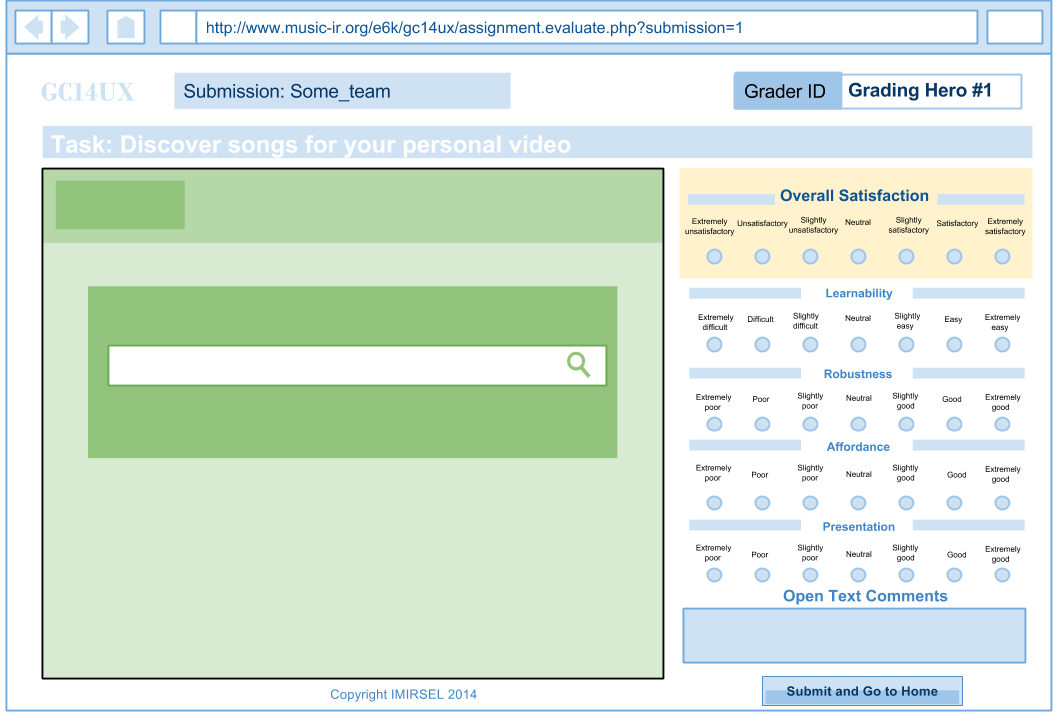


Revision as of 20:14, 30 June 2014


Welcome to GC14UX

Grand Challenge on User Experience 2014

Purpose

Holistic evaluation of user experience in interacting with user-serving MIR systems.

Goals

- to inspire the development of complete MIR systems.

- to promote the notion of user experience as a first-class research objective in the MIR community.

Dataset

A set of 10,000 music audio tracks will be provided for GC14UX. It will be a subset of the CC-BY licensed works in the Jamendo collection.

The Jamendo collection contains music in a variety of genres and moods, but most of it is unknown to most listeners. This should mitigate the user experience bias that could arise if popular or well-known music were present in some participating systems and absent from others.

As of May 20, 2014, the Jamendo collection contained 14,742 tracks under the CC-BY license. The CC-BY license allows others to distribute, modify, adapt, and build upon a work, even commercially, as long as they credit the original creation. It is one of the most permissive licenses available.

The 10,000 tracks in GC14UX will be sampled from the CC-BY licensed tracks in the Jamendo collection with the aim of maximizing musical variety, and will be made available for participants (system developers) to download and use in building their systems.
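The page does not specify how variety is maximized during sampling. The sketch below shows one possible approach, round-robin sampling across genres, assuming each track comes with a single genre label. The function name `sample_for_variety` and the `(track_id, genre)` input format are hypothetical and are not part of the GC14UX procedure.

```python
import random
from collections import defaultdict


def sample_for_variety(tracks, n, seed=0):
    """Pick up to n tracks, spreading picks as evenly as possible across genres.

    `tracks` is a list of (track_id, genre) pairs. The genre labels are an
    assumed input; the GC14UX page does not say which metadata is used.
    """
    rng = random.Random(seed)
    by_genre = defaultdict(list)
    for track_id, genre in tracks:
        by_genre[genre].append(track_id)
    # Shuffle within each genre so the choice inside a genre is random.
    for ids in by_genre.values():
        rng.shuffle(ids)
    genres = sorted(by_genre)
    picked = []
    # Round-robin over genres: take one track from each non-empty genre per
    # pass until n tracks are chosen or every genre is exhausted.
    while len(picked) < n and any(by_genre[g] for g in genres):
        for g in genres:
            if by_genre[g] and len(picked) < n:
                picked.append(by_genre[g].pop())
    return picked
```

With a pool of six rock, two jazz, and two folk tracks, asking for six tracks returns two of each genre rather than a rock-heavy sample that mirrors the pool.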

Potential Participants

| (Cool) Team Name | Name(s) | Email(s) |

|---|---|---|

| The MIR UX Master | Dr. MIR | mir@domain.com |

Participating Systems

Unlike conventional MIREX tasks, participants are not asked to submit their systems. Instead, the systems will be hosted by their developers. All participating systems need to be constructed as websites accessible to users through normal web browsers. Participating teams will submit the URLs to their systems to the GC14UX team.

To ensure a consistent experience, evaluators will see each participating system in a fixed-size window of 1024x768 pixels. Please test your system at this screen size.

Evaluation

Task

Evaluators are given the following task:

You are creating a short video about what you did this summer and you need to find some songs to use as background music.

Criteria

Note that the evaluation criteria and their descriptions may change in the months leading up to the submission deadline as we test and improve them.

- Overall satisfaction: Overall, how pleasurable do you find the experience of using this system?

Extremely unsatisfactory / Unsatisfactory / Slightly unsatisfactory / Neutral / Slightly satisfactory / Satisfactory / Extremely satisfactory

- Learnability: How easy was it to figure out how to use the system?

Extremely difficult / Difficult / Slightly difficult / Neutral / Slightly easy / Easy / Extremely easy

- Robustness: How good is the system’s ability to warn you when you’re about to make a mistake and allow you to recover?

Extremely Poor / Poor / Slightly Poor / Neutral / Slightly Good / Good / Extremely Good (or: Not Applicable)

- Affordances: How well does the system allow you to perform what you want to do?

- Presentation: How well does the system communicate what’s going on? (How well do you feel the system informs you of its status? Can you clearly understand the labels and words used in the system? How visible are all of your options and menus when you use this system?)

- Aesthetics: How good is the design? (Is it aesthetically pleasing?)

Extremely Poor / Poor / Slightly Poor / Neutral / Slightly Good / Good / Extremely Good

- Feedback (Optional): An open-ended question is provided for evaluators who wish to give additional feedback.

The questionnaire is deliberately simple for two reasons: 1) GC14UX is about how users perceive their experiences of the systems, and we intend to capture those perceptions in a minimally intrusive manner, without burdening evaluators with too many questions or required inputs; 2) additional data-capture steps would distract from the real user experience.
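To compute statistics, the labelled responses have to be turned into numbers. The page lists the response labels but no numeric values, so the 1 to 7 coding below is an assumption, as are the criterion keys and the `encode` helper. The sketch covers only the criteria whose response labels are listed above.

```python
# Response labels copied from the GC14UX criteria. The 1-7 numeric coding
# is an assumption for illustration, not part of the GC14UX specification.
SATISFACTION = ["Extremely unsatisfactory", "Unsatisfactory", "Slightly unsatisfactory",
                "Neutral", "Slightly satisfactory", "Satisfactory", "Extremely satisfactory"]
EASE = ["Extremely difficult", "Difficult", "Slightly difficult", "Neutral",
        "Slightly easy", "Easy", "Extremely easy"]
QUALITY = ["Extremely Poor", "Poor", "Slightly Poor", "Neutral",
           "Slightly Good", "Good", "Extremely Good"]

# Hypothetical criterion keys mapped to their response scales.
SCALES = {
    "overall_satisfaction": SATISFACTION,
    "learnability": EASE,
    "robustness": QUALITY,
    "aesthetics": QUALITY,
}
# Only Robustness lists a "Not Applicable" option.
ALLOWS_NA = {"robustness"}


def encode(criterion, label):
    """Map a response label to 1..7, or to None for 'Not Applicable'.

    Raises KeyError for an unknown criterion and ValueError for a label
    that is not on that criterion's scale.
    """
    if label == "Not Applicable":
        if criterion not in ALLOWS_NA:
            raise ValueError(f"{criterion} has no Not Applicable option")
        return None
    return SCALES[criterion].index(label) + 1
```

Keeping "Not Applicable" as `None` rather than a number stops it from being averaged in with real ratings.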

Evaluation mechanism

The GC14UX team will provide a set of evaluation forms that wrap around each participating system. In other words, the evaluation site will embed the participating system in an iframe and present the forms for scoring it alongside.
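A rough sketch of such a wrapper page follows. Only the iframe embedding and the 1024x768 size come from this page. The `render_evaluation_page` helper, the `/submit-scores` endpoint, and the form fields are hypothetical.

```python
from html import escape


def render_evaluation_page(system_url, width=1024, height=768):
    """Return an HTML page that embeds a participating system in an iframe
    next to a scoring form. Names and layout here are hypothetical."""
    # Escape the URL so characters such as & and " are safe inside attributes.
    src = escape(system_url, quote=True)
    return (
        "<!DOCTYPE html>\n"
        "<html><body>\n"
        # The participating system runs unmodified inside the iframe,
        # at the fixed size evaluators will see.
        f'<iframe src="{src}" width="{width}" height="{height}"></iframe>\n'
        # The scoring form belongs to the evaluation site, outside the iframe.
        '<form method="post" action="/submit-scores">\n'
        f'<input type="hidden" name="system" value="{src}">\n'
        '<textarea name="feedback"></textarea>\n'
        '<button type="submit">Submit</button>\n'
        "</form>\n"
        "</body></html>\n"
    )
```

Because the system is shown inside a frame, a participating site that sends an `X-Frame-Options: DENY` header or a restrictive `Content-Security-Policy` `frame-ancestors` directive would not display in the wrapper.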

Evaluators

Evaluators will be users aged 18 and above. For this round, evaluators will be drawn primarily from the MIR community through solicitations on the ISMIR-community mailing list. The evaluation webforms developed by the GC14UX team will ensure that all participating systems receive an equal number of evaluators.
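The page does not describe how equal numbers are enforced. One simple way, sketched here under the assumption that each arriving evaluator is routed to one system at a time, is to send each evaluator to the system that currently has the fewest evaluators. The `EvaluatorAssigner` class is hypothetical.

```python
import heapq


class EvaluatorAssigner:
    """Route each arriving evaluator to the system with the fewest
    evaluators so far; ties go to the system listed first."""

    def __init__(self, systems):
        # Heap entries are (count, position, system), so ties on count are
        # broken by the original listing order.
        self._heap = [(0, i, s) for i, s in enumerate(systems)]
        heapq.heapify(self._heap)

    def assign(self):
        # Take the least-evaluated system and put it back with count + 1.
        count, i, system = heapq.heappop(self._heap)
        heapq.heappush(self._heap, (count + 1, i, system))
        return system
```

With three systems, nine arriving evaluators are assigned A, B, C, A, B, C, A, B, C, so every system ends up with three.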

Evaluation results

The mean and average deviation of the scores given by all evaluators will be reported. Meaningful text comments from the evaluators will also be reported.
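As a worked example, taking "average deviation" to mean the mean absolute deviation from the mean (an assumption), scores of 5, 6, 7, 4 and 6 give a mean of 28 / 5 = 5.6 and an average deviation of (0.6 + 0.4 + 1.4 + 1.6 + 0.4) / 5 = 4.4 / 5 = 0.88. A minimal sketch of the computation, using a hypothetical `summarize` helper that skips Not Applicable answers represented as `None`:

```python
from statistics import mean


def summarize(scores):
    """Return (mean, average deviation) for a list of numeric scores.

    None entries (e.g. 'Not Applicable' answers) are skipped. Average
    deviation is computed as the mean absolute deviation from the mean.
    """
    values = [s for s in scores if s is not None]
    if not values:
        raise ValueError("no scores to summarize")
    m = mean(values)
    avg_dev = mean(abs(v - m) for v in values)
    return m, avg_dev


m, avg_dev = summarize([5, 6, 7, 4, 6, None])
```

Here `m` is 5.6 and `avg_dev` is 0.88, up to floating-point rounding.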

Wireframes

Important Dates

- July 1: announce the GC

- Sep. 21: deadline for system submission

- Sep. 28: start the evaluation

- Oct. 20: close the evaluation system

- Oct. 27: announce the results

- Oct. 31: MIREX and GC session at ISMIR 2014