2007:Symbolic Melodic Similarity Results


Latest revision as of 22:12, 19 December 2011


Introduction

These are the results for the 2007 running of the Symbolic Melodic Similarity task set. For background information about this task set please refer to the 2007:Symbolic Melodic Similarity page.

Each system was given a query and returned the 10 most melodically similar songs from the Essen Collection (5,274 pieces in MIDI format; see the [ESAC Data Homepage](http://www.esac-data.org/) for more information). For each query, we made four classes of error mutations, so the query set comprises the following query classes:

- Class 0: No errors

- Class 1: One note deleted

- Class 2: One note inserted

- Class 3: One interval enlarged

- Class 4: One interval compressed
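The four mutation classes can be illustrated with a minimal Python sketch. It assumes a melody is simply a list of MIDI pitch numbers (durations are ignored), and the function names, the position of the edit and the one-semitone default are hypothetical choices; the page does not say exactly how the 2007 mutations were generated. For the two interval classes, the sketch transposes every note after the edited interval so that all other intervals are preserved, which is one possible design among several.

```python
from typing import List

# Assumption: a melody is a list of MIDI pitch numbers (no durations).
Melody = List[int]


def delete_note(m: Melody, i: int) -> Melody:
    """Class 1: drop the note at index i."""
    return m[:i] + m[i + 1:]


def insert_note(m: Melody, i: int, pitch: int) -> Melody:
    """Class 2: insert `pitch` before index i."""
    return m[:i] + [pitch] + m[i:]


def _direction(m: Melody, i: int) -> int:
    """+1 for an ascending (or unison) interval into note i, -1 for descending."""
    return 1 if m[i] >= m[i - 1] else -1


def enlarge_interval(m: Melody, i: int, semitones: int = 1) -> Melody:
    """Class 3: widen the interval between notes i-1 and i.

    Every note from i onward is transposed, so all other intervals stay intact.
    """
    shift = _direction(m, i) * semitones
    return m[:i] + [p + shift for p in m[i:]]


def compress_interval(m: Melody, i: int, semitones: int = 1) -> Melody:
    """Class 4: narrow the interval between notes i-1 and i (same transposition idea)."""
    if abs(m[i] - m[i - 1]) < semitones:
        raise ValueError("interval too small to compress by that amount")
    shift = -_direction(m, i) * semitones
    return m[:i] + [p + shift for p in m[i:]]
```

For example, with `query = [60, 62, 64, 65, 67]`, `enlarge_interval(query, 2)` widens the step from 62 to 64 into three semitones and returns `[60, 62, 65, 66, 68]`.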

For each query (and its 4 mutations), the results (candidates) returned by all systems were grouped together into a query set for evaluation by the human graders. The graders heard only the perfect version of the query, against which they evaluated the candidates, and did not know whether a candidate came from a perfect or a mutated query. Each query/candidate set was evaluated by one grader. Using the Evalutron 6000 system, the graders gave each query/candidate pair two types of scores: one categorical score with three categories (NS, SS, VS, as explained below) and one fine score in the range 0 to 10.

Evalutron 6000 Summary Data

Number of evaluators = 6

Number of evaluations per query/candidate pair = 1

Number of queries per grader = 1

Total number of candidates returned = 2400

Total number of unique query/candidate pairs graded = 799

Average number of query/candidate pairs evaluated per grader = 133

Number of queries = 6 (perfect), with each perfect query error-mutated 4 different ways, giving 6 × 5 = 30 queries in total
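The totals above can be cross-checked with a few lines of arithmetic. This sketch assumes 8 systems (the Team IDs listed below), 10 candidates per system per query and 30 queries in total; dividing the 30 queries by the five versions of each (the perfect query plus four mutations) gives the number of perfect queries, which should also equal 6 evaluators grading one query each.

```python
# Figures taken from the Evalutron 6000 summary above.
systems = 8                      # AP1, AP2, AU1, AU2, AU3, GAR1, GAR2, FHAR
candidates_per_query = 10        # each system returns its 10 best matches
queries = 30                     # perfect queries plus their error mutations
versions_per_perfect_query = 5   # 1 perfect version + 4 mutations (classes 0-4)

total_candidates = systems * candidates_per_query * queries
perfect_queries = queries // versions_per_perfect_query
pairs_per_grader = 799 / 6       # unique pairs graded / number of evaluators

print(total_candidates, perfect_queries, round(pairs_per_grader))
```

Run as-is, this prints `2400 6 133`, matching the reported candidate count and per-grader average.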

General Legend

Team ID

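FHAR = [Pascal Ferraro, Pierre Hanna, Julien Allali, Matthias Robine](https://www.music-ir.org/mirex/abstracts/2007/SMS_ferraro.pdf)

GAR1 = [Carlos Gómez, Soraya Abad-Mota, Edna Ruckhaus 1](https://www.music-ir.org/mirex/abstracts/2007/QBSH_SMS_gomez.pdf)

GAR2 = [Carlos Gómez, Soraya Abad-Mota, Edna Ruckhaus 2](https://www.music-ir.org/mirex/abstracts/2007/QBSH_SMS_gomez.pdf)

AP1 = [Alberto Pinto 1](https://www.music-ir.org/mirex/abstracts/2007/SMS_pinto.pdf)

AP2 = [Alberto Pinto 2](https://www.music-ir.org/mirex/abstracts/2007/SMS_pinto.pdf)

AU1 = [Alexandra L. Uitdenbogerd 1](https://www.music-ir.org/mirex/abstracts/2007/QBSH_SMS_uitdenbogerd.pdf)

AU2 = [Alexandra L. Uitdenbogerd 2](https://www.music-ir.org/mirex/abstracts/2007/QBSH_SMS_uitdenbogerd.pdf)

AU3 = [Alexandra L. Uitdenbogerd 3](https://www.music-ir.org/mirex/abstracts/2007/QBSH_SMS_uitdenbogerd.pdf)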

Broad Categories

NS = Not Similar

SS = Somewhat Similar

VS = Very Similar

Table Headings (other metrics to be added to the results soon by Xiao Hu)

ADR = Average Dynamic Recall

NRGB = Normalized Recall at Group Boundaries

AP = Average Precision (non-interpolated)

PND = Precision at N Documents
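AP and PND are standard ranked-retrieval measures. A minimal sketch of both follows, assuming each returned candidate has already been reduced to a binary relevant/not-relevant judgement (for instance by treating SS and VS as relevant, as Greater0 does). The function names are hypothetical, the cut-off N is left as a parameter because the page does not state its value, and this is not the MIREX evaluation code.

```python
from typing import Optional, Sequence


def precision_at_n(relevant: Sequence[bool], n: int) -> float:
    """Fraction of the top-n returned candidates that are relevant.

    Positions past the end of the list count as non-relevant.
    """
    if n <= 0:
        raise ValueError("n must be positive")
    return sum(1 for r in relevant[:n] if r) / n


def average_precision(relevant: Sequence[bool],
                      total_relevant: Optional[int] = None) -> float:
    """Non-interpolated average precision of one ranked list.

    Precision is taken at the rank of each relevant candidate and averaged
    over `total_relevant`; by default that is the number of relevant
    candidates actually retrieved (an assumption when the true count is unknown).
    """
    hits = 0
    precision_sum = 0.0
    for rank, is_rel in enumerate(relevant, start=1):
        if is_rel:
            hits += 1
            precision_sum += hits / rank
    denom = hits if total_relevant is None else total_relevant
    return precision_sum / denom if denom else 0.0
```

For a ranking judged relevant, not relevant, relevant, not relevant, not relevant, `precision_at_n(ranking, 3)` is 2/3 and `average_precision(ranking)` is (1 + 2/3) / 2, about 0.833.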

Calculating Summary Measures

Fine(1) = Sum of fine-grained human similarity decisions (0-10).

PSum(1) = Sum of human broad similarity decisions: NS=0, SS=1, VS=2.

WCsum(1) = 'World Cup' scoring: NS=0, SS=1, VS=3 (rewards Very Similar).

SDsum(1) = 'Stephen Downie' scoring: NS=0, SS=1, VS=4 (strongly rewards Very Similar).

Greater0(1) = NS=0, SS=1, VS=1 (binary relevance judgement).

Greater1(1) = NS=0, SS=0, VS=1 (binary relevance judgement using only Very Similar).

(1) Normalized to the range 0 to 1.
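The scoring schemes above amount to point tables applied to each graded candidate. This Python sketch assumes that normalization divides each sum by its maximum possible value (10 per candidate for Fine, the top category score per candidate for the others). That assumption is not stated on the page, but it is consistent with the AU1 "No Errors" column: 23 VS, 20 SS and 17 NS out of 60 candidates give the reported Greater0, Greater1, Psum, WCsum and SDsum values. The function name `summary_measures` is hypothetical.

```python
from typing import Dict, Sequence

# Points awarded per broad category under each scheme (from the legend above).
SCHEMES: Dict[str, Dict[str, int]] = {
    "PSum":     {"NS": 0, "SS": 1, "VS": 2},
    "WCsum":    {"NS": 0, "SS": 1, "VS": 3},
    "SDsum":    {"NS": 0, "SS": 1, "VS": 4},
    "Greater0": {"NS": 0, "SS": 1, "VS": 1},
    "Greater1": {"NS": 0, "SS": 0, "VS": 1},
}


def summary_measures(broad: Sequence[str], fine: Sequence[float]) -> Dict[str, float]:
    """Normalized summary measures for one system's graded candidates.

    `broad` holds one of "NS", "SS", "VS" per candidate and `fine` the
    matching 0-10 fine scores. Assumption: each sum is divided by its
    maximum possible value (top score per candidate times candidate count).
    """
    if len(broad) != len(fine) or not broad:
        raise ValueError("need equal-length, non-empty grade lists")
    n = len(broad)
    result = {"Fine": sum(fine) / (10 * n)}
    for name, points in SCHEMES.items():
        total = sum(points[label] for label in broad)
        result[name] = total / (max(points.values()) * n)
    return result
```

Note that WCsum and SDsum only differ from PSum in how much extra weight a VS judgement carries, so a system with many SS but few VS candidates drops further under SDsum than under PSum.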

Summary Results

Run Times

| Participant | Phase | Runtime (sec) |
|---|---|---|
| AP1 | query | |
| AP2 | query | |
| AU | indexing | 7 |
| AU1 | query | 9 |
| AU2 | query | 63 |
| AU3 | query | 11 |
| GAR | indexing | 5 |
| GAR1 | query | 5143 |
| GAR2 | query | 4728 |
| FHAR | query | 1155 |

Overall Scores (Includes Perfect and Error Candidates)

| Overall | AP1 | AP2 | AU1 | AU2 | AU3 | GAR1 | GAR2 | FHAR |
|---|---|---|---|---|---|---|---|---|
| ADR | 0.031 | 0.024 | 0.666 | 0.698 | 0.706 | 0.712 | 0.739 | 0.730 |
| NRGB | 0.028 | 0.027 | 0.601 | 0.590 | 0.616 | 0.617 | 0.683 | 0.666 |
| AP | 0.017 | 0.023 | 0.525 | 0.477 | 0.500 | 0.508 | 0.545 | 0.545 |
| PND | 0.044 | 0.056 | 0.527 | 0.495 | 0.515 | 0.494 | 0.588 | 0.557 |
| Fine | 0.292 | 0.281 | 0.532 | 0.528 | 0.532 | 0.586 | 0.581 | 0.540 |
| Psum | 0.234 | 0.190 | 0.522 | 0.524 | 0.527 | 0.589 | 0.580 | 0.517 |
| WCsum | 0.179 | 0.146 | 0.470 | 0.480 | 0.486 | 0.537 | 0.526 | 0.470 |
| SDsum | 0.152 | 0.123 | 0.444 | 0.458 | 0.465 | 0.511 | 0.498 | 0.447 |
| Greater0 | 0.397 | 0.323 | 0.677 | 0.653 | 0.650 | 0.743 | 0.743 | 0.657 |
| Greater1 | 0.070 | 0.057 | 0.367 | 0.393 | 0.403 | 0.433 | 0.417 | 0.377 |

Overall Summaries (Presented by Error Types)

| No Errors | AP1 | AP2 | AU1 | AU2 | AU3 | GAR1 | GAR2 | FHAR |
|---|---|---|---|---|---|---|---|---|
| ADR | 0.009 | 0.024 | 0.687 | 0.692 | 0.694 | 0.707 | 0.730 | 0.743 |
| NRGB | 0.010 | 0.030 | 0.615 | 0.576 | 0.583 | 0.602 | 0.675 | 0.661 |
| AP | 0.008 | 0.029 | 0.539 | 0.461 | 0.477 | 0.499 | 0.528 | 0.551 |
| PND | 0.021 | 0.069 | 0.556 | 0.491 | 0.488 | 0.496 | 0.561 | 0.548 |
| Fine | 0.260 | 0.283 | 0.555 | 0.542 | 0.539 | 0.581 | 0.582 | 0.527 |
| Psum | 0.183 | 0.208 | 0.550 | 0.542 | 0.525 | 0.592 | 0.600 | 0.500 |
| WCsum | 0.139 | 0.161 | 0.494 | 0.500 | 0.500 | 0.544 | 0.544 | 0.450 |
| SDsum | 0.117 | 0.138 | 0.467 | 0.479 | 0.488 | 0.521 | 0.517 | 0.425 |
| Greater0 | 0.317 | 0.350 | 0.717 | 0.667 | 0.600 | 0.733 | 0.767 | 0.650 |
| Greater1 | 0.050 | 0.067 | 0.383 | 0.417 | 0.450 | 0.450 | 0.433 | 0.350 |

| Deleted | AP1 | AP2 | AU1 | AU2 | AU3 | GAR1 | GAR2 | FHAR |
|---|---|---|---|---|---|---|---|---|
| ADR | 0.062 | 0.003 | 0.673 | 0.730 | 0.761 | 0.704 | 0.762 | 0.743 |
| NRGB | 0.058 | 0.003 | 0.663 | 0.656 | 0.740 | 0.628 | 0.720 | 0.706 |
| AP | 0.036 | 0.012 | 0.557 | 0.532 | 0.613 | 0.531 | 0.598 | 0.571 |
| PND | 0.085 | 0.024 | 0.556 | 0.568 | 0.646 | 0.509 | 0.640 | 0.574 |
| Fine | 0.343 | 0.258 | 0.518 | 0.529 | 0.511 | 0.580 | 0.578 | 0.554 |
| Psum | 0.317 | 0.175 | 0.500 | 0.525 | 0.508 | 0.592 | 0.575 | 0.542 |
| WCsum | 0.256 | 0.128 | 0.450 | 0.478 | 0.461 | 0.533 | 0.522 | 0.489 |
| SDsum | 0.225 | 0.104 | 0.425 | 0.454 | 0.438 | 0.504 | 0.496 | 0.463 |
| Greater0 | 0.500 | 0.317 | 0.650 | 0.667 | 0.650 | 0.767 | 0.733 | 0.700 |
| Greater1 | 0.133 | 0.033 | 0.350 | 0.383 | 0.367 | 0.417 | 0.417 | 0.383 |

| Inserted | AP1 | AP2 | AU1 | AU2 | AU3 | GAR1 | GAR2 | FHAR |
|---|---|---|---|---|---|---|---|---|
| ADR | 0.037 | 0.047 | 0.677 | 0.685 | 0.685 | 0.718 | 0.736 | 0.699 |
| NRGB | 0.036 | 0.047 | 0.610 | 0.555 | 0.573 | 0.608 | 0.668 | 0.667 |
| AP | 0.013 | 0.030 | 0.548 | 0.469 | 0.470 | 0.494 | 0.530 | 0.537 |
| PND | 0.056 | 0.052 | 0.572 | 0.456 | 0.483 | 0.474 | 0.557 | 0.563 |
| Fine | 0.310 | 0.278 | 0.501 | 0.505 | 0.505 | 0.591 | 0.568 | 0.518 |
| Psum | 0.258 | 0.175 | 0.467 | 0.492 | 0.500 | 0.592 | 0.542 | 0.492 |
| WCsum | 0.194 | 0.133 | 0.417 | 0.450 | 0.456 | 0.539 | 0.494 | 0.450 |
| SDsum | 0.163 | 0.113 | 0.392 | 0.429 | 0.433 | 0.513 | 0.471 | 0.429 |
| Greater0 | 0.450 | 0.300 | 0.617 | 0.617 | 0.633 | 0.750 | 0.683 | 0.617 |
| Greater1 | 0.067 | 0.050 | 0.317 | 0.367 | 0.367 | 0.433 | 0.400 | 0.367 |

| Enlarged | AP1 | AP2 | AU1 | AU2 | AU3 | GAR1 | GAR2 | FHAR |
|---|---|---|---|---|---|---|---|---|
| ADR | 0.034 | 0.036 | 0.668 | 0.716 | 0.704 | 0.722 | 0.732 | 0.710 |
| NRGB | 0.017 | 0.037 | 0.589 | 0.604 | 0.604 | 0.631 | 0.665 | 0.624 |
| AP | 0.019 | 0.026 | 0.478 | 0.462 | 0.465 | 0.503 | 0.526 | 0.511 |
| PND | 0.019 | 0.085 | 0.483 | 0.474 | 0.474 | 0.516 | 0.576 | 0.525 |
| Fine | 0.255 | 0.300 | 0.531 | 0.520 | 0.558 | 0.595 | 0.589 | 0.535 |
| Psum | 0.167 | 0.200 | 0.533 | 0.517 | 0.558 | 0.583 | 0.592 | 0.500 |
| WCsum | 0.133 | 0.161 | 0.483 | 0.472 | 0.511 | 0.533 | 0.533 | 0.456 |
| SDsum | 0.117 | 0.142 | 0.458 | 0.450 | 0.488 | 0.508 | 0.504 | 0.433 |
| Greater0 | 0.267 | 0.317 | 0.683 | 0.650 | 0.700 | 0.733 | 0.767 | 0.633 |
| Greater1 | 0.067 | 0.083 | 0.383 | 0.383 | 0.417 | 0.433 | 0.417 | 0.367 |

| Compressed | AP1 | AP2 | AU1 | AU2 | AU3 | GAR1 | GAR2 | FHAR |
|---|---|---|---|---|---|---|---|---|
| ADR | 0.012 | 0.011 | 0.627 | 0.667 | 0.686 | 0.710 | 0.737 | 0.757 |
| NRGB | 0.017 | 0.019 | 0.526 | 0.561 | 0.578 | 0.618 | 0.688 | 0.673 |
| AP | 0.007 | 0.016 | 0.504 | 0.459 | 0.475 | 0.515 | 0.545 | 0.553 |
| PND | 0.037 | 0.048 | 0.468 | 0.487 | 0.482 | 0.475 | 0.605 | 0.574 |
| Fine | 0.290 | 0.287 | 0.554 | 0.546 | 0.549 | 0.584 | 0.589 | 0.567 |
| Psum | 0.242 | 0.192 | 0.558 | 0.542 | 0.542 | 0.583 | 0.592 | 0.550 |
| WCsum | 0.172 | 0.144 | 0.506 | 0.500 | 0.500 | 0.533 | 0.533 | 0.506 |
| SDsum | 0.138 | 0.121 | 0.479 | 0.479 | 0.479 | 0.508 | 0.504 | 0.483 |
| Greater0 | 0.450 | 0.333 | 0.717 | 0.667 | 0.667 | 0.733 | 0.767 | 0.683 |
| Greater1 | 0.033 | 0.050 | 0.400 | 0.417 | 0.417 | 0.433 | 0.417 | 0.417 |

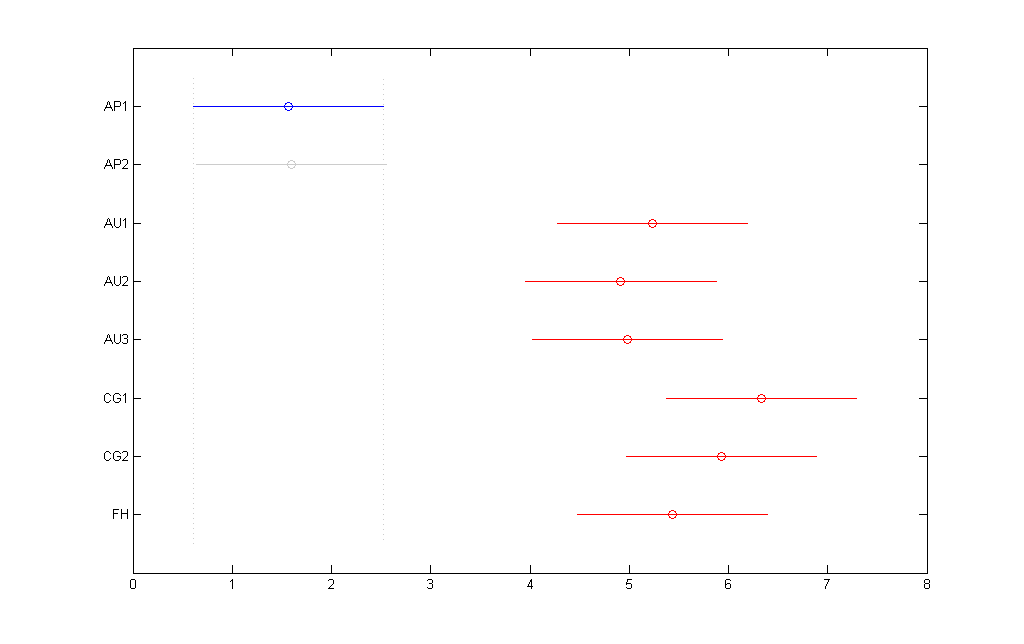

Friedman Test with Multiple Comparisons Results (α = 0.05)

The Friedman test was run in MATLAB on the Fine summary data over the 30 queries. In the multiple-comparison table, CG1, CG2 and FH correspond to the Team IDs GAR1, GAR2 and FHAR.

Command: `[c,m,h,gnames] = multcompare(stats, 'ctype', 'tukey-kramer', 'estimate', 'friedman', 'alpha', 0.05);`

Friedman's ANOVA Table

| Source | SS | df | MS | Chi-sq | Prob>Chi-sq |
|---|---|---|---|---|---|
| Columns | 727.3833 | 7 | 103.9119 | 121.2787 | 0 |
| Error | 532.1167 | 203 | 2.6213 | | |
| Total | 1259.5 | 239 | | | |
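The Friedman statistic in the table can be sketched in plain Python. Scores are ranked within each query (tied scores share their average rank), and the tie-corrected statistic is the column sum of squares divided by the total rank sum of squares over n(k - 1). The reported figures are consistent with this form: 727.3833 / (1259.5 / (30 × 7)) ≈ 121.28. This is an illustration under those assumptions, not MATLAB's `friedman` implementation, and the function names are hypothetical.

```python
from typing import List, Sequence, Tuple


def average_ranks(row: Sequence[float]) -> List[float]:
    """Rank the values in one row (1 = lowest); tied values share their mean rank."""
    order = sorted(range(len(row)), key=lambda j: row[j])
    ranks = [0.0] * len(row)
    start = 0
    while start < len(order):
        end = start
        # Extend the run of tied values that begins at `start`.
        while end + 1 < len(order) and row[order[end + 1]] == row[order[start]]:
            end += 1
        mean_rank = (start + end) / 2 + 1  # sorted positions are 0-based
        for pos in range(start, end + 1):
            ranks[order[pos]] = mean_rank
        start = end + 1
    return ranks


def friedman(scores: Sequence[Sequence[float]]) -> Tuple[float, float, float]:
    """Friedman statistic for an n (queries) x k (systems) score matrix.

    Returns (ss_columns, ss_total, chi_sq), where
    chi_sq = ss_columns / (ss_total / (n * (k - 1))) is the tie-corrected form.
    """
    ranked = [average_ranks(row) for row in scores]
    n, k = len(ranked), len(ranked[0])
    grand_mean = (k + 1) / 2  # the mean rank is fixed, even with ties
    col_means = [sum(r[j] for r in ranked) / n for j in range(k)]
    ss_columns = n * sum((m - grand_mean) ** 2 for m in col_means)
    ss_total = sum((x - grand_mean) ** 2 for r in ranked for x in r)
    if ss_total == 0:
        raise ValueError("every row is fully tied; the statistic is undefined")
    chi_sq = ss_columns / (ss_total / (n * (k - 1)))
    return ss_columns, ss_total, chi_sq
```

For example, `friedman([[1, 3, 2], [2, 3, 1], [1, 2, 3], [3, 1, 2]])` gives a statistic of 0.5, the same value as the textbook no-ties formula 12/(nk(k+1)) ΣR² - 3n(k+1). The error sum of squares in the table is simply ss_total - ss_columns, since ranking within rows removes any query effect.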

| TeamID | TeamID | Lowerbound | Mean | Upperbound | Significance |
|---|---|---|---|---|---|
| AP1 | AP2 | -1.9498 | -0.0333 | 1.8832 | FALSE |
| AP1 | AU1 | -5.5832 | -3.6667 | -1.7502 | TRUE |
| AP1 | AU2 | -5.2665 | -3.35 | -1.4335 | TRUE |
| AP1 | AU3 | -5.3332 | -3.4167 | -1.5002 | TRUE |
| AP1 | CG1 | -6.6832 | -4.7667 | -2.8502 | TRUE |
| AP1 | CG2 | -6.2832 | -4.3667 | -2.4502 | TRUE |
| AP1 | FH | -5.7832 | -3.8667 | -1.9502 | TRUE |
| AP2 | AU1 | -5.5498 | -3.6333 | -1.7168 | TRUE |
| AP2 | AU2 | -5.2332 | -3.3167 | -1.4002 | TRUE |
| AP2 | AU3 | -5.2998 | -3.3833 | -1.4668 | TRUE |
| AP2 | CG1 | -6.6498 | -4.7333 | -2.8168 | TRUE |
| AP2 | CG2 | -6.2498 | -4.3333 | -2.4168 | TRUE |
| AP2 | FH | -5.7498 | -3.8333 | -1.9168 | TRUE |
| AU1 | AU2 | -1.5998 | 0.3167 | 2.2332 | FALSE |
| AU1 | AU3 | -1.6665 | 0.25 | 2.1665 | FALSE |
| AU1 | CG1 | -3.0165 | -1.1 | 0.8165 | FALSE |
| AU1 | CG2 | -2.6165 | -0.7 | 1.2165 | FALSE |
| AU1 | FH | -2.1165 | -0.2 | 1.7165 | FALSE |
| AU2 | AU3 | -1.9832 | -0.0667 | 1.8498 | FALSE |
| AU2 | CG1 | -3.3332 | -1.4167 | 0.4998 | FALSE |
| AU2 | CG2 | -2.9332 | -1.0167 | 0.8998 | FALSE |
| AU2 | FH | -2.4332 | -0.5167 | 1.3998 | FALSE |
| AU3 | CG1 | -3.2665 | -1.35 | 0.5665 | FALSE |
| AU3 | CG2 | -2.8665 | -0.95 | 0.9665 | FALSE |
| AU3 | FH | -2.3665 | -0.45 | 1.4665 | FALSE |
| CG1 | CG2 | -1.5165 | 0.4 | 2.3165 | FALSE |
| CG1 | FH | -1.0165 | 0.9 | 2.8165 | FALSE |
| CG2 | FH | -1.4165 | 0.5 | 2.4165 | FALSE |
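Each row of the comparison table is a simultaneous confidence interval for the difference in mean ranks between two systems; a pair is marked TRUE when the interval excludes zero. A rough Python sketch of a Tukey-Kramer style interval for Friedman mean ranks follows. It assumes the standard error of a single mean rank is sqrt(k(k + 1) / (12n)) and uses a hard-coded studentized range critical value of about 4.286 for 8 groups and infinite degrees of freedom at α = 0.05 (a tabulated approximation, not computed here). With k = 8 and n = 30 this gives a half-width of about 1.917, close to the roughly 1.9165 implied by the table; it is not a reimplementation of MATLAB's `multcompare`, and the function name is hypothetical.

```python
import math
from typing import Tuple


def rank_difference_interval(mean_rank_diff: float, k: int, n: int,
                             q_crit: float = 4.286) -> Tuple[float, float, bool]:
    """Simultaneous confidence interval for a difference in mean ranks.

    k: number of systems, n: number of queries (blocks).
    Assumption: each mean rank has standard error sqrt(k * (k + 1) / (12 * n)),
    so a difference of two has sqrt(k * (k + 1) / (6 * n)).
    q_crit: studentized range critical value; the default approximates the
    tabulated value for k = 8, infinite df, alpha = 0.05.
    """
    se_diff = math.sqrt(k * (k + 1) / (6 * n))
    half_width = q_crit / math.sqrt(2) * se_diff
    lower, upper = mean_rank_diff - half_width, mean_rank_diff + half_width
    significant = lower > 0 or upper < 0  # interval excludes zero
    return lower, upper, significant
```

Under these assumptions, `rank_difference_interval(-3.6667, 8, 30)` is significant, matching the AP1/AU1 row, while `rank_difference_interval(-1.4167, 8, 30)` is not, matching AU2/CG1.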

Raw Scores

The raw data derived from the Evalutron 6000 human evaluations are located on the 2007:Symbolic Melodic Similarity Raw Data page.