2007:Audio Music Similarity and Retrieval Results

(→Summary Data on Human Evaluations (Evalutron 6000)) |

(→Team ID) |

||

| (43 intermediate revisions by 9 users not shown) | |||

| Line 1: | Line 1: | ||

| − | |||

| − | |||

[[Category: Results]] | [[Category: Results]] | ||

==Introduction== | ==Introduction== | ||

| − | These are the results for the 2007 running of the Audio Music Similarity and Retrieval task set. For background information about this task set please refer to the [[Audio Music Similarity and Retrieval]] page. | + | These are the results for the 2007 running of the Audio Music Similarity and Retrieval task set. For background information about this task set please refer to the [[2007:Audio Music Similarity and Retrieval]] page. |

| − | Each system was given | + | Each system was given 7000 songs chosen from IMIRSEL's "uspop", "uscrap" and "american" "classical" and "sundry" collections. Each system then returned a 7000x7000 distance matrix. 100 songs were randomly selected from the 10 genre groups (10 per genre) as queries and the first 5 most highly ranked songs out of the 7000 were extracted for each query (after filtering out the query itself, returned results from the same artist were also omitted). Then, for each query, the returned results (candidates) from all participants were grouped and were evaluated by human graders using the Evalutron 6000 grading system. Each individual query/candidate set was evaluated by a single grader. For each query/candidate pair, graders provided two scores. Graders were asked to provide 1 categorical score with 3 categories: NS,SS,VS as explained below, and one fine score (in the range from 0 to 10). A description and analysis is provided below. |

| + | |||

| + | The systems read in 30 second audio clips as their raw data. The same 30 second clips were used in the grading stage. | ||

===Summary Data on Human Evaluations (Evalutron 6000)=== | ===Summary Data on Human Evaluations (Evalutron 6000)=== | ||

'''Number of evaluators''' = 20<br /> | '''Number of evaluators''' = 20<br /> | ||

| − | '''Number of | + | '''Number of evaluations per query/candidate pair''' = 1<br /> |

'''Number of queries per grader''' = 5 <br /> | '''Number of queries per grader''' = 5 <br /> | ||

| − | '''Size of the candidate lists''' = | + | '''Size of the candidate lists''' = 48.32<br /> |

'''Number of randomly selected queries''' = 100 <br /> | '''Number of randomly selected queries''' = 100 <br /> | ||

| + | '''Number of query/candidate pairs graded''' = 4832 | ||

====General Legend==== | ====General Legend==== | ||

=====Team ID===== | =====Team ID===== | ||

| − | ''' | + | '''BK1''' = [https://www.music-ir.org/mirex/abstracts/2007/AS_bosteels.pdf Klaas Bosteels, Etienne E. Kerre 1]<br /> |

| − | ''' | + | '''BK2''' = [https://www.music-ir.org/mirex/abstracts/2007/AS_bosteels.pdf Klaas Bosteels, Etienne E. Kerre 2]<br /> |

| − | ''' | + | '''CB1''' = [https://www.music-ir.org/mirex/abstracts/2007/AS_bastuck.pdf Christoph Bastuck 1]<br /> |

| − | ''' | + | '''CB2''' = [https://www.music-ir.org/mirex/abstracts/2007/AS_bastuck.pdf Christoph Bastuck 2]<br /> |

| − | ''' | + | '''CB3''' = [https://www.music-ir.org/mirex/abstracts/2007/AS_bastuck.pdf Christoph Bastuck 3]<br /> |

| − | ''' | + | '''GT''' = [https://www.music-ir.org/mirex/abstracts/2007/AI_CC_GC_MC_AS_tzanetakis.pdf George Tzanetakis] <br /> |

| + | '''LB''' = [https://www.music-ir.org/mirex/abstracts/2007/AS_barrington.pdf Luke Barrington, Douglas Turnbull, David Torres, Gert Lanskriet]<br /> | ||

| + | '''ME''' = [https://www.music-ir.org/mirex/abstracts/2007/AI_CC_GC_MC_AS_mandel.pdf Michael I. Mandel, Daniel P. W. Ellis]<br /> | ||

| + | '''PC''' = [https://www.music-ir.org/mirex/abstracts/2007/AS_paradzinets.pdf Aliaksandr Paradzinets, Liming Chen]<br /> | ||

| + | '''PS''' = [https://www.music-ir.org/mirex/abstracts/2007/AS_pohle.pdf Tim Pohle, Dominik Schnitzer]<br /> | ||

| + | '''TL1''' = [https://www.music-ir.org/mirex/abstracts/2007/AI_CC_GC_MC_AS_lidy.pdf Thomas Lidy, Andreas Rauber, Antonio Pertusa, José Manuel Iñesta 1]<br /> | ||

| + | '''TL2''' = [https://www.music-ir.org/mirex/abstracts/2007/AI_CC_GC_MC_AS_lidy.pdf Thomas Lidy, Andreas Rauber, Antonio Pertusa, José Manuel Iñesta 2]<br /> | ||

| − | + | ====Broad Categories==== | |

'''NS''' = Not Similar<br /> | '''NS''' = Not Similar<br /> | ||

'''SS''' = Somewhat Similar<br /> | '''SS''' = Somewhat Similar<br /> | ||

| Line 39: | Line 46: | ||

===Overall Summary Results=== | ===Overall Summary Results=== | ||

| + | '''NB''': The results for BK2 were interpolated from partial data due to a runtime error. | ||

| − | <csv> | + | <csv>2007/ams07_overall_summary2.csv</csv> |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

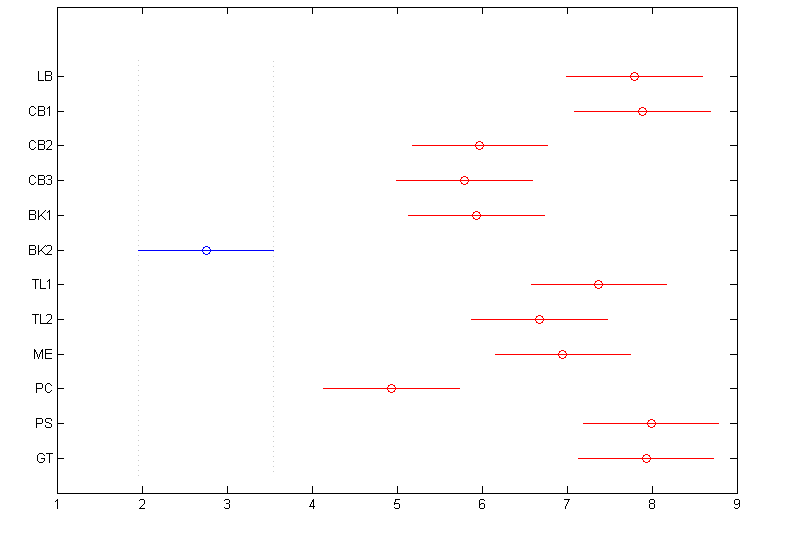

===Friedman Test with Multiple Comparisons Results (p=0.05)=== | ===Friedman Test with Multiple Comparisons Results (p=0.05)=== | ||

| − | The Friedman test was run in MATLAB against the Fine summary data over the | + | The Friedman test was run in MATLAB against the Fine summary data over the 100 queries.<br /> |

Command: [c,m,h,gnames] = multcompare(stats, 'ctype', 'tukey-kramer','estimate', 'friedman', 'alpha', 0.05); | Command: [c,m,h,gnames] = multcompare(stats, 'ctype', 'tukey-kramer','estimate', 'friedman', 'alpha', 0.05); | ||

| − | <csv> | + | <csv>2007/ams07_sum_friedman_fine.csv</csv> |

| − | <csv> | + | <csv>2007/ams07_detail_friedman_fine.csv</csv> |

| + | |||

| + | [[Image:2007 ams broad scores friedmans.png]] | ||

===Summary Results by Query=== | ===Summary Results by Query=== | ||

| − | + | These are the mean FINE scores per query assigned by Evalutron graders. The FINE scores for the 5 candidates returned per algorithm, per query, have been averaged. Values are bounded between 0.0 and 10.0. A perfect score would be 10. Genre labels have been included for reference. | |

| − | + | <csv>2007/ams07_fine_by_query_with_genre.csv</csv> | |

| − | |||

| − | + | These are the mean BROAD scores per query assigned by Evalutron graders. The BROAD scores for the 5 candidates returned per algorithm, per query, have been averaged. Values are bounded between 0 (not similar) and 2 (very similar). A perfect score would be 2. Genre labels have been included for reference. | |

| − | <csv> | + | <csv>2007/ams07_broad_by_query_with_genre.csv</csv> |

| + | ===Anonymized Metadata=== | ||

| + | [https://www.music-ir.org/mirex/results/2007/anonymizedAudioSim07metaData.csv Anonymized Metadata]<br /> | ||

| − | + | ===Raw Scores=== | |

| − | + | The raw data derived from the Evalutron 6000 human evaluations are located on the [[2007:Audio Music Similarity and Retrieval Raw Data]] page. | |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | === | ||

| − | |||

| − | |||

| − | The | ||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | [[ | ||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

Latest revision as of 10:44, 26 July 2010


Introduction

These are the results for the 2007 running of the Audio Music Similarity and Retrieval task set. For background information about this task set, please refer to the 2007:Audio Music Similarity and Retrieval page.

Each system was given 7000 songs chosen from IMIRSEL's "uspop", "uscrap", "american", "classical" and "sundry" collections, and returned a 7000x7000 distance matrix. From the 10 genre groups, 100 songs (10 per genre) were randomly selected as queries. For each query, the 5 most highly ranked songs out of the 7000 were extracted from each system's results, after filtering out the query itself and any results by the same artist. The candidates returned by all participants for a given query were then pooled and evaluated by human graders using the Evalutron 6000 grading system. Each query/candidate set was evaluated by a single grader. For each query/candidate pair, graders provided two scores: one categorical score with 3 categories (NS, SS, VS, as explained below) and one fine score in the range 0 to 10. A description and analysis of these scores follow.

The systems read in 30-second audio clips as their raw data, and the same 30-second clips were used in the grading stage.
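The candidate-selection step described above can be sketched as follows. This is a minimal illustration under stated assumptions, not IMIRSEL's evaluation code: the function names, the per-song `artists` list and the toy matrices are hypothetical, and a smaller distance is assumed to mean greater similarity.

```python
from typing import Dict, List, Sequence


def top_candidates(distances: Sequence[Sequence[float]], artists: Sequence[str],
                   query: int, k: int = 5) -> List[int]:
    """Return the k nearest songs to `query` from one system's distance matrix,
    skipping the query itself and any song by the query's artist."""
    row = distances[query]
    # Sort song indices by ascending distance (assumed: most similar first).
    ranked = sorted(range(len(row)), key=lambda j: row[j])
    picks = [j for j in ranked if j != query and artists[j] != artists[query]]
    return picks[:k]


def pool_candidates(matrices: Dict[str, Sequence[Sequence[float]]],
                    artists: Sequence[str], query: int, k: int = 5) -> List[int]:
    """Union of every system's top-k list for one query, so that each
    distinct query/candidate pair needs to be graded only once."""
    pooled = set()
    for dist in matrices.values():
        pooled.update(top_candidates(dist, artists, query, k))
    return sorted(pooled)
```

With 12 submissions returning 5 candidates each, a pooled list can hold at most 60 songs; overlap between systems' results is consistent with the mean candidate-list size of 48.32 reported below being smaller than that.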

Summary Data on Human Evaluations (Evalutron 6000)

Number of evaluators = 20

Number of evaluations per query/candidate pair = 1

Number of queries per grader = 5

Mean size of the candidate lists = 48.32

Number of randomly selected queries = 100

Number of query/candidate pairs graded = 4832

General Legend

Team ID

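BK1 = Klaas Bosteels, Etienne E. Kerre, submission 1 (abstract: https://www.music-ir.org/mirex/abstracts/2007/AS_bosteels.pdf)

BK2 = Klaas Bosteels, Etienne E. Kerre, submission 2 (abstract: https://www.music-ir.org/mirex/abstracts/2007/AS_bosteels.pdf)

CB1 = Christoph Bastuck, submission 1 (abstract: https://www.music-ir.org/mirex/abstracts/2007/AS_bastuck.pdf)

CB2 = Christoph Bastuck, submission 2 (abstract: https://www.music-ir.org/mirex/abstracts/2007/AS_bastuck.pdf)

CB3 = Christoph Bastuck, submission 3 (abstract: https://www.music-ir.org/mirex/abstracts/2007/AS_bastuck.pdf)

GT = George Tzanetakis (abstract: https://www.music-ir.org/mirex/abstracts/2007/AI_CC_GC_MC_AS_tzanetakis.pdf)

LB = Luke Barrington, Douglas Turnbull, David Torres, Gert Lanckriet (abstract: https://www.music-ir.org/mirex/abstracts/2007/AS_barrington.pdf)

ME = Michael I. Mandel, Daniel P. W. Ellis (abstract: https://www.music-ir.org/mirex/abstracts/2007/AI_CC_GC_MC_AS_mandel.pdf)

PC = Aliaksandr Paradzinets, Liming Chen (abstract: https://www.music-ir.org/mirex/abstracts/2007/AS_paradzinets.pdf)

PS = Tim Pohle, Dominik Schnitzer (abstract: https://www.music-ir.org/mirex/abstracts/2007/AS_pohle.pdf)

TL1 = Thomas Lidy, Andreas Rauber, Antonio Pertusa, José Manuel Iñesta, submission 1 (abstract: https://www.music-ir.org/mirex/abstracts/2007/AI_CC_GC_MC_AS_lidy.pdf)

TL2 = Thomas Lidy, Andreas Rauber, Antonio Pertusa, José Manuel Iñesta, submission 2 (abstract: https://www.music-ir.org/mirex/abstracts/2007/AI_CC_GC_MC_AS_lidy.pdf)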

Broad Categories

NS = Not Similar

SS = Somewhat Similar

VS = Very Similar

Calculating Summary Measures

Fine(1) = Sum of fine-grained human similarity decisions (0-10).

PSum(1) = Sum of human broad similarity decisions: NS=0, SS=1, VS=2.

WCsum(1) = 'World Cup' scoring: NS=0, SS=1, VS=3 (rewards Very Similar).

SDsum(1) = 'Stephen Downie' scoring: NS=0, SS=1, VS=4 (strongly rewards Very Similar).

Greater0(1) = NS=0, SS=1, VS=1 (binary relevance judgement).

Greater1(1) = NS=0, SS=0, VS=1 (binary relevance judgement using only Very Similar).

(1) Normalized to the range 0 to 1.
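The summary measures above can be computed from the individual judgements as in the sketch below. It assumes, since this page does not spell it out, that each measure is normalized by dividing its sum by the largest possible sum: 10 per pair for Fine, and the largest weight in the mapping per pair for the broad measures. The function name and input format are hypothetical.

```python
from typing import Dict, Sequence

# Weights for the broad categories (NS, SS, VS), as listed above.
BROAD_WEIGHTS: Dict[str, Dict[str, int]] = {
    "PSum":     {"NS": 0, "SS": 1, "VS": 2},
    "WCsum":    {"NS": 0, "SS": 1, "VS": 3},
    "SDsum":    {"NS": 0, "SS": 1, "VS": 4},
    "Greater0": {"NS": 0, "SS": 1, "VS": 1},
    "Greater1": {"NS": 0, "SS": 0, "VS": 1},
}


def summary_measures(fine: Sequence[float], broad: Sequence[str]) -> Dict[str, float]:
    """Normalized summary measures for one system.

    fine[i] and broad[i] are the two scores a grader gave to the i-th
    query/candidate pair returned by the system.
    """
    n = len(fine)
    # Fine: sum of 0-10 scores divided by the maximum possible sum (10 per pair).
    result = {"Fine": sum(fine) / (10 * n)}
    for name, weights in BROAD_WEIGHTS.items():
        top = max(weights.values())  # assumed normalizer: best possible weight per pair
        result[name] = sum(weights[b] for b in broad) / (top * n)
    return result
```

Because every pair falls into exactly one category, this normalization implies PSum = (Greater0 + Greater1) / 2. For example, PS's Greater0 = 0.796 and Greater1 = 0.442 in the overall results give (0.796 + 0.442) / 2 = 0.619, which matches its reported PSum.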

Overall Summary Results

NB: The results for BK2 were interpolated from partial data due to a runtime error.

| Measure | BK1 | BK2 | CB1 | CB2 | CB3 | GT | LB | ME | PC | PS | TL1 | TL2 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Fine | 0.412 | 0.178 | 0.539 | 0.446 | 0.439 | 0.554 | 0.541 | 0.512 | 0.377 | 0.568 | 0.519 | 0.491 |
| PSum | 0.417 | 0.147 | 0.586 | 0.468 | 0.457 | 0.597 | 0.582 | 0.539 | 0.376 | 0.619 | 0.556 | 0.521 |
| WCsum | 0.361 | 0.115 | 0.528 | 0.414 | 0.407 | 0.534 | 0.520 | 0.480 | 0.325 | 0.560 | 0.491 | 0.459 |
| SDsum | 0.333 | 0.099 | 0.499 | 0.387 | 0.382 | 0.503 | 0.489 | 0.451 | 0.299 | 0.531 | 0.459 | 0.428 |
| Greater0 | 0.586 | 0.244 | 0.760 | 0.630 | 0.608 | 0.786 | 0.768 | 0.716 | 0.530 | 0.796 | 0.750 | 0.708 |
| Greater1 | 0.246 | 0.050 | 0.412 | 0.304 | 0.306 | 0.408 | 0.396 | 0.362 | 0.222 | 0.442 | 0.362 | 0.334 |

Friedman Test with Multiple Comparisons Results (p=0.05)

The Friedman test was run in MATLAB against the Fine summary data over the 100 queries.

Command: [c,m,h,gnames] = multcompare(stats, 'ctype', 'tukey-kramer','estimate', 'friedman', 'alpha', 0.05);
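For readers without MATLAB, the statistic behind Friedman's test can be sketched in plain Python. Each row holds one query's Fine scores for all systems. Scores are ranked within the row, with tied scores receiving the average of their ranks and higher scores receiving higher ranks, and the systems' mean ranks are then compared. The function names are hypothetical. The sketch does not try to reproduce MATLAB's handling of ties in `friedman`, so its chi-square value may differ slightly from MATLAB's when tied scores are present.

```python
from typing import List, Sequence, Tuple


def average_ranks(row: Sequence[float]) -> List[float]:
    """Rank values in ascending order (1 = lowest); tied values share the mean rank."""
    order = sorted(range(len(row)), key=lambda i: row[i])
    ranks = [0.0] * len(row)
    i = 0
    while i < len(order):
        # Extend j over the run of values tied with order[i].
        j = i
        while j + 1 < len(order) and row[order[j + 1]] == row[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # convert 0-based positions to 1-based ranks
        for m in range(i, j + 1):
            ranks[order[m]] = avg
        i = j + 1
    return ranks


def friedman_statistic(scores: Sequence[Sequence[float]]) -> Tuple[List[float], float]:
    """scores[q][s] is the score of system s on query q.

    Returns (mean rank per system, Friedman chi-square without tie correction).
    """
    n = len(scores)      # number of queries (blocks)
    k = len(scores[0])   # number of systems (treatments)
    rank_rows = [average_ranks(row) for row in scores]
    mean_ranks = [sum(r[s] for r in rank_rows) / n for s in range(k)]
    chi2 = 12 * n / (k * (k + 1)) * sum((r - (k + 1) / 2) ** 2 for r in mean_ranks)
    return mean_ranks, chi2
```

Applied to the 100 x 12 matrix of per-query Fine means, the differences between systems' mean ranks are presumably what the Mean column of the multiple-comparison table below reports.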

Friedman's ANOVA Table

| Source | SS | df | MS | Chi-sq | Prob>Chi-sq |
|---|---|---|---|---|---|
| Columns | 3.0110e+003 | 11 | 273.7314 | 232.0407 | 0 |
| Error | 1.1263e+004 | 1089 | 10.3425 | | |
| Total | 1.4274e+004 | 1199 | | | |

| Team A | Team B | Lower bound | Mean rank difference (A - B) | Upper bound | Significant |

|---|---|---|---|---|---|

| LB | CB1 | -1.7998 | -0.1350 | 1.5298 | FALSE |

| LB | CB2 | 0.1302 | 1.7950 | 3.4598 | TRUE |

| LB | CB3 | 0.1002 | 1.7650 | 3.4298 | TRUE |

| LB | BK1 | -0.0598 | 1.6050 | 3.2698 | FALSE |

| LB | BK2 | 3.4352 | 5.1000 | 6.7648 | TRUE |

| LB | TL1 | -1.4748 | 0.1900 | 1.8548 | FALSE |

| LB | TL2 | -0.9048 | 0.7600 | 2.4248 | FALSE |

| LB | ME | -0.9098 | 0.7550 | 2.4198 | FALSE |

| LB | PC | 1.2052 | 2.8700 | 4.5348 | TRUE |

| LB | PS | -2.3198 | -0.6550 | 1.0098 | FALSE |

| LB | GT | -2.2748 | -0.6100 | 1.0548 | FALSE |

| CB1 | CB2 | 0.2652 | 1.9300 | 3.5948 | TRUE |

| CB1 | CB3 | 0.2352 | 1.9000 | 3.5648 | TRUE |

| CB1 | BK1 | 0.0752 | 1.7400 | 3.4048 | TRUE |

| CB1 | BK2 | 3.5702 | 5.2350 | 6.8998 | TRUE |

| CB1 | TL1 | -1.3398 | 0.3250 | 1.9898 | FALSE |

| CB1 | TL2 | -0.7698 | 0.8950 | 2.5598 | FALSE |

| CB1 | ME | -0.7748 | 0.8900 | 2.5548 | FALSE |

| CB1 | PC | 1.3402 | 3.0050 | 4.6698 | TRUE |

| CB1 | PS | -2.1848 | -0.5200 | 1.1448 | FALSE |

| CB1 | GT | -2.1398 | -0.4750 | 1.1898 | FALSE |

| CB2 | CB3 | -1.6948 | -0.0300 | 1.6348 | FALSE |

| CB2 | BK1 | -1.8548 | -0.1900 | 1.4748 | FALSE |

| CB2 | BK2 | 1.6402 | 3.3050 | 4.9698 | TRUE |

| CB2 | TL1 | -3.2698 | -1.6050 | 0.0598 | FALSE |

| CB2 | TL2 | -2.6998 | -1.0350 | 0.6298 | FALSE |

| CB2 | ME | -2.7048 | -1.0400 | 0.6248 | FALSE |

| CB2 | PC | -0.5898 | 1.0750 | 2.7398 | FALSE |

| CB2 | PS | -4.1148 | -2.4500 | -0.7852 | TRUE |

| CB2 | GT | -4.0698 | -2.4050 | -0.7402 | TRUE |

| CB3 | BK1 | -1.8248 | -0.1600 | 1.5048 | FALSE |

| CB3 | BK2 | 1.6702 | 3.3350 | 4.9998 | TRUE |

| CB3 | TL1 | -3.2398 | -1.5750 | 0.0898 | FALSE |

| CB3 | TL2 | -2.6698 | -1.0050 | 0.6598 | FALSE |

| CB3 | ME | -2.6748 | -1.0100 | 0.6548 | FALSE |

| CB3 | PC | -0.5598 | 1.1050 | 2.7698 | FALSE |

| CB3 | PS | -4.0848 | -2.4200 | -0.7552 | TRUE |

| CB3 | GT | -4.0398 | -2.3750 | -0.7102 | TRUE |

| BK1 | BK2 | 1.8302 | 3.4950 | 5.1598 | TRUE |

| BK1 | TL1 | -3.0798 | -1.4150 | 0.2498 | FALSE |

| BK1 | TL2 | -2.5098 | -0.8450 | 0.8198 | FALSE |

| BK1 | ME | -2.5148 | -0.8500 | 0.8148 | FALSE |

| BK1 | PC | -0.3998 | 1.2650 | 2.9298 | FALSE |

| BK1 | PS | -3.9248 | -2.2600 | -0.5952 | TRUE |

| BK1 | GT | -3.8798 | -2.2150 | -0.5502 | TRUE |

| BK2 | TL1 | -6.5748 | -4.9100 | -3.2452 | TRUE |

| BK2 | TL2 | -6.0048 | -4.3400 | -2.6752 | TRUE |

| BK2 | ME | -6.0098 | -4.3450 | -2.6802 | TRUE |

| BK2 | PC | -3.8948 | -2.2300 | -0.5652 | TRUE |

| BK2 | PS | -7.4198 | -5.7550 | -4.0902 | TRUE |

| BK2 | GT | -7.3748 | -5.7100 | -4.0452 | TRUE |

| TL1 | TL2 | -1.0948 | 0.5700 | 2.2348 | FALSE |

| TL1 | ME | -1.0998 | 0.5650 | 2.2298 | FALSE |

| TL1 | PC | 1.0152 | 2.6800 | 4.3448 | TRUE |

| TL1 | PS | -2.5098 | -0.8450 | 0.8198 | FALSE |

| TL1 | GT | -2.4648 | -0.8000 | 0.8648 | FALSE |

| TL2 | ME | -1.6698 | -0.0050 | 1.6598 | FALSE |

| TL2 | PC | 0.4452 | 2.1100 | 3.7748 | TRUE |

| TL2 | PS | -3.0798 | -1.4150 | 0.2498 | FALSE |

| TL2 | GT | -3.0348 | -1.3700 | 0.2948 | FALSE |

| ME | PC | 0.4502 | 2.1150 | 3.7798 | TRUE |

| ME | PS | -3.0748 | -1.4100 | 0.2548 | FALSE |

| ME | GT | -3.0298 | -1.3650 | 0.2998 | FALSE |

| PC | PS | -5.1898 | -3.5250 | -1.8602 | TRUE |

| PC | GT | -5.1448 | -3.4800 | -1.8152 | TRUE |

| PS | GT | -1.6198 | 0.0450 | 1.7098 | FALSE |
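In the table above, a pair is marked TRUE exactly when its confidence interval excludes zero. The sketch below checks this rule against a few rows copied from the table. It also shows that each interval extends the same distance, 1.6648, on either side of the mean, which is expected when every system is scored on the same 100 queries. The helper name is hypothetical.

```python
from typing import List, Tuple

# (team A, team B, lower bound, mean, upper bound, significant) copied from the table above.
ROWS: List[Tuple[str, str, float, float, float, bool]] = [
    ("LB", "CB1", -1.7998, -0.1350, 1.5298, False),
    ("LB", "BK1", -0.0598, 1.6050, 3.2698, False),
    ("CB1", "BK1", 0.0752, 1.7400, 3.4048, True),
    ("CB2", "PS", -4.1148, -2.4500, -0.7852, True),
    ("PS", "GT", -1.6198, 0.0450, 1.7098, False),
]


def excludes_zero(lower: float, upper: float) -> bool:
    """True when the confidence interval lies entirely above or below zero."""
    return lower > 0 or upper < 0


for a, b, lower, mean, upper, flag in ROWS:
    # The significance flag should agree with the interval test.
    assert excludes_zero(lower, upper) == flag, (a, b)
    # Distance from the mean to the upper bound (the interval half-width).
    print(f"{a} vs {b}: half-width = {upper - mean:.4f}")
```

The LB vs BK1 row shows why the rule matters. Its mean difference of 1.6050 is positive, but the lower bound of -0.0598 falls just below zero, so the pair is not significant at p=0.05.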

Summary Results by Query

These are the mean FINE scores per query assigned by Evalutron graders. The FINE scores for the 5 candidates returned per algorithm, per query, have been averaged. Values are bounded between 0.0 and 10.0. A perfect score would be 10. Genre labels have been included for reference.

| Genre | Query | BK1 | BK2 | CB1 | CB2 | CB3 | GT | LB | ME | PC | PS | TL1 | TL2 |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| BAROQUE | d004553 | 1.500 | 2.920 | 8.500 | 7.740 | 8.300 | 8.920 | 8.740 | 8.960 | 7.420 | 8.800 | 6.960 | 5.880 |

| BAROQUE | d006674 | 7.740 | 2.400 | 9.300 | 7.840 | 9.420 | 9.380 | 9.000 | 9.280 | 7.060 | 9.200 | 9.700 | 9.680 |

| BAROQUE | d008942 | 6.400 | 1.000 | 7.000 | 5.400 | 7.800 | 7.200 | 8.400 | 5.800 | 2.600 | 6.980 | 6.480 | 6.980 |

| BAROQUE | d010361 | 0.340 | 1.640 | 6.880 | 5.420 | 6.940 | 5.640 | 5.300 | 6.700 | 4.840 | 8.880 | 8.360 | 7.680 |

| BAROQUE | d011850 | 9.040 | 1.760 | 8.920 | 8.100 | 7.900 | 9.080 | 8.760 | 7.560 | 2.980 | 9.060 | 9.020 | 9.380 |

| BAROQUE | d012288 | 0.360 | 2.280 | 8.900 | 2.740 | 5.660 | 7.140 | 8.600 | 7.800 | 4.400 | 8.760 | 8.400 | 9.160 |

| BAROQUE | d017287 | 1.060 | 1.620 | 9.480 | 8.900 | 8.180 | 9.600 | 9.140 | 9.220 | 5.260 | 7.800 | 8.840 | 8.660 |

| BAROQUE | d018106 | 0.340 | 0.900 | 6.000 | 3.560 | 6.720 | 8.020 | 7.300 | 7.660 | 7.240 | 7.800 | 5.860 | 4.720 |

| BAROQUE | d018433 | 1.680 | 2.200 | 9.020 | 7.000 | 7.680 | 9.360 | 8.900 | 9.420 | 6.360 | 7.640 | 8.880 | 8.660 |

| BAROQUE | d018533 | 0.120 | 1.940 | 7.140 | 6.660 | 6.340 | 7.440 | 7.700 | 6.720 | 6.160 | 7.480 | 6.300 | 7.220 |

| BLUES | e000039 | 6.160 | 5.080 | 2.660 | 2.780 | 1.400 | 5.060 | 5.200 | 5.700 | 5.600 | 4.880 | 5.820 | 2.340 |

| BLUES | e001388 | 5.880 | 3.960 | 4.000 | 2.320 | 6.700 | 8.180 | 7.020 | 7.780 | 8.460 | 7.920 | 5.380 | 5.380 |

| BLUES | e004743 | 8.800 | 7.100 | 5.500 | 2.800 | 0.700 | 6.900 | 2.800 | 8.400 | 0.900 | 5.600 | 4.800 | 4.800 |

| BLUES | e004899 | 3.300 | 3.120 | 3.000 | 2.820 | 1.380 | 3.100 | 5.360 | 5.700 | 5.440 | 3.120 | 2.460 | 1.500 |

| BLUES | e006420 | 1.960 | 1.120 | 3.600 | 3.120 | 1.860 | 3.140 | 3.260 | 2.220 | 2.300 | 2.960 | 0.400 | 0.820 |

| BLUES | e011446 | 6.860 | 5.800 | 7.980 | 8.200 | 8.580 | 7.560 | 7.440 | 6.260 | 6.420 | 7.100 | 7.340 | 6.800 |

| BLUES | e012584 | 4.040 | 1.540 | 1.480 | 2.220 | 1.660 | 3.840 | 2.240 | 1.160 | 2.200 | 4.200 | 4.780 | 2.620 |

| BLUES | e013173 | 5.200 | 5.320 | 4.920 | 1.500 | 1.760 | 5.180 | 7.340 | 6.340 | 7.880 | 7.100 | 7.060 | 4.680 |

| BLUES | e015590 | 3.000 | 2.560 | 4.780 | 2.600 | 2.620 | 3.680 | 3.440 | 3.720 | 1.320 | 1.700 | 2.620 | 2.480 |

| BLUES | e018053 | 1.360 | 2.120 | 4.560 | 2.460 | 1.380 | 3.080 | 4.720 | 0.760 | 2.980 | 3.980 | 2.720 | 1.980 |

| CLASSICAL | d000429 | 4.640 | 1.960 | 4.780 | 3.280 | 4.840 | 5.820 | 5.900 | 5.140 | 0.360 | 5.840 | 4.780 | 4.880 |

| CLASSICAL | d003313 | 1.020 | 2.880 | 7.480 | 8.120 | 5.940 | 4.360 | 7.420 | 6.380 | 5.200 | 7.580 | 5.740 | 5.980 |

| CLASSICAL | d011706 | 3.280 | 3.240 | 9.040 | 8.940 | 9.060 | 8.920 | 9.020 | 9.100 | 1.680 | 8.140 | 7.920 | 5.460 |

| CLASSICAL | d012929 | 0.760 | 1.760 | 8.000 | 7.940 | 7.720 | 7.820 | 7.240 | 7.540 | 7.340 | 8.060 | 7.660 | 7.380 |

| CLASSICAL | d014060 | 1.320 | 2.660 | 7.500 | 6.860 | 6.740 | 7.320 | 8.360 | 6.520 | 5.440 | 7.040 | 6.560 | 6.420 |

| CLASSICAL | d014334 | 1.100 | 2.600 | 9.200 | 9.200 | 8.860 | 8.800 | 8.000 | 8.100 | 5.400 | 8.160 | 8.200 | 8.200 |

| CLASSICAL | d015555 | 5.220 | 0.000 | 5.100 | 0.400 | 1.940 | 5.140 | 4.900 | 3.900 | 0.100 | 6.940 | 5.440 | 4.580 |

| CLASSICAL | d017438 | 0.000 | 0.740 | 2.900 | 2.640 | 4.480 | 5.700 | 4.380 | 6.940 | 3.460 | 4.080 | 3.220 | 1.900 |

| CLASSICAL | d017826 | 0.000 | 1.380 | 2.080 | 1.140 | 2.640 | 4.000 | 1.860 | 4.800 | 1.420 | 3.980 | 3.700 | 2.120 |

| CLASSICAL | d019684 | 0.120 | 0.500 | 8.380 | 7.400 | 8.200 | 7.240 | 6.940 | 7.560 | 5.700 | 7.420 | 8.340 | 8.560 |

| COUNTRY | b008293 | 7.200 | 1.800 | 7.800 | 3.000 | 3.200 | 5.200 | 7.000 | 5.000 | 3.000 | 4.200 | 7.000 | 7.000 |

| COUNTRY | e003167 | 2.940 | 3.240 | 5.880 | 4.140 | 6.140 | 7.460 | 5.160 | 3.360 | 2.260 | 3.460 | 3.340 | 2.120 |

| COUNTRY | e005922 | 1.240 | 1.320 | 2.540 | 0.360 | 0.240 | 3.580 | 0.860 | 1.820 | 1.900 | 1.400 | 2.360 | 2.120 |

| COUNTRY | e008013 | 5.920 | 2.980 | 7.100 | 7.480 | 6.240 | 5.320 | 5.720 | 2.400 | 5.180 | 5.920 | 6.620 | 5.200 |

| COUNTRY | e008637 | 6.340 | 2.960 | 5.460 | 3.220 | 0.780 | 4.220 | 3.580 | 6.040 | 1.400 | 5.820 | 5.980 | 6.480 |

| COUNTRY | e009438 | 5.600 | 1.140 | 4.020 | 3.120 | 3.420 | 3.520 | 4.120 | 5.780 | 2.960 | 7.240 | 4.660 | 3.520 |

| COUNTRY | e011578 | 6.620 | 3.580 | 6.360 | 4.220 | 2.100 | 1.620 | 5.500 | 4.340 | 5.080 | 3.620 | 1.720 | 1.940 |

| COUNTRY | e011702 | 0.220 | 0.920 | 0.380 | 1.560 | 0.000 | 0.040 | 0.000 | 0.440 | 7.140 | 0.120 | 0.000 | 1.760 |

| COUNTRY | e014903 | 5.340 | 2.200 | 4.960 | 1.900 | 2.400 | 3.500 | 2.840 | 1.340 | 4.320 | 2.740 | 3.980 | 3.320 |

| COUNTRY | e018325 | 5.080 | 3.240 | 4.760 | 4.020 | 3.100 | 5.720 | 4.280 | 3.120 | 2.340 | 4.200 | 4.300 | 2.800 |

| EDANCE | a002630 | 4.840 | 0.100 | 3.460 | 2.800 | 3.300 | 3.360 | 6.220 | 5.200 | 2.880 | 3.760 | 5.480 | 4.720 |

| EDANCE | a003317 | 6.200 | 0.480 | 5.280 | 5.660 | 2.640 | 7.300 | 3.880 | 3.520 | 4.360 | 6.860 | 5.320 | 6.480 |

| EDANCE | a003588 | 8.780 | 0.000 | 5.400 | 6.340 | 6.840 | 6.040 | 4.380 | 4.000 | 1.560 | 9.100 | 6.720 | 2.120 |

| EDANCE | a006326 | 1.800 | 0.500 | 1.540 | 2.140 | 3.840 | 1.900 | 2.720 | 3.580 | 1.500 | 3.140 | 1.840 | 2.500 |

| EDANCE | b007008 | 7.420 | 0.000 | 4.420 | 4.980 | 5.960 | 8.000 | 7.180 | 8.200 | 5.960 | 8.400 | 6.020 | 5.620 |

| EDANCE | b008903 | 5.020 | 0.480 | 1.620 | 2.760 | 5.020 | 5.260 | 4.580 | 6.900 | 5.480 | 5.500 | 6.760 | 5.600 |

| EDANCE | b009057 | 1.940 | 0.060 | 4.820 | 4.360 | 5.360 | 2.780 | 5.020 | 3.240 | 1.260 | 3.000 | 2.840 | 6.840 |

| EDANCE | f000987 | 0.200 | 0.600 | 3.700 | 2.100 | 2.100 | 2.600 | 3.700 | 5.400 | 3.300 | 5.700 | 0.200 | 0.400 |

| EDANCE | f005146 | 2.540 | 1.000 | 5.320 | 3.740 | 2.280 | 3.320 | 3.580 | 2.740 | 1.360 | 6.600 | 2.620 | 6.500 |

| EDANCE | f010067 | 7.000 | 0.800 | 5.400 | 4.400 | 6.200 | 4.800 | 4.600 | 6.000 | 7.000 | 5.400 | 5.200 | 5.200 |

| JAZZ | e001054 | 4.000 | 4.000 | 7.000 | 6.200 | 6.400 | 6.600 | 5.400 | 4.800 | 5.400 | 5.800 | 5.600 | 5.600 |

| JAZZ | e004497 | 6.120 | 5.900 | 3.620 | 2.760 | 1.520 | 6.420 | 6.020 | 5.520 | 1.840 | 5.020 | 5.780 | 6.000 |

| JAZZ | e005048 | 8.240 | 7.100 | 4.060 | 4.140 | 1.300 | 4.200 | 2.300 | 6.600 | 7.920 | 3.120 | 4.900 | 3.420 |

| JAZZ | e007252 | 1.400 | 1.140 | 2.060 | 3.140 | 2.700 | 1.180 | 1.000 | 4.680 | 2.320 | 5.380 | 2.900 | 2.520 |

| JAZZ | e007772 | 5.540 | 4.300 | 3.440 | 3.640 | 3.160 | 2.920 | 1.920 | 1.820 | 2.660 | 3.780 | 2.580 | 4.140 |

| JAZZ | e007968 | 6.600 | 4.000 | 6.100 | 4.640 | 4.540 | 6.940 | 6.200 | 7.880 | 7.340 | 7.600 | 7.000 | 7.340 |

| JAZZ | e009441 | 5.360 | 0.720 | 4.200 | 1.800 | 0.380 | 1.500 | 4.760 | 2.800 | 3.440 | 4.540 | 4.260 | 3.280 |

| JAZZ | e010478 | 6.200 | 5.680 | 3.020 | 3.900 | 6.040 | 3.820 | 2.340 | 4.820 | 6.220 | 3.700 | 3.460 | 4.680 |

| JAZZ | e016650 | 3.780 | 2.860 | 6.600 | 4.040 | 0.000 | 4.280 | 4.320 | 5.100 | 3.380 | 5.080 | 0.940 | 0.940 |

| JAZZ | e019093 | 7.140 | 6.340 | 9.040 | 5.800 | 4.060 | 4.900 | 8.880 | 5.120 | 6.840 | 7.960 | 6.520 | 5.940 |

| METAL | b001148 | 0.920 | 0.640 | 5.180 | 4.920 | 6.140 | 4.880 | 6.720 | 6.840 | 2.280 | 5.700 | 5.360 | 5.480 |

| METAL | b002842 | 6.560 | 0.080 | 6.680 | 5.680 | 2.560 | 7.540 | 5.340 | 5.320 | 5.480 | 6.360 | 4.400 | 2.780 |

| METAL | b005320 | 6.060 | 1.100 | 6.080 | 3.140 | 2.660 | 5.440 | 7.000 | 6.880 | 1.420 | 6.020 | 5.380 | 6.300 |

| METAL | b005832 | 3.380 | 0.480 | 6.780 | 4.120 | 4.140 | 4.180 | 6.360 | 6.040 | 4.240 | 3.440 | 4.900 | 5.600 |

| METAL | b006870 | 4.960 | 0.360 | 6.800 | 5.720 | 6.220 | 6.360 | 5.520 | 7.260 | 3.800 | 6.180 | 7.100 | 6.760 |

| METAL | b010213 | 0.960 | 0.560 | 1.020 | 2.020 | 2.060 | 3.500 | 1.140 | 0.200 | 1.660 | 2.400 | 2.200 | 2.000 |

| METAL | b010569 | 6.200 | 2.200 | 6.400 | 5.400 | 3.200 | 5.400 | 6.800 | 5.200 | 3.000 | 4.200 | 6.200 | 4.800 |

| METAL | b011148 | 7.860 | 0.300 | 6.740 | 6.300 | 7.200 | 5.920 | 7.640 | 3.320 | 5.080 | 6.420 | 6.960 | 7.620 |

| METAL | b011701 | 6.460 | 0.000 | 6.660 | 7.220 | 1.640 | 7.400 | 7.940 | 8.160 | 5.100 | 8.180 | 8.160 | 7.760 |

| METAL | f012092 | 6.560 | 0.440 | 7.280 | 8.260 | 6.780 | 7.320 | 7.900 | 4.360 | 1.040 | 5.600 | 6.740 | 5.020 |

| RAPHIPHOP | a000154 | 8.660 | 0.360 | 9.160 | 9.120 | 9.340 | 8.880 | 8.900 | 8.360 | 8.840 | 8.820 | 9.400 | 7.680 |

| RAPHIPHOP | a000895 | 7.200 | 1.340 | 6.820 | 7.200 | 6.620 | 7.140 | 7.540 | 6.780 | 5.320 | 7.880 | 7.140 | 7.300 |

| RAPHIPHOP | a001600 | 2.440 | 3.760 | 1.400 | 0.420 | 0.220 | 2.840 | 5.660 | 4.120 | 1.240 | 1.640 | 2.360 | 2.260 |

| RAPHIPHOP | a003760 | 6.660 | 0.420 | 7.200 | 6.040 | 7.080 | 7.620 | 7.280 | 4.640 | 1.460 | 7.140 | 6.720 | 7.320 |

| RAPHIPHOP | a004471 | 6.480 | 0.040 | 7.600 | 7.480 | 7.640 | 7.540 | 7.040 | 7.000 | 7.460 | 7.740 | 8.480 | 7.840 |

| RAPHIPHOP | a006304 | 5.500 | 0.000 | 5.420 | 6.300 | 5.980 | 5.920 | 6.240 | 4.040 | 0.200 | 6.580 | 4.340 | 6.280 |

| RAPHIPHOP | a008309 | 8.380 | 0.620 | 7.120 | 6.420 | 8.280 | 8.380 | 7.500 | 7.500 | 1.100 | 7.640 | 7.980 | 7.660 |

| RAPHIPHOP | b000472 | 6.060 | 0.000 | 5.820 | 2.740 | 6.240 | 6.720 | 4.880 | 6.080 | 5.400 | 4.400 | 6.340 | 3.300 |

| RAPHIPHOP | b001007 | 3.960 | 0.840 | 2.700 | 3.600 | 4.660 | 3.540 | 2.920 | 5.700 | 1.760 | 2.140 | 1.200 | 1.040 |

| RAPHIPHOP | b011158 | 7.840 | 0.800 | 8.140 | 9.080 | 8.820 | 8.900 | 7.360 | 7.920 | 8.480 | 8.940 | 8.940 | 8.900 |

| ROCKROLL | b000264 | 0.760 | 0.000 | 0.100 | 0.720 | 0.280 | 0.940 | 0.360 | 0.160 | 0.480 | 0.200 | 0.920 | 0.320 |

| ROCKROLL | b000459 | 2.640 | 1.100 | 3.900 | 4.520 | 3.180 | 3.780 | 3.060 | 4.780 | 1.540 | 2.300 | 5.400 | 3.780 |

| ROCKROLL | b001686 | 2.600 | 0.000 | 4.200 | 6.500 | 0.900 | 5.700 | 2.700 | 5.700 | 0.300 | 3.800 | 2.900 | 1.400 |

| ROCKROLL | b005863 | 1.740 | 0.220 | 4.600 | 1.120 | 1.460 | 2.900 | 2.500 | 0.200 | 1.380 | 1.300 | 2.700 | 2.200 |

| ROCKROLL | b008067 | 4.000 | 1.200 | 4.800 | 2.000 | 1.600 | 4.800 | 5.200 | 4.600 | 2.400 | 3.800 | 5.800 | 5.000 |

| ROCKROLL | b009038 | 2.640 | 0.060 | 1.980 | 2.700 | 2.020 | 2.120 | 3.060 | 0.820 | 0.400 | 2.140 | 2.200 | 2.400 |

| ROCKROLL | b012623 | 1.920 | 0.000 | 3.940 | 0.880 | 0.200 | 3.100 | 2.020 | 1.940 | 2.080 | 2.020 | 1.500 | 2.080 |

| ROCKROLL | b015975 | 4.560 | 0.760 | 5.820 | 5.980 | 3.540 | 4.460 | 6.100 | 3.600 | 2.740 | 5.940 | 6.540 | 5.540 |

| ROCKROLL | b016172 | 1.900 | 1.040 | 3.800 | 2.100 | 1.900 | 3.960 | 3.860 | 1.240 | 2.240 | 2.560 | 3.460 | 2.920 |

| ROCKROLL | b019890 | 3.420 | 0.480 | 4.520 | 1.960 | 1.060 | 3.380 | 2.300 | 1.080 | 3.360 | 3.560 | 3.760 | 3.820 |

| ROMANTIC | d000095 | 1.600 | 2.340 | 7.640 | 6.360 | 7.740 | 7.420 | 6.860 | 7.040 | 4.200 | 7.740 | 3.300 | 3.020 |

| ROMANTIC | d000491 | 0.980 | 2.440 | 5.080 | 5.020 | 7.280 | 8.060 | 6.920 | 6.120 | 1.320 | 8.960 | 7.160 | 5.340 |

| ROMANTIC | d003906 | 6.800 | 1.560 | 4.600 | 3.960 | 4.760 | 8.020 | 5.840 | 7.020 | 4.200 | 7.300 | 6.740 | 7.140 |

| ROMANTIC | d005430 | 2.200 | 1.100 | 2.100 | 0.960 | 5.260 | 7.680 | 4.600 | 7.200 | 7.480 | 8.780 | 1.880 | 1.880 |

| ROMANTIC | d008298 | 3.860 | 0.260 | 5.120 | 1.840 | 3.000 | 5.660 | 5.200 | 2.600 | 0.000 | 7.120 | 5.000 | 6.260 |

| ROMANTIC | d008438 | 2.160 | 3.100 | 7.520 | 7.020 | 8.560 | 7.240 | 7.200 | 8.320 | 7.660 | 7.840 | 7.460 | 7.020 |

| ROMANTIC | d009148 | 6.260 | 2.360 | 3.960 | 4.220 | 5.380 | 5.940 | 5.780 | 2.960 | 1.240 | 4.760 | 6.520 | 6.500 |

| ROMANTIC | d009455 | 2.660 | 2.080 | 3.220 | 4.620 | 2.480 | 6.240 | 4.900 | 2.160 | 6.180 | 8.360 | 5.080 | 6.140 |

| ROMANTIC | d010229 | 7.000 | 0.020 | 6.300 | 4.720 | 4.700 | 7.100 | 4.700 | 0.000 | 1.420 | 6.900 | 4.900 | 5.700 |

| ROMANTIC | d010380 | 0.000 | 0.380 | 7.280 | 5.340 | 1.700 | 4.700 | 7.120 | 4.500 | 0.440 | 8.380 | 4.840 | 5.640 |
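Each entry in the table above is a plain average over the 5 candidates a system returned for that query. The BROAD table that follows is built the same way, with NS=0, SS=1 and VS=2. The sketch below assumes the graded pairs are available as (query, system, fine score, broad category) records. That record layout and the function name are hypothetical. Because candidates were pooled, a song returned by several systems for the same query was presumably graded once, with that score counted for each of those systems. The records here are assumed to be already expanded per system.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

# BROAD category values used for the per-query BROAD means.
BROAD_VALUE = {"NS": 0, "SS": 1, "VS": 2}


def per_query_means(records: Iterable[Tuple[str, str, float, str]]
                    ) -> Dict[Tuple[str, str], Tuple[float, float]]:
    """Map (query, system) -> (mean FINE score, mean BROAD score).

    Each record is (query_id, system_id, fine_score, broad_category) for one
    candidate that the system returned for that query.
    """
    sums = defaultdict(lambda: [0.0, 0.0, 0])  # fine total, broad total, count
    for query, system, fine, broad in records:
        acc = sums[(query, system)]
        acc[0] += fine
        acc[1] += BROAD_VALUE[broad]
        acc[2] += 1
    return {key: (f / n, b / n) for key, (f, b, n) in sums.items()}
```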

These are the mean BROAD scores per query assigned by Evalutron graders. The BROAD scores for the 5 candidates returned per algorithm, per query, have been averaged. Values are bounded between 0 (not similar) and 2 (very similar). A perfect score would be 2. Genre labels have been included for reference.

| Genre | Query | BK1 | BK2 | CB1 | CB2 | CB3 | GT | LB | ME | PC | PS | TL1 | TL2 |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| BAROQUE | d004553 | 1.6 | 0.0 | 1.2 | 1.4 | 0.4 | 1.8 | 0.4 | 0.6 | 0.6 | 1.8 | 1.4 | 1.8 |

| BAROQUE | d006674 | 0.4 | 0.6 | 0.2 | 0.0 | 0.0 | 0.6 | 1.2 | 0.8 | 0.2 | 0.2 | 0.4 | 0.4 |

| BAROQUE | d008942 | 1.6 | 0.0 | 1.4 | 1.8 | 1.8 | 1.8 | 1.8 | 1.0 | 0.0 | 2.0 | 1.0 | 1.6 |

| BAROQUE | d010361 | 0.2 | 0.0 | 0.0 | 0.2 | 0.6 | 0.0 | 0.4 | 0.4 | 0.2 | 0.4 | 0.2 | 0.2 |

| BAROQUE | d011850 | 1.8 | 0.0 | 2.0 | 2.0 | 2.0 | 1.6 | 2.0 | 2.0 | 1.6 | 2.0 | 1.8 | 1.8 |

| BAROQUE | d012288 | 0.8 | 0.0 | 0.4 | 0.2 | 0.4 | 0.6 | 1.4 | 1.0 | 0.4 | 0.4 | 1.0 | 1.0 |

| BAROQUE | d017287 | 1.6 | 0.0 | 2.0 | 1.4 | 1.8 | 2.0 | 1.8 | 1.2 | 0.2 | 1.8 | 1.6 | 2.0 |

| BAROQUE | d018106 | 2.0 | 0.0 | 1.0 | 1.0 | 1.4 | 1.2 | 0.8 | 0.8 | 0.4 | 2.0 | 1.4 | 0.4 |

| BAROQUE | d018433 | 1.2 | 0.0 | 1.4 | 1.4 | 1.4 | 1.4 | 1.2 | 1.2 | 1.6 | 1.4 | 1.8 | 1.4 |

| BAROQUE | d018533 | 2.0 | 0.0 | 2.0 | 2.0 | 2.0 | 2.0 | 2.0 | 2.0 | 2.0 | 2.0 | 2.0 | 1.6 |

| BLUES | e000039 | 0.8 | 0.0 | 0.4 | 0.8 | 0.8 | 0.4 | 0.4 | 1.2 | 0.2 | 0.2 | 0.0 | 0.0 |

| BLUES | e001388 | 2.0 | 0.0 | 1.8 | 1.6 | 2.0 | 2.0 | 2.0 | 2.0 | 0.0 | 1.8 | 1.8 | 1.8 |

| BLUES | e004743 | 0.4 | 0.0 | 1.2 | 0.6 | 0.8 | 0.8 | 1.2 | 1.2 | 1.0 | 0.6 | 1.0 | 1.2 |

| BLUES | e004899 | 0.0 | 0.0 | 1.0 | 1.0 | 1.0 | 1.0 | 1.2 | 1.2 | 0.4 | 1.0 | 1.0 | 1.0 |

| BLUES | e006420 | 1.0 | 0.0 | 1.0 | 0.4 | 0.4 | 1.0 | 1.2 | 1.2 | 0.0 | 1.0 | 1.0 | 1.0 |

| BLUES | e011446 | 1.4 | 0.0 | 1.4 | 1.4 | 0.6 | 1.4 | 1.0 | 1.0 | 1.0 | 1.2 | 0.8 | 0.6 |

| BLUES | e012584 | 0.6 | 0.0 | 0.6 | 1.0 | 0.8 | 0.8 | 1.0 | 1.0 | 0.6 | 0.4 | 1.0 | 1.2 |

| BLUES | e013173 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 |

| BLUES | e015590 | 0.4 | 0.0 | 0.8 | 1.6 | 0.2 | 1.2 | 0.4 | 1.4 | 0.0 | 0.6 | 0.8 | 0.2 |

| BLUES | e018053 | 1.4 | 0.0 | 1.2 | 0.4 | 1.4 | 1.4 | 1.2 | 1.6 | 1.2 | 1.0 | 1.4 | 0.8 |

| CLASSICAL | d000429 | 0.8 | 0.0 | 0.4 | 0.6 | 0.4 | 0.6 | 0.8 | 0.2 | 0.0 | 0.4 | 0.8 | 0.6 |

| CLASSICAL | d003313 | 1.4 | 0.4 | 1.6 | 1.4 | 0.8 | 1.4 | 1.8 | 1.2 | 0.6 | 1.0 | 1.6 | 1.2 |

| CLASSICAL | d011706 | 0.4 | 0.0 | 1.2 | 0.8 | 1.4 | 0.6 | 1.2 | 0.6 | 0.0 | 0.6 | 0.6 | 1.8 |

| CLASSICAL | d012929 | 0.2 | 0.0 | 1.2 | 0.0 | 0.2 | 0.6 | 0.6 | 0.0 | 0.2 | 0.4 | 0.6 | 0.6 |

| CLASSICAL | d014060 | 1.2 | 0.0 | 0.2 | 0.4 | 1.2 | 1.2 | 1.2 | 1.6 | 1.2 | 1.4 | 1.8 | 1.2 |

| CLASSICAL | d014334 | 0.0 | 0.0 | 0.0 | 0.2 | 0.0 | 0.4 | 0.0 | 0.0 | 0.0 | 0.2 | 0.0 | 0.0 |

| CLASSICAL | d015555 | 1.0 | 0.0 | 1.8 | 1.2 | 1.4 | 1.4 | 1.0 | 1.6 | 0.4 | 1.4 | 1.8 | 1.6 |

| CLASSICAL | d017438 | 1.6 | 0.0 | 0.8 | 1.0 | 1.2 | 1.6 | 1.4 | 1.8 | 1.0 | 1.8 | 1.4 | 1.2 |

| CLASSICAL | d017826 | 0.8 | 0.0 | 1.2 | 0.4 | 0.0 | 1.0 | 1.2 | 1.2 | 0.4 | 0.8 | 1.0 | 1.0 |

| CLASSICAL | d019684 | 1.8 | 0.4 | 1.8 | 0.6 | 0.6 | 1.2 | 1.8 | 1.2 | 0.6 | 1.0 | 1.6 | 1.6 |

| COUNTRY | b008293 | 1.0 | 0.0 | 1.2 | 1.0 | 0.6 | 0.8 | 1.2 | 0.8 | 0.6 | 1.2 | 1.2 | 1.0 |

| COUNTRY | e003167 | 0.8 | 0.0 | 1.0 | 0.4 | 0.2 | 0.6 | 0.4 | 0.2 | 0.8 | 0.8 | 0.8 | 0.8 |

| COUNTRY | e005922 | 0.2 | 0.4 | 2.0 | 1.4 | 1.8 | 1.4 | 1.6 | 1.2 | 1.0 | 1.8 | 0.6 | 0.6 |

| COUNTRY | e008013 | 0.2 | 0.0 | 0.8 | 0.0 | 0.0 | 0.6 | 0.4 | 0.2 | 0.4 | 0.2 | 0.2 | 0.4 |

| COUNTRY | e008637 | 1.6 | 0.0 | 1.6 | 1.8 | 0.4 | 1.8 | 2.0 | 2.0 | 1.2 | 2.0 | 2.0 | 1.6 |

| COUNTRY | e009438 | 0.0 | 0.4 | 1.0 | 0.8 | 1.2 | 1.6 | 1.6 | 1.0 | 0.0 | 2.0 | 1.6 | 1.0 |

| COUNTRY | e011578 | 0.8 | 0.4 | 0.8 | 0.6 | 1.0 | 1.2 | 1.2 | 1.0 | 0.0 | 1.2 | 1.0 | 1.0 |

| COUNTRY | e011702 | 1.0 | 0.0 | 1.8 | 2.0 | 2.0 | 2.0 | 1.8 | 1.6 | 2.0 | 2.0 | 2.0 | 2.0 |

| COUNTRY | e014903 | 1.8 | 0.0 | 1.6 | 1.4 | 1.6 | 1.2 | 1.8 | 0.4 | 1.2 | 1.6 | 1.4 | 1.6 |

| COUNTRY | e018325 | 0.2 | 0.0 | 0.6 | 0.4 | 0.2 | 0.6 | 0.6 | 0.0 | 0.2 | 0.2 | 0.6 | 0.4 |

| EDANCE | a002630 | 0.0 | 0.4 | 2.0 | 1.6 | 2.0 | 2.0 | 2.0 | 2.0 | 1.4 | 2.0 | 1.0 | 0.8 |

| EDANCE | a003317 | 0.4 | 0.2 | 0.4 | 0.8 | 0.2 | 1.2 | 1.0 | 0.4 | 1.4 | 1.8 | 0.8 | 1.2 |

| EDANCE | a003588 | 1.0 | 0.6 | 0.8 | 1.0 | 1.2 | 1.0 | 1.0 | 0.4 | 0.4 | 1.0 | 1.2 | 1.2 |

| EDANCE | a006326 | 0.2 | 0.2 | 0.4 | 0.2 | 0.8 | 1.6 | 0.6 | 1.4 | 1.4 | 2.0 | 0.2 | 0.2 |

| EDANCE | b007008 | 1.4 | 0.2 | 1.8 | 1.6 | 2.0 | 1.8 | 1.6 | 2.0 | 1.0 | 1.8 | 2.0 | 2.0 |

| EDANCE | b008903 | 1.2 | 0.2 | 1.6 | 1.2 | 1.8 | 1.6 | 2.0 | 1.4 | 0.6 | 1.4 | 1.4 | 1.6 |

| EDANCE | b009057 | 1.0 | 0.0 | 1.4 | 0.4 | 0.8 | 1.6 | 1.2 | 0.6 | 0.0 | 2.0 | 1.2 | 1.6 |

| EDANCE | f000987 | 0.0 | 0.4 | 1.6 | 1.8 | 1.2 | 0.8 | 1.6 | 1.2 | 1.2 | 1.4 | 1.2 | 1.2 |

| EDANCE | f005146 | 0.4 | 0.8 | 2.0 | 2.0 | 2.0 | 2.0 | 2.0 | 2.0 | 2.0 | 1.8 | 2.0 | 2.0 |

| EDANCE | f010067 | 1.4 | 0.4 | 1.0 | 0.6 | 0.8 | 2.0 | 1.2 | 1.4 | 1.0 | 1.8 | 1.6 | 1.6 |

| JAZZ | e001054 | 0.0 | 0.4 | 1.8 | 1.4 | 1.4 | 1.6 | 1.8 | 1.4 | 1.2 | 1.6 | 1.6 | 1.8 |

| JAZZ | e004497 | 0.0 | 0.2 | 2.0 | 2.0 | 1.8 | 2.0 | 2.0 | 2.0 | 1.0 | 1.6 | 2.0 | 2.0 |

| JAZZ | e005048 | 0.0 | 0.2 | 2.0 | 1.6 | 1.6 | 2.0 | 2.0 | 2.0 | 1.2 | 1.6 | 2.0 | 2.0 |

| JAZZ | e007252 | 1.0 | 1.0 | 1.8 | 1.4 | 1.4 | 1.6 | 1.0 | 1.0 | 1.0 | 1.2 | 1.2 | 1.2 |

| JAZZ | e007772 | 0.0 | 0.2 | 0.6 | 0.6 | 1.0 | 1.4 | 1.4 | 1.8 | 0.8 | 1.0 | 0.8 | 0.4 |

| JAZZ | e007968 | 0.0 | 0.2 | 0.2 | 0.0 | 0.4 | 1.0 | 0.4 | 1.0 | 0.2 | 1.0 | 0.8 | 0.2 |

| JAZZ | e009441 | 0.0 | 0.2 | 1.2 | 0.6 | 1.4 | 1.8 | 1.6 | 1.8 | 1.6 | 2.0 | 1.4 | 1.0 |

| JAZZ | e010478 | 0.0 | 0.0 | 2.0 | 1.8 | 2.0 | 1.8 | 1.6 | 1.6 | 1.2 | 1.6 | 2.0 | 2.0 |

| JAZZ | e016650 | 1.4 | 1.2 | 0.4 | 0.4 | 0.2 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 1.2 | 0.6 |

| JAZZ | e019093 | 1.2 | 0.8 | 1.0 | 0.6 | 1.4 | 2.0 | 1.6 | 1.6 | 1.8 | 1.8 | 1.2 | 1.2 |

| METAL | b001148 | 0.0 | 0.4 | 2.0 | 0.4 | 1.2 | 1.8 | 2.0 | 1.8 | 1.0 | 2.0 | 2.0 | 2.0 |

| METAL | b002842 | 2.0 | 0.2 | 2.0 | 1.8 | 2.0 | 2.0 | 1.8 | 1.6 | 0.6 | 2.0 | 2.0 | 2.0 |

| METAL | b005320 | 1.8 | 0.0 | 1.4 | 1.2 | 1.2 | 1.6 | 1.2 | 0.0 | 0.2 | 1.6 | 1.2 | 1.4 |

| METAL | b005832 | 0.0 | 0.2 | 1.4 | 1.0 | 1.4 | 1.0 | 1.0 | 1.2 | 1.0 | 2.0 | 1.6 | 1.2 |

| METAL | b006870 | 1.2 | 0.0 | 1.4 | 0.2 | 0.6 | 1.2 | 1.2 | 1.0 | 0.2 | 1.8 | 1.2 | 1.0 |

| METAL | b010213 | 0.0 | 0.4 | 2.0 | 2.0 | 1.8 | 1.8 | 1.6 | 1.8 | 1.8 | 2.0 | 1.8 | 1.6 |

| METAL | b010569 | 0.4 | 0.4 | 2.0 | 2.0 | 2.0 | 2.0 | 2.0 | 2.0 | 0.0 | 1.8 | 1.6 | 1.0 |

| METAL | b011148 | 0.0 | 0.4 | 2.0 | 2.0 | 1.8 | 2.0 | 1.8 | 1.6 | 1.2 | 1.8 | 1.8 | 1.8 |

| METAL | b011701 | 0.0 | 0.4 | 2.0 | 1.8 | 1.8 | 2.0 | 2.0 | 1.6 | 1.4 | 1.8 | 1.8 | 1.8 |

| METAL | f012092 | 0.0 | 0.0 | 1.6 | 1.2 | 0.0 | 1.0 | 1.6 | 0.8 | 0.0 | 1.8 | 1.0 | 1.4 |

| RAPHIPHOP | a000154 | 1.0 | 0.8 | 0.6 | 0.8 | 0.4 | 0.4 | 0.2 | 0.4 | 0.4 | 0.8 | 0.4 | 0.8 |

| RAPHIPHOP | a000895 | 0.8 | 0.8 | 0.4 | 0.6 | 0.2 | 0.8 | 1.0 | 1.0 | 0.8 | 0.8 | 0.4 | 0.2 |

| RAPHIPHOP | a001600 | 1.6 | 0.8 | 1.2 | 1.0 | 1.0 | 1.6 | 1.2 | 2.0 | 1.8 | 1.8 | 1.6 | 1.8 |

| RAPHIPHOP | a003760 | 0.0 | 0.0 | 0.4 | 0.4 | 0.4 | 0.0 | 0.0 | 0.8 | 0.2 | 1.2 | 0.4 | 0.2 |

| RAPHIPHOP | a004471 | 1.6 | 1.4 | 0.8 | 0.8 | 0.2 | 0.6 | 0.4 | 1.6 | 1.4 | 0.6 | 0.8 | 0.6 |

| RAPHIPHOP | a006304 | 1.0 | 1.0 | 0.8 | 0.4 | 0.0 | 1.2 | 0.8 | 1.2 | 0.2 | 1.0 | 0.8 | 1.0 |

| RAPHIPHOP | a008309 | 0.8 | 0.4 | 1.0 | 1.0 | 0.4 | 0.8 | 0.8 | 0.6 | 0.8 | 0.8 | 0.0 | 0.2 |

| RAPHIPHOP | b000472 | 0.4 | 0.6 | 1.0 | 0.8 | 1.0 | 1.8 | 1.0 | 0.6 | 0.2 | 0.6 | 0.6 | 0.2 |

| RAPHIPHOP | b001007 | 0.0 | 0.0 | 0.4 | 0.0 | 0.0 | 0.6 | 0.0 | 0.2 | 0.4 | 0.2 | 0.4 | 0.2 |

| RAPHIPHOP | b011158 | 2.0 | 1.6 | 1.2 | 0.4 | 0.0 | 1.8 | 0.6 | 2.0 | 0.2 | 1.2 | 1.2 | 1.2 |

| ROCKROLL | b000264 | 1.0 | 0.0 | 0.6 | 0.6 | 0.4 | 0.6 | 0.8 | 1.0 | 0.6 | 1.4 | 0.8 | 0.4 |

| ROCKROLL | b000459 | 1.6 | 0.6 | 1.2 | 0.8 | 0.2 | 1.0 | 0.6 | 1.4 | 0.2 | 1.2 | 1.4 | 1.6 |

| ROCKROLL | b001686 | 0.8 | 0.0 | 0.0 | 0.4 | 0.4 | 0.8 | 0.2 | 0.2 | 0.2 | 0.8 | 1.0 | 0.4 |

| ROCKROLL | b005863 | 1.2 | 1.2 | 1.0 | 0.4 | 0.4 | 1.0 | 1.6 | 1.4 | 1.8 | 1.6 | 1.6 | 0.8 |

| ROCKROLL | b008067 | 1.4 | 0.6 | 1.6 | 1.6 | 1.2 | 1.2 | 1.4 | 0.2 | 1.0 | 1.4 | 1.6 | 0.8 |

| ROCKROLL | b009038 | 1.4 | 0.6 | 1.4 | 0.8 | 0.4 | 0.4 | 1.0 | 0.6 | 0.8 | 0.6 | 0.4 | 0.4 |

| ROCKROLL | b012623 | 0.0 | 0.2 | 0.0 | 0.2 | 0.0 | 0.0 | 0.0 | 0.0 | 1.4 | 0.0 | 0.0 | 0.4 |

| ROCKROLL | b015975 | 1.0 | 0.8 | 0.4 | 0.6 | 1.2 | 0.8 | 0.4 | 0.8 | 1.6 | 0.6 | 0.8 | 1.0 |

| ROCKROLL | b016172 | 1.2 | 0.0 | 1.0 | 0.2 | 0.0 | 0.2 | 1.0 | 0.2 | 0.6 | 1.0 | 1.0 | 0.8 |

| ROCKROLL | b019890 | 1.2 | 1.0 | 1.8 | 2.0 | 2.0 | 1.8 | 1.6 | 1.2 | 1.4 | 1.4 | 1.6 | 1.4 |

| ROMANTIC | d000095 | 1.4 | 0.8 | 1.2 | 1.0 | 0.8 | 1.4 | 1.2 | 0.8 | 0.6 | 1.2 | 1.2 | 0.8 |

| ROMANTIC | d000491 | 0.0 | 0.0 | 0.8 | 0.4 | 0.4 | 0.4 | 0.8 | 1.4 | 0.4 | 1.2 | 0.0 | 0.0 |

| ROMANTIC | d003906 | 0.2 | 0.0 | 1.0 | 0.4 | 0.2 | 0.4 | 0.4 | 0.4 | 0.0 | 1.4 | 0.4 | 1.4 |

| ROMANTIC | d005430 | 0.8 | 0.4 | 1.4 | 0.8 | 0.0 | 0.8 | 1.0 | 1.0 | 0.8 | 1.2 | 0.2 | 0.2 |

| ROMANTIC | d008298 | 0.4 | 0.4 | 1.0 | 0.4 | 0.4 | 0.6 | 0.6 | 0.8 | 0.2 | 0.2 | 0.2 | 0.2 |

| ROMANTIC | d008438 | 0.4 | 0.6 | 1.4 | 0.6 | 0.4 | 0.6 | 1.2 | 0.2 | 0.8 | 1.2 | 1.0 | 0.6 |

| ROMANTIC | d009148 | 1.8 | 0.0 | 1.4 | 0.8 | 1.4 | 1.0 | 1.0 | 1.4 | 1.6 | 1.0 | 1.2 | 1.4 |

| ROMANTIC | d009455 | 1.6 | 1.6 | 2.0 | 1.2 | 0.8 | 1.0 | 2.0 | 1.2 | 1.6 | 2.0 | 1.2 | 1.2 |

| ROMANTIC | d010229 | 1.6 | 0.0 | 2.0 | 2.0 | 1.6 | 2.0 | 2.0 | 1.0 | 0.0 | 1.2 | 1.6 | 1.2 |

| ROMANTIC | d010380 | 1.2 | 0.4 | 0.8 | 0.4 | 0.4 | 0.8 | 0.6 | 0.2 | 1.0 | 0.4 | 0.6 | 0.6 |

Anonymized Metadata

Raw Scores

The raw data derived from the Evalutron 6000 human evaluations are located on the 2007:Audio Music Similarity and Retrieval Raw Data page.
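The per-query values in the table above are all multiples of 0.2 between 0.0 and 2.0. That pattern is consistent with averaging the five categorical grades each system received for its top-5 candidates, with NS, SS and VS mapped to 0, 1 and 2. This page does not state that mapping, so treat it as an assumption. The minimal sketch below recomputes a per-query score from five grades under that assumption. It then averages one score column across the ten CLASSICAL queries. The helper names `query_score` and `system_mean` are hypothetical, and the example grades are invented for illustration. They do not come from the raw data page.

```python
from statistics import mean

# ASSUMPTION: numeric mapping for the categorical Evalutron 6000 grades
# (NS = not similar, SS = somewhat similar, VS = very similar).
BROAD_SCORE = {"NS": 0, "SS": 1, "VS": 2}

def query_score(grades):
    """Hypothetical helper: mean broad score over the top-5 candidates
    one system returned for one query."""
    if len(grades) != 5:
        raise ValueError("expected exactly one grade per top-5 candidate")
    return sum(BROAD_SCORE[g] for g in grades) / len(grades)

def system_mean(per_query_scores):
    """Hypothetical helper: average of one system's per-query scores."""
    return mean(per_query_scores)

# Invented grades for a single query/system pair (illustration only).
example = query_score(["VS", "SS", "NS", "SS", "VS"])

# First score column of the ten CLASSICAL rows in the table above
# (d000429 through d019684, in table order).
CLASSICAL_FIRST_COLUMN = [0.8, 1.4, 0.4, 0.2, 1.2, 0.0, 1.0, 1.6, 0.8, 1.8]
classical_avg = system_mean(CLASSICAL_FIRST_COLUMN)

print(example, round(classical_avg, 2))
```

The same averaging can be applied per genre or over all 100 queries to summarize each system's column. If the raw data uses a different numeric scale for the categories, only `BROAD_SCORE` needs to change.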