Difference between revisions of "2015:GC15UX:JDISC"

(→Dataset) |

|||

| (48 intermediate revisions by 4 users not shown) | |||

| Line 1: | Line 1: | ||

| − | {{DISPLAYTITLE:Grand Challenge | + | {{DISPLAYTITLE:Grand Challenge 2016: User Experience (GC16UX:J-DISC)}} |

=Purpose= | =Purpose= | ||

| − | + | Holistic, user-centered evaluation of the user experience in interacting with complete, user-facing music information retrieval (MIR) systems. | |

=Goals= | =Goals= | ||

| − | # | + | # To inspire the development of complete MIR systems. |

| − | # | + | # To promote the notion of user experience as a first-class research objective in the MIR community. |

| + | =About J-DISC= | ||

| + | J-DISC ([http://jdisc.columbia.edu http://jdisc.columbia.edu]) is a resource for searching and exploring jazz recordings created by the Center for Jazz Studies at Columbia University. It is organized to present complete information on jazz recording sessions, and merge a large corpus of session data into a single easily accessible repository, in a manner that can be easily searched, cross-searched, navigated and cited. In addition to the focus on recording artist/leaders of traditional discography, J-DISC incorporates extensive cultural, geographic, biographical, composer and studio information that can also be easily searched and accessed. | ||

=Dataset= | =Dataset= | ||

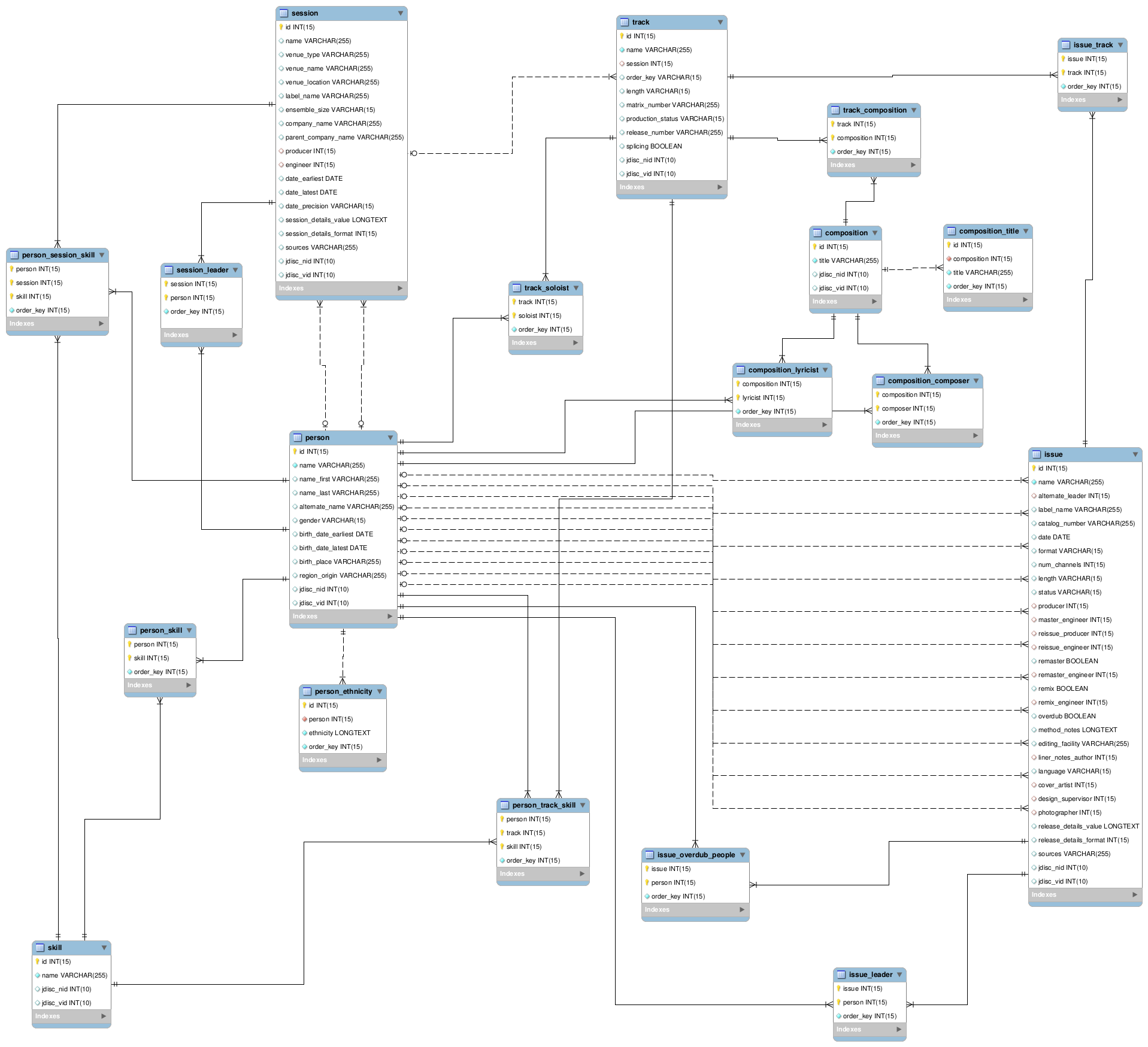

| − | + | J-DISC contains fully structured and searchable metadata. Key entities in the dataset include '''person, skill, session, track, composition, and issue'''. There are 19 tables in the dataset representing various relationships between those entities. Below are brief descriptions for each of the 19 tables (table names in alphabetical order, numbers at the end showing the number of rows in each table): | |

| − | + | *'''composition: '''Compositions, which may be recorded as tracks at sessions. (7,104) | |

| − | + | *'''composition_composer: '''Associations between compositions and their composers. (8,622) | |

| + | * '''composition_lyricist: '''Associations between compositions and their lyricists. (880) | ||

| + | * ''' composition_title: '''Alternative titles for compositions. (811) | ||

| + | *''' issue: '''Releases or issues of tracks e.g. as albums. (545) | ||

| + | *'''issue_leader: '''Associations between releases and their leaders. (610) | ||

| + | *'''issue_overdub_people: '''Associations between overdubbed releases and people working on them. (17) | ||

| + | *'''issue_track: '''Associations between releases and the included tracks. (3,769) | ||

| + | *'''person: '''People associated with musical recordings, including musicians and composers. (5,734) | ||

| + | * '''person_ethnicity: '''Association of people with ethnic descriptions. (70) | ||

| + | *'''person_session_skill: '''Correlation between people, sessions, and skills or instruments. (21,424) | ||

| + | *'''person_skill: '''Correlation between people and primary skills or instruments. (6,450) | ||

| + | *'''person_track_skill: '''Variation from sessions in correlation between people, tracks, and skills or instruments. (7,044) | ||

| + | *'''session: '''Recording sessions, at which one or more musicians produced one or more tracks. (2,711) | ||

| + | * '''session_leader: '''Associations between sessions and their leader(s). (3,123) | ||

| + | * '''skill: '''Skills associated with musical recordings, including instruments played, conducting, composing. (209) | ||

| + | *'''track: '''Tracks laid down at recording sessions by musicians. (15,361) | ||

| + | *'''track_composition: '''Associations between tracks and compositions. (15,672) | ||

| + | *'''track_soloist: '''Associations between tracks and soloists. (630) | ||

| − | + | [[2015:GC15UX:JDISC_Schema]] presents more details about each table. | |

| − | + | ==ER Diagram of the Schema== | |

| − | + | [[File:jdisc_schema.png|400px]] | |

| − | + | [https://www.music-ir.org/mirex/gc15ux_jdisc/jdisc_schema.pdf Click to see the full version of the diagram (PDF)] | |

=Download the Dataset= | =Download the Dataset= | ||

=Participating Systems= | =Participating Systems= | ||

''Unlike conventional MIREX tasks, participants are not asked to submit their systems. Instead, the systems will be hosted by their developers. All participating systems need to be constructed as websites accessible to users through normal web browsers. Participating teams will submit the URLs to their systems to the GC15UX team.'' | ''Unlike conventional MIREX tasks, participants are not asked to submit their systems. Instead, the systems will be hosted by their developers. All participating systems need to be constructed as websites accessible to users through normal web browsers. Participating teams will submit the URLs to their systems to the GC15UX team.'' | ||

| − | To ensure a consistent experience, evaluators will see participating systems in a fixed-size window: '''1024x768'''. Please test your system for this screen size. | + | To ensure a consistent experience, evaluators will see participating systems in a fixed-size window: '''1024x768'''. Please test your system for this screen size. |

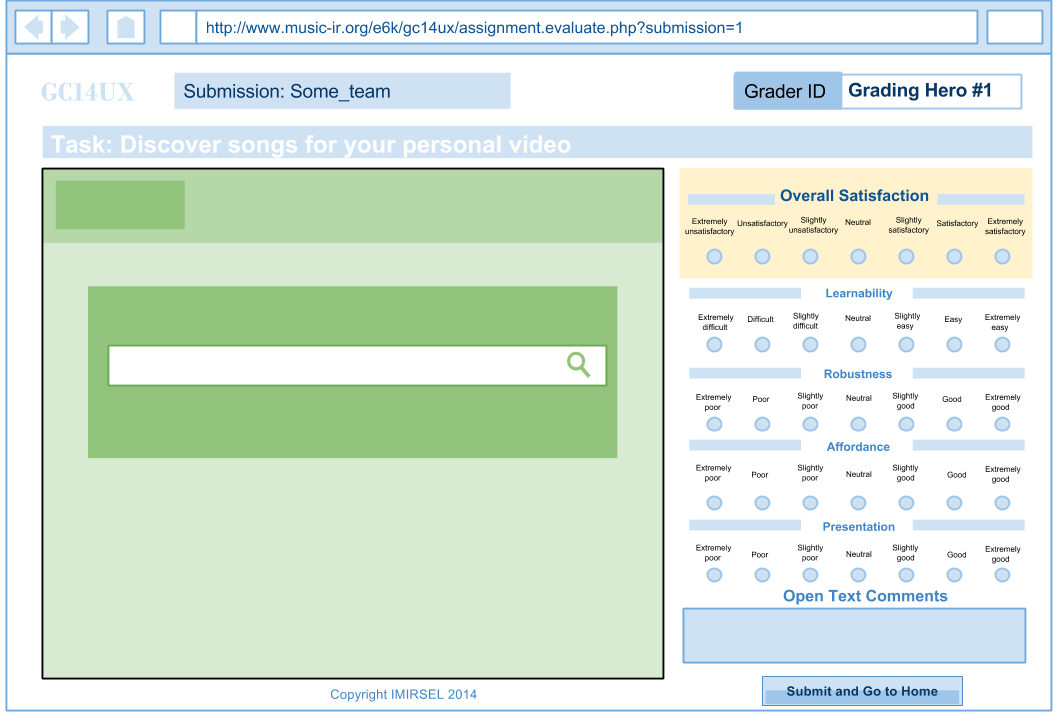

See the [[#Evaluation Webforms]] below for a better understanding of our E6K-inspired evaluation system design. | See the [[#Evaluation Webforms]] below for a better understanding of our E6K-inspired evaluation system design. | ||

| Line 514: | Line 111: | ||

==Tasks for Evaluators== | ==Tasks for Evaluators== | ||

| + | The final motivating task will be defined after ISMIR 2015. Because this dataset has strong and interesting networking information, and no underlying audio, we should define a task that best fits this state of affairs. | ||

| + | Below is what we framed for the GC15UX:Jamendo task to give you an idea to start thinking about possible task definitions. | ||

| + | ===GC15UX:Jamendo Task Definition=== | ||

''To motivate the evaluators, a defined yet open task is given to the evaluators: | ''To motivate the evaluators, a defined yet open task is given to the evaluators: | ||

| − | + | ''You need to put together a playlist for a particular event (e.g., dinner party at your house, workout session). Try to use the assigned system to make playlists for at least a couple of different events.'' | |

| − | The task is to ensure that evaluators have a (more or less) consistent goal when they interact with the systems. The goal is flexible and authentic to the evaluators' purposes ("music for their own situation"). As the task is not too specific, evaluators can potentially look for a wide range of music in terms of genre, mood and other aspects. This allows great flexibility and virtually unlimited possibility in system or service design. | + | ''The task is to ensure that evaluators have a (more or less) consistent goal when they interact with the systems. The goal is flexible and authentic to the evaluators' purposes ("music for their own situation"). As the task is not too specific, evaluators can potentially look for a wide range of music in terms of genre, mood and other aspects. This allows great flexibility and virtually unlimited possibility in system or service design. '' |

| − | Another important consideration in designing the task is the music collection available for this GC15UX: the Jamendo collection. Jamendo music is not well-known to most users/evaluators, whereas many more commonly seen music information tasks are more or less influenced by users' familiarity with the songs and song popularity. Through this task of "finding (copyright-free) background music for a self-made video", we strive to minimize the need to look for familiar or popular music.'' | + | ''Another important consideration in designing the task is the music collection available for this GC15UX: the Jamendo collection. Jamendo music is not well-known to most users/evaluators, whereas many more commonly seen music information tasks are more or less influenced by users' familiarity with the songs and song popularity. Through this task of "finding (copyright-free) background music for a self-made video", we strive to minimize the need to look for familiar or popular music.'' |

==Evaluation Results== | ==Evaluation Results== | ||

| Line 539: | Line 141: | ||

This year GC15UX:JDISC adopts the two-phase model with two evaluations. The first phase will end by the ISMIR conference and we will disclose preliminary results at the conference. Then, phase II will start. Participating developers can continue improving their systems based on the feedback from the first phase and another round of evaluation will be conducted in February. We believe that this model serves the developers well since it is in accordance with the iterative nature of user-centered design. In this way, the developers will also have enough time to develop their complete MIR systems. | This year GC15UX:JDISC adopts the two-phase model with two evaluations. The first phase will end by the ISMIR conference and we will disclose preliminary results at the conference. Then, phase II will start. Participating developers can continue improving their systems based on the feedback from the first phase and another round of evaluation will be conducted in February. We believe that this model serves the developers well since it is in accordance with the iterative nature of user-centered design. In this way, the developers will also have enough time to develop their complete MIR systems. | ||

| − | * | + | *Tentative Deadline: 15 January 2016 |

==What to Submit== | ==What to Submit== | ||

| Line 552: | Line 152: | ||

:Xiao Hu, University of Hong Kong (ISMIR2014 co-chair) | :Xiao Hu, University of Hong Kong (ISMIR2014 co-chair) | ||

:Jin Ha Lee, University of Washington (ISMIR2014 program co-chair) | :Jin Ha Lee, University of Washington (ISMIR2014 program co-chair) | ||

:David Bainbridge, Waikato University, New Zealand | :David Bainbridge, Waikato University, New Zealand | ||

:Christopher R. Maden, University of Illinois | :Christopher R. Maden, University of Illinois | ||

Latest revision as of 16:06, 18 November 2015

Purpose

Holistic, user-centered evaluation of the user experience in interacting with complete, user-facing music information retrieval (MIR) systems.

Goals

- To inspire the development of complete MIR systems.

- To promote the notion of user experience as a first-class research objective in the MIR community.

About J-DISC

J-DISC (http://jdisc.columbia.edu) is a resource for searching and exploring jazz recordings created by the Center for Jazz Studies at Columbia University. It is organized to present complete information on jazz recording sessions, and merge a large corpus of session data into a single easily accessible repository, in a manner that can be easily searched, cross-searched, navigated and cited. In addition to the focus on recording artist/leaders of traditional discography, J-DISC incorporates extensive cultural, geographic, biographical, composer and studio information that can also be easily searched and accessed.

Dataset

J-DISC contains fully structured and searchable metadata. Key entities in the dataset include person, skill, session, track, composition, and issue. There are 19 tables in the dataset representing various relationships between those entities. Below are brief descriptions for each of the 19 tables (table names in alphabetical order, numbers at the end showing the number of rows in each table):

- composition: Compositions, which may be recorded as tracks at sessions. (7,104)

- composition_composer: Associations between compositions and their composers. (8,622)

- composition_lyricist: Associations between compositions and their lyricists. (880)

- composition_title: Alternative titles for compositions. (811)

- issue: Releases or issues of tracks e.g. as albums. (545)

- issue_leader: Associations between releases and their leaders. (610)

- issue_overdub_people: Associations between overdubbed releases and people working on them. (17)

- issue_track: Associations between releases and the included tracks. (3,769)

- person: People associated with musical recordings, including musicians and composers. (5,734)

- person_ethnicity: Association of people with ethnic descriptions. (70)

- person_session_skill: Correlation between people, sessions, and skills or instruments. (21,424)

- person_skill: Correlation between people and primary skills or instruments. (6,450)

- person_track_skill: Variation from sessions in correlation between people, tracks, and skills or instruments. (7,044)

- session: Recording sessions, at which one or more musicians produced one or more tracks. (2,711)

- session_leader: Associations between sessions and their leader(s). (3,123)

- skill: Skills associated with musical recordings, including instruments played, conducting, composing. (209)

- track: Tracks laid down at recording sessions by musicians. (15,361)

- track_composition: Associations between tracks and compositions. (15,672)

- track_soloist: Associations between tracks and soloists. (630)

2015:GC15UX:JDISC_Schema presents more details about each table.
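The way the association tables tie the key entities together can be illustrated with a small query. The following is a minimal sketch only: it builds a toy in-memory SQLite database with four of the tables listed above, and every column name (`id`, `name`, `title`, `person_id`, `session_id`, `skill_id`) as well as the sample rows are assumptions made for illustration. The actual columns are documented on the schema page.

```python
import sqlite3

# Toy stand-in for four J-DISC tables. Column names and rows are
# hypothetical; only the table names come from the dataset description.
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE person  (id INTEGER PRIMARY KEY, name TEXT);
CREATE TABLE skill   (id INTEGER PRIMARY KEY, name TEXT);
CREATE TABLE session (id INTEGER PRIMARY KEY, title TEXT);
CREATE TABLE person_session_skill (
    person_id  INTEGER REFERENCES person(id),
    session_id INTEGER REFERENCES session(id),
    skill_id   INTEGER REFERENCES skill(id)
);
""")
conn.executemany("INSERT INTO person VALUES (?, ?)",
                 [(1, "Player A"), (2, "Player B")])
conn.executemany("INSERT INTO skill VALUES (?, ?)",
                 [(1, "piano"), (2, "bass")])
conn.execute("INSERT INTO session VALUES (10, 'Example session')")
conn.executemany("INSERT INTO person_session_skill VALUES (?, ?, ?)",
                 [(1, 10, 1), (2, 10, 2)])


def session_personnel(conn, session_id):
    """Return (person name, skill name) pairs for one session."""
    return conn.execute(
        """
        SELECT p.name, s.name
        FROM person_session_skill AS pss
        JOIN person AS p ON p.id = pss.person_id
        JOIN skill  AS s ON s.id = pss.skill_id
        WHERE pss.session_id = ?
        ORDER BY p.name, s.name
        """,
        (session_id,),
    ).fetchall()


for name, skill in session_personnel(conn, 10):
    print(f"{name}: {skill}")
```

The same join pattern would apply to the other association tables (for example track_composition or issue_track), again assuming foreign-key columns along these lines.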

ER Diagram of the Schema

Click to see the full version of the diagram (PDF): https://www.music-ir.org/mirex/gc15ux_jdisc/jdisc_schema.pdf

Download the Dataset

Participating Systems

Unlike conventional MIREX tasks, participants are not asked to submit their systems. Instead, the systems will be hosted by their developers. All participating systems need to be constructed as websites accessible to users through normal web browsers. Participating teams will submit the URLs to their systems to the GC15UX team.

To ensure a consistent experience, evaluators will see participating systems in a fixed-size window: 1024x768. Please test your system for this screen size.

See the Evaluation Webforms section below for a better understanding of our E6K-inspired evaluation system design.

Potential Participants

Please put your names and email contacts in the following table. It is encouraged that you give your team a cool name!

| (Cool) Team Name | Name(s) | Email(s) |

|---|---|---|

Evaluation

As the name of the Grand Challenge indicates, the evaluation will be user-centered. All systems will be used by a number of human evaluators and rated by them on several of the most important criteria for evaluating user experience.

Criteria

Note that the evaluation criteria or their descriptions may change slightly in the months leading up to the submission deadline, as we test and work to improve them.

Given that GC15UX is all about how users perceive their experiences of the systems, we intend to capture user perceptions in a minimally intrusive manner and not to burden the users/evaluators with too many questions or required data inputs. The following criteria are grounded in the literature of Human Computer Interaction (HCI) and User Experience (UX), with careful consideration given to striking a balance between being comprehensive and minimizing evaluators' cognitive load.

Evaluators will rate systems on the following criteria:

- Overall satisfaction: How would you rate your overall satisfaction with the system?

Very unsatisfactory / Unsatisfactory / Slightly unsatisfactory / Neutral / Slightly satisfactory / Satisfactory / Very satisfactory

- Aesthetics: How would you rate the visual attractiveness of the system?

Very Poor / Poor / Slightly Poor / Neutral / Slightly Good / Good / Excellent

- Ease of use: How easy was it to figure out how to use the system?

Very difficult / Difficult / Slightly difficult / Neutral / Slightly easy / Easy / Very easy

- Clarity: How well does the system communicate what is going on?

Very Poor / Poor / Slightly Poor / Neutral / Slightly Good / Good / Excellent

- Affordances: How well does the system allow you to perform what you want to do?

Very Poor / Poor / Slightly Poor / Neutral / Slightly Good / Good / Excellent

- Performance: Does the system work efficiently and without bugs/glitches?

Very Poor / Poor / Slightly Poor / Neutral / Slightly Good / Good / Excellent

- Open Text Feedback: An open-ended question is provided for evaluators to give feedback if they wish to do so.

Evaluators

Evaluators will be users aged 18 and above. For this round, evaluators will be drawn primarily from the MIR community through solicitations via the ISMIR-community mailing list. The Evaluation Webforms developed by the GC15UX team will ensure that all participating systems receive an equal number of evaluators.
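How the webforms balance evaluators across systems is not described here. One simple way to achieve an equal number of evaluators per system is to hand each new evaluator the system that currently has the fewest. The sketch below is an assumption for illustration, not the GC15UX implementation; the function name `assign_evaluators` and the evaluator and system identifiers are hypothetical.

```python
def assign_evaluators(evaluators, systems, per_evaluator=1):
    """Give each evaluator the systems that currently have the fewest evaluators."""
    counts = {system: 0 for system in systems}
    assignments = {}
    for evaluator in evaluators:
        # sorted() is stable, so ties are broken by the order of `systems`.
        chosen = sorted(systems, key=lambda s: counts[s])[:per_evaluator]
        for system in chosen:
            counts[system] += 1
        assignments[evaluator] = chosen
    return assignments, counts


assignments, counts = assign_evaluators(
    ["e1", "e2", "e3", "e4"], ["system-a", "system-b"]
)
print(assignments)
print(counts)
```

With this scheme the per-system counts never differ by more than one, and they are exactly equal whenever the total number of assignments is a multiple of the number of systems.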

Tasks for Evaluators

The final motivating task will be defined after ISMIR 2015. Because this dataset has strong and interesting networking information, and no underlying audio, we should define a task that best fits this state of affairs.

Below is what we framed for the GC15UX:Jamendo task to give you an idea to start thinking about possible task definitions.

GC15UX:Jamendo Task Definition

To motivate the evaluators, a defined yet open task is given to the evaluators:

You need to put together a playlist for a particular event (e.g., dinner party at your house, workout session). Try to use the assigned system to make playlists for at least a couple of different events.

The task is to ensure that evaluators have a (more or less) consistent goal when they interact with the systems. The goal is flexible and authentic to the evaluators' purposes ("music for their own situation"). As the task is not too specific, evaluators can potentially look for a wide range of music in terms of genre, mood and other aspects. This allows great flexibility and virtually unlimited possibility in system or service design.

Another important consideration in designing the task is the music collection available for this GC15UX: the Jamendo collection. Jamendo music is not well-known to most users/evaluators, whereas many more commonly seen music information tasks are more or less influenced by users' familiarity with the songs and song popularity. Through this task of "finding (copyright-free) background music for a self-made video", we strive to minimize the need to look for familiar or popular music.

Evaluation Results

Statistics of the scores given by all evaluators will be reported: mean, average deviation. Meaningful text comments from the evaluators will also be reported.
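The two reported statistics can be computed as in the sketch below. It assumes that the seven-point answers are coded as integers 1 (lowest) to 7 (highest) and that "average deviation" means the mean absolute deviation from the mean; neither the coding nor the sample scores come from the GC15UX team.

```python
from statistics import mean


def summarize(scores):
    """Return (mean, average absolute deviation from the mean)."""
    m = mean(scores)
    avg_dev = mean([abs(s - m) for s in scores])
    return m, avg_dev


# Hypothetical ratings for one criterion, coded 1-7.
example_scores = [5, 6, 7, 4, 3]
score_mean, score_avg_dev = summarize(example_scores)
print(f"mean = {score_mean}, average deviation = {score_avg_dev:.2f}")
```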

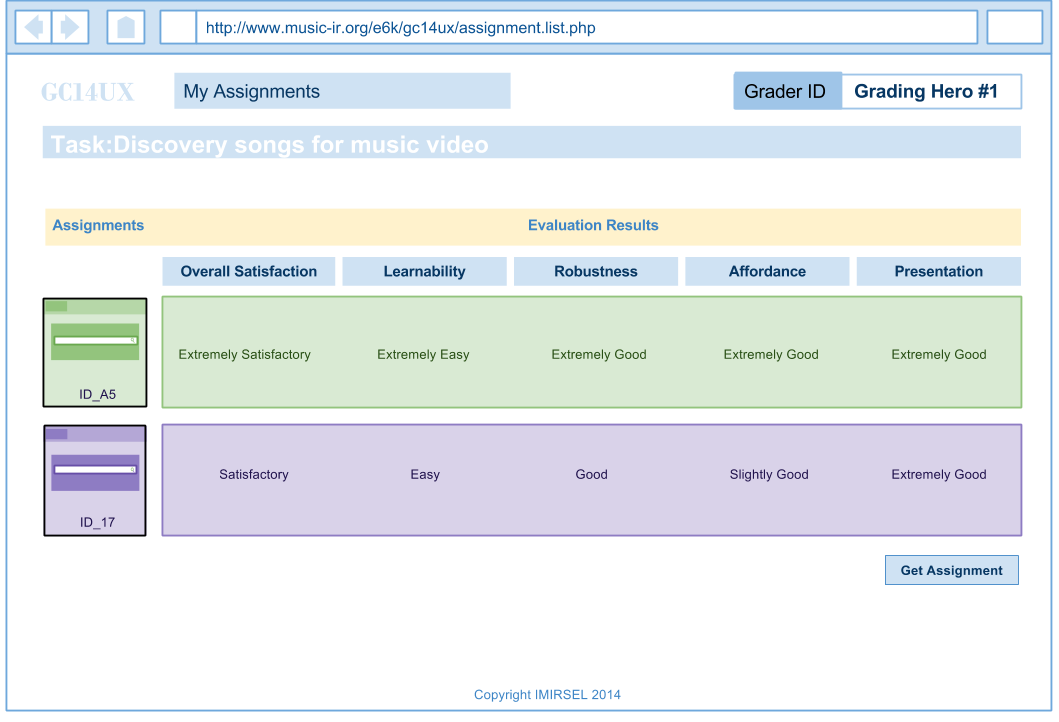

Evaluation Webforms

Graders can take as many assignments as they wish on the My Assignments page. They can return to the evaluation page at any time by clicking the thumbnail of the submission.

To assist the evaluators and minimize their burden, the GC15UX team will provide a set of evaluation forms which wrap around the participating systems. As shown in the following image, the evaluation webforms are for scoring the participating systems, with their client interfaces embedded within an iframe on the left side of the webform.

Organization

Important Dates

This year GC15UX:JDISC adopts the two-phase model with two evaluations. The first phase will end by the ISMIR conference and we will disclose preliminary results at the conference. Then, phase II will start. Participating developers can continue improving their systems based on the feedback from the first phase and another round of evaluation will be conducted in February. We believe that this model serves the developers well since it is in accordance with the iterative nature of user-centered design. In this way, the developers will also have enough time to develop their complete MIR systems.

- Tentative Deadline: 15 January 2016

What to Submit

A URL to the participating system.

Contacts

The GC15UX team consists of:

- J. Stephen Downie, University of Illinois (MIREX director)

- Xiao Hu, University of Hong Kong (ISMIR2014 co-chair)

- Jin Ha Lee, University of Washington (ISMIR2014 program co-chair)

- David Bainbridge, Waikato University, New Zealand

- Christopher R. Maden, University of Illinois

- Kahyun Choi, University of Illinois

- Peter Organisciak, University of Illinois

- Yun Hao, University of Illinois

Inquiries, suggestions, questions, comments are all highly welcome! Please contact Prof. Downie [1] or anyone in the team.