2006:Evalutron6000 Walkthrough For Symbolic Melodic Similarity

IMIRSELBot (talk | contribs) m (Bot: Fixing image links) |

|||

| (51 intermediate revisions by 5 users not shown) | |||

| Line 1: | Line 1: | ||

| − | == | + | ==Special Comments about Grading the Symbolic Melodic Similarity Submissions == |

| − | === | + | ===Special Update on Candidate Files (3 September 2006)=== |

| − | + | '''''Nota Bene''''' (The following description of the Mixed and Karaoke candidates has been updated, 3 September 2006). The length of candidates for the polyphonic task, drawn from the "Mixed" and "Karaoke" collections (Queries #7 through #17) is now more consistently in the 30 second range. We discovered that the timing information returned was not a reliable indicator of where the "best match" was found in each of the candidates. To correct for this, we took the "best match" starting information and then "padded" the candiate on both sides of the "best match" start point. Thus, the first 10 or so seconds of each candidate might, or might not, represent a strong "hit". The last 10 or so might also start "wandering away" from the "best match" region. Therfore, we recommend that you listen to the entire candidate before giving your grade and to be mindful that the "best match" section in each candidate is a bit slippery. | |

| − | A screen resolution of 1024 X 768 the minimal advised. Higher resolutions appear to work well too. We have noted some odd behaviour if you adjust your resolution in mid-session, so probably best | + | ===Task Description: Focusing on Melody=== |

| + | The goal of Symbolic Melodic Similarity Task is to evaluate how well various algorithms can retrieve results that are MELODICALLY similar to a given query. You will find in the candidate files a variety of different instrumentations as set by the creators of the MIDI files. We need you to look beyond the differences in timbre and instrumentation in assigning your grading scores. | ||

| + | |||

| + | ===Grading Expectations and "Reasonableness"=== | ||

| + | For each candidate, we need you to assign '''BOTH''' a Broad Category score '''AND''' a Fine Score (i.e., a numeric grade between 0 and 10). You have the freedom to make whatever associations you desire between a particular Broad Category score and its related Fine Score. In fact, we expect to see variations across evaluators with regard to the relationships between Broad Categories and Fine Scores as this is a normal part of human subjectivity. However, we will be using the two different types of scores to do important inter-related post-Evalutron calculations so, please, do be thoughtful in selecting your Broad Categories and related Fine Scores. What we are really asking here is that you apply a level of "reasonableness" to both your scores and your associations. For example, if you score a candidate in the VERY SIMILAR category, a Fine Score of 2.1 would not be, by most standards, "reasonable". Same applies at the other extreme. For example, a Broad Category score of NOT SIMILAR should not be associated with a Fine Score of, say, 7.2 or 8.4, etc. | ||

| + | |||

| + | Evalutron 6000 design and use details follow below and should clarify for you the use of the Broad Category and Fine Score input systems. | ||

| + | |||

| + | ====Clarification==== | ||

| + | A recent question about the Evalutron scoring system prompted the following response from me. In case others were confused, I think it best to share what I said with the rest of the grading community. | ||

| + | |||

| + | |||

| + | '''My response''': | ||

| + | The "Broad Category" and the "Fine Score" are meant to express the *same* grade only in different ways. Think of exams you might have taken: Some professors might give an A or B or F (i.e., Broad Category). On the same exam, another professor might want to express the same grade as 99%, 78% or 20% (i.e., Fine Score). | ||

| + | |||

| + | We need the graders to be "two professors in one" . We are asking each grader to be a "broad grading" professor and also a "fine grading" professor. On our fine scale, 0 is meant to represent complete failure and 10 a perfectly similar success. With the set of paired "Broad" and "Fine" scores we can can do different kinds of post-Evalutron analyses with each kind of score. Also, we want to be able to take both kinds of scores and analyze them to see what kind of fine scores are associated with each broad score. | ||

| + | |||

| + | ===Coming and Going: Avoiding Grader Fatigue=== | ||

| + | Listening to, and then comparing, hundreds of audio files is very tiring. We have built into the back-end of the Evalutron 6000 system a rather robust database system that records in near-real-time your grading scores. These scores are saved along with information about which queries and candidates you have yet to review. All this information is stored in association with your personal sign-in ID. This means you can break up your grading over several days at times convenient and productive to you. In fact, we recommend that you not try to tackle your "assignment" in one big chunk as fresh ears are happy ears and happy ears make for better evaluations. | ||

| + | |||

| + | ==Basic Technical Requirements== | ||

| + | In order to use the Evalutron 6000 you will need a modern web browser (e.g., Firefox, Mozilla, Safari, Internet Explorer ) that supports JavaScript (ECMAScript) and Cookies. Evalutron has been tested on Windows XP, MacOS X, RedHat Linux, and Solaris. We have found that the combination of Firefox and Flash Player to be most stable across platforms. Despite our best efforts, the use of Internet Explorer continues to generate seemingly random errors and we ask that you avoid using Internet Explorer. For example, | ||

| + | the combination of Internet Explorer and QuickTime Player simply refuses to work properly. Also the combination of Safari, MacOS X, and QuickTime does not work properly. Similarly, Media Player has worked well for some testers but very erratically for others. A decent amount of bandwidth is also advisable to minimize download times (i.e., DSL, cable modem and better). | ||

| + | |||

| + | A screen resolution of 1024 X 768 the minimal advised. Higher resolutions appear to work well too. We have noted some odd behaviour if you adjust your resolution in mid-session, so it is probably best not to do this. Adjusting font size via you browser can sometimes make things look a bit tidier and does not seem to have adverse effects. | ||

In general, if you are having trouble, please try accessing the Evalutron 6000 using another machine/platform/browser/player combination. If you are still having difficulty, please contact | In general, if you are having trouble, please try accessing the Evalutron 6000 using another machine/platform/browser/player combination. If you are still having difficulty, please contact | ||

| − | mrx-com09@lists.lis.uiuc.edu. | + | [mailto:mrx-com09@lists.lis.uiuc.edu]. |

| − | |||

| − | + | ==Getting Started== | |

When first visiting the Evalutron 6000 homepage, you will see a page similar to this (Fig. 1). | When first visiting the Evalutron 6000 homepage, you will see a page similar to this (Fig. 1). | ||

| − | [[Image: | + | [[Image:2006_e6ksms_home_page_scaled.png]] |

'''Figure 1. Evalutron 6000 start page.''' | '''Figure 1. Evalutron 6000 start page.''' | ||

| + | ==Four Important Issues Before Proceeding== | ||

| + | |||

| + | # This system is NOT open to the general public. Due to the legalities imposed upon us by the University of Illinois and the US Federal Government, we are allowed to accept only those graders that have a stake in Music Information Retrieval and Music Digital Library research. Acceptable persons include MIREX participants, subscribers to the music-ir[AT]ircam.fr list, ISMIR attendees, computational musicologists, music technology researchers, etc. If you are in doubt about your acceptability, please contact Prof. Downie at [mailto:jdownie@uiuc.edu]. | ||

| + | # Do NOT create an account for yourself if you are merely curious to see what the Evalutron 6000 is set up to do. The account creation process scientifically distributes Query and Candidate sets to each account holder. The act of creating a "curiousity account" seriously disrupts the adminstration of the results data we are collecting. | ||

| + | # IF YOU ARE REALLY CURIOUS: We have created a small "sandbox" version of the AudioSim Evalutron with fake data at: https://music-ir.org/eval6000/sandbox. You still must go through all the official procedures but your scores wil not affect anything as the data is "made up". | ||

| + | # Please do NOT create multiple accounts for yourself for this particular evaluation task. If you are also grading the Audio Music Similarity and Retrieval (AudioSim) task, you will be creating another task-specific account for AudioSim in the AudioSim space. The system is designed to track individuals based upon unique sign-in IDs. The creation of multiple accounts for one person causes the system to improperly distribute the assignments to your fellow graders. If you have difficulties, such as forgetting your sign-in password, please contact us at [mailto:mrx-com09@lists.lis.uiuc.edu] or try the "Forgot Your Password?" link found at the top of the homepage (Fig 1). | ||

| + | |||

| + | ==Registration== | ||

First you must register a new account. Click on the "Register" link (listed under Step 2) on the page to create an account. | First you must register a new account. Click on the "Register" link (listed under Step 2) on the page to create an account. | ||

| − | The registration page is fairly straightforward (Fig. 2). Required fields are marked in blue with | + | The registration page is fairly straightforward (Fig. 2). Required fields are marked in blue with asterisks. You can create any username and password you wish. Passwords must be at least 6 characters long and are case-sensitive. Before completing the registration, you must read and agree to the terms of the Informed Consent document. The evaluation, because it is using human judgements of similarity, is considered a human-subjects research project and the Evalutron is basically a survey instrument. To indicate your consent to participate in the evaluation, check the "I Agree" checkbox below the informed consent document. |

If you have questions about your rights as a subject in this research project, you should contact the UIUC IRB office (http://www.irb.uiuc.edu) for more information. The research protocol for this project is IRB# 07066. | If you have questions about your rights as a subject in this research project, you should contact the UIUC IRB office (http://www.irb.uiuc.edu) for more information. The research protocol for this project is IRB# 07066. | ||

| − | [[Image: | + | [[Image:2006_e6ksms_registration_page_scaled.png]] |

'''Figure 2. Evalutron 6000 registration page.''' | '''Figure 2. Evalutron 6000 registration page.''' | ||

| − | After completing the Registration, the system will ask you to sign-in with your newly created username and password. After signing-in, you can begin evaluation (Fig. 3). To start the evaluation process, click on the "Start Evaluation" link on the homepage. You will be presented with several options for | + | ==Selecting Your Audio Player== |

| + | After completing the Registration, the system will ask you to sign-in with your newly created username and password. After signing-in, you can begin evaluation (Fig. 3). To start the evaluation process, click on the "Start Evaluation" link on the homepage. You will be presented with several options for which media player you want to use. We have invested considerable effort coding various interfaces to be compatible with the maximum number of browsers, platforms, and players. Most users should be able to use the Flash MP3 Player option -- which we've found to work the best. The Windows Media Player and QuickTime options should also work in most modern browsers. We have noted that the Media Player does introduce some start-up lags when starting a new query set which we believe is caused by the way Media Player downloads the MP3 files. We have also noted that the combination of Internet Explorer and QuickTime is simply not working. | ||

| − | [[Image: | + | [[Image:2006_e6ksms_options_page_scaled.png]] |

'''Figure 3. Audio player selection page.''' | '''Figure 3. Audio player selection page.''' | ||

| − | Once you have selected a player configuration, you'll see a query evaluation page (Fig. 4). On this page, you will see the query player and the list of candidate players. The queries and candidates drawn from the RISM collection are fairly short (Queries # | + | ==Evaluation Pages: Queries and Candidate Lists== |

| + | Once you have selected a player configuration, you'll see a query evaluation page (Fig. 4). On this page, you will see the query player and the list of candidate players. The queries and candidates drawn from the RISM collection (Queries #1 through #6) are fairly short - most candidates are about 4-5 seconds long. '''''Nota Bene''''' (The following description of the Mixed and Karaoke candidates has been updated, 3 September 2006). The length of candidates for the polyphonic task, drawn from the "Mixed" and "Karaoke" collections (Queries #7 through #17) is now more consistently in the 30 second range. We discovered that the timing information returned was not a reliable indicator of where the "best match" was found in each of the candidates. To correct for this, we took the "best match" starting information and then "padded" the candiate on both sides of the "best match" start point. Thus, the first 10 or so seconds of each candidate might, or might not, represent a strong "hit". The last 10 or so might also start "wandering away" from the "best match" region. Therfore, we recommend that you listen to the entire candidate before giving your grade and to be mindful that the "best match" section in each candidate is a bit slippery. | ||

| − | [[Image: | + | [[Image:2006_e6ksms_evaluation_page_scaled.png]] |

'''Figure 4. Sample evaluation page.''' | '''Figure 4. Sample evaluation page.''' | ||

| − | Please do not be puzzled if your first query assignment is not Query #1 or if your Candidate List has candidates in seemingly random order: This is deliberate! The Evalutron 6000 is designed to build customized randomized lists and orderings for each grader to minimize fatigue and ordering effects. While you have the right to jump around in the system to do your evaluations in any order you like, we would appreciate it if you followed the suggested ordering given by the Evalutron 6000's randomizer. The "Previous Query" and "Next Query" buttons visible Figure 4 are designed to guide you through the randomized Query List ordering. | + | Please do not be puzzled if your first query assignment is not Query #1 or if your Candidate List has candidates in a seemingly random order: This is deliberate! The Evalutron 6000 is designed to build customized randomized lists and orderings for each grader to minimize fatigue and ordering effects. While you have the right to jump around in the system to do your evaluations in any order you like, we would appreciate it if you followed the suggested ordering given by the Evalutron 6000's randomizer. The "Previous Query" and "Next Query" buttons visible in Figure 4 are designed to guide you through the randomized Query List ordering. |

| − | Please note that the list of candidates is longer than the visible page (i.e., there are more candidates than may be immediately visible on the page). Please scroll to the bottom of the candidate list to make sure you have evaluated each song. | + | Please note that the list of candidates is longer than the visible page (i.e., there are more candidates than may be immediately visible on the page). Please scroll to the bottom of the candidate list to make sure you have evaluated each song. You can use the "Align Player" button to move the position of the Query Player closer to any candidate file. |

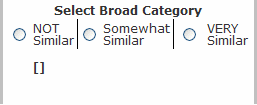

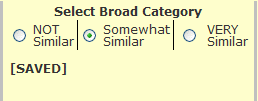

| − | The procedure for listening to a candidate is the same as listening to a query -- click the | + | The procedure for listening to a candidate is the same as listening to a query -- click the "Play Candidate" buttons to load the clip into the player and listen to it (Fig. 4). Once you have a feeling for how similar the candidate is to the query, click the "Not Similar," "Somewhat Similar" or "Very Similar" radio buttons to the right of the candidate. Note: you can continue to replay the query during candidate playback. |

| − | [[Image: | + | [[Image:2006_e6ksms_select_category.png]] |

'''Figure 5. Close up image of Broad Category selection buttons.''' | '''Figure 5. Close up image of Broad Category selection buttons.''' | ||

| Line 52: | Line 85: | ||

Depending on how you graded the candidate, you should see the candidate box react to your grade, indicating that the vote has been logged in the database (Fig. 6). If you indicated the song was "Somewhat Similar" the box will turn yellow and state "SAVED". If you indicated the song was "Very Similar" the box will turn green, "Not Similar" the box will turn red, and state "SAVED". | Depending on how you graded the candidate, you should see the candidate box react to your grade, indicating that the vote has been logged in the database (Fig. 6). If you indicated the song was "Somewhat Similar" the box will turn yellow and state "SAVED". If you indicated the song was "Very Similar" the box will turn green, "Not Similar" the box will turn red, and state "SAVED". | ||

| − | [[Image: | + | [[Image:2006_e6ksms_select_category_saved.png]] |

'''Figure 6. Close up image of Broad Category selection buttons with "Somewhat Similar" selected and "SAVED" automatically.''' | '''Figure 6. Close up image of Broad Category selection buttons with "Somewhat Similar" selected and "SAVED" automatically.''' | ||

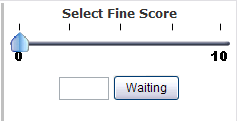

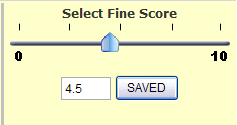

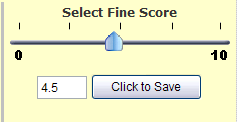

| − | We also need each grader to assign a fine-grained score for the similarity of the candidate to the query on | + | We also need each grader to assign a fine-grained score for the similarity of the candidate to the query on a scale of 0-10 (Fig. 7). More information about this process below. |

| − | [[Image: | + | [[Image:2006_e6ksms_select_score.png]] |

'''Figure 7. Close up image of the Fine Score selection scale.''' | '''Figure 7. Close up image of the Fine Score selection scale.''' | ||

| − | The system will automatically record the score when you let go of the | + | The system will automatically record the score when you let go of the scaler (Fig. 8). |

| − | [[Image: | + | [[Image:2006_e6ksms_select_score_saved.png]] |

'''Figure 8. Close up image of a Fine Score selection scale with a random score "SAVED".''' | '''Figure 8. Close up image of a Fine Score selection scale with a random score "SAVED".''' | ||

| + | |||

| + | You can also manually enter the score in the box, but in this case you MUST click the button labeled "Click to Save" to record your score (Fig. 9). After the score is saved, the scaler will automatically move to the correct position and the label on the button will change to "Saved" (Fig. 8). | ||

| + | |||

| + | [[Image:2006_e6ksms_select_score_before_save.png]] | ||

| + | |||

| + | '''Figure 9. Close up image of a Fine Score selection scale before "SAVED".''' | ||

You can always change your evaluation for any candidate by toggling the radio buttons. You can also go back and adjust the Fine Score selection scale. | You can always change your evaluation for any candidate by toggling the radio buttons. You can also go back and adjust the Fine Score selection scale. | ||

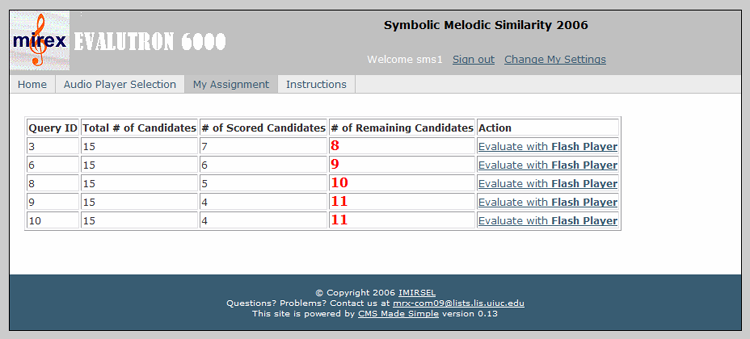

| − | When you have completed | + | When you have completed evaluating all of the candidates for a single query, you can click on the "Next Query" button at the top right of the evaluation page. This button will load a new Query and associated Candidate List for you to evaluate. Using the "My Assignment" tab, you can check the list of queries and candidates that were assigned to you, and also see how far you are in completing your evaluation task (Fig. 10). You must assign '''BOTH''' a Broad Category and a Fine Score for each candidate before the system registers it as a "completed" candidate. |

| + | |||

| − | [[Image: | + | [[Image:2006_e6ksms_my_assignment_scaled.png]] |

| − | '''Figure | + | '''Figure 10. My Assignment page. ''' |

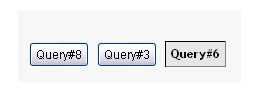

| − | You can also see a list of all of the queries you have been assigned at the bottom of each Candidate List page. You can return to any query by clicking on the button for that query here (Fig. | + | You can also see a list of all of the queries you have been assigned at the bottom of each Candidate List page. You can return to any query by clicking on the button for that query here (Fig. 11). You can re-evaluate any candidate for any query at any time, up to the closing of the evaluation system. |

| − | [[Image: | + | [[Image:2006_eval6_queries_detail.png]] |

| − | '''Figure | + | '''Figure 11. Close up image of Query List buttons which allow for revisiting of completed query/candidate sets. ''' |

Latest revision as of 21:34, 13 May 2010


Special Comments about Grading the Symbolic Melodic Similarity Submissions

Special Update on Candidate Files (3 September 2006)

Nota Bene (The following description of the Mixed and Karaoke candidates was updated on 3 September 2006.) The candidates for the polyphonic task, drawn from the "Mixed" and "Karaoke" collections (Queries #7 through #17), are now more consistently about 30 seconds long. We discovered that the returned timing information was not a reliable indicator of where the "best match" occurred in each candidate. To correct for this, we took the "best match" start point and then "padded" the candidate on both sides of it. As a result, the first 10 or so seconds of each candidate may or may not represent a strong "hit", and the last 10 or so seconds may start "wandering away" from the "best match" region. We therefore recommend that you listen to the entire candidate before giving your grade, and that you keep in mind that the "best match" section in each candidate is a bit slippery.
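The padding described above can be pictured with a small Python sketch. It assumes symmetric padding to a 30-second window around the reported "best match" start point, shifted as needed to stay inside the file; the page does not say exactly how much padding was added on each side, and the function name `padded_window` is hypothetical.

```python
def padded_window(best_match_start, file_length, target_length=30.0):
    """Return (start, end) in seconds of a clip padded around best_match_start.

    Hypothetical sketch: pads equally on both sides of the reported
    best-match start, then shifts the window to stay inside the file.
    """
    half = target_length / 2.0
    start = best_match_start - half
    end = best_match_start + half
    # If the window starts before the file does, shift it to the right.
    if start < 0:
        end -= start
        start = 0.0
    # If it now runs past the end of the file, shift it left (never below 0).
    if end > file_length:
        start = max(0.0, start - (end - file_length))
        end = file_length
    return start, end
```

Under this assumption, a match reported at 60 s in a 120-second file yields the clip from 45 s to 75 s, so only the middle of the clip is guaranteed to sit near the reported start point.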

Task Description: Focusing on Melody

The goal of the Symbolic Melodic Similarity Task is to evaluate how well various algorithms can retrieve results that are MELODICALLY similar to a given query. The candidate files use a variety of instrumentations, as set by the creators of the MIDI files. We need you to look beyond these differences in timbre and instrumentation when assigning your grades.

Grading Expectations and "Reasonableness"

For each candidate, we need you to assign BOTH a Broad Category score AND a Fine Score (i.e., a numeric grade between 0 and 10). You are free to make whatever associations you wish between a particular Broad Category score and its related Fine Score. In fact, we expect the relationship between Broad Categories and Fine Scores to vary across evaluators, as this is a normal part of human subjectivity. However, we will be using the two types of scores together in important post-Evalutron calculations, so please be thoughtful in selecting your Broad Categories and related Fine Scores. What we are really asking is that you apply a level of "reasonableness" to both your scores and their associations. For example, if you place a candidate in the VERY SIMILAR category, a Fine Score of 2.1 would not, by most standards, be "reasonable". The same applies at the other extreme: a Broad Category score of NOT SIMILAR should not be paired with a Fine Score of, say, 7.2 or 8.4.
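As an illustration of "reasonableness" only, the following Python sketch flags Broad/Fine pairs that fall outside a plausible range. The ranges in `PLAUSIBLE_RANGES` are assumptions chosen to agree with the examples above (2.1 for VERY SIMILAR, 7.2 or 8.4 for NOT SIMILAR); the guide deliberately leaves the exact association to each grader, and this page does not say the Evalutron enforces any such check.

```python
# Hypothetical Fine Score ranges per Broad Category. The guide does not
# define these; they are illustrative values consistent with its examples.
PLAUSIBLE_RANGES = {
    "NOT SIMILAR": (0.0, 4.0),
    "SOMEWHAT SIMILAR": (2.0, 8.0),
    "VERY SIMILAR": (6.0, 10.0),
}


def is_reasonable(broad, fine):
    """Return True if the Fine Score lies in the assumed range for its category."""
    if not 0.0 <= fine <= 10.0:
        raise ValueError("Fine Score must be between 0 and 10")
    low, high = PLAUSIBLE_RANGES[broad]
    return low <= fine <= high
```

The overlapping ranges reflect the point made above: graders may legitimately differ, so only clearly contradictory pairs are flagged.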

Details of the Evalutron 6000's design and use follow below and should clarify how to use the Broad Category and Fine Score input systems.

Clarification

A recent question about the Evalutron scoring system prompted the following response from me. In case others were confused, I think it best to share what I said with the rest of the grading community.

My response:

The "Broad Category" and the "Fine Score" are meant to express the *same* grade, only in different ways. Think of exams you might have taken: some professors might give an A, B or F (i.e., Broad Category), while another professor might express the same grades on the same exam as 99%, 78% or 20% (i.e., Fine Score).

We need the graders to be "two professors in one": each grader is both a "broad grading" professor and a "fine grading" professor. On our fine scale, 0 represents complete failure and 10 a perfectly similar success. With the set of paired "Broad" and "Fine" scores, we can do different kinds of post-Evalutron analyses with each kind of score. We also want to analyze both kinds of scores together to see what kind of Fine Scores are associated with each Broad Category.
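The kind of post-Evalutron analysis mentioned above, seeing which Fine Scores go with each Broad Category, could be sketched as follows. This is a hypothetical illustration, not the organizers' actual analysis code, and any sample pairs you feed it here would be made up.

```python
from collections import defaultdict
from statistics import mean


def fine_scores_by_category(pairs):
    """Summarise Fine Scores per Broad Category.

    pairs: iterable of (broad_category, fine_score) tuples.
    Returns {category: (count, mean, min, max)}.
    """
    groups = defaultdict(list)
    for broad, fine in pairs:
        groups[broad].append(fine)
    return {
        broad: (len(scores), mean(scores), min(scores), max(scores))
        for broad, scores in groups.items()
    }
```

For example, the made-up pairs `[("VERY SIMILAR", 9.0), ("VERY SIMILAR", 8.0), ("NOT SIMILAR", 1.0)]` summarise to a mean of 8.5 for VERY SIMILAR and 1.0 for NOT SIMILAR.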

Coming and Going: Avoiding Grader Fatigue

Listening to, and then comparing, hundreds of audio files is very tiring. We have built a rather robust database into the back-end of the Evalutron 6000 that records your grading scores in near-real-time. These scores are saved along with information about which queries and candidates you have yet to review, and all of this information is stored with your personal sign-in ID. This means you can break up your grading over several days, at times convenient and productive for you. In fact, we recommend that you not try to tackle your "assignment" in one big chunk: fresh ears are happy ears, and happy ears make for better evaluations.
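To make the save-and-resume behaviour concrete, here is a hypothetical Python sketch of a per-grader progress record that would be stored under each sign-in ID. The class and field names are assumptions, not the Evalutron 6000's actual database schema; the sketch encodes the rule from the grading guidelines that each candidate needs BOTH a Broad Category and a Fine Score.

```python
from dataclasses import dataclass, field


@dataclass
class GraderProgress:
    """Hypothetical per-grader record (not the Evalutron's real schema)."""

    assigned: set  # (query_id, candidate_id) pairs assigned to this grader
    broad: dict = field(default_factory=dict)  # item -> Broad Category
    fine: dict = field(default_factory=dict)   # item -> Fine Score (0-10)

    def save_broad(self, item, category):
        self.broad[item] = category

    def save_fine(self, item, score):
        self.fine[item] = score

    def remaining(self):
        # An item counts as done only once BOTH scores have been saved.
        return {i for i in self.assigned if i not in self.broad or i not in self.fine}


# Records keyed by sign-in ID, so a grader can resume on a later day.
progress_by_signin_id = {}
```

Saving after every click, as the page describes, means a session can end at any point and `remaining()` still reports exactly what is left to grade.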

Basic Technical Requirements

In order to use the Evalutron 6000 you will need a modern web browser (e.g., Firefox, Mozilla, Safari, Internet Explorer) that supports JavaScript (ECMAScript) and cookies. Evalutron has been tested on Windows XP, MacOS X, RedHat Linux, and Solaris. We have found the combination of Firefox and Flash Player to be the most stable across platforms. Despite our best efforts, Internet Explorer continues to generate seemingly random errors, so we ask that you avoid using it. For example, the combination of Internet Explorer and QuickTime Player simply refuses to work properly. The combination of Safari, MacOS X, and QuickTime also does not work properly. Similarly, Media Player has worked well for some testers but very erratically for others. A decent amount of bandwidth (e.g., DSL, cable modem, or better) is also advisable to minimize download times.
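The browser and player advice above can be summarised as a small lookup table. This Python sketch only encodes the combinations named on this page; the label strings and the `check_setup` helper are hypothetical.

```python
# Combinations this page reports as not working properly.
KNOWN_PROBLEMS = {
    ("Internet Explorer", "QuickTime"),
    ("Safari", "QuickTime"),  # reported on MacOS X
}
# The combination this page reports as most stable across platforms.
RECOMMENDED = ("Firefox", "Flash")


def check_setup(browser, player):
    """Return the page's advice for a browser/player pair (hypothetical labels)."""
    if (browser, player) == RECOMMENDED:
        return "recommended"
    if (browser, player) in KNOWN_PROBLEMS:
        return "known problem"
    if browser == "Internet Explorer":
        return "avoid browser"
    if player == "Media Player":
        return "may be erratic"
    return "no specific guidance"
```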

A screen resolution of 1024 x 768 is the minimum advised; higher resolutions appear to work well too. We have noticed some odd behaviour when the resolution is changed mid-session, so it is probably best not to do this. Adjusting the font size in your browser can sometimes make things look a bit tidier and does not seem to have adverse effects.

In general, if you are having trouble, please try accessing the Evalutron 6000 using another machine/platform/browser/player combination. If you are still having difficulty, please contact mrx-com09@lists.lis.uiuc.edu.

Getting Started

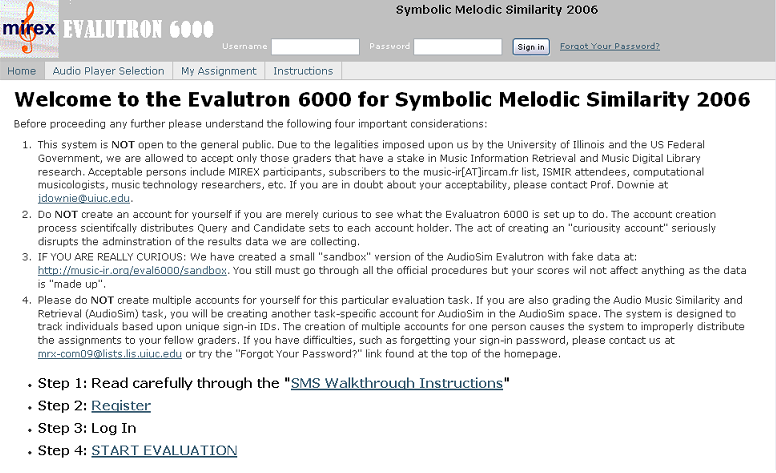

When first visiting the Evalutron 6000 homepage, you will see a page similar to this (Fig. 1).

Figure 1. Evalutron 6000 start page.

Four Important Issues Before Proceeding

- This system is NOT open to the general public. Due to legal requirements imposed upon us by the University of Illinois and the US Federal Government, we are allowed to accept only graders who have a stake in Music Information Retrieval and Music Digital Library research. Acceptable persons include MIREX participants, subscribers to the music-ir[AT]ircam.fr list, ISMIR attendees, computational musicologists, music technology researchers, etc. If you are in doubt about your eligibility, please contact Prof. Downie at jdownie@uiuc.edu.

- Do NOT create an account for yourself if you are merely curious to see what the Evalutron 6000 is set up to do. The account creation process scientifically distributes Query and Candidate sets to each account holder, so creating a "curiosity account" seriously disrupts the administration of the results data we are collecting.

- IF YOU ARE REALLY CURIOUS: We have created a small "sandbox" version of the AudioSim Evalutron with fake data at https://music-ir.org/eval6000/sandbox. You must still go through all the official procedures, but your scores will not affect anything, as the data is "made up".

- Please do NOT create multiple accounts for yourself for this particular evaluation task. If you are also grading the Audio Music Similarity and Retrieval (AudioSim) task, you will create a separate task-specific account in the AudioSim space. The system is designed to track individuals by their unique sign-in IDs, and multiple accounts for one person cause the system to distribute assignments improperly among your fellow graders. If you have difficulties, such as forgetting your sign-in password, please contact us at mrx-com09@lists.lis.uiuc.edu or try the "Forgot Your Password?" link at the top of the homepage (Fig. 1).

Registration

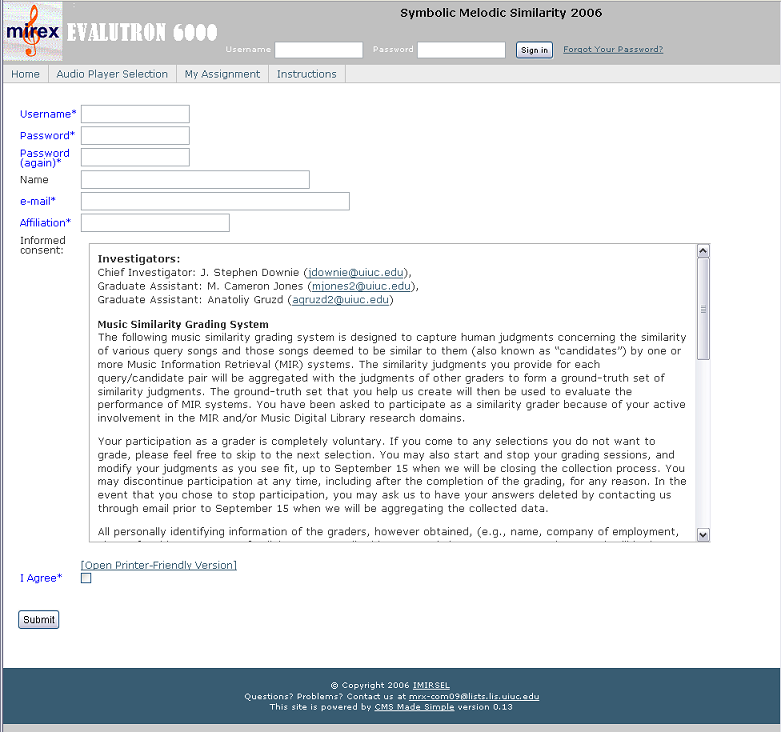

First you must register a new account. Click on the "Register" link (listed under Step 2) on the page to create an account.

The registration page is fairly straightforward (Fig. 2). Required fields are marked in blue with asterisks. You can create any username and password you wish; passwords must be at least 6 characters long and are case-sensitive. Before completing the registration, you must read and agree to the terms of the Informed Consent document. Because the evaluation uses human judgements of similarity, it is considered a human-subjects research project, and the Evalutron is essentially a survey instrument. To indicate your consent to participate in the evaluation, check the "I Agree" checkbox below the Informed Consent document.
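The registration constraints above (a required username, a password of at least 6 characters, and the "I Agree" consent checkbox) could be checked as in this hypothetical Python sketch; the function name and messages are illustrative, not the Evalutron's actual form validation.

```python
def validate_registration(username, password, agreed_to_consent):
    """Return a list of problems with a registration attempt (empty if OK).

    Hypothetical sketch of the rules described on this page.
    """
    errors = []
    if not username:
        errors.append("username is required")
    if len(password) < 6:
        errors.append("password must be at least 6 characters")
    if not agreed_to_consent:
        errors.append('you must check "I Agree" on the Informed Consent document')
    return errors
```

Because passwords are case-sensitive, "Secret1" and "secret1" would count as two different passwords when you sign in.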

If you have questions about your rights as a subject in this research project, you should contact the UIUC IRB office (http://www.irb.uiuc.edu) for more information. The research protocol for this project is IRB# 07066.

Figure 2. Evalutron 6000 registration page.

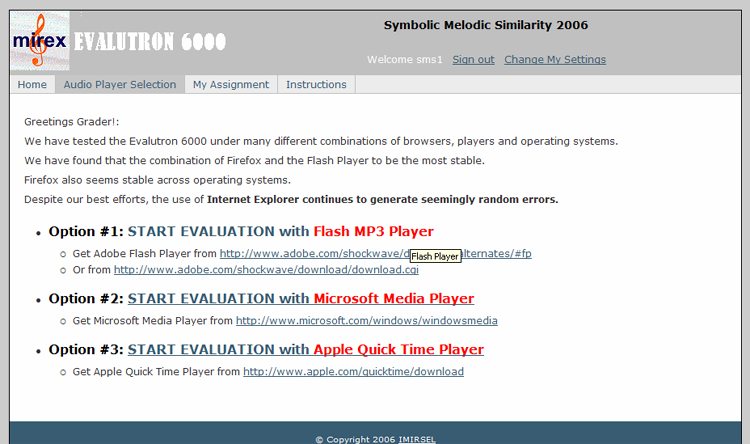

Selecting Your Audio Player

After completing the registration, the system will ask you to sign in with your newly created username and password. After signing in, you can begin the evaluation (Fig. 3). To start the evaluation process, click on the "Start Evaluation" link on the homepage. You will be presented with several options for which media player to use. We have invested considerable effort in coding interfaces compatible with as many browsers, platforms, and players as possible. Most users should be able to use the Flash MP3 Player option, which we have found works best. The Windows Media Player and QuickTime options should also work in most modern browsers. We have noticed that Media Player introduces some start-up lag when a new query set is loaded, which we believe is caused by the way Media Player downloads the MP3 files. We have also found that the combination of Internet Explorer and QuickTime simply does not work.

Figure 3. Audio player selection page.

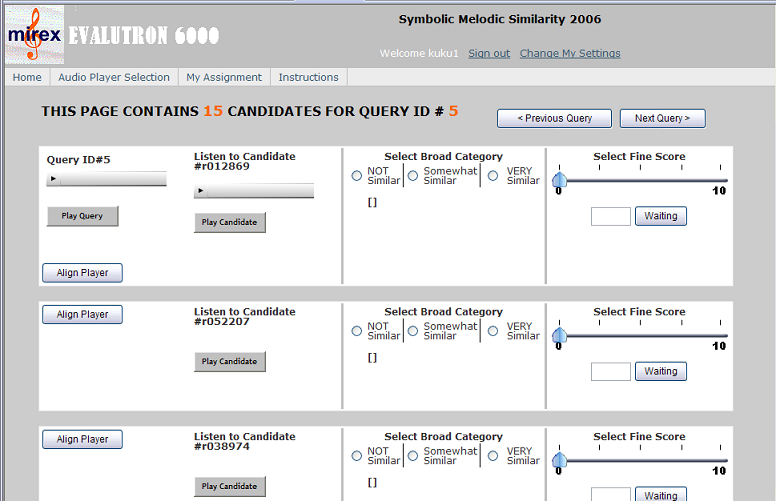

Evaluation Pages: Queries and Candidate Lists

Once you have selected a player configuration, you will see a query evaluation page (Fig. 4). On this page, you will see the query player and the list of candidate players. The queries and candidates drawn from the RISM collection (Queries #1 through #6) are fairly short: most candidates are about 4-5 seconds long. The candidates for the polyphonic task, drawn from the "Mixed" and "Karaoke" collections (Queries #7 through #17), are now more consistently about 30 seconds long. As described in the Special Update on Candidate Files (3 September 2006) above, each of these candidates was padded on both sides of its reported "best match" start point, so the "best match" section is a bit slippery; please listen to the entire candidate before giving your grade.

Figure 4. Sample evaluation page.

Please do not be puzzled if your first query assignment is not Query #1, or if the candidates in your Candidate List appear in a seemingly random order; this is deliberate. The Evalutron 6000 builds a customized, randomized list and ordering for each grader to minimize fatigue and ordering effects. Although you are free to move around the system and do your evaluations in any order you like, we would appreciate it if you followed the ordering suggested by the Evalutron 6000's randomizer. The "Previous Query" and "Next Query" buttons visible in Figure 4 guide you through this randomized Query List ordering.

The list of candidates may extend beyond the visible page. Please scroll to the bottom of the Candidate List to make sure you have evaluated every candidate. You can use the "Align Player" button to move the Query Player closer to any candidate file.

The procedure for listening to a candidate is the same as for listening to a query. Click the "Play Candidate" button to load the clip into the player and listen to it (Fig. 4). Once you have a sense of how similar the candidate is to the query, select the "Not Similar", "Somewhat Similar", or "Very Similar" radio button to the right of the candidate (Fig. 5). Note that you can continue to replay the query during candidate playback.

Figure 5. Close-up image of Broad Category selection buttons.

When you grade a candidate, its box changes color to indicate that the vote has been logged in the database (Fig. 6). The box turns yellow for "Somewhat Similar", green for "Very Similar", and red for "Not Similar", and in each case displays "SAVED".

Figure 6. Close-up image of Broad Category selection buttons with "Somewhat Similar" selected and "SAVED" automatically.

We also need each grader to assign a fine-grained score, on a scale of 0-10, for the similarity of the candidate to the query (Fig. 7). More information about this process is given below.

Figure 7. Close-up image of the Fine Score selection scale.

The system automatically records the score when you release the slider (Fig. 8).

Figure 8. Close-up image of a Fine Score selection scale with a sample score "SAVED".

You can also enter the score manually in the box, but in this case you MUST click the button labeled "Click to Save" to record your score (Fig. 9). After the score is saved, the slider automatically moves to the matching position and the button label changes to "Saved" (Fig. 8).

Figure 9. Close-up image of a Fine Score selection scale before "SAVED".

You can change your Broad Category grade for any candidate at any time by selecting a different radio button. You can also go back and adjust the Fine Score selection scale.

When you have finished evaluating all of the candidates for a query, click the "Next Query" button at the top right of the evaluation page. This loads a new Query and its associated Candidate List for you to evaluate. The "My Assignment" tab lists the queries and candidates assigned to you and shows your progress on your evaluation task (Fig. 10). You must assign BOTH a Broad Category and a Fine Score to a candidate before the system registers it as "completed".

Figure 10. My Assignment page.

A list of all the queries assigned to you also appears at the bottom of each Candidate List page. To return to a query, click its button in this list (Fig. 11). You can re-evaluate any candidate for any query at any time until the evaluation system closes.

Figure 11. Close-up image of Query List buttons, which allow you to revisit completed query/candidate sets.