Difference between revisions of "2009:Audio Music Similarity and Retrieval Results"

(→Team ID) |

|||

| Line 1: | Line 1: | ||

| − | |||

== Introduction == | == Introduction == | ||

| Line 21: | Line 20: | ||

'''SH''' = [[Stephan H├╝bler]]<br /> | '''SH''' = [[Stephan H├╝bler]]<br /> | ||

| − | ==Results== | + | ====Broad Categories==== |

| + | '''NS''' = Not Similar<br /> | ||

| + | '''SS''' = Somewhat Similar<br /> | ||

| + | '''VS''' = Very Similar<br /> | ||

| + | |||

| + | =====Calculating Summary Measures===== | ||

| + | '''Fine'''<sup>(1)</sup> = Sum of fine-grained human similarity decisions (0-10). <br /> | ||

| + | '''PSum'''<sup>(1)</sup> = Sum of human broad similarity decisions: NS=0, SS=1, VS=2. <br /> | ||

| + | '''WCsum'''<sup>(1)</sup> = 'World Cup' scoring: NS=0, SS=1, VS=3 (rewards Very Similar). <br /> | ||

| + | '''SDsum'''<sup>(1)</sup> = 'Stephen Downie' scoring: NS=0, SS=1, VS=4 (strongly rewards Very Similar). <br /> | ||

| + | '''Greater0'''<sup>(1)</sup> = NS=0, SS=1, VS=1 (binary relevance judgement).<br /> | ||

| + | '''Greater1'''<sup>(1)</sup> = NS=0, SS=0, VS=1 (binary relevance judgement using only Very Similar).<br /> | ||

| + | |||

| + | <sup>(1)</sup>Normalized to the range 0 to 1. | ||

| + | |||

| + | ===Overall Summary Results=== | ||

| + | '''NB''': The results for BK2 were interpolated from partial data due to a runtime error. | ||

| + | |||

| + | <csv>ams07_overall_summary2.csv</csv> | ||

| + | |||

| + | |||

| + | |||

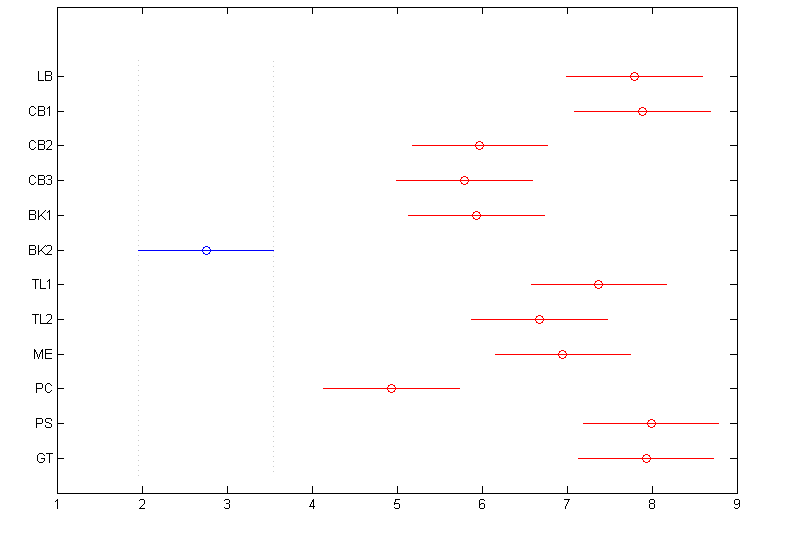

| + | ===Friedman Test with Multiple Comparisons Results (p=0.05)=== | ||

| + | The Friedman test was run in MATLAB against the Fine summary data over the 100 queries.<br /> | ||

| + | Command: [c,m,h,gnames] = multcompare(stats, 'ctype', 'tukey-kramer','estimate', 'friedman', 'alpha', 0.05); | ||

| + | <csv>ams07_sum_friedman_fine.csv</csv> | ||

| + | <csv>ams07_detail_friedman_fine.csv</csv> | ||

| + | |||

| + | [[Image:2007 ams broad scores friedmans.png]] | ||

| + | |||

| + | ===Summary Results by Query=== | ||

| + | These are the mean FINE scores per query assigned by Evalutron graders. The FINE scores for the 5 candidates returned per algorithm, per query, have been averaged. Values are bounded between 0.0 and 10.0. A perfect score would be 10. Genre labels have been included for reference. | ||

| + | |||

| + | <csv>ams07_fine_by_query_with_genre.csv</csv> | ||

| + | |||

| + | These are the mean BROAD scores per query assigned by Evalutron graders. The BROAD scores for the 5 candidates returned per algorithm, per query, have been averaged. Values are bounded between 0 (not similar) and 2 (very similar). A perfect score would be 2. Genre labels have been included for reference. | ||

| + | <csv>ams07_broad_by_query_with_genre.csv</csv> | ||

| + | |||

| + | ===Anonymized Metadata=== | ||

| + | [https://www.music-ir.org/mirex2007/results/anonymizedAudioSim07metaData.csv Anonymized Metadata]<br /> | ||

| − | + | ===Raw Scores=== | |

| + | The raw data derived from the Evalutron 6000 human evaluations are located on the [[Audio Music Similarity and Retrieval Raw Data]] page. | ||

Revision as of 13:58, 14 October 2009


Introduction

General Legend

Team ID

ANO = Anonymous

BF = Benjamin Fields

BSWH = Dmitry Bogdanov, Joan Serrà, Nicolas Wack, and Perfecto Herrera

CL1 = Chuan Cao, Ming Li

CL2 = Chuan Cao, Ming Li

GT = George Tzanetakis

LR = Thomas Lidy, Andreas Rauber

ME = François Maillet, Douglas Eck

PS1 = Tim Pohle, Dominik Schnitzer

PS2 = Tim Pohle, Dominik Schnitzer

SH = Stephan Hübler

Broad Categories

NS = Not Similar

SS = Somewhat Similar

VS = Very Similar

Calculating Summary Measures

Fine(1) = Sum of fine-grained human similarity decisions (0-10).

PSum(1) = Sum of human broad similarity decisions: NS=0, SS=1, VS=2.

WCsum(1) = 'World Cup' scoring: NS=0, SS=1, VS=3 (rewards Very Similar).

SDsum(1) = 'Stephen Downie' scoring: NS=0, SS=1, VS=4 (strongly rewards Very Similar).

Greater0(1) = NS=0, SS=1, VS=1 (binary relevance judgement).

Greater1(1) = NS=0, SS=0, VS=1 (binary relevance judgement using only Very Similar).

(1) Normalized to the range 0 to 1.
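The weightings in the legend above can be sketched in Python as follows. This is a minimal illustration, not the code used for the evaluation: the function name `summary_measures`, the constant names, and the sample judgements are hypothetical, and the normalization (dividing each sum by the largest sum attainable for that measure) is an assumption, since the page only states that the measures are normalized to the range 0 to 1.

```python
# Weight of each broad category under each summary measure (taken from the legend).
BROAD_WEIGHTS = {
    "PSum":     {"NS": 0, "SS": 1, "VS": 2},
    "WCsum":    {"NS": 0, "SS": 1, "VS": 3},
    "SDsum":    {"NS": 0, "SS": 1, "VS": 4},
    "Greater0": {"NS": 0, "SS": 1, "VS": 1},
    "Greater1": {"NS": 0, "SS": 0, "VS": 1},
}
FINE_MAX = 10.0  # upper end of the fine-grained scale (0-10)


def summary_measures(broad, fine):
    """Return the six summary measures for parallel lists of judgements.

    broad: list of "NS" / "SS" / "VS" labels, one per judged candidate.
    fine:  list of fine-grained scores in [0, 10], one per judged candidate.

    Assumption: each sum is normalized by the maximum attainable sum.
    """
    if not broad or len(broad) != len(fine):
        raise ValueError("broad and fine must be non-empty and of equal length")
    n = len(broad)
    scores = {"Fine": sum(fine) / (FINE_MAX * n)}
    for name, weights in BROAD_WEIGHTS.items():
        total = sum(weights[label] for label in broad)
        scores[name] = total / (max(weights.values()) * n)
    return scores


# Hypothetical judgements for five candidates returned for one query.
example = summary_measures(
    ["VS", "SS", "NS", "SS", "VS"],
    [9.0, 5.5, 1.0, 4.5, 8.0],
)
```

Under these assumptions the sample yields Fine = 0.56, PSum = 0.6, SDsum = 0.5, Greater0 = 0.8 and Greater1 = 0.4. WCsum and SDsum differ from PSum only in how heavily a Very Similar judgement is weighted.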

Overall Summary Results

NB: The results for BK2 were interpolated from partial data due to a runtime error.

[Table not available: ams07_overall_summary2.csv]

Friedman Test with Multiple Comparisons Results (p=0.05)

The Friedman test was run in MATLAB against the Fine summary data over the 100 queries.

Command: [c,m,h,gnames] = multcompare(stats, 'ctype', 'tukey-kramer','estimate', 'friedman', 'alpha', 0.05);

[Table not available: ams07_sum_friedman_fine.csv]

[Table not available: ams07_detail_friedman_fine.csv]
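For readers without MATLAB, the ranking step behind the Friedman test can be sketched in plain Python. This is only an illustration under stated assumptions: the function names and the sample scores are hypothetical, the statistic omits the correction for ties, and the Tukey-Kramer multiple comparison performed by `multcompare` is not reproduced. Each row holds the scores of all algorithms on one query; algorithms are ranked within each query and the rank sums are compared.

```python
def average_ranks(values):
    """Rank values ascending (1 = smallest); tied values share their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j to cover the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2 + 1  # mean of the 1-based positions i..j
        for pos in range(i, j + 1):
            ranks[order[pos]] = shared
        i = j + 1
    return ranks


def friedman_statistic(scores):
    """scores[q][a] is the score of algorithm a on query q.

    Returns (chi-square statistic without tie correction, mean rank per algorithm).
    """
    n = len(scores)        # number of queries (blocks)
    k = len(scores[0])     # number of algorithms (treatments)
    rank_sums = [0.0] * k
    for row in scores:
        for a, rank in enumerate(average_ranks(row)):
            rank_sums[a] += rank
    stat = 12.0 / (n * k * (k + 1)) * sum(r * r for r in rank_sums) - 3.0 * n * (k + 1)
    return stat, [r / n for r in rank_sums]


# Hypothetical Fine scores: 4 queries (rows) x 3 algorithms (columns).
sample = [
    [0.6, 0.4, 0.2],
    [0.7, 0.5, 0.3],
    [0.8, 0.3, 0.5],
    [0.9, 0.6, 0.1],
]
stat, mean_ranks = friedman_statistic(sample)
```

For the sample data the statistic is 6.5 and the mean ranks are 3.0, 1.75 and 1.25. The statistic is compared against a chi-square distribution with k - 1 degrees of freedom; the pairwise comparisons then determine which algorithms differ significantly in mean rank.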

Summary Results by Query

These are the mean FINE scores per query assigned by Evalutron graders. The FINE scores for the 5 candidates returned per algorithm, per query, have been averaged. Values are bounded between 0.0 and 10.0. A perfect score would be 10. Genre labels have been included for reference.

[Table not available: ams07_fine_by_query_with_genre.csv]

These are the mean BROAD scores per query assigned by Evalutron graders. The BROAD scores for the 5 candidates returned per algorithm, per query, have been averaged. Values are bounded between 0 (not similar) and 2 (very similar). A perfect score would be 2. Genre labels have been included for reference.

[Table not available: ams07_broad_by_query_with_genre.csv]
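The per-query averaging described in this section can be sketched as below. The record layout (query, algorithm, broad label, fine score), the function name, and the sample values are assumptions made for illustration; the actual raw data format is not shown on this page.

```python
from collections import defaultdict

# Numeric value of each broad category on the 0-2 scale described above.
BROAD_VALUE = {"NS": 0, "SS": 1, "VS": 2}


def mean_scores_by_query(judgements):
    """Average FINE and BROAD scores over the candidates of each (query, algorithm).

    judgements: iterable of (query_id, algorithm, broad_label, fine_score).
    Returns {(query_id, algorithm): (mean_fine, mean_broad)}.
    """
    fine = defaultdict(list)
    broad = defaultdict(list)
    for query_id, algorithm, broad_label, fine_score in judgements:
        key = (query_id, algorithm)
        fine[key].append(fine_score)
        broad[key].append(BROAD_VALUE[broad_label])
    return {
        key: (sum(fine[key]) / len(fine[key]), sum(broad[key]) / len(broad[key]))
        for key in fine
    }


# Hypothetical judgements: five candidates returned by algorithm "GT" for query "q001".
rows = [
    ("q001", "GT", "VS", 9.0),
    ("q001", "GT", "SS", 5.5),
    ("q001", "GT", "NS", 1.0),
    ("q001", "GT", "SS", 4.5),
    ("q001", "GT", "VS", 8.0),
]
means = mean_scores_by_query(rows)
```

With these sample rows the mean FINE score is 5.6 out of 10 and the mean BROAD score is 1.2 out of 2.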

Anonymized Metadata

https://www.music-ir.org/mirex2007/results/anonymizedAudioSim07metaData.csv

Raw Scores

The raw data derived from the Evalutron 6000 human evaluations are located on the Audio Music Similarity and Retrieval Raw Data page.