2018:Patterns for Prediction Results


Revision as of 08:13, 18 September 2018


Introduction

THIS PAGE IS UNDER CONSTRUCTION!

The task: ...

Contribution

...

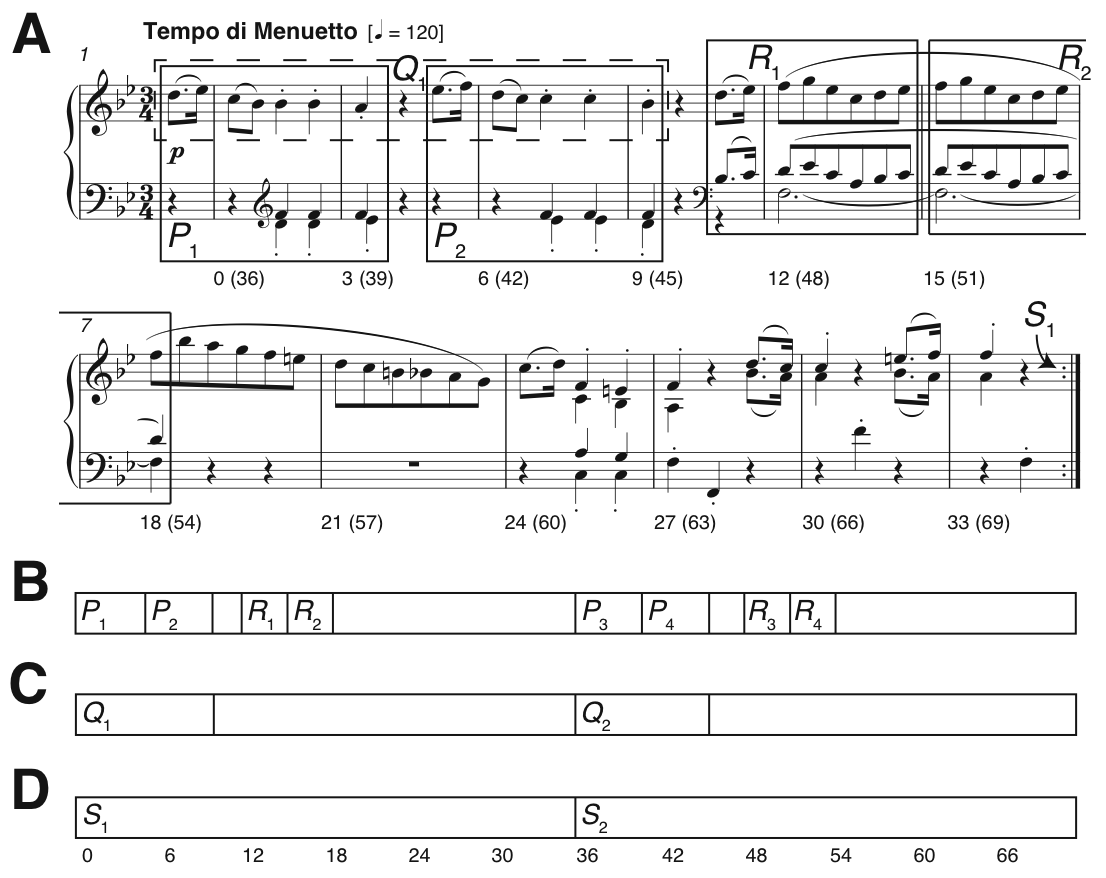

Figure 1. Pattern discovery vs. segmentation. (A) Bars 1-12 of Mozart's Piano Sonata in E-flat major, K282, mvt. 2, showing some ground-truth themes and repeated sections; (B-D) three linear segmentations. Numbers below the staff in Fig. 1A and below the segmentation in Fig. 1D indicate crotchet beats, counting from zero at bar 1, beat 1.

For a more detailed introduction to the task, please see 2018:Patterns for Prediction.

Training and Test Datasets

...

| Sub code | Submission name | Abstract | Contributors |
|---|---|---|---|
| Task Version | symMono | | |
| EN1 | Algo name here | | Eric Nichols |
| FC1 | Algo name here | | Florian Colombo |
| MM | Markov model | N/A | Intended as 'baseline' |
| Task Version | symPoly | | |
| FC1 | Algo name here | | Florian Colombo |
| MM | Markov model | N/A | Intended as 'baseline' |

Table 1. Algorithms submitted to Patterns for Prediction 2018.

Results

An intro spiel here...

(For mathematical definitions of the various metrics, please see 2018:Patterns_for_Prediction#Evaluation_Procedure.)

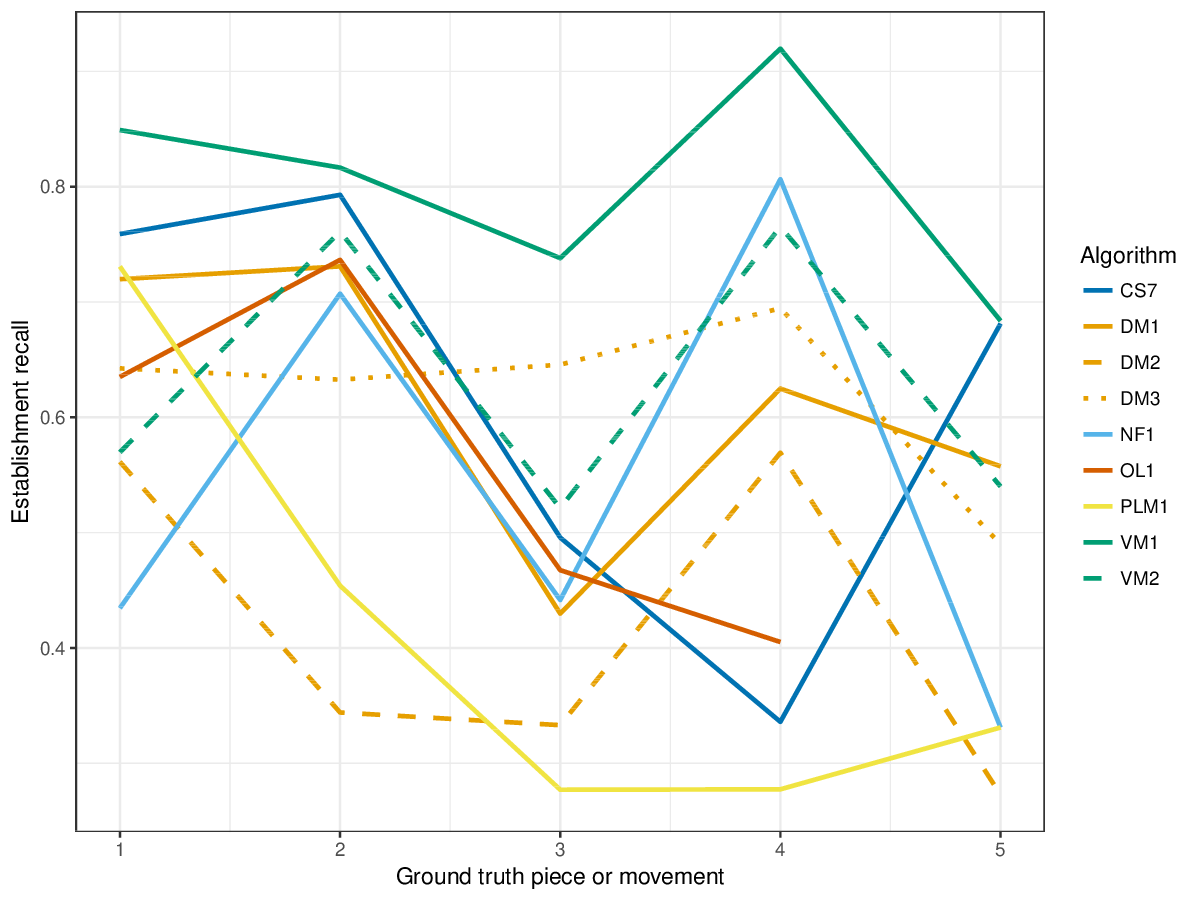

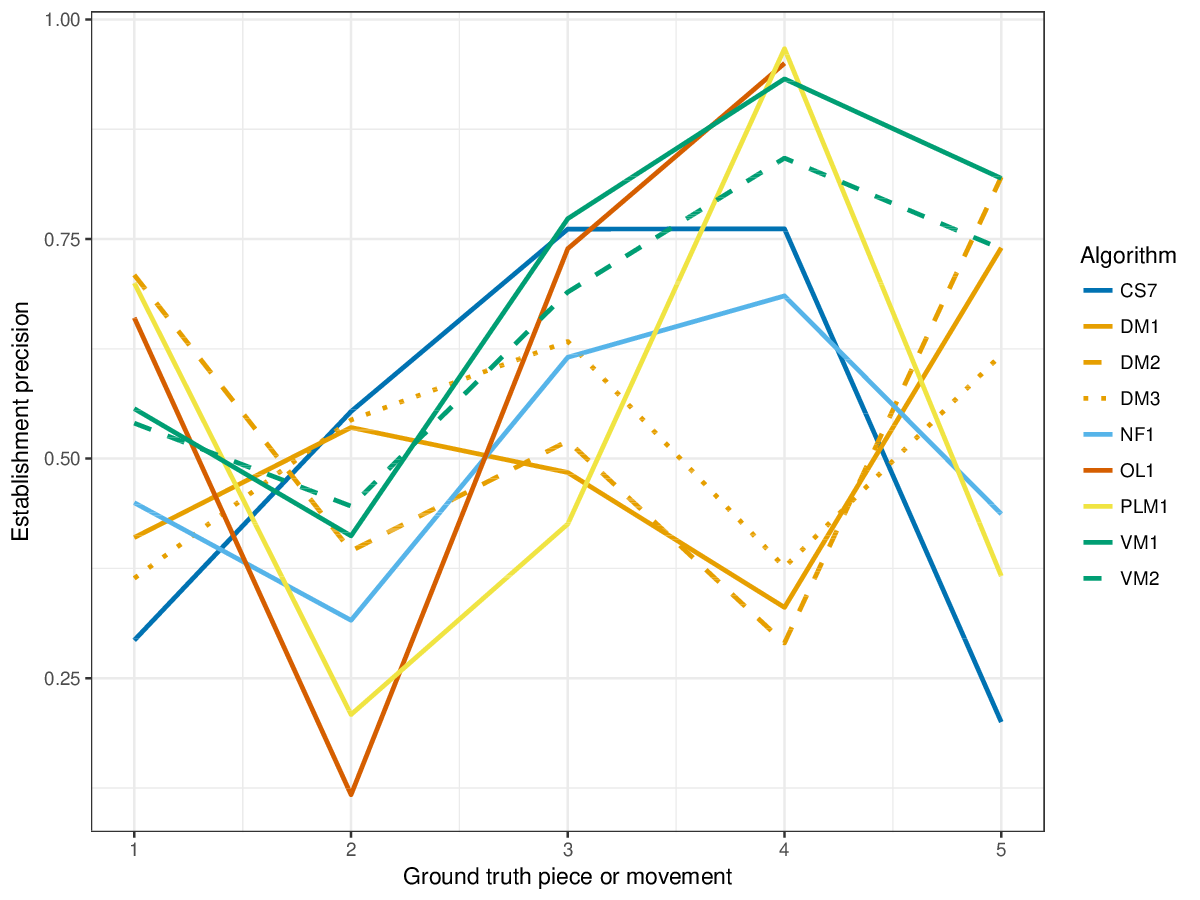

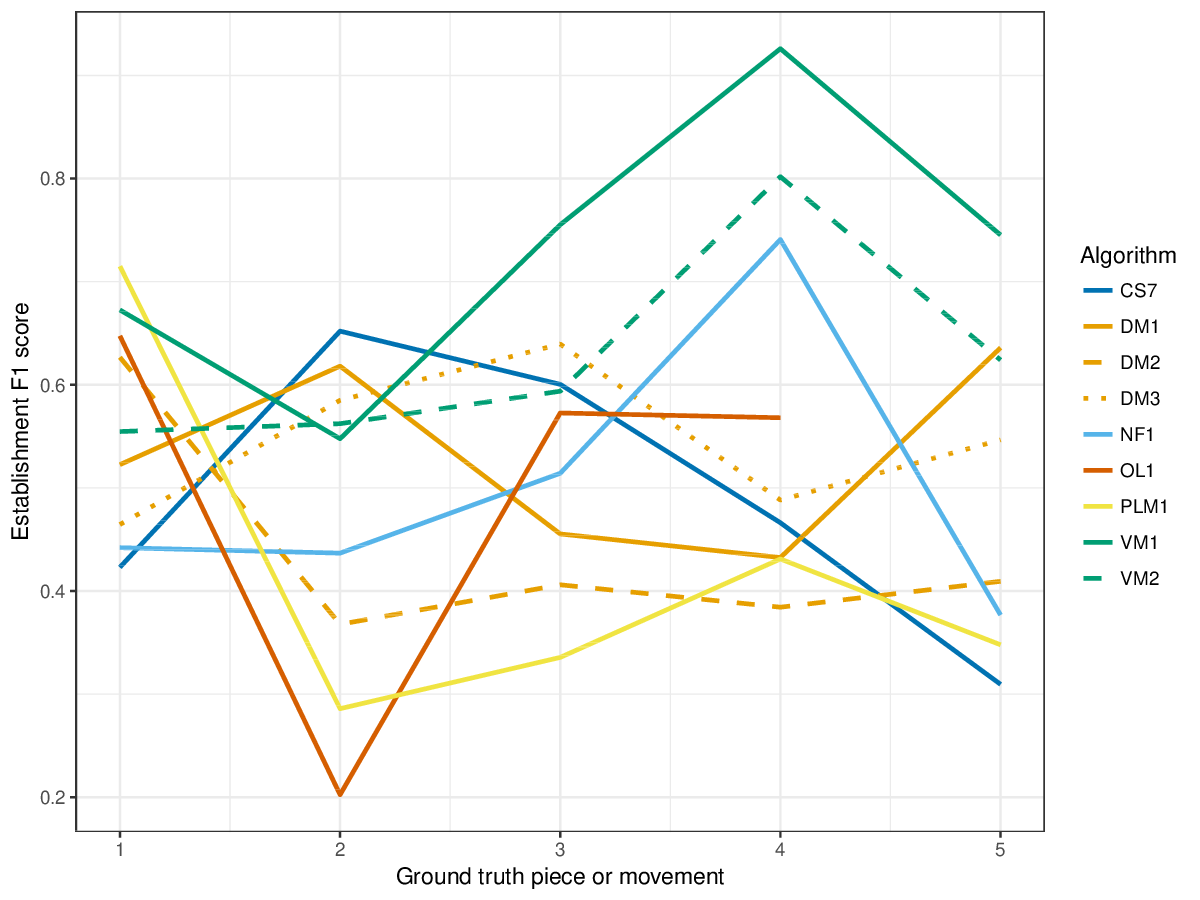

SymMono

Here are some results (cf. Figures 1-3), and some interpretation. Don't forget these as well (Figures 4-6), showing something.

Remarks on runtime appropriate here too.

SymPoly

And so on.

Discussion

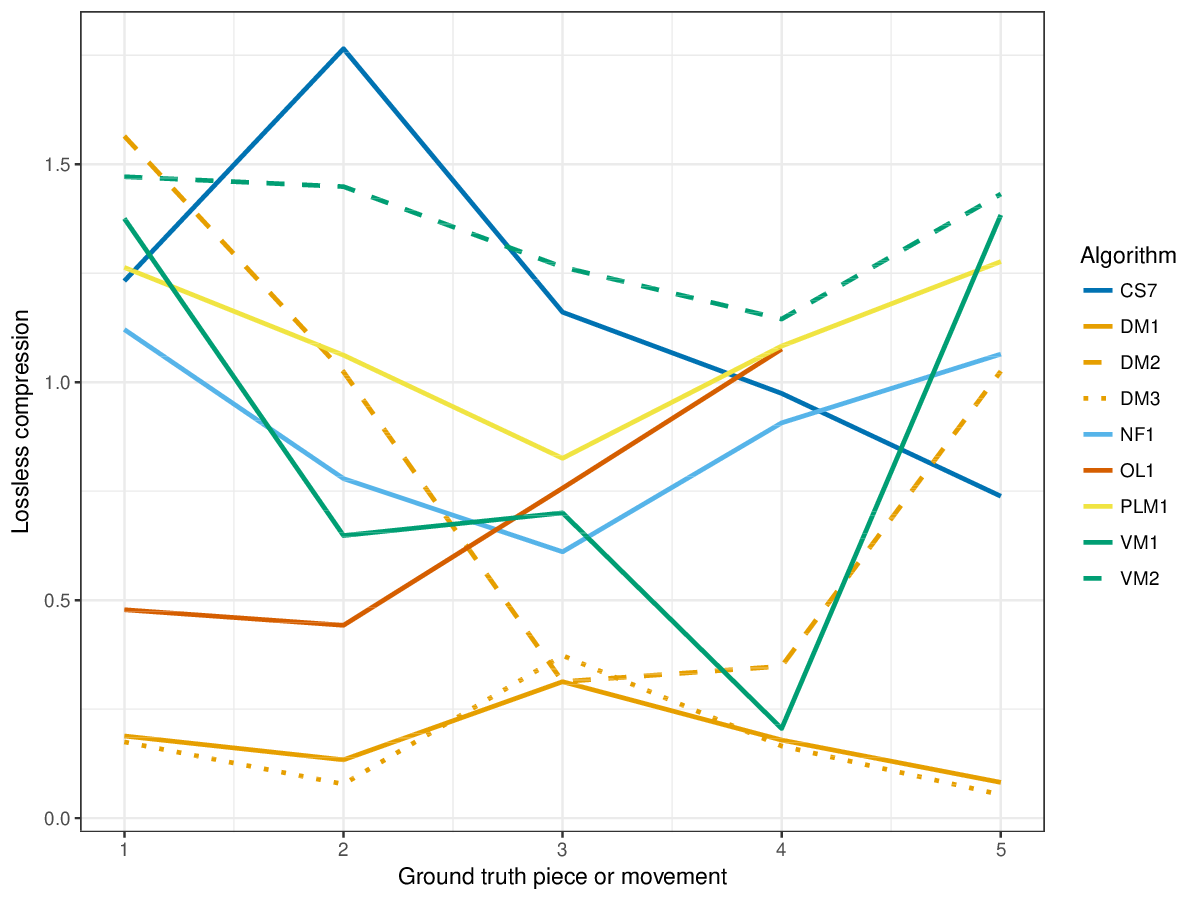

The new compression evaluation measures are not highly correlated with the metrics that measure retrieval of annotated patterns. This may be because lossless compression is lower for algorithms that find overlapping patterns: human annotators, and some pattern discovery algorithms, may identify valid overlapping patterns, since patterns can be hierarchically layered (e.g., motifs that form part of themes). We will add new, prediction-based measures and new ground-truth pieces to the task next year.

Berit Janssen, Iris Ren, Tom Collins, Anja Volk.

Figures

SymMono

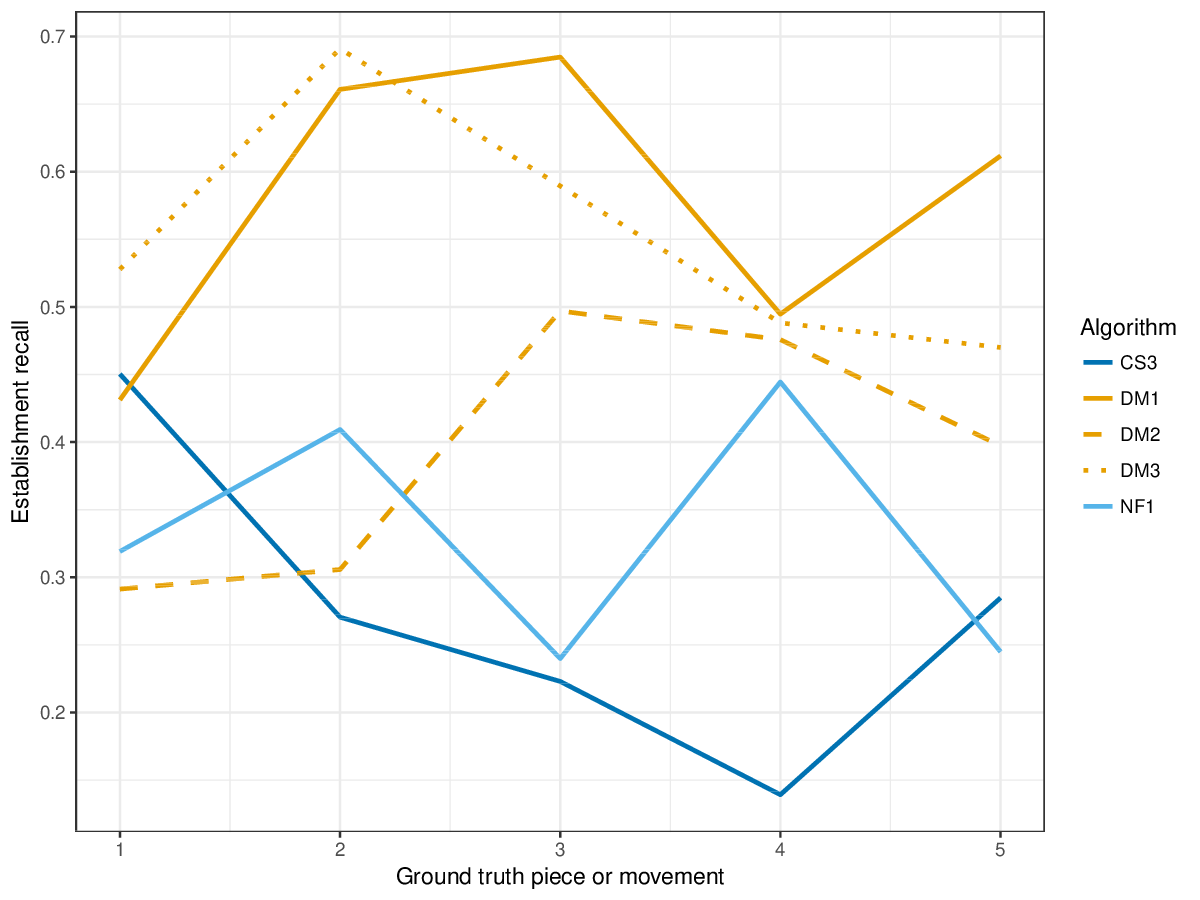

Figure 1. Establishment recall averaged over each piece/movement. Establishment recall answers the following question: on average, how similar is the most similar algorithm-output pattern to a ground-truth pattern prototype?

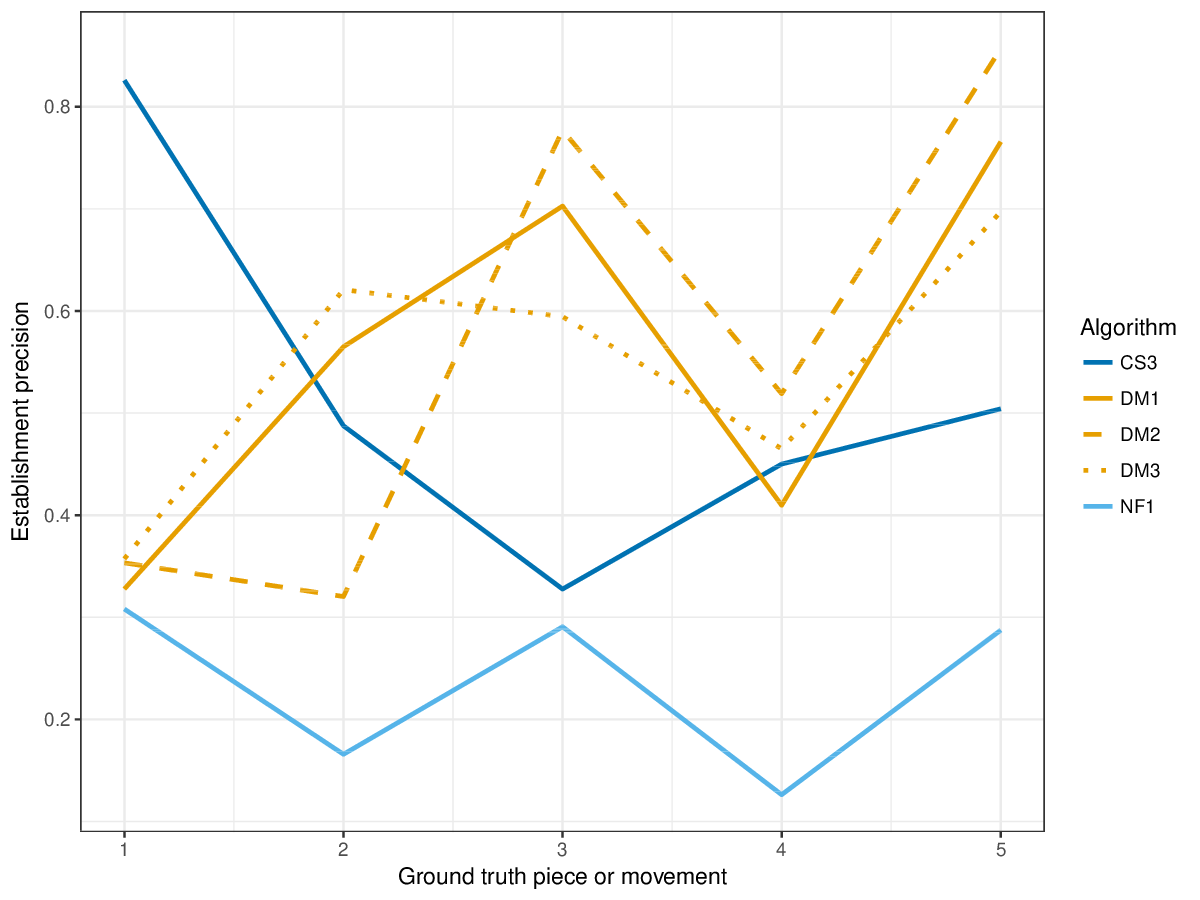

Figure 2. Establishment precision averaged over each piece/movement. Establishment precision answers the following question: on average, how similar is the most similar ground-truth pattern prototype to an algorithm-output pattern?

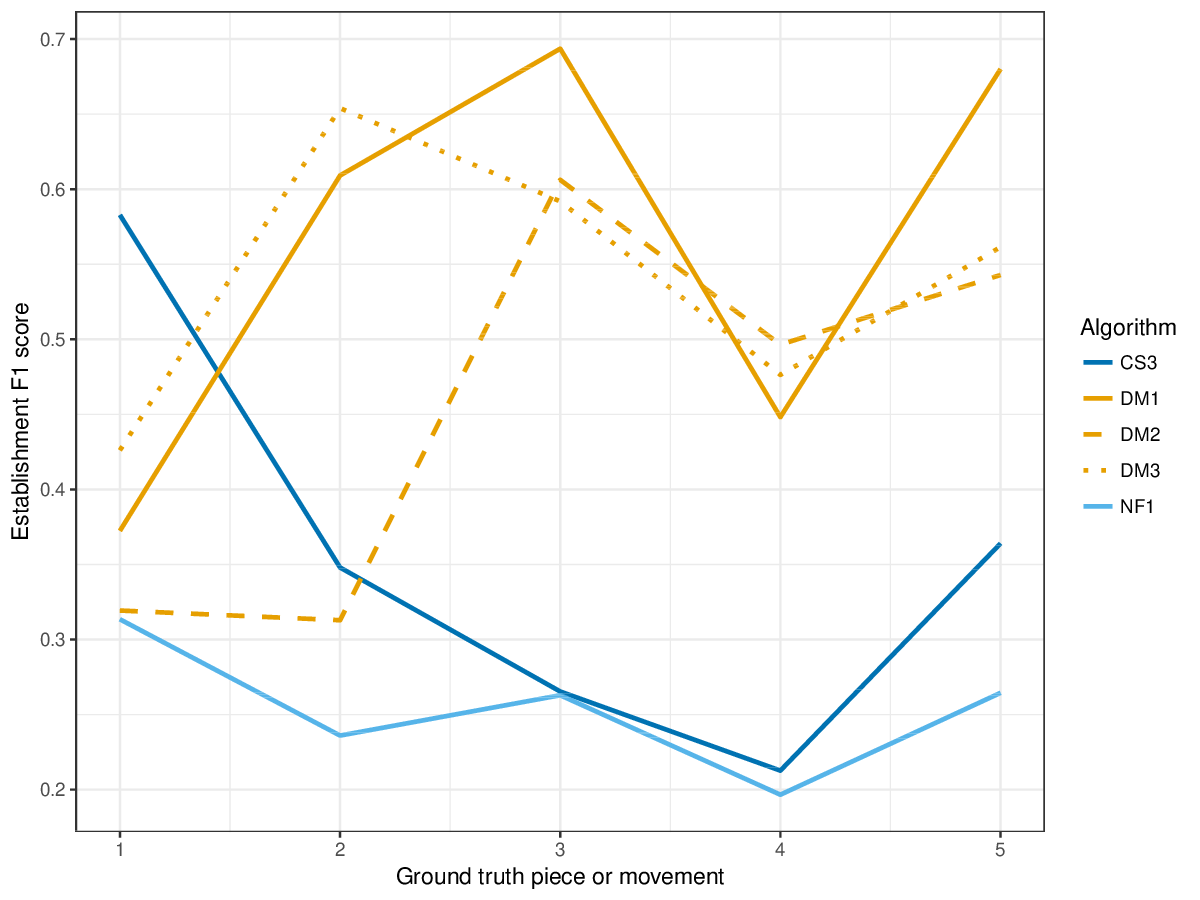

Figure 3. Establishment F1 averaged over each piece/movement. Establishment F1 is the harmonic mean of establishment precision and establishment recall.
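The three establishment captions can be read as a best-match average over a score matrix. The sketch below follows the captions literally under simplifying assumptions: a note is an `(onset, MIDI pitch)` tuple, each pattern is represented by the note set of a single prototype occurrence, and similarity is the caption's $|P \cap Q|/\max\{|P|, |Q|\}$ with no transposition. The function names are hypothetical, and the official definitions on the 2018:Patterns for Prediction page may differ in detail (for example in how occurrences are matched).

```python
from typing import FrozenSet, List, Tuple

# Assumed representation: a note is (onset in crotchet beats, MIDI note number),
# and a pattern is represented by the note set of one occurrence (its prototype).
Note = Tuple[float, int]
Pattern = FrozenSet[Note]


def max_norm_similarity(p: Pattern, q: Pattern) -> float:
    """|P ∩ Q| / max(|P|, |Q|), the similarity quoted in the figure captions."""
    if not p or not q:
        return 0.0
    return len(p & q) / max(len(p), len(q))


def harmonic_mean(a: float, b: float) -> float:
    return 0.0 if a + b == 0 else 2 * a * b / (a + b)


def establishment_scores(
    ground_truth: List[Pattern], output: List[Pattern]
) -> Tuple[float, float, float]:
    """Return (establishment recall, precision, F1) for one piece (simplified sketch)."""
    if not ground_truth or not output:
        return 0.0, 0.0, 0.0
    # Rows: ground-truth prototypes; columns: algorithm-output patterns.
    scores = [[max_norm_similarity(g, o) for o in output] for g in ground_truth]
    # Recall: best-matching output pattern for each ground-truth prototype.
    recall = sum(max(row) for row in scores) / len(ground_truth)
    # Precision: best-matching ground-truth prototype for each output pattern.
    precision = sum(max(col) for col in zip(*scores)) / len(output)
    return recall, precision, harmonic_mean(recall, precision)


theme = frozenset({(0, 60), (1, 62), (2, 64), (3, 65)})
half_theme = frozenset({(0, 60), (1, 62)})
unrelated = frozenset({(10, 70)})
print(establishment_scores([theme], [half_theme, unrelated]))
```

Here the half-theme matches the ground truth with similarity 2/4, so recall is 0.5; the unrelated pattern drags precision down to 0.25, and F1 is about 0.33. Per-piece values like these are what the figures then average.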

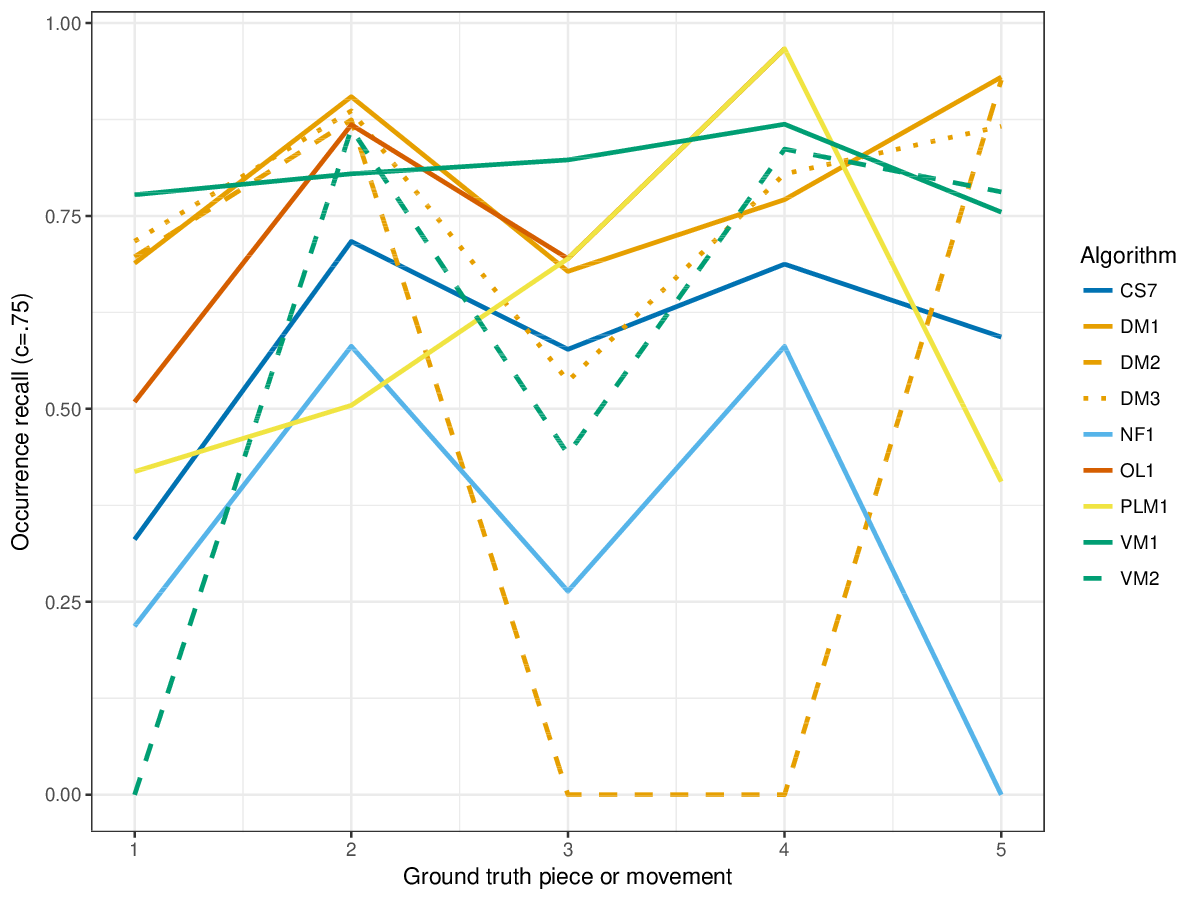

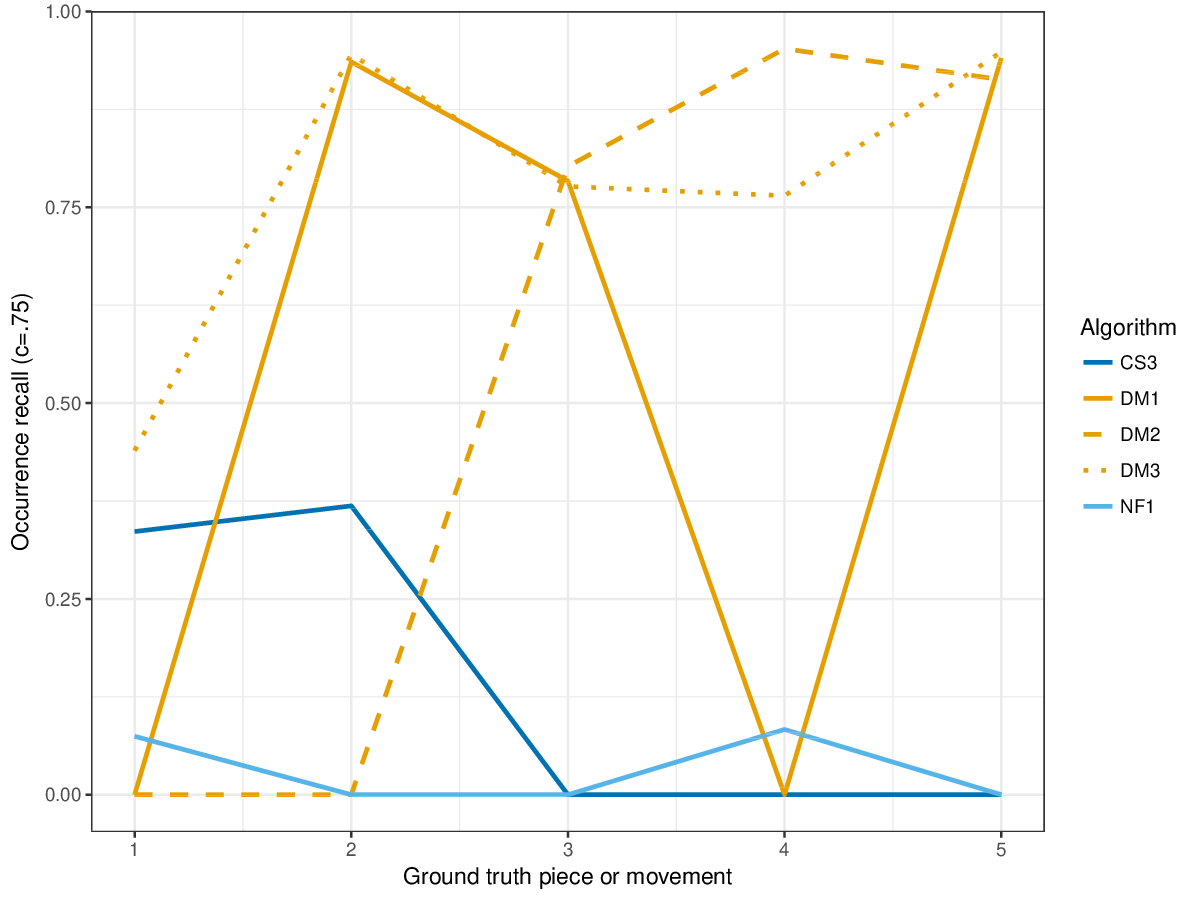

Figure 4. Occurrence recall ($c = .75$) averaged over each piece/movement. Occurrence recall answers the following question: on average, how similar is the most similar set of algorithm-output pattern occurrences to a discovered ground-truth occurrence set?

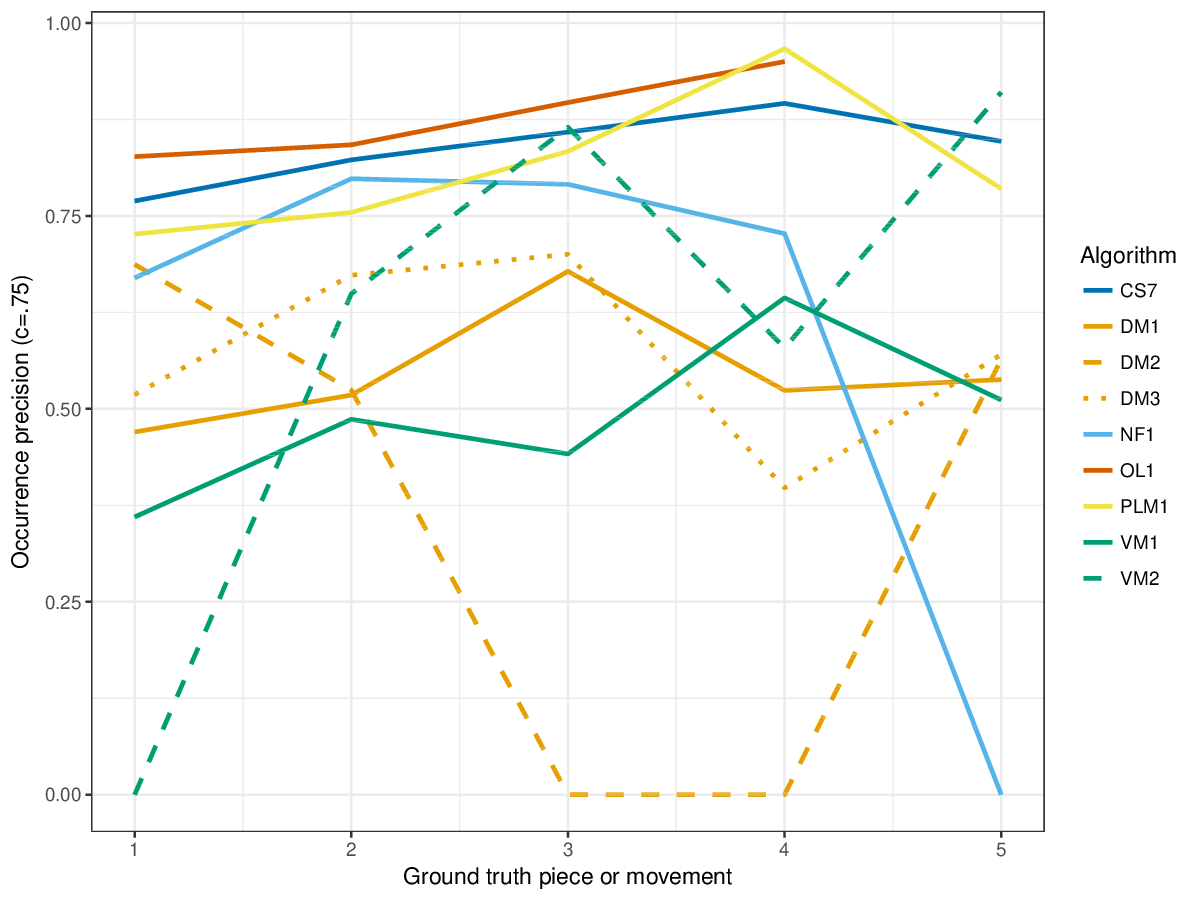

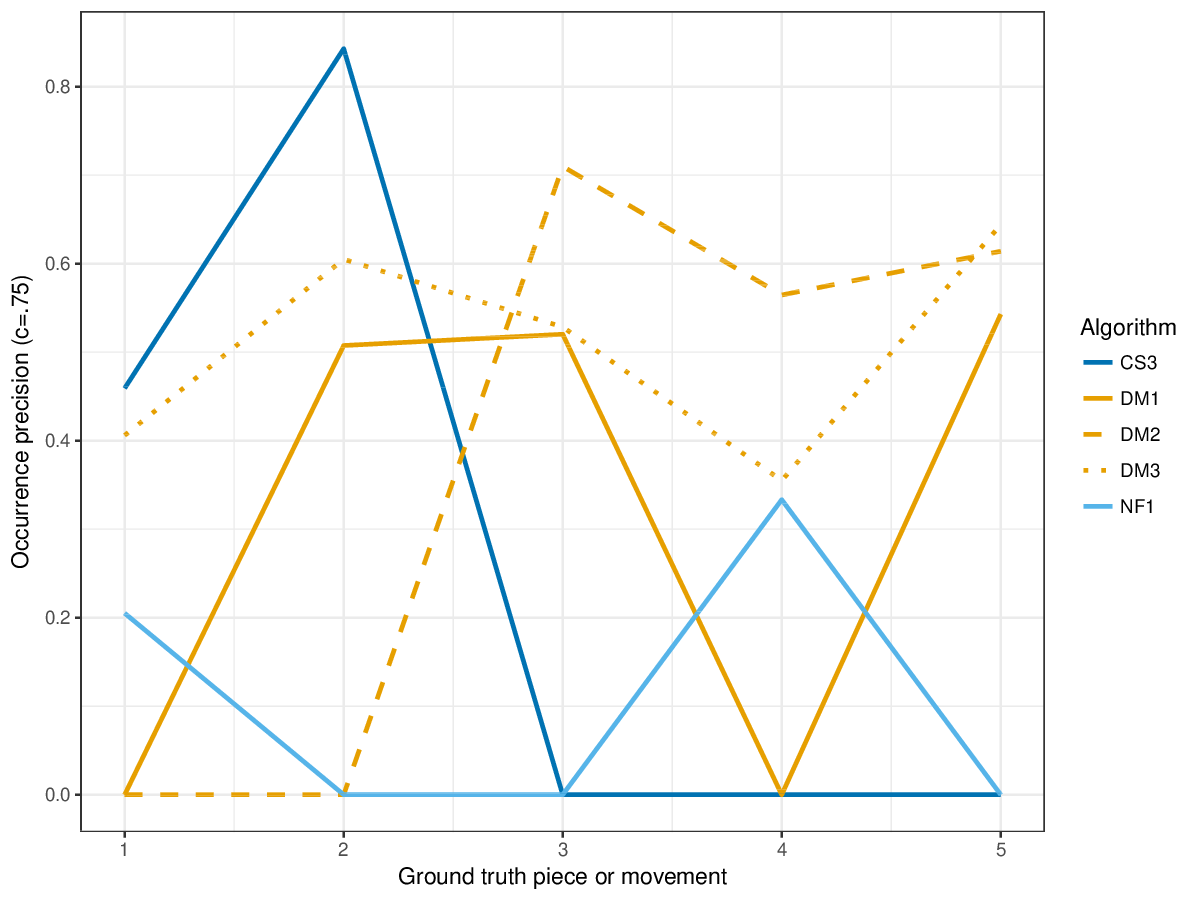

Figure 5. Occurrence precision ($c = .75$) averaged over each piece/movement. Occurrence precision answers the following question: on average, how similar is the most similar discovered ground-truth occurrence set to a set of algorithm-output pattern occurrences?

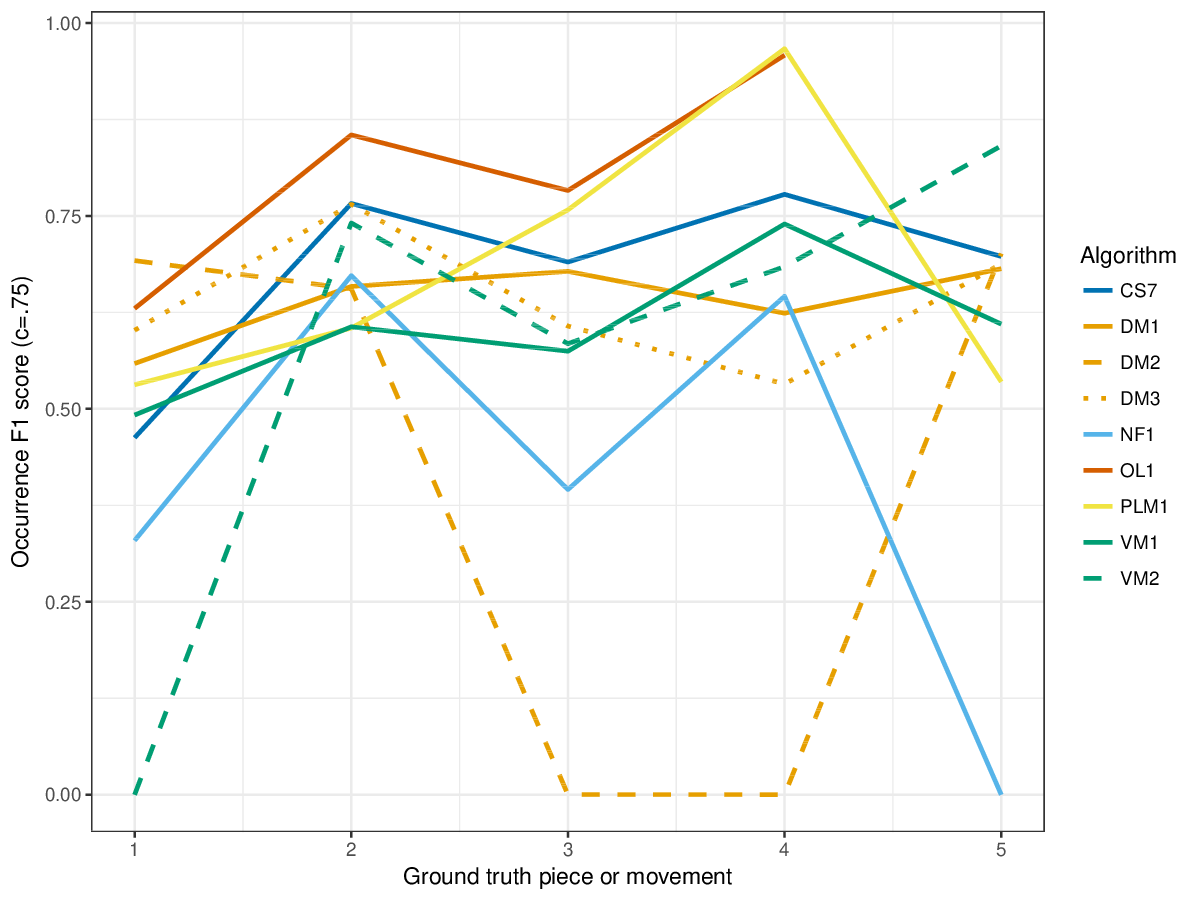

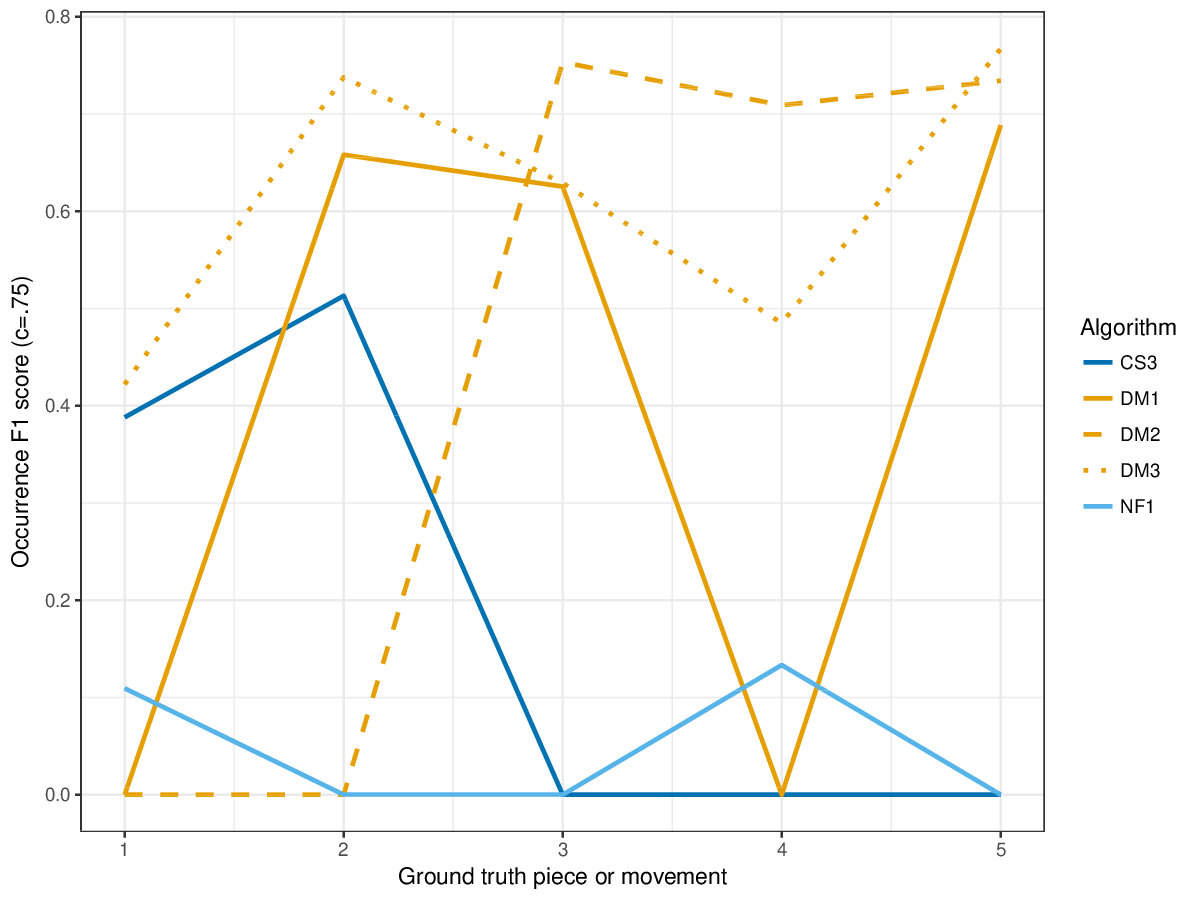

Figure 6. Occurrence F1 ($c = .75$) averaged over each piece/movement. Occurrence F1 is the harmonic mean of occurrence precision and occurrence recall.
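The occurrence measures only look at ground-truth patterns that count as "discovered" at threshold $c = .75$. The sketch below illustrates that gating under stated assumptions: a pattern is a list of occurrence note sets, a ground-truth pattern counts as discovered by an output pattern when some pair of their occurrences reaches similarity $c$, and per-pair scores are simply averaged. These choices, and all function names, are hypothetical simplifications for illustration; the official aggregation is defined on the 2018:Patterns for Prediction page.

```python
from typing import FrozenSet, List, Tuple

# Assumed representation: a note is (onset, MIDI pitch); a pattern is the list of
# note sets of all its occurrences.
Note = Tuple[float, int]
Occurrence = FrozenSet[Note]
Pattern = List[Occurrence]


def max_norm_similarity(p: Occurrence, q: Occurrence) -> float:
    if not p or not q:
        return 0.0
    return len(p & q) / max(len(p), len(q))


def best_match_mean(sources: Pattern, targets: Pattern) -> float:
    """Mean over `sources` of each occurrence's best similarity to any occurrence in `targets`."""
    return sum(
        max(max_norm_similarity(s, t) for t in targets) for s in sources
    ) / len(sources)


def occurrence_scores(
    ground_truth: List[Pattern], output: List[Pattern], c: float = 0.75
) -> Tuple[float, float, float]:
    """Return (occurrence recall, precision, F1); simplified sketch, not the official definition."""
    recalls, precisions = [], []
    for gt in ground_truth:
        for out in output:
            # Assumed "discovered" test: some pair of occurrences is at least c similar.
            if max(max_norm_similarity(g, o) for g in gt for o in out) < c:
                continue
            recalls.append(best_match_mean(gt, out))     # are the true occurrences found?
            precisions.append(best_match_mean(out, gt))  # are the output occurrences genuine?
    if not recalls:
        return 0.0, 0.0, 0.0
    recall = sum(recalls) / len(recalls)
    precision = sum(precisions) / len(precisions)
    f1 = 0.0 if recall + precision == 0 else 2 * recall * precision / (recall + precision)
    return recall, precision, f1


def shift(occ: Occurrence, beats: float) -> Occurrence:
    return frozenset((t + beats, p) for t, p in occ)


theme = frozenset({(0, 60), (1, 62), (2, 64), (3, 65)})
ground_truth = [[theme, shift(theme, 4)]]
output = [[theme, shift(theme, 12), shift(theme, 20)], [frozenset({(0, 60)})]]
print(occurrence_scores(ground_truth, output))
```

In this example the single-note output pattern reaches only 0.25 similarity, below $c$, so it is ignored. The theme is discovered: one of its two occurrences is found (recall 0.5), one of the three output occurrences is genuine (precision about 0.33), giving F1 of 0.4.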

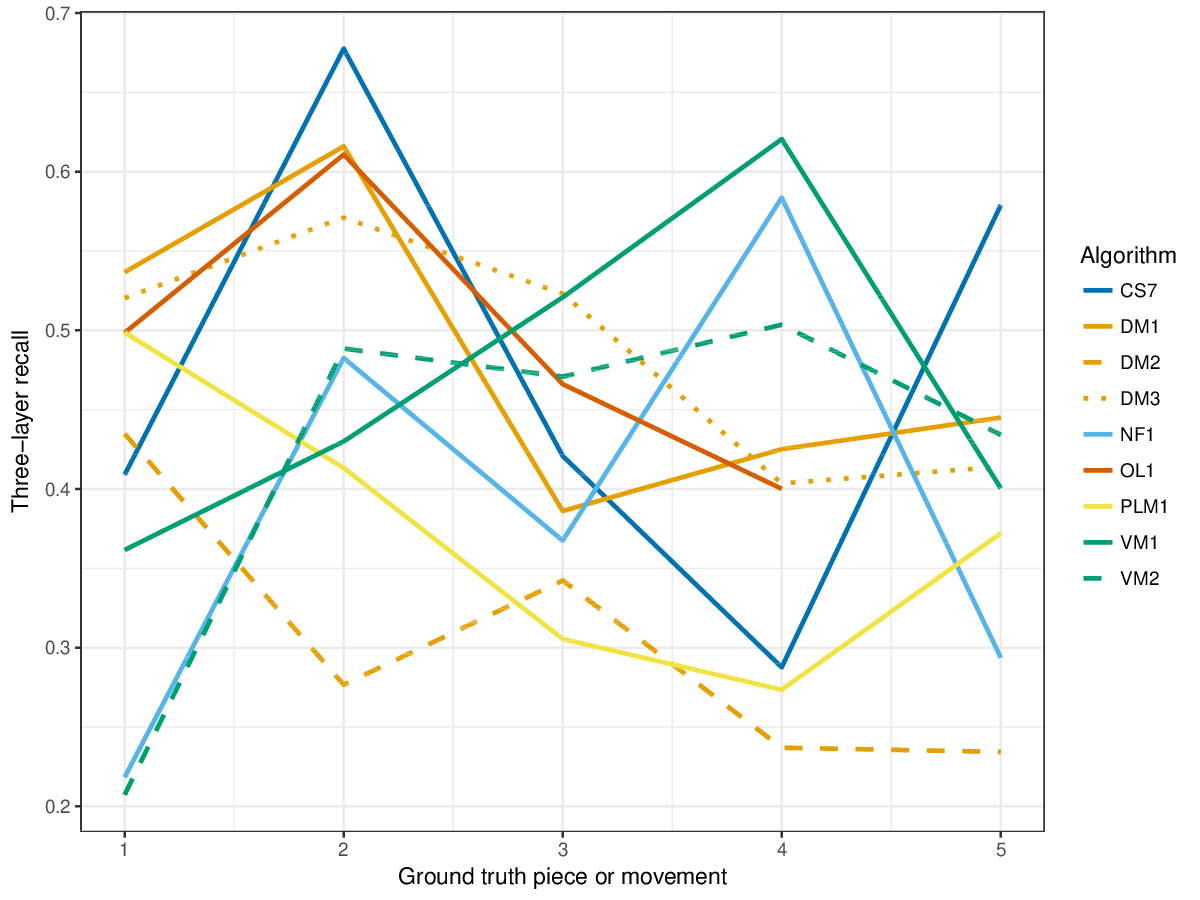

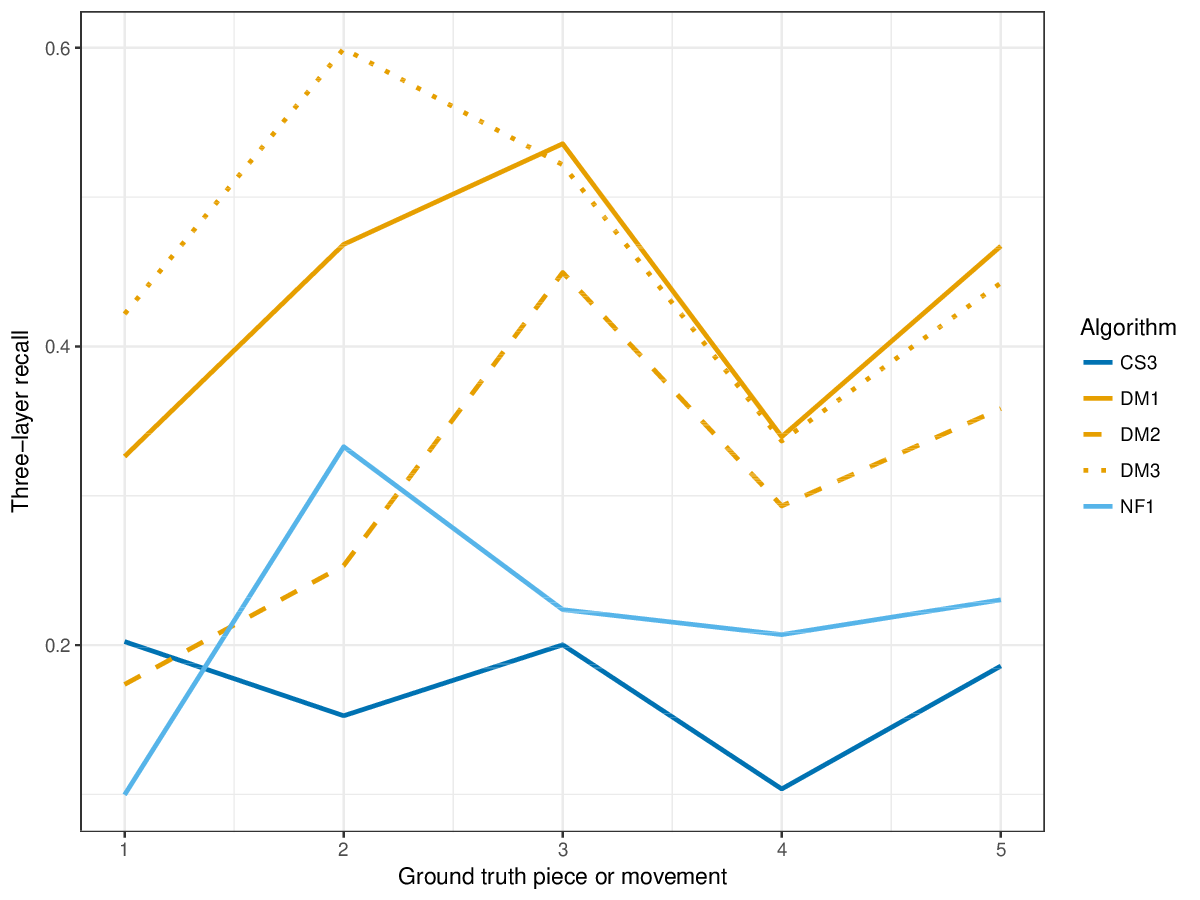

Figure 7. Three-layer recall averaged over each piece/movement. Rather than using $|P \cap Q|/\max\{|P|, |Q|\}$ as a similarity measure (the default for establishment recall), three-layer recall uses $2|P \cap Q|/(|P| + |Q|)$, which is a kind of F1 measure.

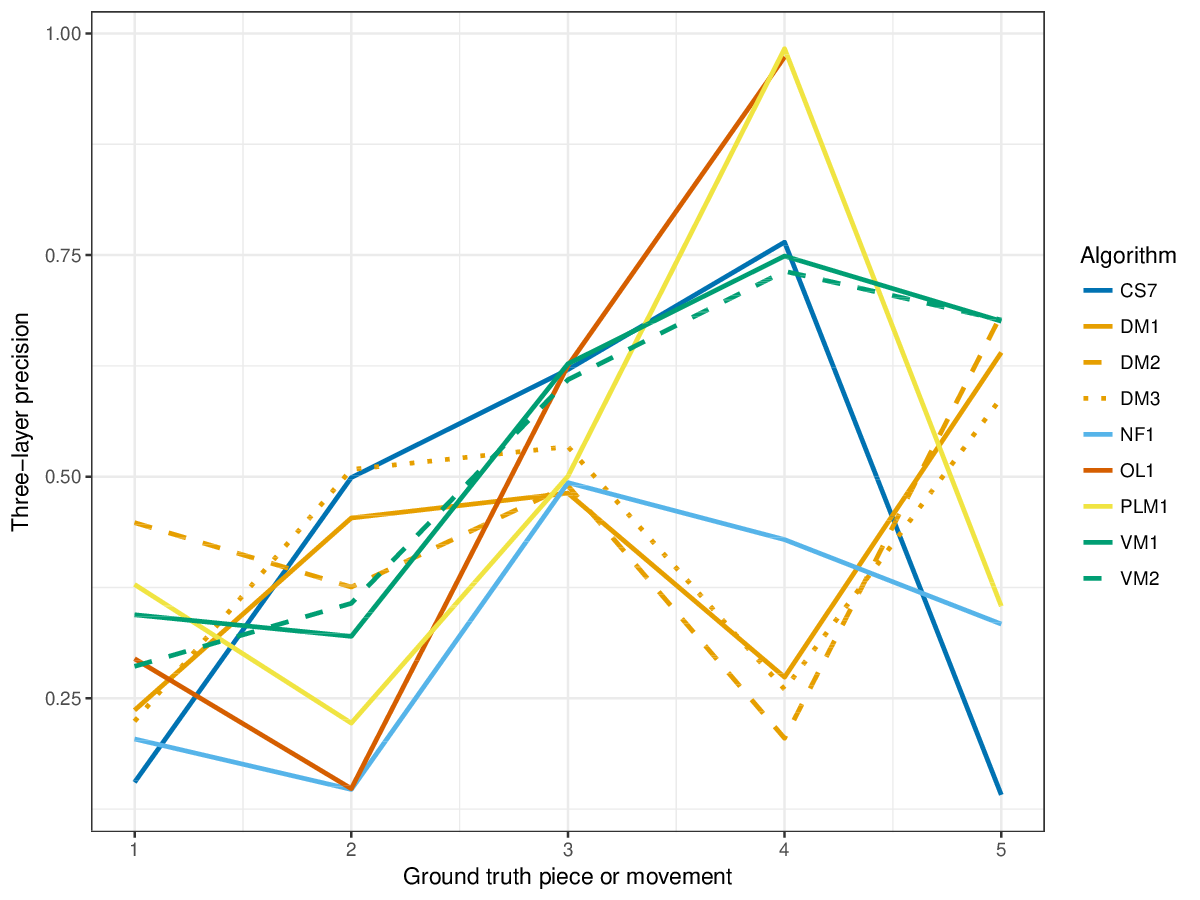

Figure 8. Three-layer precision averaged over each piece/movement. Rather than using $|P \cap Q|/\max\{|P|, |Q|\}$ as a similarity measure (the default for establishment precision), three-layer precision uses $2|P \cap Q|/(|P| + |Q|)$, which is a kind of F1 measure.

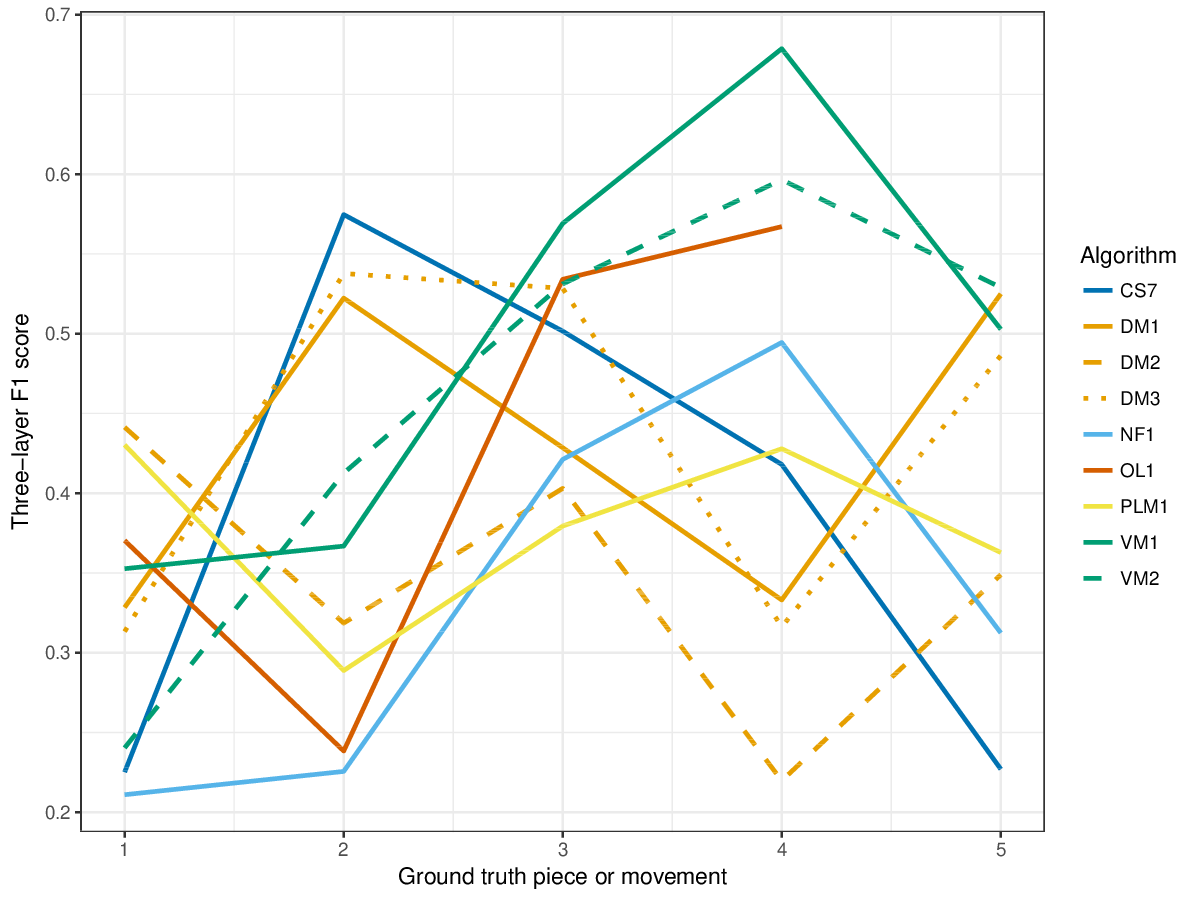

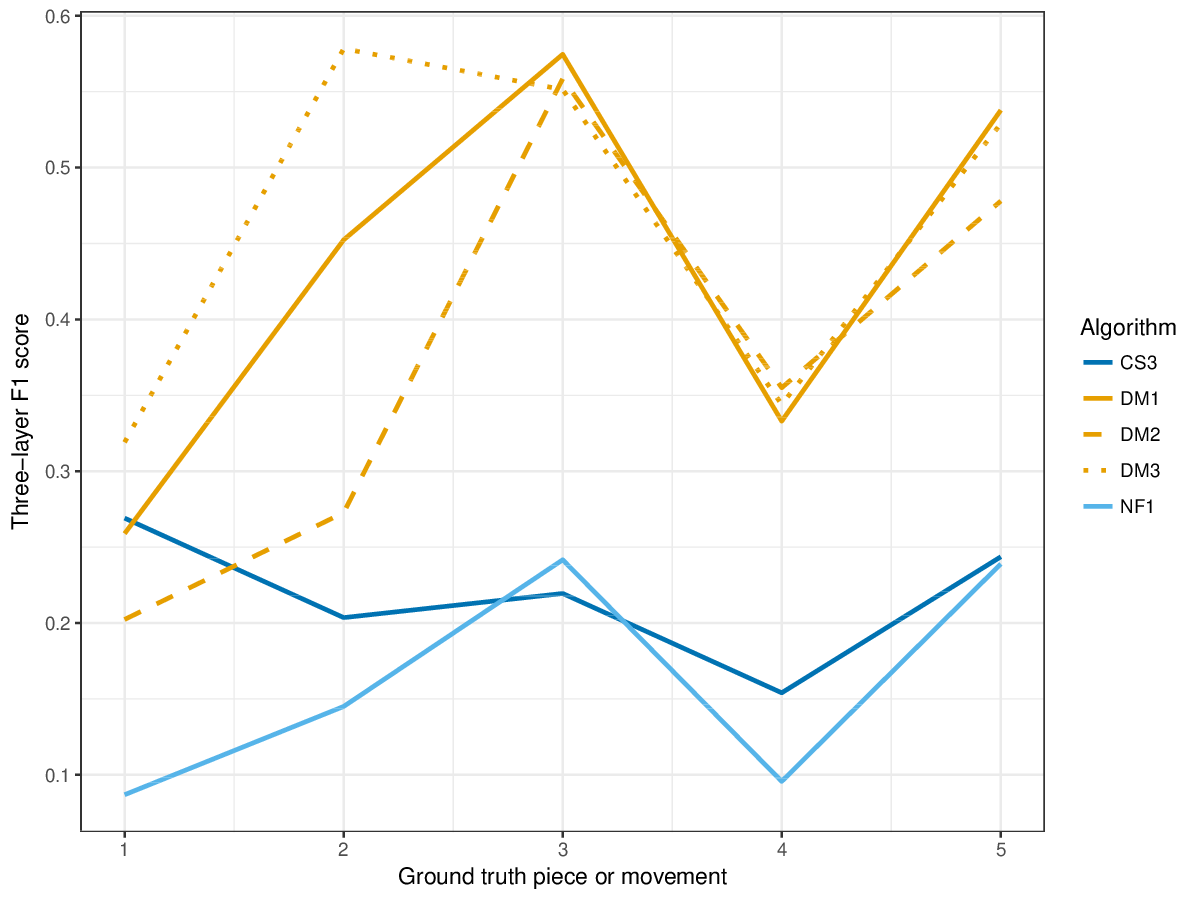

Figure 9. Three-layer F1 (TLF) averaged over each piece/movement. TLF is the harmonic mean of three-layer precision and three-layer recall.
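The captions fix only the bottom layer of the three-layer measures: the note-level similarity $2|P \cap Q|/(|P| + |Q|)$. The sketch below stacks that similarity into three layers: occurrence vs. occurrence, pattern vs. pattern using best-match precision and recall, and finally the set of output patterns vs. the set of ground-truth patterns. How the upper two layers are combined is our assumption for illustration, and all names are hypothetical. The `(onset, MIDI pitch)` note representation is also assumed.

```python
from typing import Callable, FrozenSet, List, Sequence, Tuple

Note = Tuple[float, int]
Occurrence = FrozenSet[Note]
Pattern = List[Occurrence]


def harmonic_mean(a: float, b: float) -> float:
    return 0.0 if a + b == 0 else 2 * a * b / (a + b)


def f1_notes(p: Occurrence, q: Occurrence) -> float:
    """Layer 1: 2|P ∩ Q| / (|P| + |Q|) between two occurrences."""
    total = len(p) + len(q)
    return 0.0 if total == 0 else 2 * len(p & q) / total


def best_match_pr(
    ground_truth: Sequence, output: Sequence, sim: Callable
) -> Tuple[float, float]:
    """Precision averages over `output`, recall over `ground_truth`; both must be non-empty."""
    precision = sum(max(sim(g, o) for g in ground_truth) for o in output) / len(output)
    recall = sum(max(sim(g, o) for o in output) for g in ground_truth) / len(ground_truth)
    return precision, recall


def f1_patterns(gt: Pattern, out: Pattern) -> float:
    """Layer 2: F1 between two occurrence lists, with layer-1 F1 as the similarity."""
    return harmonic_mean(*best_match_pr(gt, out, f1_notes))


def three_layer(
    ground_truth: List[Pattern], output: List[Pattern]
) -> Tuple[float, float, float]:
    """Layer 3: (three-layer precision, three-layer recall, TLF) for one piece."""
    if not ground_truth or not output:
        return 0.0, 0.0, 0.0
    precision, recall = best_match_pr(ground_truth, output, f1_patterns)
    return precision, recall, harmonic_mean(precision, recall)


def shift(occ: Occurrence, beats: float) -> Occurrence:
    return frozenset((t + beats, p) for t, p in occ)


theme = frozenset({(0, 60), (1, 62), (2, 64), (3, 65)})
head = frozenset({(0, 60), (1, 62)})  # first two notes of the theme
ground_truth = [[theme, shift(theme, 4)]]
output = [[head, shift(head, 4)], [frozenset({(30, 50)})]]
print(three_layer(ground_truth, output))
```

For the theme and its two-note head, the layer-1 F1 similarity is 2·2/(4+2) ≈ 0.67, whereas $|P \cap Q|/\max\{|P|, |Q|\}$ would give 0.5. This is the sense in which the three-layer measures are more lenient towards partial matches. With one spurious output pattern added, three-layer precision falls to about 0.33 while recall stays at about 0.67.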

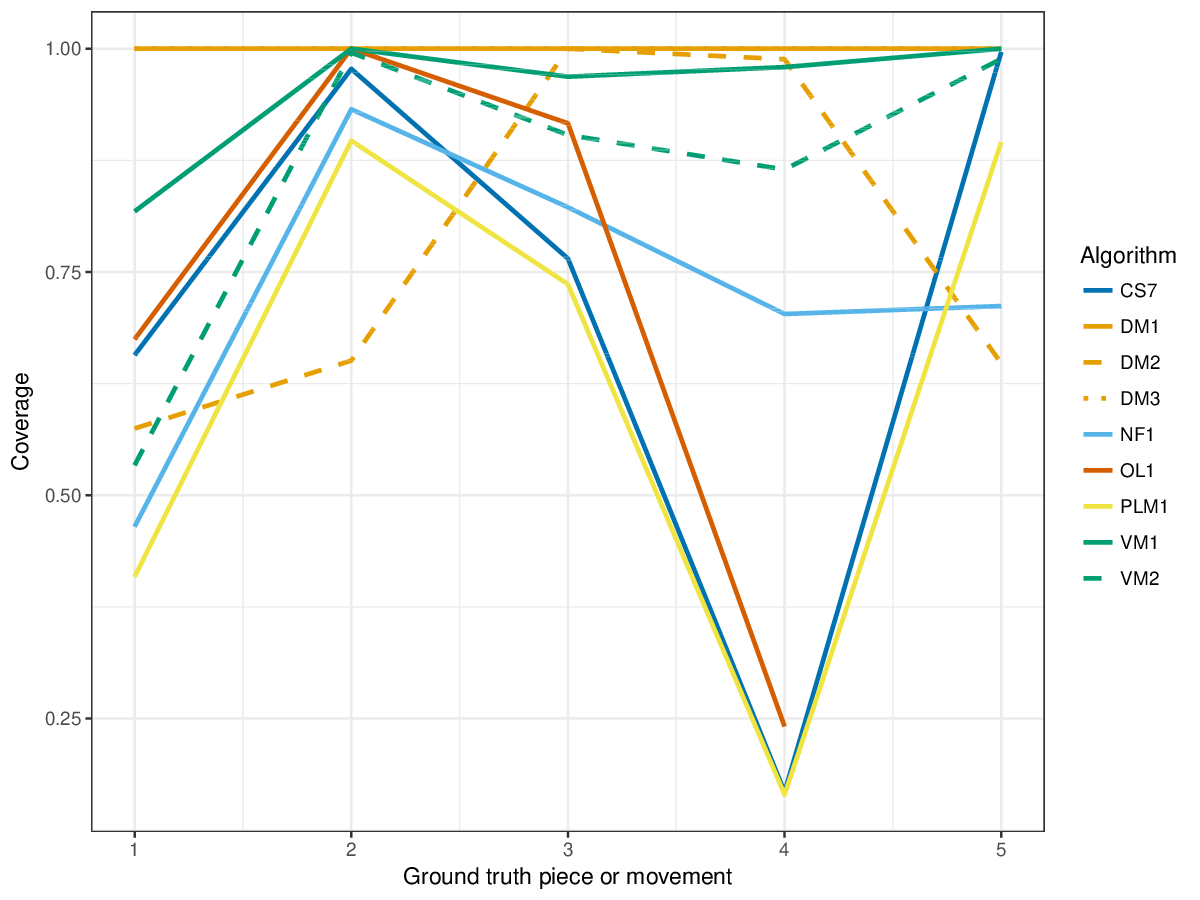

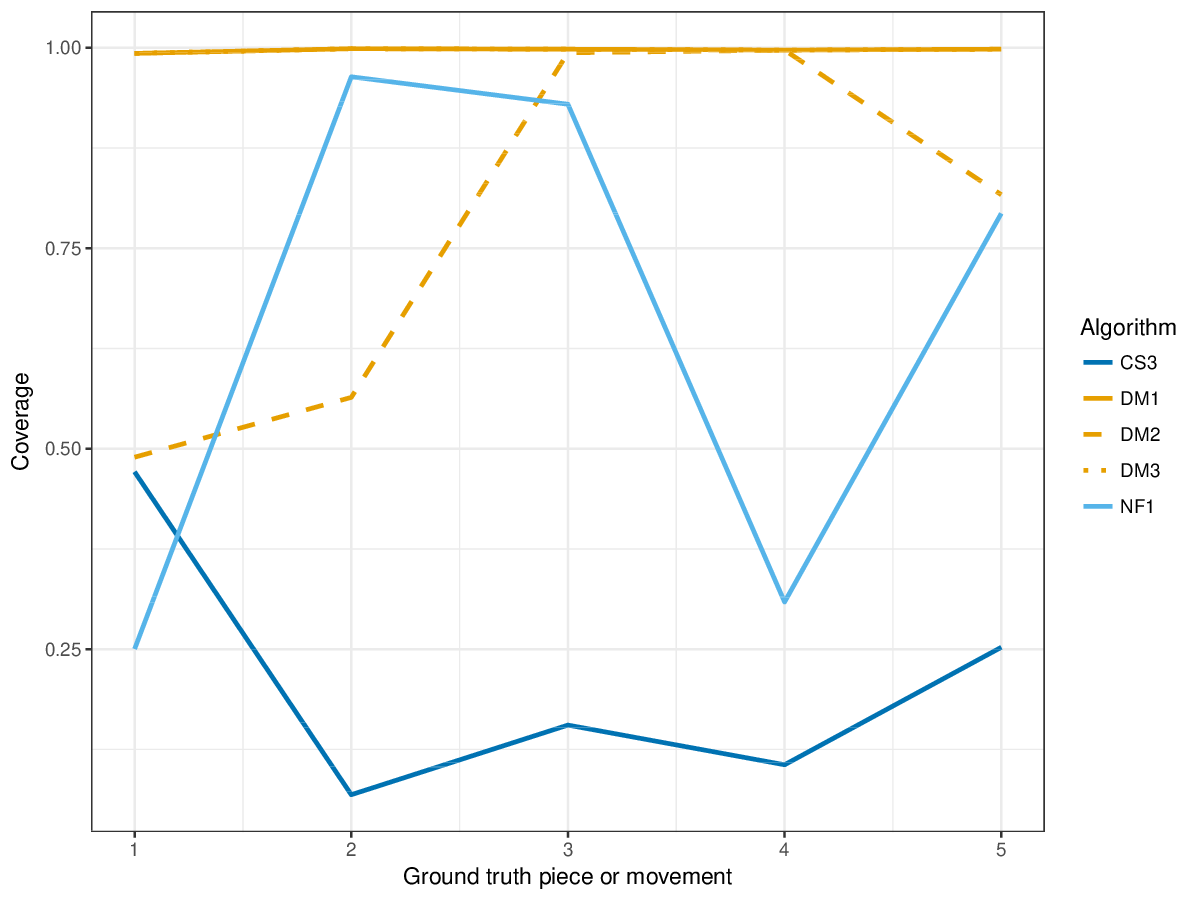

Figure 10. Coverage of the discovered patterns of each piece/movement. Coverage measures the fraction of notes of a piece covered by discovered patterns.
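Coverage as described in the caption can be sketched as a set union over every occurrence of every discovered pattern, divided by the number of notes in the piece. This assumes the `(onset, MIDI pitch)` note representation used in the sketches above; the function name is hypothetical. Because the union is a set, notes covered by several overlapping patterns count only once.

```python
from typing import FrozenSet, Iterable, List, Tuple

Note = Tuple[float, int]  # assumed: (onset in crotchet beats, MIDI note number)


def coverage(piece: Iterable[Note], patterns: List[List[FrozenSet[Note]]]) -> float:
    """Fraction of the piece's notes that lie in at least one occurrence of any discovered pattern."""
    notes = set(piece)
    if not notes:
        return 0.0
    covered = set()
    for occurrences in patterns:
        for occ in occurrences:
            covered |= occ  # set union: a note covered twice still counts once
    return len(covered & notes) / len(notes)


theme = frozenset({(0, 60), (1, 62), (2, 64), (3, 65)})
head = frozenset({(0, 60), (1, 62)})
piece = theme | frozenset((t + 4, p) for t, p in theme)
print(coverage(piece, [[theme], [head]]))
```

The 8-note piece is half covered (0.5): the nested `head` pattern adds no coverage, because its notes already lie inside `theme`.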

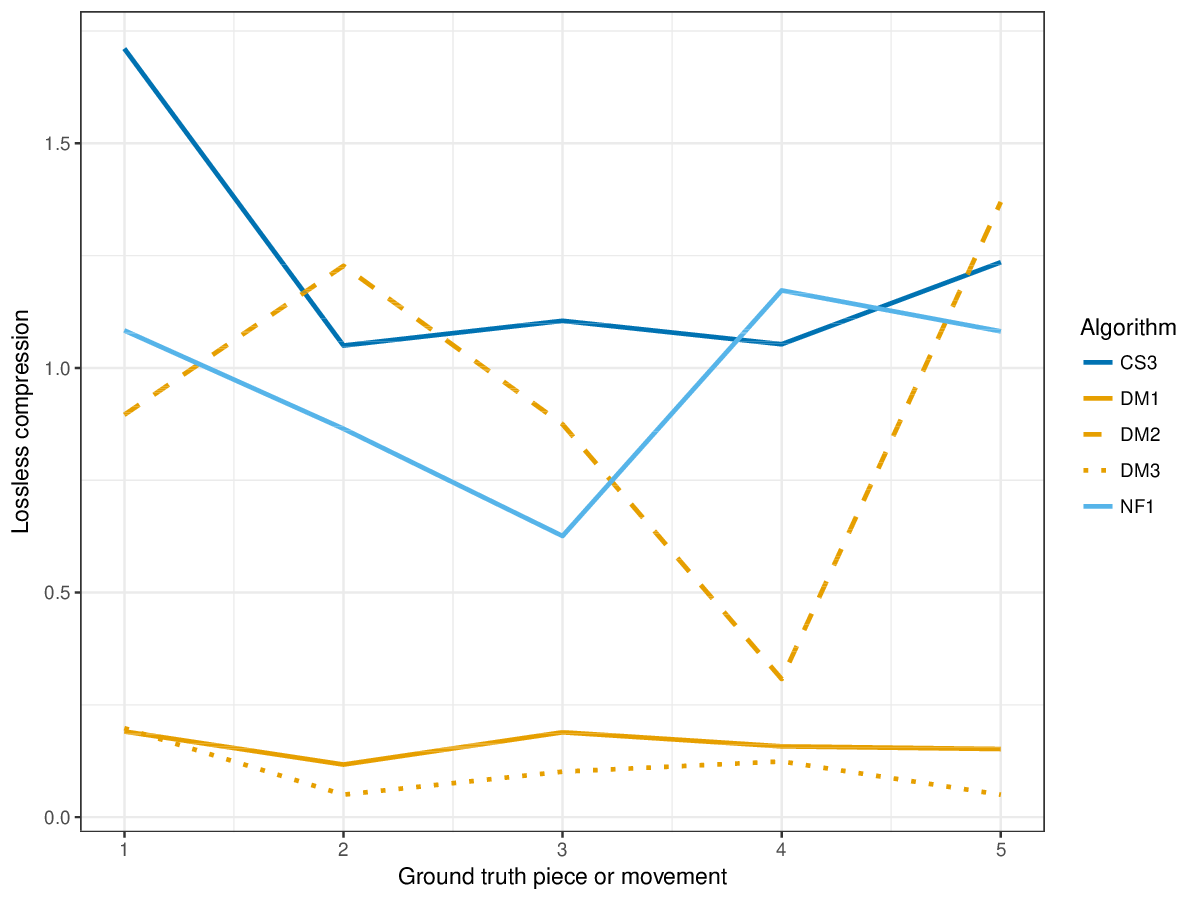

Figure 11. Lossless compression achieved by representing each piece/movement in terms of the patterns discovered by a given algorithm. In addition to the patterns and their repetitions, the uncovered notes are also represented, so that the complete piece can be reconstructed from the compressed representation.
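A compression ratio of this kind can be sketched as the number of notes in the piece divided by the length of a lossless encoding. The cost model here is an assumption for illustration and may differ from the one used in the evaluation. Each discovered pattern is stored once as a prototype plus one translation vector `(onset shift, pitch shift)` per further occurrence. Each stored note or vector costs one unit, and notes not covered by any occurrence are stored verbatim. The function names are hypothetical. The example also illustrates the point made in the Discussion that overlapping, hierarchically layered patterns lower compression.

```python
from typing import FrozenSet, Iterable, List, Tuple

Note = Tuple[float, int]    # assumed: (onset, MIDI pitch)
Vector = Tuple[float, int]  # assumed: (onset shift, pitch shift)


def translate(pattern: FrozenSet[Note], vector: Vector) -> FrozenSet[Note]:
    dt, dp = vector
    return frozenset((t + dt, p + dp) for t, p in pattern)


def compression_ratio(
    piece: Iterable[Note], encoding: List[Tuple[FrozenSet[Note], List[Vector]]]
) -> float:
    """Notes in the piece divided by the length of a lossless pattern-based encoding.

    Assumed cost model: each stored prototype note and each translation vector
    costs 1 unit, and every note not covered by any occurrence is stored verbatim.
    """
    notes = set(piece)
    covered = set()
    length = 0
    for prototype, vectors in encoding:
        length += len(prototype) + len(vectors)
        covered |= prototype
        for vector in vectors:
            covered |= translate(prototype, vector)
    length += len(notes - covered)  # residual notes keep the encoding lossless
    return len(notes) / length if length else 0.0


theme = frozenset({(0, 60), (1, 62), (2, 64), (3, 65)})
piece = theme | translate(theme, (4, 0)) | translate(theme, (8, 0))
repeats = [(4, 0), (8, 0)]
motif = frozenset({(0, 60), (1, 62)})  # the theme's first two notes
print(compression_ratio(piece, [(theme, repeats)]))
print(compression_ratio(piece, [(theme, repeats), (motif, repeats)]))
```

Encoding the 12-note piece with the theme alone costs 4 + 2 = 6 units (ratio 2.0). Adding the motif nested inside the theme is a valid, hierarchically layered pattern. It costs 4 more units but covers no new notes, so the ratio drops to 1.2.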

SymPoly

Figure 12. Establishment recall averaged over each piece/movement. Establishment recall answers the following question: on average, how similar is the most similar algorithm-output pattern to a ground-truth pattern prototype?

Figure 13. Establishment precision averaged over each piece/movement. Establishment precision answers the following question: on average, how similar is the most similar ground-truth pattern prototype to an algorithm-output pattern?

Figure 14. Establishment F1 averaged over each piece/movement. Establishment F1 is the harmonic mean of establishment precision and establishment recall.

Figure 15. Occurrence recall ($c = .75$) averaged over each piece/movement. Occurrence recall answers the following question: on average, how similar is the most similar set of algorithm-output pattern occurrences to a discovered ground-truth occurrence set?

Figure 16. Occurrence precision ($c = .75$) averaged over each piece/movement. Occurrence precision answers the following question: on average, how similar is the most similar discovered ground-truth occurrence set to a set of algorithm-output pattern occurrences?

Figure 17. Occurrence F1 ($c = .75$) averaged over each piece/movement. Occurrence F1 is the harmonic mean of occurrence precision and occurrence recall.

Figure 18. Three-layer recall averaged over each piece/movement. Rather than using $|P \cap Q|/\max\{|P|, |Q|\}$ as a similarity measure (the default for establishment recall), three-layer recall uses $2|P \cap Q|/(|P| + |Q|)$, which is a kind of F1 measure.

Figure 19. Three-layer precision averaged over each piece/movement. Rather than using $|P \cap Q|/\max\{|P|, |Q|\}$ as a similarity measure (the default for establishment precision), three-layer precision uses $2|P \cap Q|/(|P| + |Q|)$, which is a kind of F1 measure.

Figure 20. Three-layer F1 (TLF) averaged over each piece/movement. TLF is the harmonic mean of three-layer precision and three-layer recall.

Figure 21. Coverage of the discovered patterns of each piece/movement. Coverage measures the fraction of notes of a piece covered by discovered patterns.

Figure 22. Lossless compression achieved by representing each piece/movement in terms of the patterns discovered by a given algorithm. In addition to the patterns and their repetitions, the uncovered notes are also represented, so that the complete piece can be reconstructed from the compressed representation.

Tables

SymMono

Click to download SymMono pattern retrieval results table

Click to download SymMono compression results table